This topic describes how to use the open-source so-vits-svc library in Alibaba Cloud DSW to build an AI singer end to end.

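Background information

Advances in artificial intelligence are making virtual humans increasingly lifelike, and more and more of them are being built and deployed on the internet. Technology has given machines human traits, while people keep pushing further toward intelligent systems. Cloning a human voice with AI is no longer confined to the screen. Virtual humans combine technical innovation with artistic creation, and AI singers have become a key that connects the virtual world with the human one. This topic describes how to create an AI singer. The following samples demonstrate the result:

Target speaker's voice:

Original song:

After voice conversion: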

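Prepare the environment and resources

Create a workspace. For more information, see Create a workspace.

Create a DSW instance with the following key parameters. For more information, see Create and manage DSW instances.

Region and zone: We recommend that you use one of these four regions: China (Beijing), China (Shanghai), China (Hangzhou), or China (Shenzhen). Model data downloads in later steps are faster in these regions.

Instance type: ecs.gn6v-c8g1.2xlarge.

Image: Select the following official image: stable-diffusion-webui-env:pytorch1.13-gpu-py310-cu117-ubuntu22.04.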

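Step 1: Open the tutorial file in DSW

Go to the PAI-DSW development environment.

Log on to the PAI console.

In the left-side navigation pane, click Workspaces. On the Workspaces page, click the name of the workspace that you want to use.

In the upper-left corner of the page, select the region where you want to use the service.

In the left-side navigation pane, go to the Interactive Modeling (DSW) page.

Optional: On the Interactive Modeling (DSW) page, enter the instance name or a keyword in the search box to find the instance.

In the Actions column of the instance, click Open.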

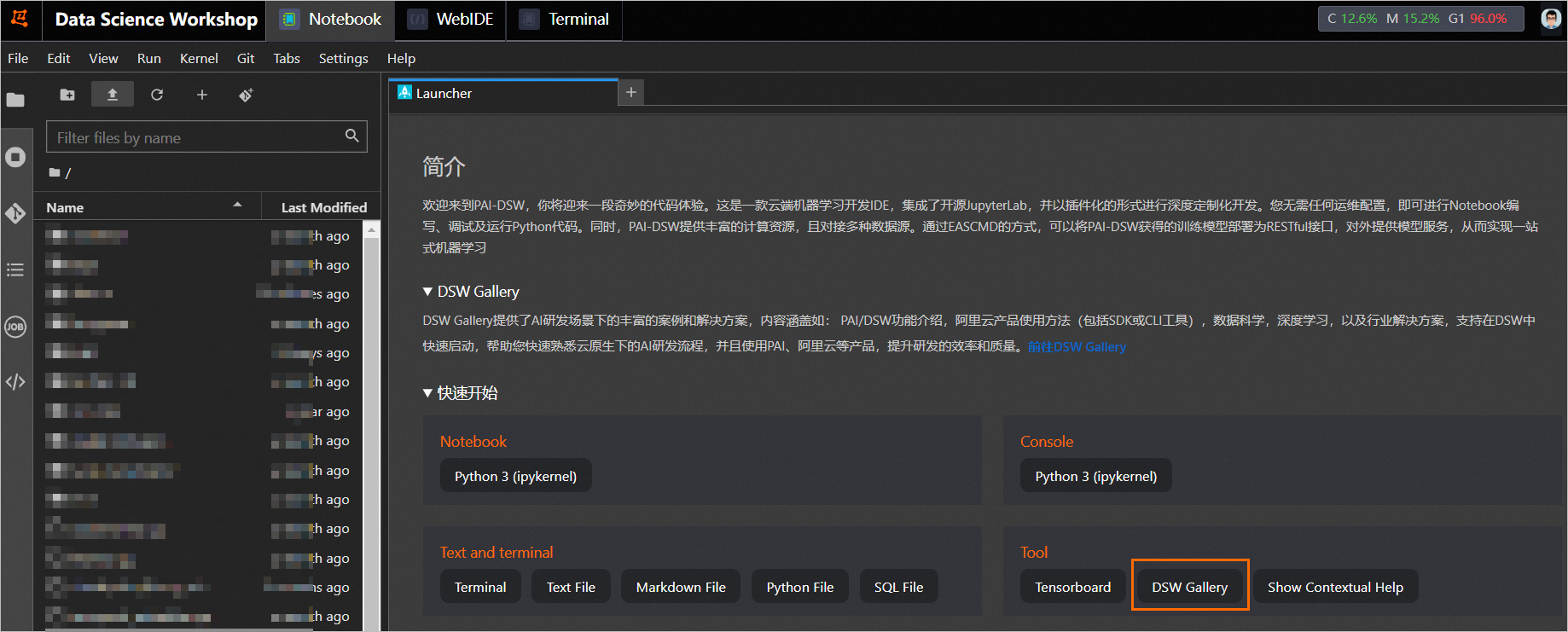

On the Launcher page of the Notebook tab, click DSW Gallery under Tool in the Quick Start section to open the DSW Gallery page.

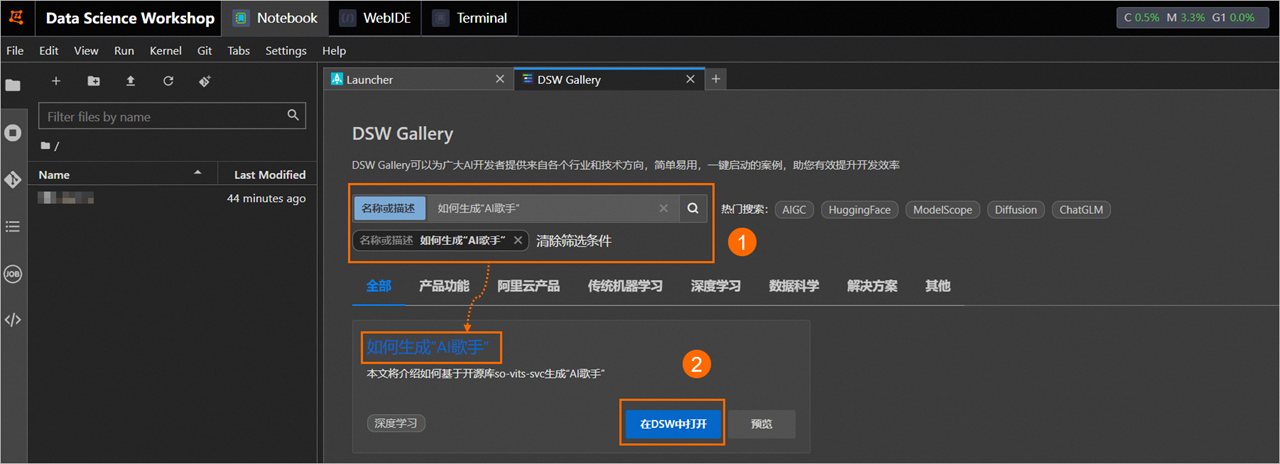

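On the DSW Gallery page, search for the tutorial on how to generate an "AI singer" and click Open in DSW on the tutorial card.

DSW then automatically downloads the resources and tutorial files that this tutorial requires to the DSW instance and opens the tutorial file when the download completes.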

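Step 2: Run the tutorial file

The opened tutorial file ai_singer.ipynb contains the tutorial text, and you can run the commands for each step directly in it. After the command for one step finishes successfully, run the command for the next step. The steps in this tutorial and the result of each step are as follows.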

Download the so-vits-svc source code and install the dependencies.

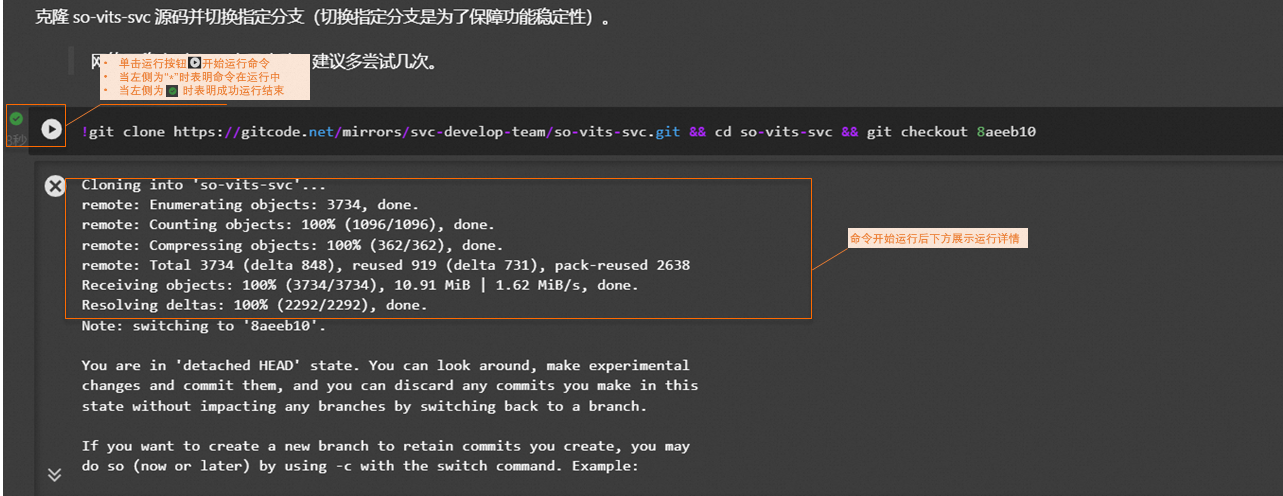

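Clone the open-source code.

Install the dependencies.

Note: You can ignore the ERROR and WARNING messages in the output.

Download the pretrained models.

Download the speech encoder model.

Download the pretrained model.

Download the training data.

You can download the training data prepared by PAI directly, or download your own data and clean it by following the appendix in the tutorial text.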

The downloaded sample data uses the following layout, which supports training on multiple speakers:

- dataset_raw
  - speaker1 (C12)
    - xxx1.wav
    - ...
    - xxxn.wav
  - speaker2 (optional)
    - xxx1.wav
    - ...
    - xxxn.wav
  - speakerN (optional)

Preprocess the training data.
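If you bring your own data, you may want to confirm before preprocessing that it follows the dataset_raw layout described above: one sub-directory per speaker, each holding .wav files. The following is a minimal sketch that uses only the Python standard library; `scan_dataset_raw` is a hypothetical helper, not part of so-vits-svc.

```python
from pathlib import Path
from typing import Dict, List


def scan_dataset_raw(root: str) -> Dict[str, List[str]]:
    """Map each speaker sub-directory of root to its sorted .wav file names.

    Raises ValueError if .wav files sit directly under root (outside any
    speaker folder) or if a speaker folder contains no .wav files.
    """
    root_path = Path(root)
    loose = sorted(p.name for p in root_path.glob("*.wav"))
    if loose:
        raise ValueError(f"WAV files must be inside a speaker folder: {loose}")
    speakers: Dict[str, List[str]] = {}
    for speaker_dir in sorted(p for p in root_path.iterdir() if p.is_dir()):
        wavs = sorted(p.name for p in speaker_dir.glob("*.wav"))
        if not wavs:
            raise ValueError(f"speaker folder has no .wav files: {speaker_dir.name}")
        speakers[speaker_dir.name] = wavs
    return speakers
```

For example, `scan_dataset_raw("./so-vits-svc/dataset_raw")` would return a dictionary such as `{"C12": [...]}`. The path is an assumption, so point it at wherever your dataset_raw folder actually lives.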

Resample the data.
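Resampling brings every clip to one common sample rate. The 44.1 kHz target below is an assumption based on the dataset/44k output directory name. The following sketch shows the idea with plain linear interpolation on a mono list of floats. It is not the method that so-vits-svc uses, and because it applies no anti-aliasing low-pass filter it is only an illustration, not a substitute for a real resampler when downsampling.

```python
import math
from typing import List, Sequence


def resample_linear(samples: Sequence[float], src_rate: int,
                    dst_rate: int = 44100) -> List[float]:
    """Resample a mono signal by linear interpolation (illustration only).

    No low-pass filter is applied, so downsampling can alias.
    """
    if src_rate <= 0 or dst_rate <= 0:
        raise ValueError("sample rates must be positive")
    n_in = len(samples)
    if n_in == 0:
        return []
    n_out = round(n_in * dst_rate / src_rate)
    step = src_rate / dst_rate  # input samples advanced per output sample
    out: List[float] = []
    for i in range(n_out):
        t = i * step                          # fractional position in the input
        i0 = min(int(math.floor(t)), n_in - 1)
        i1 = min(i0 + 1, n_in - 1)            # hold the last sample at the end
        frac = t - i0
        out.append(samples[i0] * (1.0 - frac) + samples[i1] * frac)
    return out
```

One second of 48 kHz audio therefore becomes 44,100 output samples. Each output sample is a weighted average of the two nearest input samples.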

Split the data into a training set and a validation set, and generate the configuration file.
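A split like this usually holds out a few clips per speaker for validation and keeps the rest for training. A multi-speaker configuration also needs a mapping from speaker names to integer IDs. The sketch below shows one way to do both. The function name, the hold-out count of two clips per speaker, and the fixed seed are illustrative assumptions, not necessarily what the tutorial's script does.

```python
import random
from typing import Dict, List, Tuple


def split_dataset(wavs_by_speaker: Dict[str, List[str]], n_val: int = 2,
                  seed: int = 1234) -> Tuple[List[str], List[str], Dict[str, int]]:
    """Hold out n_val clips per speaker; return (train, val, speaker_ids)."""
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train: List[str] = []
    val: List[str] = []
    speaker_ids: Dict[str, int] = {}
    for idx, speaker in enumerate(sorted(wavs_by_speaker)):
        speaker_ids[speaker] = idx  # integer ID per speaker, assigned in sorted order
        wavs = list(wavs_by_speaker[speaker])
        rng.shuffle(wavs)
        val.extend(wavs[:n_val])
        train.extend(wavs[n_val:])
    return train, val, speaker_ids
```

A speaker with `n_val` clips or fewer contributes nothing to training in this sketch, so each speaker needs well more than `n_val` clips.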

Generate the audio feature data and save it to the ./so-vits-svc/dataset/44k/C12 directory.
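If feature extraction is interrupted, some clips can end up without feature files. The sketch below reports, for each .wav in a directory such as ./so-vits-svc/dataset/44k/C12, which expected feature files are missing. The default suffixes (.soft.pt for content features, .f0.npy for pitch) and the convention that features sit next to the audio as `<name>.wav<suffix>` are assumptions about so-vits-svc 4.x naming and may differ in your version. Pass your own suffixes if they do.

```python
from pathlib import Path
from typing import Dict, List, Sequence

# Assumed feature-file suffixes (so-vits-svc 4.x naming); adjust for your version.
DEFAULT_SUFFIXES = (".soft.pt", ".f0.npy")


def missing_features(feature_dir: str,
                     suffixes: Sequence[str] = DEFAULT_SUFFIXES) -> Dict[str, List[str]]:
    """Map each .wav in feature_dir to the suffixes whose feature file is absent."""
    report: Dict[str, List[str]] = {}
    for wav in sorted(Path(feature_dir).glob("*.wav")):
        # Features are assumed to be stored as <name>.wav<suffix> beside the audio.
        missing = [s for s in suffixes if not Path(str(wav) + s).exists()]
        if missing:
            report[wav.name] = missing
    return report
```

An empty dictionary means every clip has all the expected feature files.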

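Training (optional)

Note: Model training takes a long time. You can skip this step and run inference directly with the model file prepared by PAI.

For better results, we recommend that you set the epochs parameter to 1000. Each epoch takes about 20 to 30 seconds, so the full run takes up to about 500 minutes.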
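The time estimate is the epoch count multiplied by the per-epoch time. At 20 to 30 seconds per epoch, 1000 epochs take roughly 333 to 500 minutes, so 500 minutes is the upper end. The following trivial helper lets you redo this arithmetic with an epoch time you have measured on your own instance. The function name is hypothetical.

```python
from typing import Tuple


def estimate_training_minutes(epochs: int, low_sec_per_epoch: float,
                              high_sec_per_epoch: float) -> Tuple[float, float]:
    """Return (low, high) wall-clock training estimates in minutes."""
    return epochs * low_sec_per_epoch / 60, epochs * high_sec_per_epoch / 60


low, high = estimate_training_minutes(1000, 20, 30)
print(f"about {low:.0f} to {high:.0f} minutes")  # about 333 to 500 minutes
```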

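Step 3: Run model inference

If you completed the optional training step, you now have a trained AI singer model. You can run offline inference with the model file that you trained or with the model file prepared by PAI. Inference results are saved to the ./results directory by default. Continue running the steps in the inference section of the tutorial file. The steps and the result of each step are as follows.

(Optional) Download the model file prepared by PAI and save it as ./so-vits-svc/logs/G_8800_8gpus.pth. Note: If you run offline inference with the model file that you trained in the preceding steps, skip this step.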
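The notebook cell performs this download for you. If you want to fetch the file yourself, for example to re-download it after an interruption, the following is a minimal sketch that uses only the Python standard library. The URL and the expected size (627897375 bytes) come from this step's sample output. The function names are hypothetical, and the size check assumes the file on OSS has not changed since that output was captured.

```python
import os
import urllib.request

# URL and size are taken from this step's sample output.
MODEL_URL = ("http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com"
             "/projects/so-vits-svc/models/C12/G_8800.pth")
MODEL_PATH = "./so-vits-svc/logs/G_8800_8gpus.pth"
EXPECTED_BYTES = 627897375


def file_is_complete(path: str, expected_bytes: int) -> bool:
    """True if path is a regular file of exactly expected_bytes."""
    return os.path.isfile(path) and os.path.getsize(path) == expected_bytes


def download_model(url: str = MODEL_URL, path: str = MODEL_PATH,
                   expected_bytes: int = EXPECTED_BYTES) -> str:
    """Download url to path unless a complete copy already exists."""
    if file_is_complete(path, expected_bytes):
        return path  # already present: skip the ~599 MB transfer
    os.makedirs(os.path.dirname(path) or ".", exist_ok=True)
    tmp_path = path + ".part"  # temp name so a partial file is never mistaken for the model
    urllib.request.urlretrieve(url, tmp_path)
    if not file_is_complete(tmp_path, expected_bytes):
        os.remove(tmp_path)
        raise IOError(f"incomplete download from {url}")
    os.replace(tmp_path, path)
    return path
```

Calling `download_model()` again after a successful run returns immediately, because the size check treats the existing file as complete.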

--2023-08-30 08:50:10-- http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/projects/so-vits-svc/models/C12/G_8800.pth Resolving pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com (pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com)... 39.98.1.111 Connecting to pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com (pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com)|39.98.1.111|:80... connected. HTTP request sent, awaiting response... 200 OK Length: 627897375 (599M) [application/octet-stream] Saving to: ‘logs/G_8800_8gpus.pth’ logs/G_8800_8gpus.p 100%[===================>] 598.81M 13.8MB/s in 45s 2023-08-30 08:50:55 (13.3 MB/s) - ‘logs/G_8800_8gpus.pth’ saved [627897375/627897375]

Download the test data and save it to the ./raw directory. This tutorial uses data that was already separated with UVR5 as the test data. Offline inference requires clean vocals, so if you want to prepare your own test data, clean it by following the appendix in the tutorial text. Inference data must be stored in the ./raw directory.

--2023-08-30 08:51:48-- http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/projects/so-vits-svc/data/one.tar.gz Resolving pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com (pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com)... 39.98.1.111 Connecting to pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com (pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com)|39.98.1.111|:80... connected. HTTP request sent, awaiting response... 200 OK Length: 15195943 (14M) [application/gzip] Saving to: ‘./raw/one.tar.gz’ one.tar.gz 100%[===================>] 14.49M 12.5MB/s in 1.2s 2023-08-30 08:51:50 (12.5 MB/s) - ‘./raw/one.tar.gz’ saved [15195943/15195943] one/ one/1_one_(Instrumental).wav one/1_one_(Vocals).wav one/one.mp3 one/1_1_one_(Vocals)_(Vocals).wav one/1_1_one_(Vocals)_(Instrumental).wav

Replace the vocals with the voice of C12.
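Before converting, you need to know which files in ./raw hold the separated vocals. The test-data archive in the sample output contains both vocal and instrumental stems. The following standard-library sketch extracts such an archive and lists the files whose names end in `_(Vocals).wav`. The function name and the filename pattern are assumptions based on the file list in the sample output, not part of the tutorial notebook.

```python
import os
import tarfile
from pathlib import Path
from typing import List


def extract_and_find_vocals(archive: str, dest: str = "./raw") -> List[str]:
    """Extract a .tar.gz into dest and return vocal stems relative to dest."""
    os.makedirs(dest, exist_ok=True)
    with tarfile.open(archive, "r:gz") as tar:
        if hasattr(tarfile, "data_filter"):
            # Newer Python releases support filters that reject unsafe members.
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)  # e.g. Python 3.10.6 in the image has no filters
    root = Path(dest)
    # In the sample output, separated vocals are named "..._(Vocals).wav".
    return sorted(p.relative_to(root).as_posix() for p in root.rglob("*_(Vocals).wav"))
```

For the archive in the sample output, this would list both `one/1_one_(Vocals).wav` and `one/1_1_one_(Vocals)_(Vocals).wav`.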

load WARNING:xformers:WARNING[XFORMERS]: xFormers can't load C++/CUDA extensions. xFormers was built for: PyTorch 1.13.1+cu117 with CUDA 1107 (you have 2.0.1+cu117) Python 3.10.9 (you have 3.10.6) Please reinstall xformers (see https://github.com/facebookresearch/xformers#installing-xformers) Memory-efficient attention, SwiGLU, sparse and more won't be available. Set XFORMERS_MORE_DETAILS=1 for more details load model(s) from pretrain/checkpoint_best_legacy_500.pt #=====segment start, 7.76s====== vits use time:0.8072702884674072 #=====segment start, 6.62s====== vits use time:0.11305761337280273 #=====segment start, 6.76s====== vits use time:0.11228108406066895 #=====segment start, 6.98s====== vits use time:0.11324000358581543 #=====segment start, 0.005s====== jump empty segment

Read the audio.
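To inspect the converted vocals, or any 16-bit PCM WAV file, the following sketch reads the file with Python's standard `wave` module and returns its basic parameters and its samples. It is a hypothetical helper, not the notebook's code. It assumes 16-bit PCM input. The exact output file name under ./results is not given here, so pass the path explicitly.

```python
import sys
import wave
from array import array
from typing import Dict, List, Tuple


def read_wav_16bit(path: str) -> Tuple[Dict[str, int], List[int]]:
    """Return basic WAV parameters and the interleaved 16-bit samples."""
    with wave.open(path, "rb") as wf:
        if wf.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM WAV files")
        info = {
            "channels": wf.getnchannels(),
            "sample_rate": wf.getframerate(),
            "frames": wf.getnframes(),
        }
        raw = wf.readframes(wf.getnframes())
    samples = array("h")
    samples.frombytes(raw)
    if sys.byteorder == "big":
        samples.byteswap()  # WAV sample data is little-endian
    return info, samples.tolist()
```

The duration in seconds is `info["frames"] / info["sample_rate"]`. For stereo files, the samples alternate between the left and right channels.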

Merge the vocals and the accompaniment.

Export successfully!
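The notebook performs the merge for you. To illustrate what mixing involves, the following sketch adds two 16-bit PCM WAV files together sample by sample. It pads the shorter file with silence and clips the result to the 16-bit range. It assumes that both inputs share the same channel count and sample rate. The function name and the gain parameters are hypothetical, and the notebook may use a different library for this step.

```python
import sys
import wave
from array import array
from typing import Tuple


def _read_pcm16(path: str) -> Tuple[Tuple[int, int], array]:
    """Read a 16-bit PCM WAV; return ((channels, sample_rate), samples)."""
    with wave.open(path, "rb") as wf:
        if wf.getsampwidth() != 2:
            raise ValueError(f"{path}: only 16-bit PCM is supported")
        fmt = (wf.getnchannels(), wf.getframerate())
        samples = array("h")
        samples.frombytes(wf.readframes(wf.getnframes()))
    if sys.byteorder == "big":
        samples.byteswap()  # WAV data is little-endian
    return fmt, samples


def mix_wavs(vocal_path: str, accomp_path: str, out_path: str,
             vocal_gain: float = 1.0, accomp_gain: float = 1.0) -> None:
    """Sum vocals and accompaniment sample by sample and write a new WAV."""
    vocal_fmt, vocals = _read_pcm16(vocal_path)
    accomp_fmt, accomp = _read_pcm16(accomp_path)
    if vocal_fmt != accomp_fmt:
        raise ValueError(f"format mismatch: {vocal_fmt} vs {accomp_fmt}")
    n = max(len(vocals), len(accomp))
    mixed = array("h", [0]) * n
    for i in range(n):
        # Pad the shorter track with silence (0).
        v = vocals[i] if i < len(vocals) else 0
        a = accomp[i] if i < len(accomp) else 0
        s = int(round(v * vocal_gain + a * accomp_gain))
        mixed[i] = max(-32768, min(32767, s))  # clip to the 16-bit range
    if sys.byteorder == "big":
        mixed.byteswap()
    channels, sample_rate = vocal_fmt
    with wave.open(out_path, "wb") as wf:
        wf.setnchannels(channels)
        wf.setsampwidth(2)
        wf.setframerate(sample_rate)
        wf.writeframes(mixed.tobytes())
```

If the mix clips audibly, lowering `accomp_gain` (for example to 0.8) leaves more headroom for the vocals.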
