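Prerequisites

This best practice requires the following software environment:

Application environment:

Container Service

Public cloud Cloud Enterprise Network (CEN)

Public cloud Auto Scaling (ESS)

Configuration requirements:

Use the Container Service of Apsara Stack, or in

Activate a dedicated line to connect the location of Container Service

Activate the public cloud Auto Scaling service (ESS).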

Background information

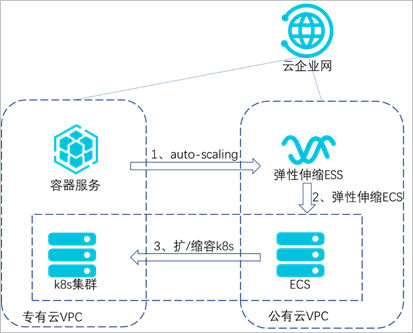

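In this practice, a K8s-based business cluster runs on Apsara Stack, and a stress test is run against a test service. The practice relies mainly on the following three products and capabilities:

Use an Alibaba Cloud CEN dedicated line to connect Apsara Stack and the public cloud, achieving on both clouds

Use

Use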

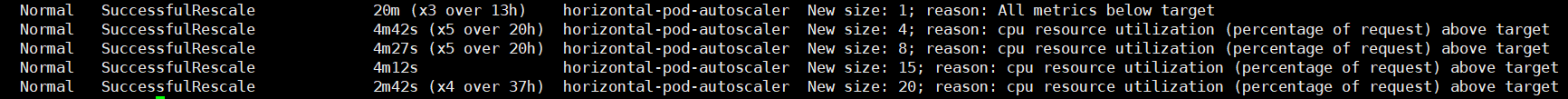

HPA (Horizontal Pod Autoscaler) is

When a metric of the service under test reaches its upper limit, it triggers

Figure 1. Architecture diagram
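The HPA decision that drives scale-out during the stress test can be sketched as follows. This is a simplified Python model of the Kubernetes HPA rule (desired = ceil(current replicas × current metric / target metric), left unchanged when the ratio is within the default 0.1 tolerance, then clamped to minReplicas/maxReplicas); the function name and the example numbers are hypothetical, and the real controller also applies stabilization windows and other checks.

```python
import math


def desired_replicas(current_replicas: int,
                     current_utilization: float,
                     target_utilization: float,
                     min_replicas: int,
                     max_replicas: int,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: scale replicas in proportion to metric / target."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Inside the tolerance band the replica count is left unchanged.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # The result is always bounded by minReplicas and maxReplicas.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical load: 2 pods at 180% of requested CPU against a 50% target.
    print(desired_replicas(2, 180, 50, min_replicas=1, max_replicas=10))
```

Under stress, the computed count keeps rising until maxReplicas caps it; pods that can no longer be scheduled are what the node autoscaler then reacts to.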

Configure

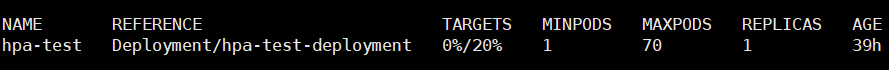

This example creates a … that supports

If you use a self-built

Create a Deployment:

```yaml
apiVersion: apps/v1beta2
kind: Deployment
spec:
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: hpa-test
    spec:
      dnsPolicy: ClusterFirst
      terminationGracePeriodSeconds: 30
      containers:
      - image: '192.168.**.***:5000/admin/hpa-example:v1'
        imagePullPolicy: IfNotPresent
        terminationMessagePolicy: File
        terminationMessagePath: /dev/termination-log
        name: hpa-test
        resources:
          requests:
            cpu:  # must be set: HPA computes CPU utilization relative to this request
```

Create an HPA:

```yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: hpa-test
  namespace: default
spec:
  maxReplicas:  # must be set: upper bound on the number of replicas
```
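The reason requests.cpu is mandatory: an autoscaling/v1 HPA expresses its CPU target as a percentage of the requested CPU. A minimal sketch, assuming the controller's approach of dividing total measured usage by total requests across the target's pods and rejecting pods that lack a CPU request; the function name and the dict keys usage_m / request_m (millicores) are hypothetical.

```python
from typing import Iterable, Mapping, Optional


def average_cpu_utilization(pods: Iterable[Mapping[str, Optional[int]]]) -> int:
    """Return CPU utilization as an integer percent of the requested CPU.

    Each pod is a mapping with 'usage_m' (measured millicores) and
    'request_m' (requests.cpu in millicores, or None when unset).
    """
    total_usage = 0
    total_request = 0
    for pod in pods:
        request = pod.get("request_m")
        if not request:
            # Without a (non-zero) requests.cpu there is no denominator,
            # so no utilization percentage can be computed.
            raise ValueError("missing requests.cpu on a pod")
        total_usage += pod["usage_m"]
        total_request += request
    # Integer percentage, truncated like the controller's int32 conversion.
    return total_usage * 100 // total_request


if __name__ == "__main__":
    # Two hypothetical pods, each requesting 100m CPU.
    print(average_cpu_utilization([
        {"usage_m": 150, "request_m": 100},
        {"usage_m": 50, "request_m": 100},
    ]))
```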

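If you use Alibaba Cloud Container Service, when deploying the application you need to select and configure

Configure

Setting resource requests (Request) correctly and reasonably is a prerequisite for auto scaling. The node autoscaling component is based on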

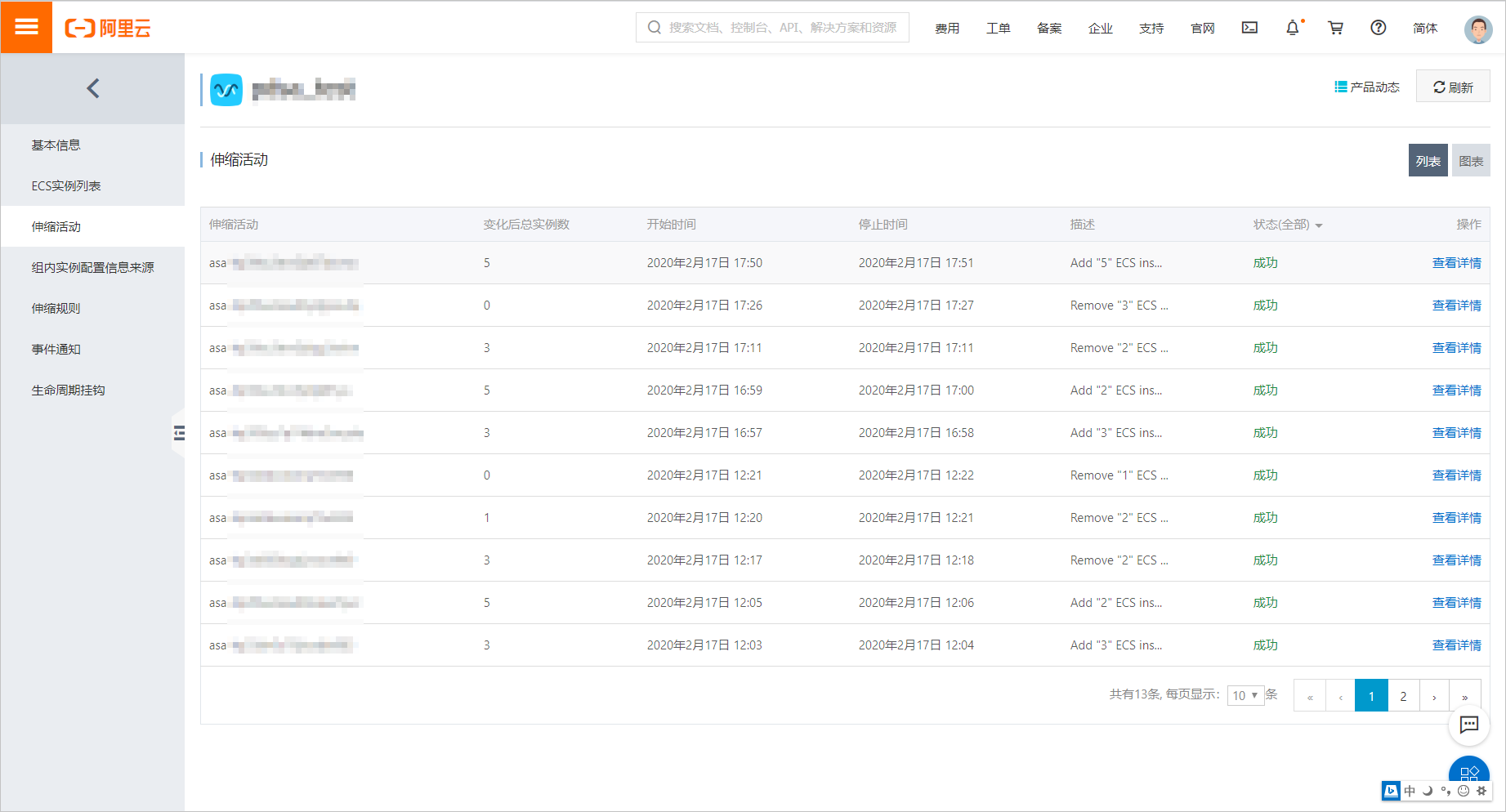

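When

If the scaling conditions can be met, nodes from the scaling group are added to the cluster. Conversely, when a node is in the Auto Scaling group and on that node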
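Because the node autoscaler reasons about resource requests rather than measured usage, its two decisions can be sketched as below. This is a simplified model that assumes only CPU and memory matter: it ignores taints, affinity, PodDisruptionBudgets, and timers such as --scale-down-unneeded-time. The class and function names are hypothetical; the default threshold of 0.2 mirrors --scale-down-utilization-threshold=0.2 in the cluster-autoscaler Deployment in ca.yml, and taking the larger of the CPU and memory ratios follows cluster-autoscaler's utilization calculation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Node:
    allocatable_cpu_m: int
    allocatable_mem_mi: int
    requested_cpu_m: int
    requested_mem_mi: int


def fits(node: Node, cpu_m: int, mem_mi: int) -> bool:
    """A pod fits if its requests fit in the node's still-unrequested capacity."""
    return (node.requested_cpu_m + cpu_m <= node.allocatable_cpu_m
            and node.requested_mem_mi + mem_mi <= node.allocatable_mem_mi)


def needs_scale_up(nodes: List[Node], template: Node, cpu_m: int, mem_mi: int) -> bool:
    """Add a node only if the pending pod fits nowhere now but fits on a new node."""
    return (not any(fits(n, cpu_m, mem_mi) for n in nodes)
            and fits(template, cpu_m, mem_mi))


def utilization(node: Node) -> float:
    """Requested / allocatable, taking the larger of the CPU and memory ratios."""
    return max(node.requested_cpu_m / node.allocatable_cpu_m,
               node.requested_mem_mi / node.allocatable_mem_mi)


def scale_down_candidates(nodes: List[Node], threshold: float = 0.2) -> List[int]:
    """Indexes of nodes whose request-based utilization is below the threshold."""
    return [i for i, n in enumerate(nodes) if utilization(n) < threshold]
```

A pod with no requests looks free to this logic, which is why the section insists on setting requests correctly before relying on node scaling.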

Configure the Auto Scaling group

Create

Create a scaling configuration and record the scaling configuration's …

```shell
#!/bin/sh
# Sync the clock, then run the hybrid-node attach script against the
# cluster's API server endpoint using the bootstrap token.
yum install -y ntpdate && ntpdate -u ntp1.aliyun.com && \
  curl http://example.com/public/hybrid/attach_local_node_aliyun.sh | bash -s -- \
    --docker-version 17.06.2-ce-3 \
    --token 9s92co.y2gkocbumal4fz1z \
    --endpoint 192.168.**.***:6443 \
    --cluster-dns 10.254.**.** \
    --region cn-huhehaote

# Use the regional VPC registry mirror and allow insecure access to the
# private image registry that hosts the application images.
cat > /etc/docker/daemon.json <<'EOF'
{
  "registry-mirrors": [
    "https://registry-vpc.cn-huhehaote.aliyuncs.com"
  ],
  "insecure-registries": ["https://192.168.**.***:5000"]
}
EOF

systemctl restart docker
```
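The script writes a small JSON document to /etc/docker/daemon.json, and a syntax error there would keep dockerd from starting after the restart. As a hedged sketch (the function name is hypothetical), the same content can be generated and validated with Python's json module; the two keys are exactly the ones the script writes.

```python
import json


def docker_daemon_config(mirror: str, insecure_registry: str) -> str:
    """Build daemon.json content equivalent to what the scaling-configuration script writes."""
    config = {
        # Pull images through the regional VPC registry mirror.
        "registry-mirrors": [mirror],
        # Registry Docker may reach without verified TLS.
        "insecure-registries": [insecure_registry],
    }
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    print(docker_daemon_config(
        "https://registry-vpc.cn-huhehaote.aliyuncs.com",
        "https://192.168.**.***:5000",  # masked address as given in the source
    ))
```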

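Deploy cluster-autoscaler in the K8s cluster with kubectl apply -f ca.yml. For reference, the base64-encoded values used in the cloud-config Secret:

```yaml
access-key-id: "TFRBSWlCSFJyeHd2QXZ6****"
access-key-secret: "bGIyQ3NuejFQOWM0WjFUNjR4WTVQZzVPRXND****"
region-id: "Y24taHVoZWhh****"
```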
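Values under a Secret's data field must be base64-encoded. For example, the visible prefix of region-id, Y24taHVoZWhh, decodes to cn-huheha, consistent with the cn-huhehaote region passed to the scaling-configuration script. A minimal sketch of encoding and decoding such values with the standard library; the helper names are hypothetical.

```python
import base64


def encode_secret_value(value: str) -> str:
    """Encode a plain-text value for the data section of a Kubernetes Secret."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded: str) -> str:
    """Recover the plain-text value from a Secret data field."""
    return base64.b64decode(encoded).decode("utf-8")


if __name__ == "__main__":
    print(encode_secret_value("cn-huhehaote"))  # prints Y24taHVoZWhhb3Rl
```

The AccessKey ID and AccessKey secret are encoded the same way before being placed in ca.yml.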

ca.yml:

```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
  name: cluster-autoscaler
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: cluster-autoscaler
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
rules:
- apiGroups: [""]
  resources: ["events","endpoints"]
  verbs: ["create", "patch"]
- apiGroups: [""]
  resources: ["pods/eviction"]
  verbs: ["create"]
- apiGroups: [""]
  resources: ["pods/status"]
  verbs: ["update"]
- apiGroups: [""]
  resources: ["endpoints"]
  resourceNames: ["cluster-autoscaler"]
  verbs: ["get","update"]
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["watch","list","get","update"]
- apiGroups: [""]
  resources: ["pods","services","replicationcontrollers","persistentvolumeclaims","persistentvolumes"]
  verbs: ["watch","list","get"]
- apiGroups: ["extensions"]
  resources: ["replicasets","daemonsets"]
  verbs: ["watch","list","get"]
- apiGroups: ["policy"]
  resources: ["poddisruptionbudgets"]
  verbs: ["watch","list"]
- apiGroups: ["apps"]
  resources: ["statefulsets"]
  verbs: ["watch","list","get"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["watch","list","get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: cluster-autoscaler
  namespace: kube-system
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
rules:
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create","list","watch"]
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["cluster-autoscaler-status", "cluster-autoscaler-priority-expander"]
  verbs: ["delete","get","update","watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: cluster-autoscaler
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-autoscaler
subjects:
- kind: ServiceAccount
  name: cluster-autoscaler
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: cluster-autoscaler
  namespace: kube-system
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: cluster-autoscaler
subjects:
- kind: ServiceAccount
  name: cluster-autoscaler
  namespace: kube-system
---
apiVersion: v1
kind: Secret
metadata:
  name: cloud-config
  namespace: kube-system
type: Opaque
data:
  # Values under data must be base64-encoded.
  access-key-id: "TFRBSWlCSFJyeHd2********"
  access-key-secret: "bGIyQ3NuejFQOWM0WjFUNjR4WTVQZzVP*********"
  region-id: "Y24taHVoZW********"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cluster-autoscaler
  namespace: kube-system
  labels:
    app: cluster-autoscaler
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cluster-autoscaler
  template:
    metadata:
      labels:
        app: cluster-autoscaler
    spec:
      dnsConfig:
        nameservers:
        - 100.XXX.XXX.XXX
        - 100.XXX.XXX.XXX
      nodeSelector:
        ca-key: ca-value
      priorityClassName: system-cluster-critical
      # Run as the ServiceAccount that the RBAC bindings above grant permissions to.
      serviceAccountName: cluster-autoscaler
      containers:
      - image: 192.XXX.XXX.XXX:XX/admin/autoscaler:v1.3.1-7369cf1
        name: cluster-autoscaler
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 100m
            memory: 300Mi
        command:
        - ./cluster-autoscaler
        - '--v=5'
        - '--stderrthreshold=info'
        - '--cloud-provider=alicloud'
        - '--scan-interval=30s'
        - '--scale-down-delay-after-add=8m'
        - '--scale-down-delay-after-failure=1m'
        - '--scale-down-unready-time=1m'
        - '--ok-total-unready-count=1000'
        - '--max-empty-bulk-delete=50'
        - '--expander=least-waste'
        - '--leader-elect=false'
        - '--scale-down-unneeded-time=8m'
        # Nodes whose requested resources fall below 20% become scale-down candidates.
        - '--scale-down-utilization-threshold=0.2'
        - '--scale-down-gpu-utilization-threshold=0.3'
        - '--skip-nodes-with-local-storage=false'
        # <min>:<max>:<scaling group ID> of the ESS scaling group.
        - '--nodes=0:5:asg-hp3fbu2zeu9bg3clraqj'
        imagePullPolicy: "Always"
        env:
        - name: ACCESS_KEY_ID
          valueFrom:
            secretKeyRef:
              name: cloud-config
              key: access-key-id
        - name: ACCESS_KEY_SECRET
          valueFrom:
            secretKeyRef:
              name: cloud-config
              key: access-key-secret
        - name: REGION_ID
          valueFrom:
            secretKeyRef:
              name: cloud-config
              key: region-id
```
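The --nodes flag ties cluster-autoscaler to the ESS scaling group. Per the cluster-autoscaler documentation its format is <min>:<max>:<node group ID>, so 0:5:asg-hp3fbu2zeu9bg3clraqj lets the group shrink to zero public-cloud nodes and grow to at most five. A hedged sketch of how such a value bounds scaling; the type and helper names are hypothetical.

```python
from typing import NamedTuple


class NodeGroupSpec(NamedTuple):
    min_size: int
    max_size: int
    group_id: str


def parse_nodes_flag(value: str) -> NodeGroupSpec:
    """Parse a --nodes value of the form <min>:<max>:<scaling group ID>."""
    min_s, max_s, group_id = value.split(":", 2)
    spec = NodeGroupSpec(int(min_s), int(max_s), group_id)
    if not 0 <= spec.min_size <= spec.max_size:
        raise ValueError(f"invalid node group bounds in {value!r}")
    return spec


def clamp_target_size(spec: NodeGroupSpec, wanted: int) -> int:
    """The group is never sized outside [min_size, max_size]."""
    return max(spec.min_size, min(spec.max_size, wanted))


if __name__ == "__main__":
    spec = parse_nodes_flag("0:5:asg-hp3fbu2zeu9bg3clraqj")
    # Even if the pending pods would need 8 nodes, the group stops at 5.
    print(spec, clamp_target_size(spec, 8))
```

Raising the second number is therefore the first thing to check when a stress test leaves pods pending even though the scaling group has capacity to spare.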