AIGC Stable Diffusion: Fine-Tuning a Text-to-Image LoRA Model for Virtual Try-On


Tutorial overview

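In this tutorial, you will learn how to fine-tune an AIGC Stable Diffusion model in Alibaba Cloud PAI Interactive Modeling (PAI-DSW) with the open-source Diffusers library, and how to launch a WebUI for model inference with the open-source Stable-Diffusion-WebUI library.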

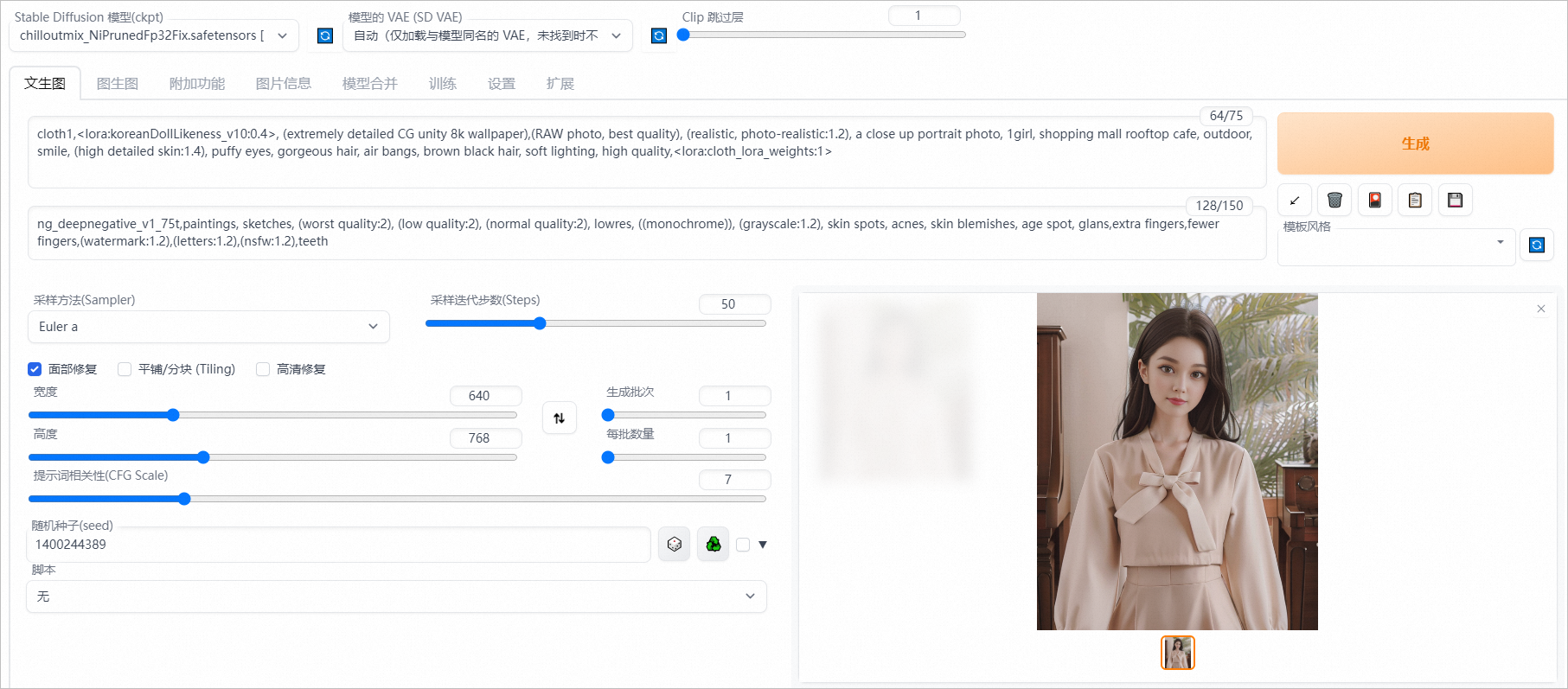

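AIGC (AI-generated content) refers to producing content automatically with artificial intelligence, and it is widely regarded as the next major industry trend after the internet era. Text-to-image generation is a popular cross-modal generation task whose goal is to generate an image that matches a given text description. The figure above shows text-to-image inference results in the WebUI.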

What you will learn

How to train models and run model inference in DSW.

How to launch the WebUI in DSW.

How to fine-tune an AIGC Stable Diffusion model with the open-source Diffusers library.

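| Item | Details |
| --- | --- |
| Difficulty | Medium |
| Time required | 50 minutes |
| Alibaba Cloud products used | Alibaba Cloud offers free PAI-DSW trial resources in the China (Beijing), China (Shanghai), China (Hangzhou), and China (Shenzhen) regions; apply for the trial in whichever region suits you. This tutorial uses China (Hangzhou) as an example. Important: The free PAI-DSW resource plan applies only to the PAI-DSW product used in this tutorial. If you claim the plan and then use PAI-DSW together with other PAI features (such as PAI-DLC or PAI-EAS), the PAI-DSW charges are offset by the plan, but charges for the other features are not and will be billed. |
| Cost | CNY 0 |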

Prepare the environment and resources (about 5 minutes)

Before you start, prepare the environment and resources as follows:


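Go to the Alibaba Cloud Free Trial page. Click Log on/Sign up in the upper-right corner and follow the prompts to log on (if you already have an Alibaba Cloud account), sign up (if you do not), or complete real-name verification (individual or enterprise, as required by the trial product).

After you log on, choose Artificial Intelligence and Machine Learning > Platform for AI under the product categories, and click Try Now on the PAI-DSW (Interactive Modeling) card.

Note: If you have already applied for a free PAI resource plan, the page shows that the trial has been claimed. Click the Already Claimed button to go directly to the PAI console.

In the PAI-DSW panel, select the service agreement checkbox and click Try Now to open the free activation page.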

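Important: The following situations may incur additional charges.

You use a billable resource type other than the free ones:

You applied for the free PAI-DSW resource plan, but the DSW instance you created uses a resource type that the Alibaba Cloud free trial does not provide. The resource types currently eligible for free use are ecs.gn6v-c8g1.2xlarge, ecs.g6.xlarge, and ecs.gn7i-c8g1.2xlarge.

The free resource plan you applied for does not match the product you use:

You created a DSW instance, but the free plan you applied for is for DLC or EAS. Charges for DSW cannot be offset by that plan and are billed on a pay-as-you-go basis.

You applied for the free DSW plan but use DLC or EAS. Charges for DLC and EAS cannot be offset by the DSW plan and are billed on a pay-as-you-go basis.

The free quota is used up or the trial period has expired:

After claiming the free plan, use it within the free quota and the trial period. If you keep using compute resources after the quota is exhausted or the trial ends, you are billed on a pay-as-you-go basis.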

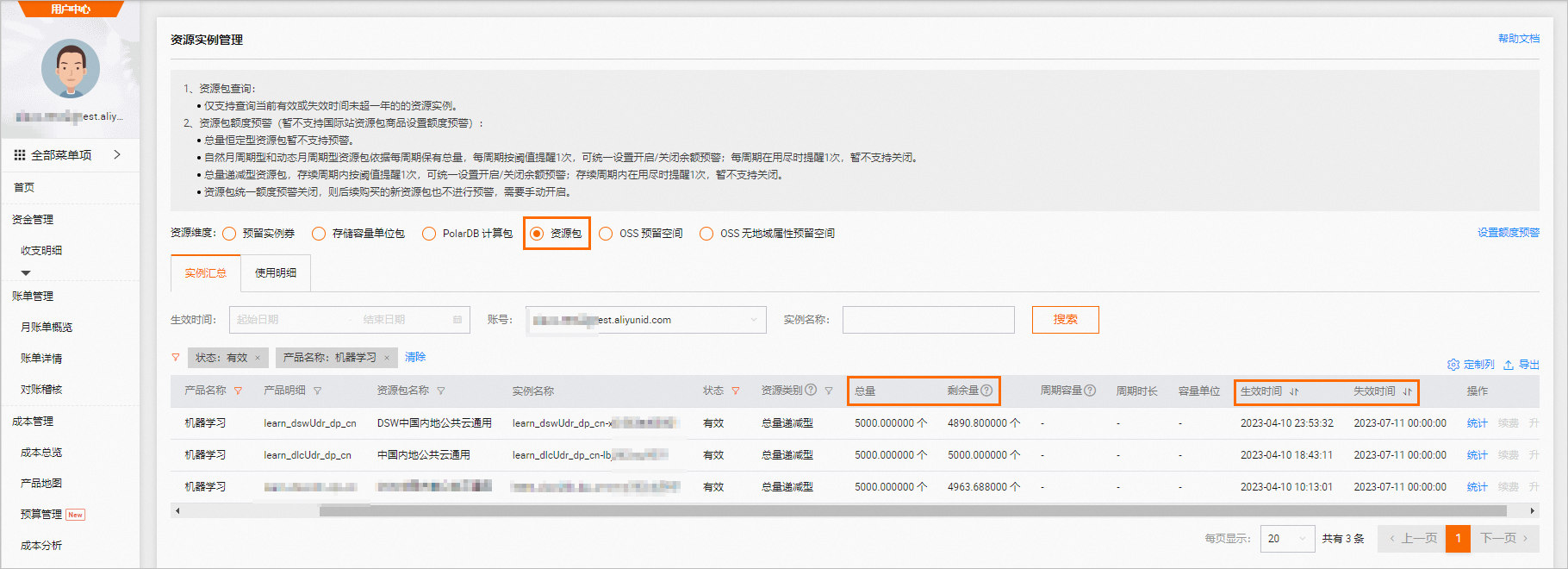

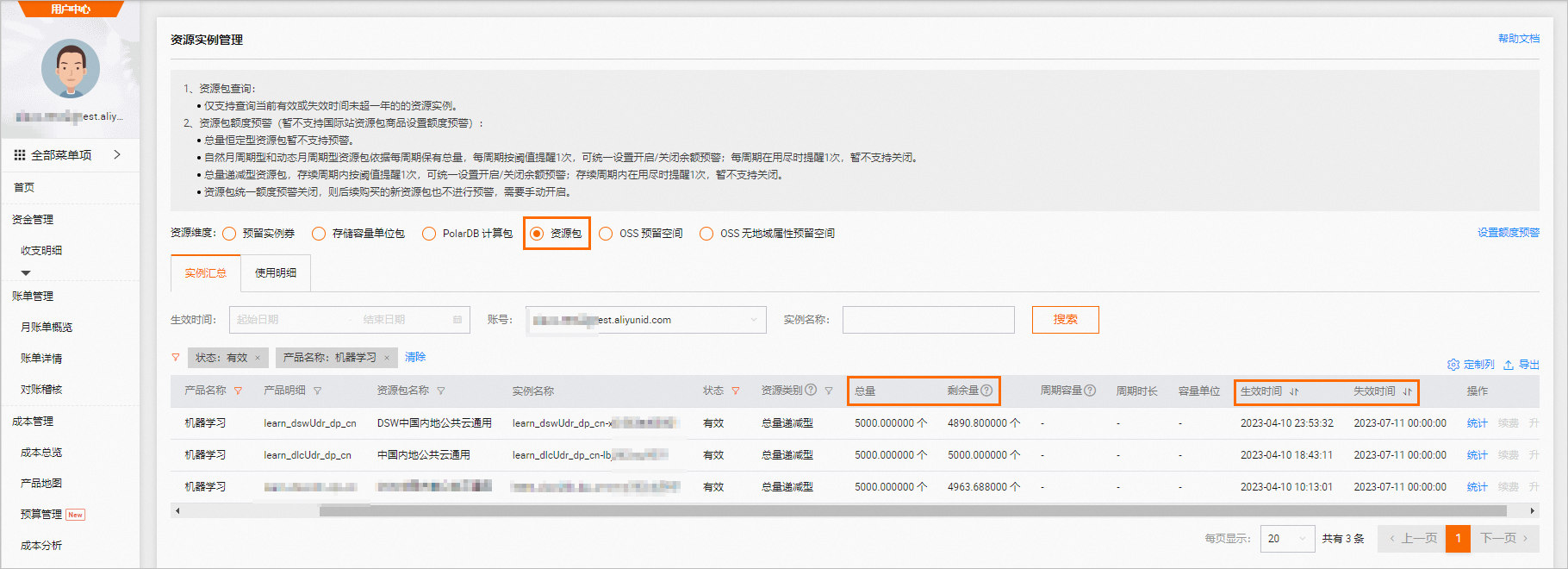

Go to the Resource Instance Management page to check your free quota usage and expiration time, as shown in the following figure.

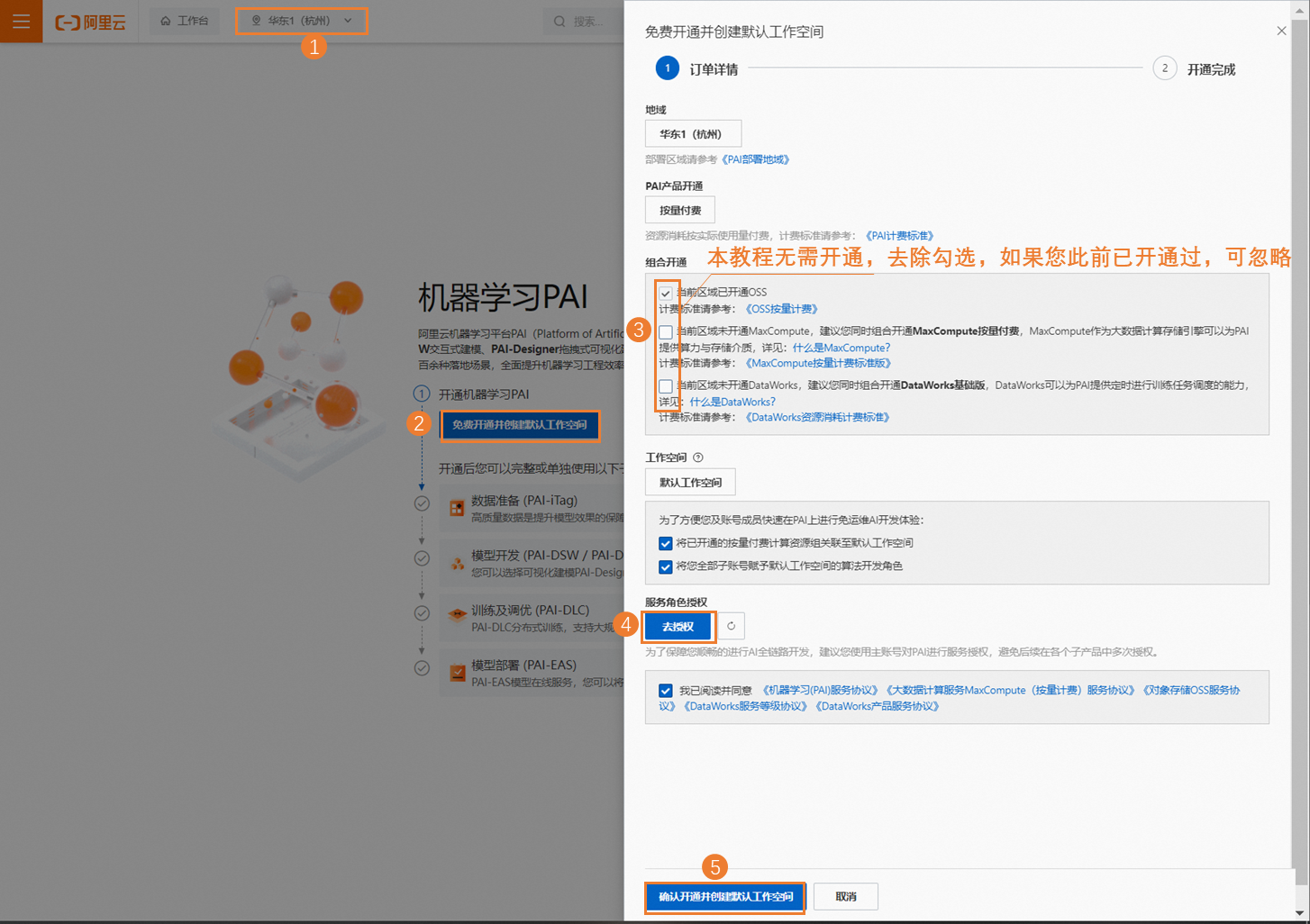

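Activate PAI and create a default workspace. The key parameters are listed below; for details, see Activate PAI and create a default workspace.

Region: this tutorial uses China (Hangzhou). You can also choose China (Beijing), China (Shanghai), or China (Shenzhen) as needed.

Click Activate for Free and Create Default Workspace, then configure the order details on the activation page that appears. Key points:

This tutorial does not need any other products, so clear the checkboxes of the other products in the bundled activation section.

In the service role authorization section, click Authorize and follow the prompts to grant the required permissions to PAI. Then return to the activation page, refresh it, and continue the activation.

After activation succeeds, click Go to PAI Console and create a DSW instance in the default workspace. The key parameters are listed below; keep the default values for all other parameters. For details, see Create a DSW instance.

Note: Creating a DSW instance takes some time, depending on the available resources; it usually takes about 15 minutes. If resources in your region are insufficient, apply for the trial and create the DSW instance in another region that supports the free trial.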

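| Parameter | Description |
| --- | --- |
| Region and zone | This tutorial uses China (Hangzhou). |
| Instance name | You can choose any instance name. This tutorial uses AIGC_test. |
| Resource quota | Select the public-resource (pay-as-you-go) GPU specification ecs.gn7i-c8g1.2xlarge. Note: The Alibaba Cloud free trial offers the following resource types: ecs.gn7i-c8g1.2xlarge (the only specification that can run this tutorial), ecs.g6.xlarge, and ecs.gn6v-c8g1.2xlarge. |
| Image | Select the official image stable-diffusion-webui-develop:1.0-pytorch2.0-gpu-py310-cu117-ubuntu22.04. |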

Open the tutorial file in DSW (about 5 minutes)

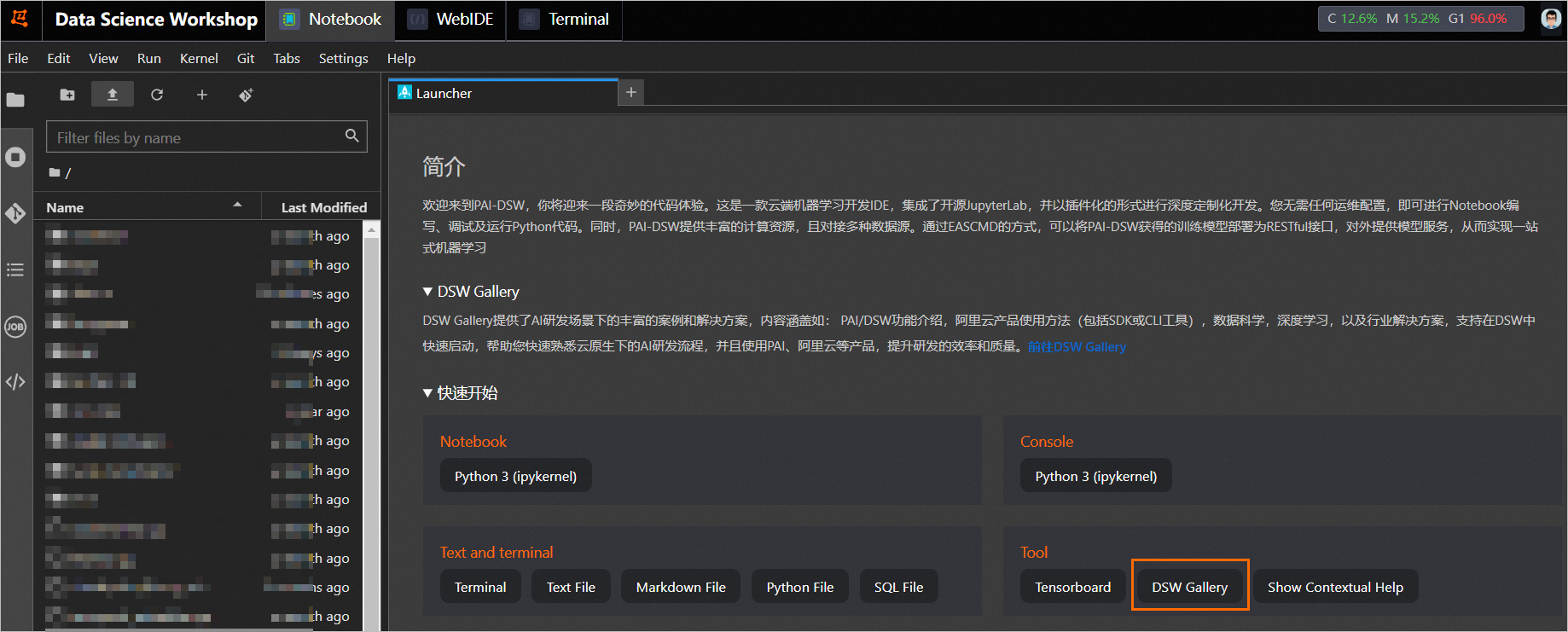

进入PAI-DSW开发环境。

登录PAI控制台。

在页面左上方,选择DSW实例所在的地域。

在左侧导航栏单击工作空间列表,在工作空间列表页面中单击默认工作空间名称,进入对应工作空间内。

在左侧导航栏,选择模型开发与训练>交互式建模(DSW)。

单击需要打开的实例操作列下的打开,进入PAI-DSW实例开发环境。

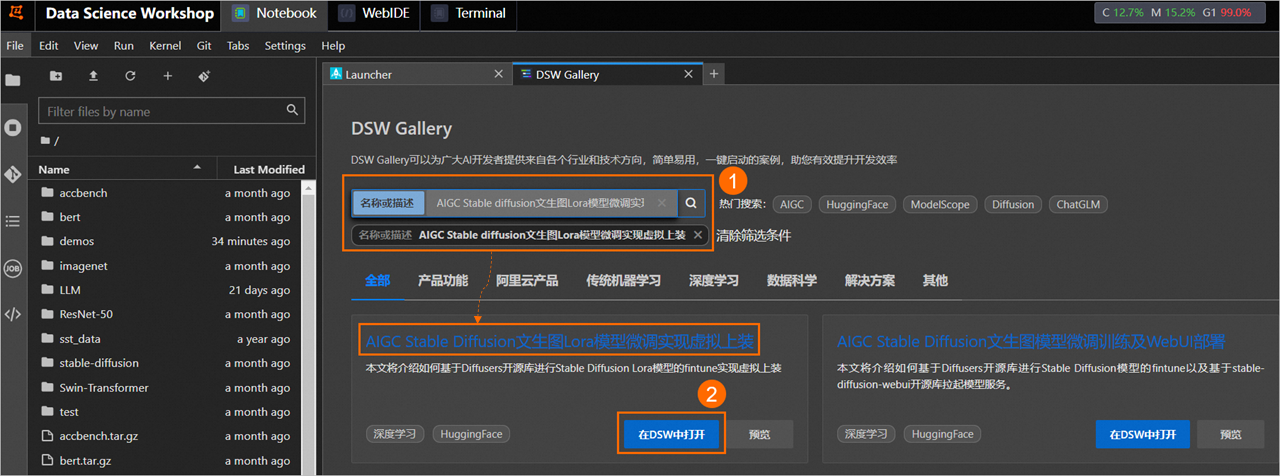

在Notebook页签的Launcher页面,单击快速开始区域Tool下的DSW Gallery,打开DSW Gallery页面。

在DSW Gallery页面中,搜索并找到AIGC Stable diffusion文生图Lora模型微调实现虚拟上装教程,单击教程卡片中的在DSW中打开。

单击后即会自动将本教程所需的资源和教程文件下载至DSW实例中,并在下载完成后自动打开教程文件。

Run the tutorial file (about 30 minutes)

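The tutorial text is shown directly in the opened tutorial file stable_diffusion_try_on.ipynb. Run the commands for each step in the notebook, and start the next step only after the current one has finished successfully.

The tutorial consists of the following steps; the output of each step is shown after it.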

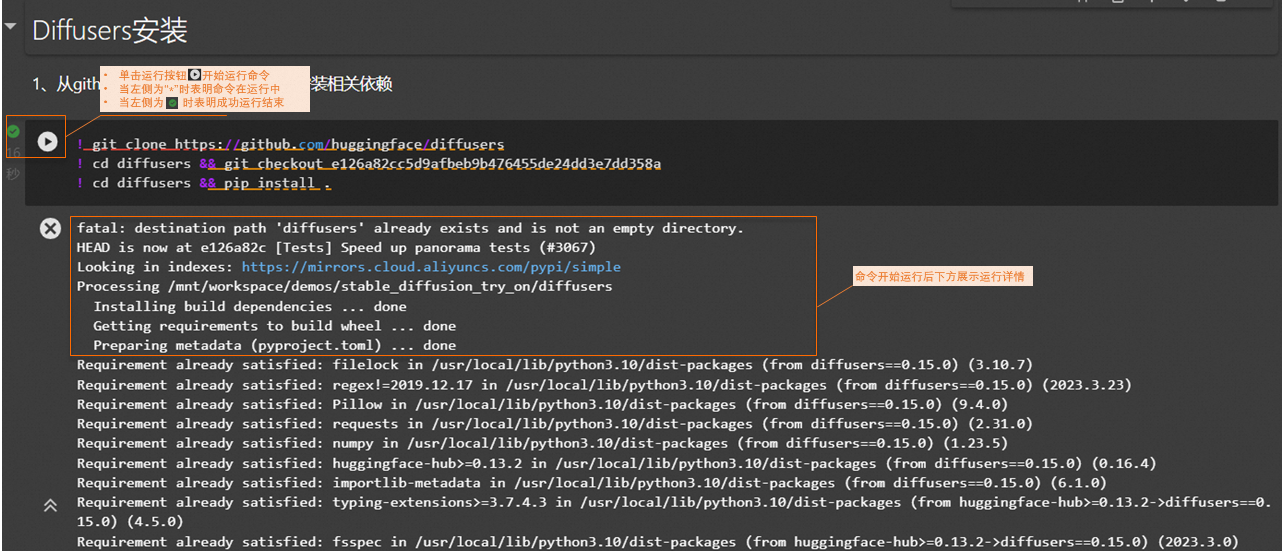

Install Diffusers.

Download the open-source Diffusers library from GitHub and install its dependencies.
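The notebook cell for this step is not reproduced in this text. As a rough sketch, assuming the upstream repository https://github.com/huggingface/diffusers and the commit that the output reports checking out, the step amounts to three commands: clone, check out the pinned commit, and install from the local checkout. The helper build_install_commands is hypothetical; it only builds the argument lists so you can inspect them before running them.

```python
import shlex
import sys
from typing import List

# Assumption: the upstream Diffusers repository. The commit hash is the one
# reported in the sample output ("Note: switching to 'e126a82c...'").
DIFFUSERS_REPO = "https://github.com/huggingface/diffusers.git"
PINNED_COMMIT = "e126a82cc5d9afbeb9b476455de24dd3e7dd358a"


def build_install_commands(repo_url: str, dest: str, commit: str) -> List[List[str]]:
    """Return argv lists for: clone, check out a fixed commit, pip-install from source."""
    return [
        ["git", "clone", repo_url, dest],
        ["git", "-C", dest, "checkout", commit],
        # dest should look like a path (e.g. "./diffusers") so pip installs the
        # local checkout instead of fetching "diffusers" from PyPI.
        [sys.executable, "-m", "pip", "install", dest],
    ]


if __name__ == "__main__":
    for argv in build_install_commands(DIFFUSERS_REPO, "./diffusers", PINNED_COMMIT):
        # Printed only; pass each argv to subprocess.run(argv, check=True) to execute it.
        print(shlex.join(argv))
```

Pinning a commit keeps the example training scripts consistent with the installed library; in the sample output this checkout installs as diffusers 0.15.0.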

Output:

Cloning into 'diffusers'... remote: Enumerating objects: 38370, done. remote: Counting objects: 100% (193/193), done. remote: Compressing objects: 100% (117/117), done. remote: Total 38370 (delta 98), reused 142 (delta 61), pack-reused 38177 Receiving objects: 100% (38370/38370), 23.99 MiB | 13.30 MiB/s, done. Resolving deltas: 100% (28387/28387), done. Note: switching to 'e126a82cc5d9afbeb9b476455de24dd3e7dd358a'. You are in 'detached HEAD' state. You can look around, make experimental changes and commit them, and you can discard any commits you make in this state without impacting any branches by switching back to a branch. If you want to create a new branch to retain commits you create, you may do so (now or later) by using -c with the switch command. Example: git switch -c <new-branch-name> Or undo this operation with: git switch - Turn off this advice by setting config variable advice.detachedHead to false HEAD is now at e126a82c [Tests] Speed up panorama tests (#3067) Looking in indexes: https://mirrors.cloud.aliyuncs.com/pypi/simple Processing /mnt/workspace/demos/stable_diffusion_try_on/diffusers Installing build dependencies ... done Getting requirements to build wheel ... done Preparing metadata (pyproject.toml) ... 
done Requirement already satisfied: huggingface-hub>=0.13.2 in /usr/local/lib/python3.10/dist-packages (from diffusers==0.15.0) (0.13.3) Requirement already satisfied: Pillow in /usr/local/lib/python3.10/dist-packages (from diffusers==0.15.0) (9.4.0) Requirement already satisfied: requests in /usr/local/lib/python3.10/dist-packages (from diffusers==0.15.0) (2.25.1) Requirement already satisfied: regex!=2019.12.17 in /usr/local/lib/python3.10/dist-packages (from diffusers==0.15.0) (2023.3.23) Requirement already satisfied: importlib-metadata in /usr/local/lib/python3.10/dist-packages (from diffusers==0.15.0) (6.1.0) Requirement already satisfied: filelock in /usr/local/lib/python3.10/dist-packages (from diffusers==0.15.0) (3.10.7) Requirement already satisfied: numpy in /usr/local/lib/python3.10/dist-packages (from diffusers==0.15.0) (1.23.3) Requirement already satisfied: tqdm>=4.42.1 in /usr/local/lib/python3.10/dist-packages (from huggingface-hub>=0.13.2->diffusers==0.15.0) (4.65.0) Requirement already satisfied: typing-extensions>=3.7.4.3 in /usr/local/lib/python3.10/dist-packages (from huggingface-hub>=0.13.2->diffusers==0.15.0) (4.5.0) Requirement already satisfied: packaging>=20.9 in /usr/local/lib/python3.10/dist-packages (from huggingface-hub>=0.13.2->diffusers==0.15.0) (23.0) Requirement already satisfied: pyyaml>=5.1 in /usr/local/lib/python3.10/dist-packages (from huggingface-hub>=0.13.2->diffusers==0.15.0) (6.0) Requirement already satisfied: zipp>=0.5 in /usr/local/lib/python3.10/dist-packages (from importlib-metadata->diffusers==0.15.0) (3.15.0) Requirement already satisfied: urllib3<1.27,>=1.21.1 in /usr/local/lib/python3.10/dist-packages (from requests->diffusers==0.15.0) (1.26.15) Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.10/dist-packages (from requests->diffusers==0.15.0) (2022.12.7) Requirement already satisfied: chardet<5,>=3.0.2 in /usr/local/lib/python3.10/dist-packages (from requests->diffusers==0.15.0) 
(4.0.0) Requirement already satisfied: idna<3,>=2.5 in /usr/local/lib/python3.10/dist-packages (from requests->diffusers==0.15.0) (2.10) Building wheels for collected packages: diffusers Building wheel for diffusers (pyproject.toml) ... done Created wheel for diffusers: filename=diffusers-0.15.0-py3-none-any.whl size=851791 sha256=e83e698cdae49d0717759d693ba75cfdb3cffe12a89340543462c255ba8ed2ce Stored in directory: /tmp/pip-ephem-wheel-cache-6gixjdiu/wheels/d3/1e/00/f8f22390fdf7d521da123c7cf7496d598216654481076ec878 Successfully built diffusers Installing collected packages: diffusers Attempting uninstall: diffusers Found existing installation: diffusers 0.15.0.dev0 Uninstalling diffusers-0.15.0.dev0: Successfully uninstalled diffusers-0.15.0.dev0 Successfully installed diffusers-0.15.0 WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv [notice] A new release of pip is available: 23.0.1 -> 23.2.1 [notice] To update, run: python3 -m pip install --upgrade pip

Verify that the installation succeeded.
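A lightweight way to confirm the installation, offered as an alternative rather than the notebook's own check, is to query the installed package metadata with Python's standard importlib.metadata module. The notebook's check prints only a TqdmWarning, which concerns Jupyter progress-bar widgets (ipywidgets) rather than Diffusers itself. The helper name installed_version is hypothetical.

```python
from importlib import metadata
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is not installed."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version("diffusers")
    if version is None:
        print("diffusers is not installed")
    else:
        # For the pinned commit, the install log above reports diffusers 0.15.0.
        print(f"diffusers {version} is installed")
```

This only reads package metadata; importing diffusers in a notebook cell additionally confirms that the library loads.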

Output:

/usr/local/lib/python3.10/dist-packages/tqdm/auto.py:21: TqdmWarning: IProgress not found. Please update jupyter and ipywidgets. See https://ipywidgets.readthedocs.io/en/stable/user_install.html from .autonotebook import tqdm as notebook_tqdm

Configure accelerate. You can run the command in the notebook to download a default configuration file. To customize the configuration instead, run the accelerate config command in the Terminal and choose the options that match your DSW instance.
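According to the output of this step, the default configuration is saved to /root/.cache/huggingface/accelerate/default_config.yaml. The sketch below computes where that file is expected so you can check that it exists before training. It assumes accelerate resolves its cache directory from HF_HOME, falling back to XDG_CACHE_HOME/huggingface and then ~/.cache/huggingface; this mirrors accelerate's behavior as we understand it and is not taken from the notebook. The function name is hypothetical.

```python
import os
from typing import Mapping, Optional


def accelerate_default_config_path(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the assumed location of accelerate's default_config.yaml."""
    env = os.environ if env is None else env
    # Assumed lookup order: HF_HOME, else $XDG_CACHE_HOME/huggingface, else ~/.cache/huggingface.
    cache_root = env.get("XDG_CACHE_HOME", os.path.join("~", ".cache"))
    hf_home = env.get("HF_HOME", os.path.join(cache_root, "huggingface"))
    return os.path.join(os.path.expanduser(hf_home), "accelerate", "default_config.yaml")


if __name__ == "__main__":
    path = accelerate_default_config_path()
    print(path, "exists" if os.path.isfile(path) else "is missing")
```

Running as root with neither variable set yields /root/.cache/huggingface/accelerate/default_config.yaml, the path shown in the download log.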

Output:

--2023-09-10 14:55:10-- http://pai-vision-data-sh.oss-cn-shanghai.aliyuncs.com/aigc-data/accelerate/default_config.yaml Resolving pai-vision-data-sh.oss-cn-shanghai.aliyuncs.com (pai-vision-data-sh.oss-cn-shanghai.aliyuncs.com)... 106.14.228.10 Connecting to pai-vision-data-sh.oss-cn-shanghai.aliyuncs.com (pai-vision-data-sh.oss-cn-shanghai.aliyuncs.com)|106.14.228.10|:80... connected. HTTP request sent, awaiting response... 200 OK Length: 306 [application/octet-stream] Saving to: ‘/root/.cache/huggingface/accelerate/default_config.yaml’ /root/.cache/huggin 100%[===================>] 306 --.-KB/s in 0s 2023-09-10 14:55:10 (62.9 MB/s) - ‘/root/.cache/huggingface/accelerate/default_config.yaml’ saved [306/306]

Install the libraries required by the text-to-image algorithm. You can ignore any WARNING messages in the output.
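According to the output of this step, requirements.txt specifies accelerate>=0.16.0 and transformers>=4.25.1 and leaves the other packages unpinned. The sketch below checks version strings against such minimums. It compares only the numeric release part (so 0.14.1+cu117 is treated as 0.14.1) and is not a full PEP 440 implementation; for real checks, packaging.version.Version is the safer choice. The function names are hypothetical.

```python
import re
from typing import Tuple


def release_tuple(version: str) -> Tuple[int, ...]:
    """Naively extract the numeric release part, e.g. '0.14.1+cu117' -> (0, 14, 1)."""
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def satisfies_minimum(installed: str, minimum: str) -> bool:
    """True if installed >= minimum, comparing release numbers only."""
    a, b = release_tuple(installed), release_tuple(minimum)
    width = max(len(a), len(b))
    # Pad with zeros so that "1.0" and "1.0.0" compare as equal.
    return a + (0,) * (width - len(a)) >= b + (0,) * (width - len(b))


# Minimums from requirements.txt and the installed versions, both as reported in the sample output.
MINIMUMS = {"accelerate": "0.16.0", "transformers": "4.25.1"}
SAMPLE_INSTALLED = {"accelerate": "0.18.0", "transformers": "4.25.1"}

if __name__ == "__main__":
    for name, minimum in MINIMUMS.items():
        ok = satisfies_minimum(SAMPLE_INSTALLED[name], minimum)
        print(f"{name}: {SAMPLE_INSTALLED[name]} >= {minimum}? {ok}")
```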

Output:

Looking in indexes: https://mirrors.cloud.aliyuncs.com/pypi/simple Requirement already satisfied: accelerate>=0.16.0 in /usr/local/lib/python3.10/dist-packages (from -r requirements.txt (line 1)) (0.18.0) Requirement already satisfied: torchvision in /usr/local/lib/python3.10/dist-packages (from -r requirements.txt (line 2)) (0.14.1+cu117) Requirement already satisfied: transformers>=4.25.1 in /usr/local/lib/python3.10/dist-packages (from -r requirements.txt (line 3)) (4.25.1) Requirement already satisfied: datasets in /usr/local/lib/python3.10/dist-packages (from -r requirements.txt (line 4)) (2.11.0) Requirement already satisfied: ftfy in /usr/local/lib/python3.10/dist-packages (from -r requirements.txt (line 5)) (6.1.1) Requirement already satisfied: tensorboard in /usr/local/lib/python3.10/dist-packages (from -r requirements.txt (line 6)) (2.12.1) Requirement already satisfied: Jinja2 in /usr/local/lib/python3.10/dist-packages (from -r requirements.txt (line 7)) (3.1.2) Requirement already satisfied: numpy>=1.17 in /usr/local/lib/python3.10/dist-packages (from accelerate>=0.16.0->-r requirements.txt (line 1)) (1.23.3) Requirement already satisfied: torch>=1.4.0 in /usr/local/lib/python3.10/dist-packages (from accelerate>=0.16.0->-r requirements.txt (line 1)) (1.13.1+cu117) Requirement already satisfied: packaging>=20.0 in /usr/local/lib/python3.10/dist-packages (from accelerate>=0.16.0->-r requirements.txt (line 1)) (23.0) Requirement already satisfied: psutil in /usr/local/lib/python3.10/dist-packages (from accelerate>=0.16.0->-r requirements.txt (line 1)) (5.9.4) Requirement already satisfied: pyyaml in /usr/local/lib/python3.10/dist-packages (from accelerate>=0.16.0->-r requirements.txt (line 1)) (6.0) Requirement already satisfied: typing-extensions in /usr/local/lib/python3.10/dist-packages (from torchvision->-r requirements.txt (line 2)) (4.5.0) Requirement already satisfied: pillow!=8.3.*,>=5.3.0 in /usr/local/lib/python3.10/dist-packages (from 
torchvision->-r requirements.txt (line 2)) (9.4.0) Requirement already satisfied: requests in /usr/local/lib/python3.10/dist-packages (from torchvision->-r requirements.txt (line 2)) (2.25.1) Requirement already satisfied: filelock in /usr/local/lib/python3.10/dist-packages (from transformers>=4.25.1->-r requirements.txt (line 3)) (3.10.7) Requirement already satisfied: huggingface-hub<1.0,>=0.10.0 in /usr/local/lib/python3.10/dist-packages (from transformers>=4.25.1->-r requirements.txt (line 3)) (0.13.3) Requirement already satisfied: tqdm>=4.27 in /usr/local/lib/python3.10/dist-packages (from transformers>=4.25.1->-r requirements.txt (line 3)) (4.65.0) Requirement already satisfied: tokenizers!=0.11.3,<0.14,>=0.11.1 in /usr/local/lib/python3.10/dist-packages (from transformers>=4.25.1->-r requirements.txt (line 3)) (0.12.1) Requirement already satisfied: regex!=2019.12.17 in /usr/local/lib/python3.10/dist-packages (from transformers>=4.25.1->-r requirements.txt (line 3)) (2023.3.23) Requirement already satisfied: pandas in /usr/local/lib/python3.10/dist-packages (from datasets->-r requirements.txt (line 4)) (1.5.3) Requirement already satisfied: fsspec[http]>=2021.11.1 in /usr/local/lib/python3.10/dist-packages (from datasets->-r requirements.txt (line 4)) (2023.3.0) Requirement already satisfied: dill<0.3.7,>=0.3.0 in /usr/local/lib/python3.10/dist-packages (from datasets->-r requirements.txt (line 4)) (0.3.6) Requirement already satisfied: multiprocess in /usr/local/lib/python3.10/dist-packages (from datasets->-r requirements.txt (line 4)) (0.70.14) Requirement already satisfied: aiohttp in /usr/local/lib/python3.10/dist-packages (from datasets->-r requirements.txt (line 4)) (3.8.4) Requirement already satisfied: xxhash in /usr/local/lib/python3.10/dist-packages (from datasets->-r requirements.txt (line 4)) (3.2.0) Requirement already satisfied: pyarrow>=8.0.0 in /usr/local/lib/python3.10/dist-packages (from datasets->-r requirements.txt (line 4)) (11.0.0) 
Requirement already satisfied: responses<0.19 in /usr/local/lib/python3.10/dist-packages (from datasets->-r requirements.txt (line 4)) (0.18.0) Requirement already satisfied: wcwidth>=0.2.5 in /usr/local/lib/python3.10/dist-packages (from ftfy->-r requirements.txt (line 5)) (0.2.6) Requirement already satisfied: tensorboard-data-server<0.8.0,>=0.7.0 in /usr/local/lib/python3.10/dist-packages (from tensorboard->-r requirements.txt (line 6)) (0.7.0) Requirement already satisfied: google-auth-oauthlib<1.1,>=0.5 in /usr/local/lib/python3.10/dist-packages (from tensorboard->-r requirements.txt (line 6)) (1.0.0) Requirement already satisfied: protobuf>=3.19.6 in /usr/local/lib/python3.10/dist-packages (from tensorboard->-r requirements.txt (line 6)) (3.20.3) Requirement already satisfied: google-auth<3,>=1.6.3 in /usr/local/lib/python3.10/dist-packages (from tensorboard->-r requirements.txt (line 6)) (2.17.2) Requirement already satisfied: markdown>=2.6.8 in /usr/local/lib/python3.10/dist-packages (from tensorboard->-r requirements.txt (line 6)) (3.4.3) Requirement already satisfied: tensorboard-plugin-wit>=1.6.0 in /usr/local/lib/python3.10/dist-packages (from tensorboard->-r requirements.txt (line 6)) (1.8.1) Requirement already satisfied: grpcio>=1.48.2 in /usr/local/lib/python3.10/dist-packages (from tensorboard->-r requirements.txt (line 6)) (1.53.0) Requirement already satisfied: setuptools>=41.0.0 in /usr/lib/python3/dist-packages (from tensorboard->-r requirements.txt (line 6)) (59.6.0) Requirement already satisfied: wheel>=0.26 in /usr/lib/python3/dist-packages (from tensorboard->-r requirements.txt (line 6)) (0.37.1) Requirement already satisfied: absl-py>=0.4 in /usr/local/lib/python3.10/dist-packages (from tensorboard->-r requirements.txt (line 6)) (1.4.0) Requirement already satisfied: werkzeug>=1.0.1 in /usr/local/lib/python3.10/dist-packages (from tensorboard->-r requirements.txt (line 6)) (2.2.3) Requirement already satisfied: MarkupSafe>=2.0 in 
/usr/local/lib/python3.10/dist-packages (from Jinja2->-r requirements.txt (line 7)) (2.1.2) Requirement already satisfied: multidict<7.0,>=4.5 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets->-r requirements.txt (line 4)) (6.0.4) Requirement already satisfied: async-timeout<5.0,>=4.0.0a3 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets->-r requirements.txt (line 4)) (4.0.2) Requirement already satisfied: aiosignal>=1.1.2 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets->-r requirements.txt (line 4)) (1.3.1) Requirement already satisfied: frozenlist>=1.1.1 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets->-r requirements.txt (line 4)) (1.3.3) Requirement already satisfied: attrs>=17.3.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets->-r requirements.txt (line 4)) (22.2.0) Requirement already satisfied: charset-normalizer<4.0,>=2.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets->-r requirements.txt (line 4)) (3.1.0) Requirement already satisfied: yarl<2.0,>=1.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets->-r requirements.txt (line 4)) (1.8.2) Requirement already satisfied: cachetools<6.0,>=2.0.0 in /usr/local/lib/python3.10/dist-packages (from google-auth<3,>=1.6.3->tensorboard->-r requirements.txt (line 6)) (5.3.0) Requirement already satisfied: pyasn1-modules>=0.2.1 in /usr/local/lib/python3.10/dist-packages (from google-auth<3,>=1.6.3->tensorboard->-r requirements.txt (line 6)) (0.2.8) Requirement already satisfied: rsa<5,>=3.1.4 in /usr/local/lib/python3.10/dist-packages (from google-auth<3,>=1.6.3->tensorboard->-r requirements.txt (line 6)) (4.9) Requirement already satisfied: six>=1.9.0 in /usr/local/lib/python3.10/dist-packages (from google-auth<3,>=1.6.3->tensorboard->-r requirements.txt (line 6)) (1.16.0) Requirement already satisfied: requests-oauthlib>=0.7.0 in /usr/local/lib/python3.10/dist-packages (from 
google-auth-oauthlib<1.1,>=0.5->tensorboard->-r requirements.txt (line 6)) (1.3.1) Requirement already satisfied: chardet<5,>=3.0.2 in /usr/local/lib/python3.10/dist-packages (from requests->torchvision->-r requirements.txt (line 2)) (4.0.0) Requirement already satisfied: urllib3<1.27,>=1.21.1 in /usr/local/lib/python3.10/dist-packages (from requests->torchvision->-r requirements.txt (line 2)) (1.26.15) Requirement already satisfied: idna<3,>=2.5 in /usr/local/lib/python3.10/dist-packages (from requests->torchvision->-r requirements.txt (line 2)) (2.10) Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.10/dist-packages (from requests->torchvision->-r requirements.txt (line 2)) (2022.12.7) Requirement already satisfied: python-dateutil>=2.8.1 in /usr/local/lib/python3.10/dist-packages (from pandas->datasets->-r requirements.txt (line 4)) (2.8.2) Requirement already satisfied: pytz>=2020.1 in /usr/local/lib/python3.10/dist-packages (from pandas->datasets->-r requirements.txt (line 4)) (2023.3) Requirement already satisfied: pyasn1<0.5.0,>=0.4.6 in /usr/local/lib/python3.10/dist-packages (from pyasn1-modules>=0.2.1->google-auth<3,>=1.6.3->tensorboard->-r requirements.txt (line 6)) (0.4.8) Requirement already satisfied: oauthlib>=3.0.0 in /usr/local/lib/python3.10/dist-packages (from requests-oauthlib>=0.7.0->google-auth-oauthlib<1.1,>=0.5->tensorboard->-r requirements.txt (line 6)) (3.2.2) WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv [notice] A new release of pip is available: 23.0.1 -> 23.2.1 [notice] To update, run: python3 -m pip install --upgrade pip

Download the open-source stable-diffusion-webui library.
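The output of this step contains several "fatal: destination path ... already exists and is not an empty directory" lines. They appear when the cell is re-run after the repositories were already cloned, and the run then appears to continue with the existing checkouts. A minimal sketch of an idempotent clone that skips existing directories is shown below; the repository URL (the upstream AUTOMATIC1111 project) and the helper name clone_if_missing are assumptions, not taken from the notebook.

```python
import os
import subprocess
from typing import Callable, List

# Assumption: the upstream WebUI repository; the notebook may use a different source.
WEBUI_REPO = "https://github.com/AUTOMATIC1111/stable-diffusion-webui.git"


def _run_checked(argv: List[str]) -> None:
    subprocess.run(argv, check=True)


def clone_if_missing(repo_url: str, dest: str,
                     run: Callable[[List[str]], object] = _run_checked) -> bool:
    """Clone repo_url into dest unless dest already exists and is non-empty.

    Returns True if a clone was attempted, False if it was skipped.
    """
    if os.path.isdir(dest) and os.listdir(dest):
        # Reuse the existing checkout instead of letting git fail with "already exists".
        return False
    run(["git", "clone", repo_url, dest])
    return True


if __name__ == "__main__":
    print("cloned" if clone_if_missing(WEBUI_REPO, "stable-diffusion-webui") else "reused existing checkout")
```

The run parameter exists only so the command can be swapped out (for example, recorded instead of executed) when testing.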

Output:

Hit:1 http://mirrors.cloud.aliyuncs.com/ubuntu jammy InRelease Get:2 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-updates InRelease [119 kB] Get:3 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-backports InRelease [109 kB] Get:4 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-security InRelease [110 kB] Fetched 338 kB in 0s (1276 kB/s) Reading package lists... Done Building dependency tree... Done Reading state information... Done 99 packages can be upgraded. Run 'apt list --upgradable' to see them. Reading package lists... Done Building dependency tree... Done Reading state information... Done aria2 is already the newest version (1.36.0-1). 0 upgraded, 0 newly installed, 0 to remove and 99 not upgraded. fatal: destination path 'stable-diffusion-webui' already exists and is not an empty directory. /mnt/workspace/demos/stable_diffusion_try_on/stable-diffusion-webui HEAD is now at a9fed7c3 Merge pull request #8503 from mcmonkey4eva/filename-length-limit-fix Download Results: gid |stat|avg speed |path/URI ======+====+===========+======================================================= add653|OK | 0B/s|.//repositories.tar.gz Status Legend: (OK):download completed. /mnt/workspace/demos/stable_diffusion_try_on/stable-diffusion-webui/extensions fatal: destination path 'a1111-sd-webui-tagcomplete' already exists and is not an empty directory. fatal: destination path 'stable-diffusion-webui-localization-zh_CN' already exists and is not an empty directory. /mnt/workspace/demos/stable_diffusion_try_on/stable-diffusion-webui --2023-09-11 02:37:31-- http://pai-vision-data-sh.oss-cn-shanghai.aliyuncs.com/aigc-data/webui_config/config_tryon.json Resolving pai-vision-data-sh.oss-cn-shanghai.aliyuncs.com (pai-vision-data-sh.oss-cn-shanghai.aliyuncs.com)... 106.14.228.10 Connecting to pai-vision-data-sh.oss-cn-shanghai.aliyuncs.com (pai-vision-data-sh.oss-cn-shanghai.aliyuncs.com)|106.14.228.10|:80... connected. HTTP request sent, awaiting response... 
200 OK The file is already fully retrieved; nothing to do. /mnt/workspace/demos/stable_diffusion_try_on

Fine-tune the Stable Diffusion model.
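LoRA (Low-Rank Adaptation), named in the tutorial title, keeps a pretrained weight matrix W frozen and trains only a low-rank update made of two small matrices, A (r × d_in) and B (d_out × r), so the effective weight becomes W + (alpha / r)·B·A. The dependency-free sketch below shows that merge on plain Python lists. It illustrates the general technique only; it is not the code in train_text_to_image_lora.py, and the matrix values are made up.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain-Python matrix product: rows of x times columns of y."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def merge_lora(w: Matrix, down: Matrix, up: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * up @ down.

    w:    d_out x d_in frozen weight
    down: r x d_in   (often called A)
    up:   d_out x r  (often called B)
    """
    rank = len(down)
    delta = matmul(up, down)  # d_out x d_in, with rank at most r
    scale = alpha / rank
    return [[wij + scale * dij for wij, dij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


if __name__ == "__main__":
    w = [[1.0, 0.0, 0.0],
         [0.0, 1.0, 0.0]]          # 2 x 3 frozen weight
    down = [[1.0, 2.0, 3.0]]       # rank r = 1
    up_init = [[0.0], [0.0]]       # B starts at zero, so training starts exactly from W
    up_trained = [[0.5], [-1.0]]   # illustrative values after some training steps
    print(merge_lora(w, down, up_init, alpha=1.0))
    print(merge_lora(w, down, up_trained, alpha=1.0))
```

Because B is usually initialized to zero, the fine-tuned model initially behaves exactly like the pretrained one. The savings come from the rank: for a hypothetical 768 × 768 projection with r = 4, LoRA trains 4 × (768 + 768) = 6,144 parameters instead of 589,824.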

Prepare the dataset and the training code that the following steps use to train the model.
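The archive unpacks into cloth_train_example/train/, which holds the JPEG images plus a metadata.jsonl file (see the output below). That layout appears to follow the Hugging Face datasets ImageFolder convention, in which each JSON line names an image through file_name and carries further columns such as a caption; the upstream Diffusers example train_text_to_image_lora.py reads captions from a column named text by default (its --caption_column option). The sketch below writes such a file for your own images. The captions are placeholders, not the ones shipped with the tutorial, and whether the tutorial's copy of the script uses the same column name is an assumption.

```python
import json
import os
import tempfile
from typing import Dict, List


def write_metadata(train_dir: str, captions: Dict[str, str]) -> str:
    """Write train_dir/metadata.jsonl with one {"file_name", "text"} record per image."""
    # Validate all images first so a typo does not leave a half-written file behind.
    missing = [name for name in captions if not os.path.isfile(os.path.join(train_dir, name))]
    if missing:
        raise FileNotFoundError(f"images not found in {train_dir}: {missing}")
    path = os.path.join(train_dir, "metadata.jsonl")
    with open(path, "w", encoding="utf-8") as f:
        for file_name in sorted(captions):
            record = {"file_name": file_name, "text": captions[file_name]}
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return path


def read_metadata(path: str) -> List[Dict[str, str]]:
    """Parse metadata.jsonl back into a list of records, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as train_dir:
        # Empty stand-in file; in practice this is a real garment photo.
        open(os.path.join(train_dir, "20230407174311.jpg"), "wb").close()
        path = write_metadata(train_dir, {"20230407174311.jpg": "hypothetical caption: a white T-shirt"})
        print(read_metadata(path))
```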

Output:

--2023-09-10 14:57:01-- http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/datasets/try_on/cloth_train_example.tar.gz Resolving pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com (pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com)... 39.98.1.111 Connecting to pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com (pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com)|39.98.1.111|:80... connected. HTTP request sent, awaiting response... 200 OK Length: 4755690 (4.5M) [application/gzip] Saving to: ‘cloth_train_example.tar.gz’ cloth_train_example 100%[===================>] 4.54M 11.6MB/s in 0.4s 2023-09-10 14:57:02 (11.6 MB/s) - ‘cloth_train_example.tar.gz’ saved [4755690/4755690] cloth_train_example/ cloth_train_example/train/ cloth_train_example/train/20230407174322.jpg cloth_train_example/train/metadata.jsonl cloth_train_example/train/20230407174421.jpg cloth_train_example/train/20230407174403.jpg cloth_train_example/train/20230407174429.jpg cloth_train_example/train/20230407174311.jpg cloth_train_example/train/20230407174332.jpg cloth_train_example/train/20230407174450.jpg cloth_train_example/train/.ipynb_checkpoints/ cloth_train_example/train/.ipynb_checkpoints/20230407174332-checkpoint.jpg cloth_train_example/train/20230407174343.jpg cloth_train_example/train/20230407174354.jpg cloth_train_example/train/20230407174413.jpg cloth_train_example/train/20230407174439.jpg cloth_train_example/.ipynb_checkpoints/ --2023-09-10 14:57:02-- http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/datasets/try_on/train_text_to_image_lora.py Resolving pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com (pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com)... 39.98.1.111 Connecting to pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com (pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com)|39.98.1.111|:80... connected. HTTP request sent, awaiting response... 
200 OK Length: 37688 (37K) [application/octet-stream] Saving to: ‘train_text_to_image_lora.py’ train_text_to_image 100%[===================>] 36.80K --.-KB/s in 0.03s 2023-09-10 14:57:02 (1.06 MB/s) - ‘train_text_to_image_lora.py’ saved [37688/37688]

View the sample garments.


Download the pretrained model and convert it to the Diffusers format.
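This step downloads chilloutmix_NiPrunedFp32Fix.safetensors, presumably a checkpoint in the original single-file Stable Diffusion layout, and converts it to the Diffusers folder layout. You can inspect such a file cheaply before converting it: a .safetensors file starts with an 8-byte little-endian header length followed by a JSON header that lists every tensor, so the header can be read without loading any weights. The sketch below does that and groups tensors by the key prefixes that original Stable Diffusion 1.x checkpoints use for the UNet, VAE, and text encoder; the prefix table is an assumption about this particular model, and the helper names are hypothetical. In the output that follows, the long "Some weights of the model checkpoint at openai/clip-vit-large-patch14 were not used when initializing CLIPTextModel" list is expected: only the text encoder of CLIP is loaded, so the vision_model.* weights and projection heads are skipped.

```python
import json
import struct
from collections import Counter
from typing import Dict

# Assumed key prefixes of original (LDM-style) Stable Diffusion 1.x checkpoints.
COMPONENT_PREFIXES = {
    "model.diffusion_model.": "unet",
    "first_stage_model.": "vae",
    "cond_stage_model.": "text_encoder",
}


def read_safetensors_header(path: str) -> Dict[str, dict]:
    """Read only the JSON header of a .safetensors file; no tensor data is loaded."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))  # little-endian unsigned 64-bit
        header = json.loads(f.read(header_len).decode("utf-8"))
    header.pop("__metadata__", None)  # optional free-form metadata entry, not a tensor
    return header


def count_components(header: Dict[str, dict]) -> Counter:
    """Count tensors per Stable Diffusion component based on their key prefixes."""
    counts: Counter = Counter()
    for key in header:
        component = next((name for prefix, name in COMPONENT_PREFIXES.items()
                          if key.startswith(prefix)), "other")
        counts[component] += 1
    return counts


if __name__ == "__main__":
    import sys
    if len(sys.argv) > 1:
        # e.g. stable-diffusion-webui/models/Stable-diffusion/chilloutmix_NiPrunedFp32Fix.safetensors
        print(count_components(read_safetensors_header(sys.argv[1])))
```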

Output:

[#5e1558 681MiB/685MiB(99%) CN:4 DL:143MiB]m0m] Download Results: gid |stat|avg speed |path/URI ======+====+===========+======================================================= 5e1558|OK | 138MiB/s|.//models--CompVis--stable-diffusion-safety-checker.tar.gz Status Legend: (OK):download completed. [#49605a 0.9GiB/0.9GiB(98%) CN:6 DL:147MiB]m0m] Download Results: gid |stat|avg speed |path/URI ======+====+===========+======================================================= 49605a|OK | 140MiB/s|.//models--openai--clip-vit-large-patch14.tar.gz Status Legend: (OK):download completed. [#e0974b 3.9GiB/3.9GiB(99%) CN:4 DL:169MiB]m0m]m Download Results: gid |stat|avg speed |path/URI ======+====+===========+======================================================= e0974b|OK | 166MiB/s|stable-diffusion-webui/models/Stable-diffusion//chilloutmix_NiPrunedFp32Fix.safetensors Status Legend: (OK):download completed. global_step key not found in model Some weights of the model checkpoint at openai/clip-vit-large-patch14 were not used when initializing CLIPTextModel: ['vision_model.encoder.layers.5.self_attn.q_proj.bias', 'vision_model.encoder.layers.2.mlp.fc1.weight', 'vision_model.encoder.layers.8.layer_norm1.weight', 'vision_model.encoder.layers.7.self_attn.v_proj.weight', 'vision_model.encoder.layers.3.mlp.fc2.bias', 'vision_model.encoder.layers.23.self_attn.out_proj.weight', 'logit_scale', 'vision_model.encoder.layers.10.layer_norm2.bias', 'vision_model.encoder.layers.16.self_attn.k_proj.bias', 'vision_model.embeddings.class_embedding', 'vision_model.encoder.layers.6.mlp.fc1.bias', 'vision_model.encoder.layers.10.self_attn.out_proj.bias', 'vision_model.encoder.layers.19.mlp.fc1.bias', 'vision_model.encoder.layers.5.self_attn.k_proj.bias', 'vision_model.encoder.layers.20.layer_norm1.bias', 'vision_model.encoder.layers.23.mlp.fc1.bias', 'vision_model.encoder.layers.6.self_attn.k_proj.weight', 'vision_model.encoder.layers.14.mlp.fc2.bias', 
'vision_model.encoder.layers.3.layer_norm1.weight', 'vision_model.encoder.layers.0.layer_norm1.weight', 'vision_model.encoder.layers.5.layer_norm1.weight', 'vision_model.encoder.layers.5.self_attn.q_proj.weight', 'vision_model.encoder.layers.13.layer_norm1.bias', 'vision_model.encoder.layers.2.layer_norm2.bias', 'vision_model.encoder.layers.9.self_attn.k_proj.bias', 'vision_model.encoder.layers.20.mlp.fc2.bias', 'vision_model.encoder.layers.18.self_attn.k_proj.weight', 'vision_model.encoder.layers.21.self_attn.out_proj.weight', 'vision_model.encoder.layers.13.layer_norm2.weight', 'vision_model.encoder.layers.15.self_attn.out_proj.weight', 'vision_model.encoder.layers.9.layer_norm2.weight', 'vision_model.encoder.layers.23.self_attn.k_proj.bias', 'vision_model.encoder.layers.18.self_attn.q_proj.weight', 'vision_model.encoder.layers.23.layer_norm1.weight', 'vision_model.encoder.layers.17.self_attn.k_proj.bias', 'vision_model.encoder.layers.20.mlp.fc1.bias', 'vision_model.encoder.layers.9.layer_norm1.weight', 'vision_model.encoder.layers.7.mlp.fc2.bias', 'vision_model.encoder.layers.17.layer_norm1.weight', 'vision_model.encoder.layers.2.self_attn.out_proj.weight', 'vision_model.encoder.layers.15.layer_norm2.bias', 'vision_model.encoder.layers.18.self_attn.k_proj.bias', 'vision_model.encoder.layers.10.self_attn.v_proj.bias', 'vision_model.encoder.layers.13.mlp.fc1.bias', 'vision_model.encoder.layers.13.self_attn.v_proj.weight', 'vision_model.encoder.layers.1.mlp.fc1.weight', 'vision_model.encoder.layers.23.layer_norm1.bias', 'vision_model.encoder.layers.6.self_attn.out_proj.bias', 'vision_model.encoder.layers.2.layer_norm1.bias', 'vision_model.encoder.layers.13.layer_norm2.bias', 'vision_model.encoder.layers.4.layer_norm1.bias', 'vision_model.encoder.layers.20.self_attn.q_proj.weight', 'vision_model.encoder.layers.13.mlp.fc1.weight', 'vision_model.encoder.layers.23.mlp.fc2.weight', 'vision_model.encoder.layers.16.mlp.fc2.weight', 
'vision_model.encoder.layers.10.layer_norm1.weight', 'vision_model.encoder.layers.16.self_attn.out_proj.weight', 'vision_model.encoder.layers.9.self_attn.out_proj.weight', 'vision_model.encoder.layers.3.mlp.fc2.weight', 'vision_model.encoder.layers.16.mlp.fc2.bias', 'vision_model.encoder.layers.1.self_attn.q_proj.weight', 'vision_model.encoder.layers.17.layer_norm2.bias', 'vision_model.encoder.layers.11.self_attn.q_proj.bias', 'vision_model.encoder.layers.19.layer_norm2.bias', 'vision_model.encoder.layers.9.layer_norm1.bias', 'vision_model.encoder.layers.0.self_attn.q_proj.bias', 'vision_model.encoder.layers.18.self_attn.out_proj.bias', 'vision_model.encoder.layers.2.self_attn.k_proj.bias', 'vision_model.encoder.layers.11.layer_norm2.weight', 'vision_model.encoder.layers.14.self_attn.q_proj.weight', 'vision_model.encoder.layers.22.self_attn.k_proj.weight', 'vision_model.encoder.layers.17.self_attn.q_proj.weight', 'vision_model.encoder.layers.0.layer_norm2.bias', 'vision_model.encoder.layers.17.mlp.fc2.bias', 'vision_model.encoder.layers.20.self_attn.k_proj.bias', 'vision_model.encoder.layers.22.layer_norm1.bias', 'vision_model.encoder.layers.11.self_attn.k_proj.weight', 'vision_model.encoder.layers.14.self_attn.out_proj.bias', 'vision_model.encoder.layers.23.self_attn.out_proj.bias', 'vision_model.encoder.layers.8.self_attn.k_proj.bias', 'vision_model.encoder.layers.5.mlp.fc2.bias', 'vision_model.encoder.layers.1.layer_norm1.bias', 'vision_model.encoder.layers.4.self_attn.q_proj.weight', 'vision_model.encoder.layers.17.layer_norm2.weight', 'vision_model.encoder.layers.4.mlp.fc2.bias', 'vision_model.encoder.layers.5.self_attn.v_proj.weight', 'vision_model.encoder.layers.0.layer_norm1.bias', 'vision_model.encoder.layers.3.layer_norm2.bias', 'vision_model.encoder.layers.7.mlp.fc1.bias', 'vision_model.encoder.layers.10.self_attn.q_proj.bias', 'vision_model.encoder.layers.10.layer_norm1.bias', 'vision_model.encoder.layers.8.self_attn.q_proj.bias', 
'vision_model.encoder.layers.0.layer_norm2.weight', 'vision_model.encoder.layers.14.self_attn.v_proj.bias', 'vision_model.encoder.layers.6.self_attn.q_proj.bias', 'vision_model.encoder.layers.11.self_attn.out_proj.bias', 'vision_model.encoder.layers.3.mlp.fc1.bias', 'vision_model.encoder.layers.19.self_attn.out_proj.weight', 'vision_model.encoder.layers.16.layer_norm2.bias', 'vision_model.pre_layrnorm.bias', 'vision_model.encoder.layers.21.layer_norm2.weight', 'text_projection.weight', 'vision_model.encoder.layers.7.self_attn.out_proj.bias', 'vision_model.encoder.layers.4.self_attn.v_proj.weight', 'vision_model.encoder.layers.21.layer_norm2.bias', 'vision_model.encoder.layers.18.layer_norm2.weight', 'vision_model.encoder.layers.4.mlp.fc2.weight', 'vision_model.encoder.layers.6.self_attn.out_proj.weight', 'vision_model.encoder.layers.10.mlp.fc2.weight', 'vision_model.encoder.layers.12.layer_norm1.bias', 'vision_model.encoder.layers.22.layer_norm2.bias', 'vision_model.encoder.layers.23.self_attn.k_proj.weight', 'vision_model.encoder.layers.2.self_attn.k_proj.weight', 'vision_model.encoder.layers.11.self_attn.k_proj.bias', 'vision_model.encoder.layers.9.mlp.fc1.bias', 'vision_model.encoder.layers.14.layer_norm1.weight', 'vision_model.encoder.layers.21.self_attn.v_proj.bias', 'vision_model.encoder.layers.1.layer_norm1.weight', 'vision_model.encoder.layers.6.self_attn.v_proj.weight', 'vision_model.encoder.layers.14.self_attn.k_proj.bias', 'vision_model.encoder.layers.22.mlp.fc1.bias', 'vision_model.encoder.layers.11.self_attn.q_proj.weight', 'vision_model.encoder.layers.22.self_attn.out_proj.bias', 'vision_model.encoder.layers.6.layer_norm1.weight', 'vision_model.encoder.layers.23.mlp.fc2.bias', 'vision_model.encoder.layers.13.mlp.fc2.weight', 'vision_model.encoder.layers.1.self_attn.out_proj.bias', 'vision_model.encoder.layers.10.self_attn.k_proj.weight', 'vision_model.encoder.layers.17.self_attn.v_proj.bias', 'vision_model.encoder.layers.7.layer_norm1.bias', 
'vision_model.encoder.layers.20.layer_norm2.weight', 'vision_model.encoder.layers.10.self_attn.v_proj.weight', 'vision_model.encoder.layers.10.mlp.fc2.bias', 'vision_model.encoder.layers.0.mlp.fc2.bias', 'vision_model.encoder.layers.7.self_attn.k_proj.bias', 'vision_model.encoder.layers.16.self_attn.q_proj.weight', 'vision_model.encoder.layers.1.layer_norm2.weight', 'vision_model.encoder.layers.13.self_attn.out_proj.weight', 'vision_model.encoder.layers.15.self_attn.v_proj.bias', 'vision_model.encoder.layers.12.mlp.fc2.weight', 'vision_model.encoder.layers.23.self_attn.v_proj.bias', 'vision_model.encoder.layers.1.self_attn.k_proj.bias', 'vision_model.encoder.layers.17.self_attn.q_proj.bias', 'vision_model.encoder.layers.17.self_attn.out_proj.bias', 'vision_model.encoder.layers.1.mlp.fc2.bias', 'vision_model.encoder.layers.8.self_attn.v_proj.weight', 'vision_model.encoder.layers.19.self_attn.v_proj.weight', 'vision_model.encoder.layers.11.mlp.fc2.weight', 'vision_model.encoder.layers.5.layer_norm1.bias', 'vision_model.encoder.layers.22.self_attn.out_proj.weight', 'vision_model.encoder.layers.10.layer_norm2.weight', 'vision_model.encoder.layers.14.self_attn.v_proj.weight', 'vision_model.encoder.layers.6.self_attn.k_proj.bias', 'vision_model.encoder.layers.2.mlp.fc2.weight', 'vision_model.encoder.layers.20.mlp.fc1.weight', 'vision_model.encoder.layers.8.self_attn.q_proj.weight', 'vision_model.encoder.layers.5.mlp.fc1.bias', 'vision_model.encoder.layers.19.self_attn.q_proj.bias', 'vision_model.encoder.layers.18.self_attn.out_proj.weight', 'vision_model.encoder.layers.3.self_attn.k_proj.bias', 'vision_model.encoder.layers.12.mlp.fc1.weight', 'vision_model.encoder.layers.16.layer_norm1.bias', 'vision_model.encoder.layers.7.mlp.fc2.weight', 'vision_model.encoder.layers.5.self_attn.v_proj.bias', 'vision_model.encoder.layers.6.mlp.fc1.weight', 'vision_model.encoder.layers.20.layer_norm2.bias', 'vision_model.encoder.layers.15.self_attn.q_proj.bias', 
'vision_model.encoder.layers.16.mlp.fc1.weight', 'vision_model.encoder.layers.23.layer_norm2.weight', 'vision_model.encoder.layers.11.self_attn.out_proj.weight', 'vision_model.encoder.layers.4.mlp.fc1.bias', 'vision_model.encoder.layers.12.mlp.fc1.bias', 'vision_model.encoder.layers.8.mlp.fc2.bias', 'vision_model.encoder.layers.10.self_attn.q_proj.weight', 'vision_model.encoder.layers.19.layer_norm1.weight', 'vision_model.encoder.layers.22.layer_norm1.weight', 'vision_model.encoder.layers.15.mlp.fc2.weight', 'vision_model.encoder.layers.13.mlp.fc2.bias', 'vision_model.encoder.layers.8.layer_norm2.bias', 'vision_model.encoder.layers.8.self_attn.k_proj.weight', 'vision_model.encoder.layers.8.mlp.fc2.weight', 'vision_model.encoder.layers.9.self_attn.v_proj.weight', 'vision_model.encoder.layers.8.self_attn.v_proj.bias', 'vision_model.encoder.layers.11.layer_norm1.weight', 'vision_model.encoder.layers.13.self_attn.out_proj.bias', 'vision_model.encoder.layers.23.layer_norm2.bias', 'vision_model.encoder.layers.22.mlp.fc1.weight', 'vision_model.encoder.layers.0.mlp.fc1.weight', 'vision_model.encoder.layers.1.mlp.fc1.bias', 'vision_model.encoder.layers.11.self_attn.v_proj.bias', 'vision_model.encoder.layers.16.self_attn.v_proj.bias', 'vision_model.encoder.layers.7.self_attn.k_proj.weight', 'vision_model.encoder.layers.14.mlp.fc2.weight', 'vision_model.encoder.layers.23.self_attn.v_proj.weight', 'vision_model.encoder.layers.9.self_attn.out_proj.bias', 'vision_model.encoder.layers.21.self_attn.q_proj.bias', 'vision_model.encoder.layers.4.self_attn.k_proj.bias', 'vision_model.encoder.layers.1.self_attn.k_proj.weight', 'vision_model.encoder.layers.2.layer_norm2.weight', 'vision_model.encoder.layers.9.mlp.fc2.bias', 'vision_model.encoder.layers.13.self_attn.k_proj.bias', 'vision_model.encoder.layers.9.self_attn.q_proj.weight', 'vision_model.encoder.layers.11.mlp.fc1.weight', 'vision_model.encoder.layers.10.mlp.fc1.bias', 'vision_model.encoder.layers.9.self_attn.q_proj.bias', 
'vision_model.encoder.layers.9.self_attn.k_proj.weight', 'vision_model.encoder.layers.16.self_attn.k_proj.weight', 'vision_model.encoder.layers.7.self_attn.v_proj.bias', 'vision_model.encoder.layers.13.self_attn.q_proj.bias', 'vision_model.encoder.layers.12.self_attn.out_proj.bias', 'vision_model.encoder.layers.13.layer_norm1.weight', 'vision_model.encoder.layers.3.layer_norm1.bias', 'vision_model.encoder.layers.19.layer_norm2.weight', 'vision_model.encoder.layers.15.self_attn.k_proj.bias', 'vision_model.encoder.layers.15.mlp.fc1.bias', 'vision_model.encoder.layers.17.mlp.fc1.weight', 'vision_model.embeddings.patch_embedding.weight', 'vision_model.encoder.layers.17.layer_norm1.bias', 'vision_model.encoder.layers.22.self_attn.v_proj.weight', 'visual_projection.weight', 'vision_model.encoder.layers.5.mlp.fc1.weight', 'vision_model.encoder.layers.11.layer_norm2.bias', 'vision_model.encoder.layers.21.mlp.fc1.bias', 'vision_model.encoder.layers.4.layer_norm2.weight', 'vision_model.encoder.layers.2.self_attn.q_proj.weight', 'vision_model.encoder.layers.4.self_attn.v_proj.bias', 'vision_model.encoder.layers.20.self_attn.v_proj.weight', 'vision_model.encoder.layers.21.self_attn.v_proj.weight', 'vision_model.encoder.layers.12.mlp.fc2.bias', 'vision_model.encoder.layers.9.mlp.fc1.weight', 'vision_model.encoder.layers.4.mlp.fc1.weight', 'vision_model.encoder.layers.0.mlp.fc2.weight', 'vision_model.encoder.layers.1.self_attn.q_proj.bias', 'vision_model.encoder.layers.7.layer_norm2.bias', 'vision_model.encoder.layers.22.self_attn.v_proj.bias', 'vision_model.encoder.layers.18.mlp.fc1.bias', 'vision_model.encoder.layers.6.mlp.fc2.weight', 'vision_model.encoder.layers.13.self_attn.q_proj.weight', 'vision_model.encoder.layers.7.mlp.fc1.weight', 'vision_model.encoder.layers.1.mlp.fc2.weight', 'vision_model.encoder.layers.21.layer_norm1.weight', 'vision_model.encoder.layers.18.self_attn.q_proj.bias', 'vision_model.encoder.layers.8.layer_norm2.weight', 
'vision_model.encoder.layers.18.self_attn.v_proj.weight', 'vision_model.encoder.layers.18.layer_norm1.weight', 'vision_model.encoder.layers.7.self_attn.q_proj.bias', 'vision_model.encoder.layers.11.self_attn.v_proj.weight', 'vision_model.encoder.layers.5.self_attn.out_proj.bias', 'vision_model.encoder.layers.21.mlp.fc1.weight', 'vision_model.encoder.layers.17.self_attn.out_proj.weight', 'vision_model.encoder.layers.12.self_attn.v_proj.weight', 'vision_model.encoder.layers.2.self_attn.v_proj.bias', 'vision_model.encoder.layers.2.self_attn.out_proj.bias', 'vision_model.encoder.layers.6.self_attn.v_proj.bias', 'vision_model.encoder.layers.9.layer_norm2.bias', 'vision_model.encoder.layers.19.self_attn.k_proj.weight', 'vision_model.encoder.layers.12.layer_norm2.weight', 'vision_model.encoder.layers.0.self_attn.v_proj.bias', 'vision_model.encoder.layers.6.self_attn.q_proj.weight', 'vision_model.encoder.layers.22.mlp.fc2.bias', 'vision_model.encoder.layers.4.self_attn.k_proj.weight', 'vision_model.encoder.layers.10.mlp.fc1.weight', 'vision_model.encoder.layers.3.mlp.fc1.weight', 'vision_model.encoder.layers.6.layer_norm2.bias', 'vision_model.encoder.layers.0.self_attn.k_proj.weight', 'vision_model.encoder.layers.3.layer_norm2.weight', 'vision_model.encoder.layers.9.mlp.fc2.weight', 'vision_model.encoder.layers.4.self_attn.q_proj.bias', 'vision_model.encoder.layers.15.layer_norm1.bias', 'vision_model.encoder.layers.8.mlp.fc1.weight', 'vision_model.encoder.layers.20.self_attn.out_proj.bias', 'vision_model.encoder.layers.12.self_attn.q_proj.bias', 'vision_model.encoder.layers.18.self_attn.v_proj.bias', 'vision_model.encoder.layers.6.layer_norm2.weight', 'vision_model.encoder.layers.18.layer_norm2.bias', 'vision_model.encoder.layers.21.layer_norm1.bias', 'vision_model.encoder.layers.5.self_attn.out_proj.weight', 'vision_model.embeddings.position_ids', 'vision_model.encoder.layers.15.self_attn.q_proj.weight', 'vision_model.encoder.layers.12.self_attn.v_proj.bias', 
'vision_model.encoder.layers.2.mlp.fc1.bias', 'vision_model.encoder.layers.3.self_attn.q_proj.weight', 'vision_model.encoder.layers.2.layer_norm1.weight', 'vision_model.encoder.layers.4.self_attn.out_proj.weight', 'vision_model.encoder.layers.1.self_attn.v_proj.bias', 'vision_model.encoder.layers.8.self_attn.out_proj.bias', 'vision_model.encoder.layers.8.mlp.fc1.bias', 'vision_model.encoder.layers.5.self_attn.k_proj.weight', 'vision_model.encoder.layers.15.self_attn.v_proj.weight', 'vision_model.encoder.layers.15.self_attn.k_proj.weight', 'vision_model.pre_layrnorm.weight', 'vision_model.encoder.layers.20.mlp.fc2.weight', 'vision_model.encoder.layers.16.self_attn.q_proj.bias', 'vision_model.encoder.layers.6.layer_norm1.bias', 'vision_model.encoder.layers.19.self_attn.q_proj.weight', 'vision_model.encoder.layers.7.self_attn.q_proj.weight', 'vision_model.encoder.layers.19.self_attn.v_proj.bias', 'vision_model.encoder.layers.17.mlp.fc2.weight', 'vision_model.encoder.layers.0.self_attn.out_proj.bias', 'vision_model.encoder.layers.5.layer_norm2.weight', 'vision_model.encoder.layers.3.self_attn.k_proj.weight', 'vision_model.encoder.layers.17.mlp.fc1.bias', 'vision_model.encoder.layers.16.layer_norm2.weight', 'vision_model.encoder.layers.23.self_attn.q_proj.bias', 'vision_model.encoder.layers.12.layer_norm1.weight', 'vision_model.encoder.layers.13.self_attn.v_proj.bias', 'vision_model.encoder.layers.15.self_attn.out_proj.bias', 'vision_model.encoder.layers.11.mlp.fc1.bias', 'vision_model.encoder.layers.7.layer_norm1.weight', 'vision_model.encoder.layers.3.self_attn.out_proj.bias', 'vision_model.encoder.layers.2.self_attn.q_proj.bias', 'vision_model.encoder.layers.21.self_attn.q_proj.weight', 'vision_model.encoder.layers.12.self_attn.k_proj.weight', 'vision_model.encoder.layers.23.self_attn.q_proj.weight', 'vision_model.encoder.layers.3.self_attn.out_proj.weight', 'vision_model.encoder.layers.19.layer_norm1.bias', 'vision_model.encoder.layers.14.self_attn.out_proj.weight', 
'vision_model.encoder.layers.0.self_attn.q_proj.weight', 'vision_model.encoder.layers.16.mlp.fc1.bias', 'vision_model.encoder.layers.4.layer_norm1.weight', 'vision_model.encoder.layers.14.layer_norm1.bias', 'vision_model.encoder.layers.20.self_attn.v_proj.bias', 'vision_model.encoder.layers.21.mlp.fc2.weight', 'vision_model.encoder.layers.12.self_attn.k_proj.bias', 'vision_model.encoder.layers.20.self_attn.k_proj.weight', 'vision_model.encoder.layers.1.layer_norm2.bias', 'vision_model.encoder.layers.23.mlp.fc1.weight', 'vision_model.encoder.layers.12.layer_norm2.bias', 'vision_model.encoder.layers.14.layer_norm2.bias', 'vision_model.encoder.layers.18.mlp.fc2.weight', 'vision_model.encoder.layers.19.mlp.fc2.bias', 'vision_model.encoder.layers.10.self_attn.out_proj.weight', 'vision_model.encoder.layers.17.self_attn.v_proj.weight', 'vision_model.encoder.layers.16.self_attn.out_proj.bias', 'vision_model.encoder.layers.22.layer_norm2.weight', 'vision_model.encoder.layers.14.mlp.fc1.bias', 'vision_model.encoder.layers.0.self_attn.k_proj.bias', 'vision_model.encoder.layers.14.self_attn.q_proj.bias', 'vision_model.encoder.layers.22.self_attn.k_proj.bias', 'vision_model.encoder.layers.12.self_attn.q_proj.weight', 'vision_model.encoder.layers.21.self_attn.k_proj.bias', 'vision_model.encoder.layers.22.self_attn.q_proj.weight', 'vision_model.encoder.layers.15.layer_norm1.weight', 'vision_model.post_layernorm.bias', 'vision_model.encoder.layers.18.layer_norm1.bias', 'vision_model.encoder.layers.5.layer_norm2.bias', 'vision_model.encoder.layers.19.self_attn.k_proj.bias', 'vision_model.encoder.layers.11.mlp.fc2.bias', 'vision_model.encoder.layers.11.layer_norm1.bias', 'vision_model.encoder.layers.0.mlp.fc1.bias', 'vision_model.encoder.layers.14.mlp.fc1.weight', 'vision_model.encoder.layers.22.mlp.fc2.weight', 'vision_model.encoder.layers.20.self_attn.out_proj.weight', 'vision_model.encoder.layers.7.layer_norm2.weight', 'vision_model.encoder.layers.8.layer_norm1.bias', 
'vision_model.encoder.layers.14.layer_norm2.weight', 'vision_model.encoder.layers.4.layer_norm2.bias', 'vision_model.encoder.layers.22.self_attn.q_proj.bias', 'vision_model.encoder.layers.15.mlp.fc1.weight', 'vision_model.encoder.layers.19.self_attn.out_proj.bias', 'vision_model.encoder.layers.15.mlp.fc2.bias', 'vision_model.encoder.layers.3.self_attn.v_proj.bias', 'vision_model.encoder.layers.0.self_attn.v_proj.weight', 'vision_model.encoder.layers.16.self_attn.v_proj.weight', 'vision_model.encoder.layers.16.layer_norm1.weight', 'vision_model.encoder.layers.1.self_attn.out_proj.weight', 'vision_model.encoder.layers.18.mlp.fc1.weight', 'vision_model.encoder.layers.8.self_attn.out_proj.weight', 'vision_model.encoder.layers.4.self_attn.out_proj.bias', 'vision_model.encoder.layers.18.mlp.fc2.bias', 'vision_model.encoder.layers.10.self_attn.k_proj.bias', 'vision_model.encoder.layers.14.self_attn.k_proj.weight', 'vision_model.encoder.layers.20.layer_norm1.weight', 'vision_model.encoder.layers.21.mlp.fc2.bias', 'vision_model.encoder.layers.19.mlp.fc2.weight', 'vision_model.encoder.layers.0.self_attn.out_proj.weight', 'vision_model.encoder.layers.21.self_attn.out_proj.bias', 'vision_model.embeddings.position_embedding.weight', 'vision_model.encoder.layers.5.mlp.fc2.weight', 'vision_model.encoder.layers.3.self_attn.v_proj.weight', 'vision_model.post_layernorm.weight', 'vision_model.encoder.layers.2.self_attn.v_proj.weight', 'vision_model.encoder.layers.12.self_attn.out_proj.weight', 'vision_model.encoder.layers.20.self_attn.q_proj.bias', 'vision_model.encoder.layers.17.self_attn.k_proj.weight', 'vision_model.encoder.layers.2.mlp.fc2.bias', 'vision_model.encoder.layers.13.self_attn.k_proj.weight', 'vision_model.encoder.layers.3.self_attn.q_proj.bias', 'vision_model.encoder.layers.19.mlp.fc1.weight', 'vision_model.encoder.layers.9.self_attn.v_proj.bias', 'vision_model.encoder.layers.7.self_attn.out_proj.weight', 'vision_model.encoder.layers.6.mlp.fc2.bias', 
'vision_model.encoder.layers.15.layer_norm2.weight', 'vision_model.encoder.layers.21.self_attn.k_proj.weight', 'vision_model.encoder.layers.1.self_attn.v_proj.weight']
- This IS expected if you are initializing CLIPTextModel from the checkpoint of a model trained on another task or with another architecture (e.g. initializing a BertForSequenceClassification model from a BertForPreTraining model).
- This IS NOT expected if you are initializing CLIPTextModel from the checkpoint of a model that you expect to be exactly identical (initializing a BertForSequenceClassification model from a BertForSequenceClassification model).

Train the model.
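The training log for this step reports 11 examples, 200 epochs, a per-device batch size of 1, gradient accumulation of 1, and 2200 total optimization steps. The sketch below shows how those numbers fit together. It assumes epoch-based step accounting: batches per epoch are rounded up, and the optimizer takes one update per accumulation window. The function name is hypothetical and is not part of the training script.

```python
import math


def total_optimization_steps(num_examples: int, num_epochs: int,
                             per_device_batch_size: int = 1,
                             num_processes: int = 1,
                             grad_accum_steps: int = 1) -> int:
    """Estimate the optimizer-step count for an epoch-based training loop.

    Assumption: each epoch yields ceil(examples / (batch size * processes))
    batches per process, and the optimizer steps once every grad_accum_steps
    batches (a partial accumulation window at the end still counts as a step).
    """
    batches_per_epoch = math.ceil(num_examples / (per_device_batch_size * num_processes))
    updates_per_epoch = math.ceil(batches_per_epoch / grad_accum_steps)
    return updates_per_epoch * num_epochs


# Values reported in the training log of this tutorial.
print(total_optimization_steps(num_examples=11, num_epochs=200))  # 2200
```

With a batch size of 1 and no accumulation, each of the 11 images becomes one optimizer step per epoch, so 11 × 200 = 2200.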


/usr/local/lib/python3.10/dist-packages/accelerate/accelerator.py:249: FutureWarning: `logging_dir` is deprecated and will be removed in version 0.18.0 of Accelerate. Use `project_dir` instead.
  warnings.warn(
09/11/2023 02:06:31 - INFO - __main__ - Distributed environment: MULTI_GPU Backend: nccl
Num processes: 1
Process index: 0
Local process index: 0
Device: cuda:0
Mixed precision type: fp16
{'variance_type'} was not found in config. Values will be initialized to default values.
Downloading and preparing dataset imagefolder/cloth_train_example to /root/.cache/huggingface/datasets/imagefolder/cloth_train_example-42de2ef4c663cf76/0.0.0/37fbb85cc714a338bea574ac6c7d0b5be5aff46c1862c1989b20e0771199e93f...
Downloading data files: 100%|████████████████| 13/13 [00:00<00:00, 55356.30it/s]
Downloading data files: 0it [00:00, ?it/s]
Extracting data files: 0it [00:00, ?it/s]
Dataset imagefolder downloaded and prepared to /root/.cache/huggingface/datasets/imagefolder/cloth_train_example-42de2ef4c663cf76/0.0.0/37fbb85cc714a338bea574ac6c7d0b5be5aff46c1862c1989b20e0771199e93f. Subsequent calls will reuse this data.
100%|████████████████████████████████████████████| 1/1 [00:00<00:00, 360.09it/s]
09/11/2023 02:07:00 - INFO - __main__ - ***** Running training *****
09/11/2023 02:07:00 - INFO - __main__ - Num examples = 11
09/11/2023 02:07:00 - INFO - __main__ - Num Epochs = 200
09/11/2023 02:07:00 - INFO - __main__ - Instantaneous batch size per device = 1
09/11/2023 02:07:00 - INFO - __main__ - Total train batch size (w. parallel, distributed & accumulation) = 1
09/11/2023 02:07:00 - INFO - __main__ - Gradient Accumulation steps = 1
09/11/2023 02:07:00 - INFO - __main__ - Total optimization steps = 2200
Steps: 0%| | 0/2200 [00:00<?, ?it/s]
/usr/local/lib/python3.10/dist-packages/diffusers/schedulers/scheduling_ddpm.py:172: FutureWarning: Accessing `num_train_timesteps` directly via scheduler.num_train_timesteps is deprecated. Please use `scheduler.config.num_train_timesteps instead`
  deprecate(
Steps: 0%| | 11/2200 [00:07<16:24, 2.22it/s, lr=0.0001, step_loss=0.299]
09/11/2023 02:07:08 - INFO - __main__ - Running validation... Generating 4 images with prompt: cloth1.
Potential NSFW content was detected in one or more images. A black image will be returned instead. Try again with a different prompt and/or seed.
Potential NSFW content was detected in one or more images. A black image will be returned instead. Try again with a different prompt and/or seed.
Steps: 50%|█▌ | 1111/2200 [08:28<07:54, 2.30it/s, lr=0.0001, step_loss=0.0404]
09/11/2023 02:15:28 - INFO - __main__ - Running validation... Generating 4 images with prompt: cloth1.
Steps: 100%|█████| 2200/2200 [16:41<00:00, 2.30it/s, lr=0.0001, step_loss=0.38]
Model weights saved in cloth-model-lora/pytorch_lora_weights.bin
100%|███████████████████████████████████████████| 30/30 [00:02<00:00, 10.87it/s]
100%|███████████████████████████████████████████| 30/30 [00:02<00:00, 10.86it/s]
100%|███████████████████████████████████████████| 30/30 [00:02<00:00, 10.85it/s]
100%|███████████████████████████████████████████| 30/30 [00:02<00:00, 10.85it/s]
Steps: 100%|█████| 2200/2200 [16:58<00:00, 2.16it/s, lr=0.0001, step_loss=0.38]

Prepare the model files required by the WebUI.

Convert the LoRA model to a format that the WebUI supports, and copy it to the WebUI directory.
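The tutorial does not show the internals of the downloaded `convert-to-safetensors.py`. The hypothetical sketch below illustrates the kind of key renaming such a conversion typically performs. It assumes that the diffusers training script saved attention-processor LoRA keys, for example `...attn1.processor.to_q_lora.down.weight`. It also assumes that the WebUI expects kohya-style names, for example `lora_unet_..._attn1_to_q.lora_down.weight`. Treat both formats as assumptions, not as a description of the actual script.

```python
def diffusers_to_kohya_key(key: str) -> str:
    """Map one diffusers attention-processor LoRA key to a kohya-style key.

    Example (assumed formats):
      unet.down_blocks.0.attentions.0.transformer_blocks.0.attn1.processor.to_q_lora.down.weight
      -> lora_unet_down_blocks_0_attentions_0_transformer_blocks_0_attn1_to_q.lora_down.weight
    """
    # Some diffusers versions prefix UNet keys with "unet."; others do not.
    if key.startswith("unet."):
        key = key[len("unet."):]
    module_path, lora_part = key.split(".processor.")
    # lora_part looks like "to_q_lora.down.weight".
    proj, direction, param = lora_part.split(".")
    proj = proj.removesuffix("_lora")
    # diffusers' to_out is a ModuleList whose first entry is the Linear layer.
    if proj == "to_out":
        proj = "to_out_0"
    kohya_module = "lora_unet_" + module_path.replace(".", "_") + "_" + proj
    return f"{kohya_module}.lora_{direction}.{param}"


def convert_state_dict(state_dict: dict) -> dict:
    """Rename every key; tensor values are passed through unchanged."""
    return {diffusers_to_kohya_key(k): v for k, v in state_dict.items()}
```

A real conversion would load the `.bin` file, for example with `torch.load(path, map_location="cpu")`, and write the renamed dictionary with `safetensors.torch.save_file`. Whether the tutorial's script also adds per-module `alpha` entries or handles text-encoder keys is not shown here.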


--2023-09-11 02:30:23-- http://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/datasets/convert-to-safetensors.py
Resolving pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com (pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com)... 39.98.1.111
Connecting to pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com (pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com)|39.98.1.111|:80... connected.
HTTP request sent, awaiting response... 200 OK
Length: 2032 (2.0K) [application/octet-stream]
Saving to: ‘convert-to-safetensors.py’
convert-to-safetens 100%[===================>] 1.98K --.-KB/s in 0s
2023-09-11 02:30:23 (760 MB/s) - ‘convert-to-safetensors.py’ saved [2032/2032]
device is cuda
Saving cloth-model-lora/pytorch_lora_weights_converted.safetensors

Prepare the additional model files. To speed up the download, you can run the following command to download them directly.


[#2647df 96MiB/104MiB(92%) CN:2 DL:51MiB]
Download Results:
gid |stat|avg speed |path/URI
======+====+===========+=======================================================
2647df|OK | 45MiB/s|stable-diffusion-webui/repositories/CodeFormer/weights/facelib//detection_Resnet50_Final.pth
Status Legend:
(OK):download completed.
[#843240 78MiB/81MiB(95%) CN:2 DL:41MiB]
Download Results:
gid |stat|avg speed |path/URI
======+====+===========+=======================================================
843240|OK | 40MiB/s|stable-diffusion-webui/repositories/CodeFormer/weights/facelib//parsing_parsenet.pth
Status Legend:
(OK):download completed.
[#d9cd20 355MiB/359MiB(98%) CN:1 DL:121MiB]
Download Results:
gid |stat|avg speed |path/URI
======+====+===========+=======================================================
d9cd20|OK | 112MiB/s|stable-diffusion-webui/models/Codeformer//codeformer-v0.1.0.pth
Status Legend:
(OK):download completed.
Download Results:
gid |stat|avg speed |path/URI
======+====+===========+=======================================================
60d640|OK | 16MiB/s|stable-diffusion-webui/embeddings//ng_deepnegative_v1_75t.pt
Status Legend:
(OK):download completed.
[#653c40 144MiB/144MiB(99%) CN:1 DL:37MiB]
Download Results:
gid |stat|avg speed |path/URI
======+====+===========+=======================================================
653c40|OK | 37MiB/s|stable-diffusion-webui/models/Lora//koreanDollLikeness_v10.safetensors
Status Legend:
(OK):download completed.
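The download command itself is not reproduced here. Before you start the WebUI, you can confirm that every additional file is in place with a small check like the following. It is a hypothetical helper that assumes the files landed at the relative paths shown in the aria2c results above, with the doubled slashes normalized. Run it, or point `base_dir` at the directory that contains `stable-diffusion-webui`.

```python
from pathlib import Path

# Relative paths taken from the aria2c "Download Results" output (doubled slashes normalized).
EXPECTED_FILES = [
    "stable-diffusion-webui/repositories/CodeFormer/weights/facelib/detection_Resnet50_Final.pth",
    "stable-diffusion-webui/repositories/CodeFormer/weights/facelib/parsing_parsenet.pth",
    "stable-diffusion-webui/models/Codeformer/codeformer-v0.1.0.pth",
    "stable-diffusion-webui/embeddings/ng_deepnegative_v1_75t.pt",
    "stable-diffusion-webui/models/Lora/koreanDollLikeness_v10.safetensors",
]


def missing_files(base_dir: str = ".") -> list[str]:
    """Return the expected files that do not exist as regular files under base_dir."""
    base = Path(base_dir)
    return [rel for rel in EXPECTED_FILES if not (base / rel).is_file()]


if __name__ == "__main__":
    missing = missing_files(".")
    print("All additional model files are present." if not missing else f"Missing: {missing}")
```

An empty result means the downloads completed. Any path listed as missing is a file to download again before you start the WebUI.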

Start the WebUI in DSW.

【Note】: Access to GitHub can be unstable. If the command does not return the expected output and reports a network-related error, such as Network is unreachable or unable to access 'https://github.com/......', rerun the command.

################################################################
Install script for stable-diffusion + Web UI
Tested on Debian 11 (Bullseye)
################################################################
################################################################
Running on root user
################################################################
################################################################
Repo already cloned, using it as install directory
################################################################
################################################################
Create and activate python venv
################################################################
################################################################
Launching launch.py...
################################################################
Python 3.10.6 (main, Mar 10 2023, 10:55:28) [GCC 11.3.0]
Commit hash: a9fed7c364061ae6efb37f797b6b522cb3cf7aa2
Installing requirements for Web UI
Launching Web UI with arguments: --no-download-sd-model --xformers --gradio-queue
Tag Autocomplete: Could not locate model-keyword extension, Lora trigger word completion will be limited to those added through the extra networks menu.
Checkpoint chilloutmix_NiPrunedFp32Fix.safetensors [fc2511737a] not found; loading fallback chilloutmix_NiPrunedFp32Fix.safetensors
Calculating sha256 for /mnt/workspace/demos/stable_diffusion_try_on/stable-diffusion-webui/models/Stable-diffusion/chilloutmix_NiPrunedFp32Fix.safetensors: fc2511737a54c5e80b89ab03e0ab4b98d051ab187f92860f3cd664dc9d08b271
Loading weights [fc2511737a] from /mnt/workspace/demos/stable_diffusion_try_on/stable-diffusion-webui/models/Stable-diffusion/chilloutmix_NiPrunedFp32Fix.safetensors
Creating model from config: /mnt/workspace/demos/stable_diffusion_try_on/stable-diffusion-webui/configs/v1-inference.yaml
LatentDiffusion: Running in eps-prediction mode
DiffusionWrapper has 859.52 M params.
Applying xformers cross attention optimization.
Textual inversion embeddings loaded(1): ng_deepnegative_v1_75t
Model loaded in 25.2s (calculate hash: 23.0s, create model: 0.4s, apply weights to model: 0.4s, apply half(): 0.3s, load VAE: 0.8s, move model to device: 0.3s).
Running on local URL: http://127.0.0.1:7860
To create a public link, set `share=True` in `launch()`.

After the WebUI launched in step 3 finishes starting, click the URL (http://127.0.0.1:7860) in the returned output to open the WebUI page. You can then run model inference on that page.

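【Note】: http://127.0.0.1:7860 is an internal address. You can open the WebUI page only by clicking the link from within the current DSW instance. You cannot open it directly in an external browser.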
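The address is reachable only from inside the instance, and the launch cell keeps running in the foreground. To confirm that the server is up, you can poll the address from a terminal or a second notebook in the same DSW instance. The following is a hypothetical sketch that uses only the Python standard library. The `wait_for_webui` helper and its timeout values are illustrative choices; they are not part of the WebUI.

```python
import time
import urllib.request


def wait_for_webui(url: str = "http://127.0.0.1:7860",
                   timeout_s: float = 300.0, interval_s: float = 5.0) -> bool:
    """Poll url until it answers with HTTP 200 or timeout_s elapses.

    Returns True once the page responds, False if it never did in time.
    """
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval_s) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # Connection refused, timeouts, and HTTP errors (URLError/HTTPError)
            # are all OSError subclasses: the server is not ready yet.
            pass
        time.sleep(interval_s)
    return False


if __name__ == "__main__":
    print("WebUI is up" if wait_for_webui() else "WebUI did not respond in time")
```

Polling with a bounded deadline avoids hanging indefinitely if the launch failed, for example because of the GitHub network errors mentioned above.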

Complete

5

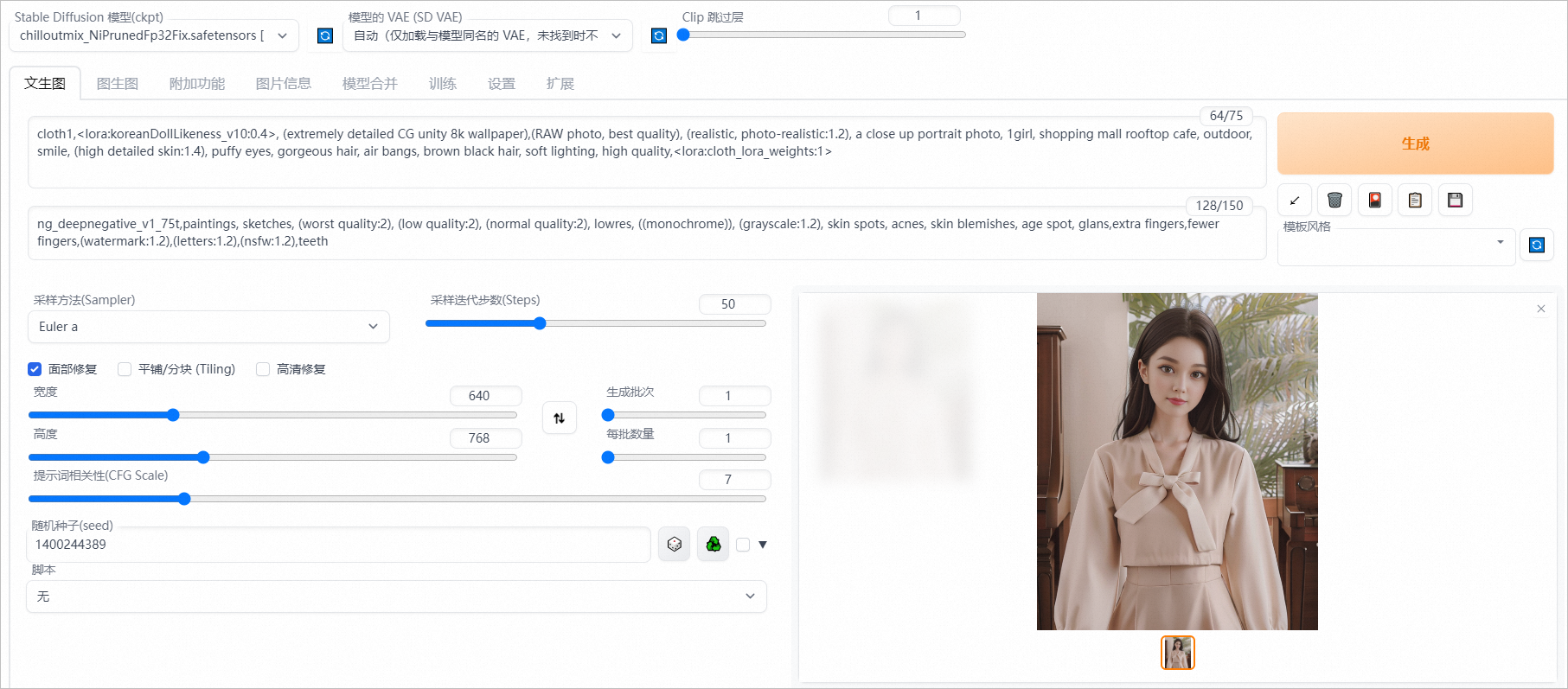

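After completing the steps above, you have fine-tuned the AIGC text-to-image model and deployed the WebUI. You can now verify the model by running inference on the WebUI page.

On the txt2img tab, configure the following parameters: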

Prompt: cloth1, <lora:koreanDollLikeness_v10:0.4>, (extremely detailed CG unity 8k wallpaper),(RAW photo, best quality), (realistic, photo-realistic:1.2), a close up portrait photo, 1girl, shopping mall rooftop cafe, outdoor, smile, (high detailed skin:1.4), puffy eyes, gorgeous hair, air bangs, brown black hair, soft lighting, high quality,<lora:cloth_lora_weights:1>

Negative prompt: ng_deepnegative_v1_75t,paintings, sketches, (worst quality:2), (low quality:2), (normal quality:2), lowres, ((monochrome)), (grayscale:1.2), skin spots, acnes, skin blemishes, age spot, glans,extra fingers,fewer fingers,(watermark:1.2),(letters:1.2),(nsfw:1.2),teeth

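Sampling method (Sampler): Euler a

Sampling steps (Steps): 50

Width and height: 640 (width), 768 (height)

Seed: 1400244389

CFG Scale (prompt relevance): 7

Select the Restore faces check box.

Click Generate. The inference result is shown in the figure.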
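In the prompt above, each `<lora:NAME:MULTIPLIER>` tag tells the WebUI to apply the LoRA named NAME at the given strength. Here, `koreanDollLikeness_v10` is applied at 0.4 and the fine-tuned clothing LoRA `cloth_lora_weights` at 1. This presumably assumes that the converted file was copied into the WebUI's `models/Lora` directory under that name, although the copy command is not shown. To see which LoRAs a prompt activates, you can use a hypothetical helper like the sketch below, which extracts the LoRA tags with a regular expression. It is not the WebUI's own parser and ignores other syntax such as `(text:1.2)` emphasis weights.

```python
import re

# WebUI-style LoRA tag: <lora:NAME:MULTIPLIER>, e.g. <lora:koreanDollLikeness_v10:0.4>.
LORA_TAG = re.compile(r"<lora:([^:>]+):([0-9]*\.?[0-9]+)>")


def extract_lora_tags(prompt: str) -> list[tuple[str, float]]:
    """Return (name, multiplier) pairs in the order they appear in the prompt."""
    return [(name, float(weight)) for name, weight in LORA_TAG.findall(prompt)]


def strip_lora_tags(prompt: str) -> str:
    """Remove LoRA tags, leaving the rest of the prompt text untouched."""
    return LORA_TAG.sub("", prompt)


prompt = ("cloth1, <lora:koreanDollLikeness_v10:0.4>, (RAW photo, best quality), "
          "soft lighting, high quality,<lora:cloth_lora_weights:1>")
print(extract_lora_tags(prompt))
# [('koreanDollLikeness_v10', 0.4), ('cloth_lora_weights', 1.0)]
```

If the trained clothing barely appears in the output, raise the multiplier on `cloth_lora_weights` or lower it on the style LoRA. Both values are set in the tags, not in a separate WebUI field.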

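Cleanup and next steps

5

Cleanup

After claiming the free resource package, use it within the free quota and the valid trial period. If you continue to use compute resources after the free quota is exhausted or the trial period ends, you will receive pay-as-you-go bills.

Go to the Resource Instance Management page to view your free quota usage and expiration time, as shown in the following figure.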

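If you no longer need the DSW instance, stop it as follows.

Log on to the PAI console.

In the upper-left corner of the page, select the region where the DSW instance resides.

In the left-side navigation pane, click Workspaces. On the Workspaces page, click the name of the default workspace to open it.

In the left-side navigation pane of the workspace page, choose Model Development and Training > Interactive Modeling (DSW) to open the Interactive Modeling (DSW) page.

In the Actions column of the target instance, click Stop. Once the instance has stopped, it no longer consumes resources.

If you want to keep using the DSW instance, top up your Alibaba Cloud account at least 1 hour before the trial expires. DSW instances that are not renewed by the expiration time are automatically stopped due to overdue payment.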

Next steps

During the trial period, you can continue to use the DSW instance for model training and inference validation.

Summary

Key concepts

Question 1: Which DSW feature did this tutorial use to complete AIGC training and inference? (Single choice)

Notebook

Terminal

WebIDE

The correct answer is Notebook.