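Alibaba Cloud Service Mesh (ASM) can report trace data to Alibaba Cloud Managed Service for OpenTelemetry and to self-managed systems. You can configure this in the console. This topic describes how to collect trace data to Managed Service for OpenTelemetry and to self-managed systems.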

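Prerequisites

An ACK cluster is added to the ASM instance. For details, see Add a cluster to an ASM instance.

Managed Service for OpenTelemetry is activated for your Alibaba Cloud account. For billing information, see Billing rules.

An ingress gateway is deployed in the ASM instance. For details, see Create an ingress gateway.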

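Collect trace data to Alibaba Cloud Managed Service for OpenTelemetry

Follow the procedure that matches the version of your ASM instance. To upgrade an existing instance, see Upgrade an ASM instance.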
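The version boundaries used in this topic (1.17.2.35, 1.18.0.124, and 1.22.6.89) can be checked mechanically. The following is a minimal sketch, not an official tool: the function names `version_lt` and `asm_version_range` are hypothetical, and the script assumes the version is a dotted numeric string, optionally with a leading `v` and a `-suffix`.

```shell
# Succeeds (exit 0) when dotted version $1 is strictly lower than $2.
# Fields are compared numerically, left to right; missing fields count as 0.
version_lt() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    n = split(a, x, "."); m = split(b, y, ".")
    max = (n > m) ? n : m
    for (i = 1; i <= max; i++) {
      xi = (i <= n) ? x[i] + 0 : 0
      yi = (i <= m) ? y[i] + 0 : 0
      if (xi < yi) exit 0
      if (xi > yi) exit 1
    }
    exit 1
  }'
}

# Prints which version range of this topic applies to ASM version $1.
asm_version_range() {
  v="${1#v}"      # drop an optional leading "v" (assumed format)
  v="${v%%-*}"    # drop an optional "-suffix" (assumed format)
  if version_lt "$v" 1.17.2.35; then
    echo "earlier than 1.17.2.35"
  elif version_lt "$v" 1.18.0.124; then
    echo "1.17.2.35 or later, earlier than 1.18.0.124"
  elif version_lt "$v" 1.22.6.89; then
    echo "1.18.0.124 or later, earlier than 1.22.6.89"
  else
    echo "1.22.6.89 or later"
  fi
}

asm_version_range 1.20.6.10
```

The comparison is numeric per field, so 1.9.x sorts below 1.17.x, which a plain string comparison would get wrong.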

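Earlier than 1.17.2.35

Log on to the ASM console. In the left-side navigation pane, choose Service Mesh > Mesh Management.

On the Mesh Management page, click the name of the target instance, and then open the Basic Information page from the left-side navigation pane.

On the Basic Information page, click Feature Settings. In the Update Feature Settings panel, select Enable Tracing, set the sampling percentage, select Alibaba Cloud Managed Service for OpenTelemetry as the sampling method, and then click OK.

From the left-side navigation pane, go to the Managed Service for OpenTelemetry console to view the trace information.

For more information about tracing, see What is Managed Service for OpenTelemetry.

If you no longer need this feature, clear Enable Tracing in the Update Feature Settings panel, and then click OK.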

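1.17.2.35 or later, earlier than 1.18.0.124

Log on to the ASM console. In the left-side navigation pane, choose Service Mesh > Mesh Management.

On the Mesh Management page, click the name of the target instance, and then open the Tracing page from the left-side navigation pane.

On the Tracing page, click Collect ASM Tracing Data to Managed Service for OpenTelemetry, and then click OK in the confirmation dialog box.

Click Open the Managed Service for OpenTelemetry Console to view the trace information.

For more information about tracing, see What is Managed Service for OpenTelemetry.

If you no longer need this feature, click Disable Collection on the Tracing page, and then click OK in the confirmation dialog box.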

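1.18.0.124 or later, earlier than 1.22.6.89

In this version range, the console does not support configuring export to Managed Service for OpenTelemetry directly. Instead, deploy an OpenTelemetry Collector in the cluster and report the data through it, as described in the following steps.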

Step 1: Deploy the OpenTelemetry Operator

In the kubeconfig environment of the ACK cluster, run the following command to create the opentelemetry-operator-system namespace.

kubectl create namespace opentelemetry-operator-system

Run the following commands to install the OpenTelemetry Operator in the opentelemetry-operator-system namespace by using Helm.

helm repo add open-telemetry https://open-telemetry.github.io/opentelemetry-helm-charts
helm install --namespace=opentelemetry-operator-system opentelemetry-operator open-telemetry/opentelemetry-operator \
  --set "manager.collectorImage.repository=otel/opentelemetry-collector-k8s" \
  --set admissionWebhooks.certManager.enabled=false \
  --set admissionWebhooks.autoGenerateCert.enabled=true

Run the following command to check whether opentelemetry-operator is running properly.

kubectl get pod -n opentelemetry-operator-system

Expected output:

NAME                                      READY   STATUS    RESTARTS   AGE
opentelemetry-operator-854fb558b5-pvllj   2/2     Running   0          1m

If STATUS is Running, opentelemetry-operator is running properly.
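The check on the STATUS column can also be scripted. The following is a minimal sketch under the assumption that the input is the default table printed by `kubectl get pod`; the helper name `all_pods_running` is hypothetical. In addition to STATUS, it requires the READY column to show all containers as ready.

```shell
# Reads the table printed by `kubectl get pod` on stdin.
# Succeeds only if at least one pod is listed and every pod is Running
# with all of its containers ready (READY column of the form "n/n").
all_pods_running() {
  awk '
    $1 == "NAME" { next }   # skip the header row
    NF == 0      { next }   # skip blank lines
    {
      seen++
      split($2, ready, "/")
      if ($3 != "Running" || ready[1] != ready[2]) bad = 1
    }
    END { exit (bad || seen == 0) ? 1 : 0 }
  '
}

# Example usage against a live cluster (not executed here):
#   kubectl get pod -n opentelemetry-operator-system | all_pods_running \
#     && echo "all pods are running"
```

The same helper can be reused for the later pod check in this procedure, because it only depends on the table layout.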

Step 2: Create the OpenTelemetry Collector

Create a file named collector.yaml with the following content.
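A minimal sketch of what such a manifest might look like is given here; it is an illustration under stated assumptions, not the official manifest. It assumes the `opentelemetry.io/v1alpha1` `OpenTelemetryCollector` API with an inline `config` string, a resource named `default` (consistent with the `default-collector` Service name that appears in the expected output of this procedure), a plaintext gRPC connection to the VPC endpoint, and an `Authentication` header that carries the token. Verify each of these against the documentation for your operator version and for Managed Service for OpenTelemetry before use.

```shell
# Writes a sketch of collector.yaml. The quoted heredoc delimiter keeps
# ${ENDPOINT} and ${TOKEN} as literal placeholders to be replaced by hand.
cat > collector.yaml <<'EOF'
apiVersion: opentelemetry.io/v1alpha1   # assumed API version
kind: OpenTelemetryCollector
metadata:
  name: default                         # assumed; yields a "default-collector" Service
  namespace: opentelemetry-operator-system
spec:
  mode: deployment
  config: |
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: 0.0.0.0:4317      # port that the mesh reports to
    exporters:
      otlp:
        endpoint: ${ENDPOINT}
        tls:
          insecure: true                # assumption: plaintext gRPC inside the VPC
        headers:
          Authentication: ${TOKEN}      # assumption: header name used for the token
    service:
      pipelines:
        traces:
          receivers: [otlp]
          exporters: [otlp]
EOF
```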

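In the YAML file, replace ${ENDPOINT} with the VPC endpoint for the gRPC protocol and ${TOKEN} with the authentication token. For how to obtain the endpoint and authentication token of Managed Service for OpenTelemetry, see Access and authentication.

In the kubeconfig environment of the ACK cluster, run the following command to deploy the collector to the cluster.

kubectl apply -f collector.yaml

Run the following command to check whether the collector has started properly.

kubectl get pod -n opentelemetry-operator-system

Expected output:

NAME                                      READY   STATUS    RESTARTS   AGE
opentelemetry-operator-854fb558b5-pvllj   2/2     Running   0          3m
default-collector-5cbb4497f4-2hjqv        1/1     Running   0          30s

The expected output indicates that the collector has started properly.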

Run the following command to check whether the Services are created.

kubectl get svc -n opentelemetry-operator-system

Expected output:

opentelemetry-operator           ClusterIP   172.16.138.165   <none>   8443/TCP,8080/TCP   3m
opentelemetry-operator-webhook   ClusterIP   172.16.127.0     <none>   443/TCP             3m
default-collector                ClusterIP   172.16.145.93    <none>   4317/TCP            30s
default-collector-headless       ClusterIP   None             <none>   4317/TCP            30s
default-collector-monitoring     ClusterIP   172.16.136.5     <none>   8888/TCP            30s

The expected output indicates that the Services are created.
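Because the mesh later reports to port 4317 of the default-collector Service, it can be useful to confirm that this port is actually exposed. The following is a minimal sketch that parses the default `kubectl get svc` table; the helper name `svc_exposes_port` is hypothetical, and the PORT(S) value is assumed to be in the fifth column.

```shell
# Reads `kubectl get svc` table rows on stdin and succeeds if the Service
# named $1 lists port $2 over TCP in its PORT(S) column (column 5).
svc_exposes_port() {
  awk -v name="$1" -v want="$2/TCP" '
    $1 == name {
      n = split($5, ports, ",")
      for (i = 1; i <= n; i++) {
        sub(/:[0-9]+/, "", ports[i])   # drop a node port such as ":31234" if present
        if (ports[i] == want) found = 1
      }
    }
    END { exit found ? 0 : 1 }
  '
}

# Example usage against a live cluster (not executed here):
#   kubectl get svc -n opentelemetry-operator-system \
#     | svc_exposes_port default-collector 4317 && echo "port 4317 is exposed"
```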

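Step 3: Enable tracing in the ASM console

Log on to the ASM console. In the left-side navigation pane, choose Service Mesh > Mesh Management.

On the Mesh Management page, click the name of the target instance, and then open the Observability Settings page from the left-side navigation pane.

In the Tracing Settings section of the Observability Settings page, set the sampling percentage to 100, and then click Submit.

From the left-side navigation pane, open the page that contains the OpenTelemetry export settings. Enter default-collector.opentelemetry-operator-system.svc.cluster.local in OpenTelemetry Service Address/Domain Name, enter 4317 in OpenTelemetry Service Port, and then click Collect ASM Tracing Data to OpenTelemetry.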
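The service address entered in the console follows the standard Kubernetes Service DNS pattern `<service>.<namespace>.svc.<cluster-domain>`. The following is a minimal sketch for deriving it; the helper name `service_fqdn` is hypothetical, and `cluster.local` is assumed as the cluster domain unless a third argument is given.

```shell
# Builds the in-cluster FQDN of a Kubernetes Service.
# $1: Service name, $2: namespace, $3: cluster domain (assumed default: cluster.local)
service_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "${3:-cluster.local}"
}

service_fqdn default-collector opentelemetry-operator-system
# prints: default-collector.opentelemetry-operator-system.svc.cluster.local
```

If the collector is deployed under a different name or namespace, pass those values instead and enter the result in the console.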

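1.22.6.89 or later

Log on to the ASM console. In the left-side navigation pane, choose Service Mesh > Mesh Management.

On the Mesh Management page, click the name of the target instance, and then open the Tracing page from the left-side navigation pane.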

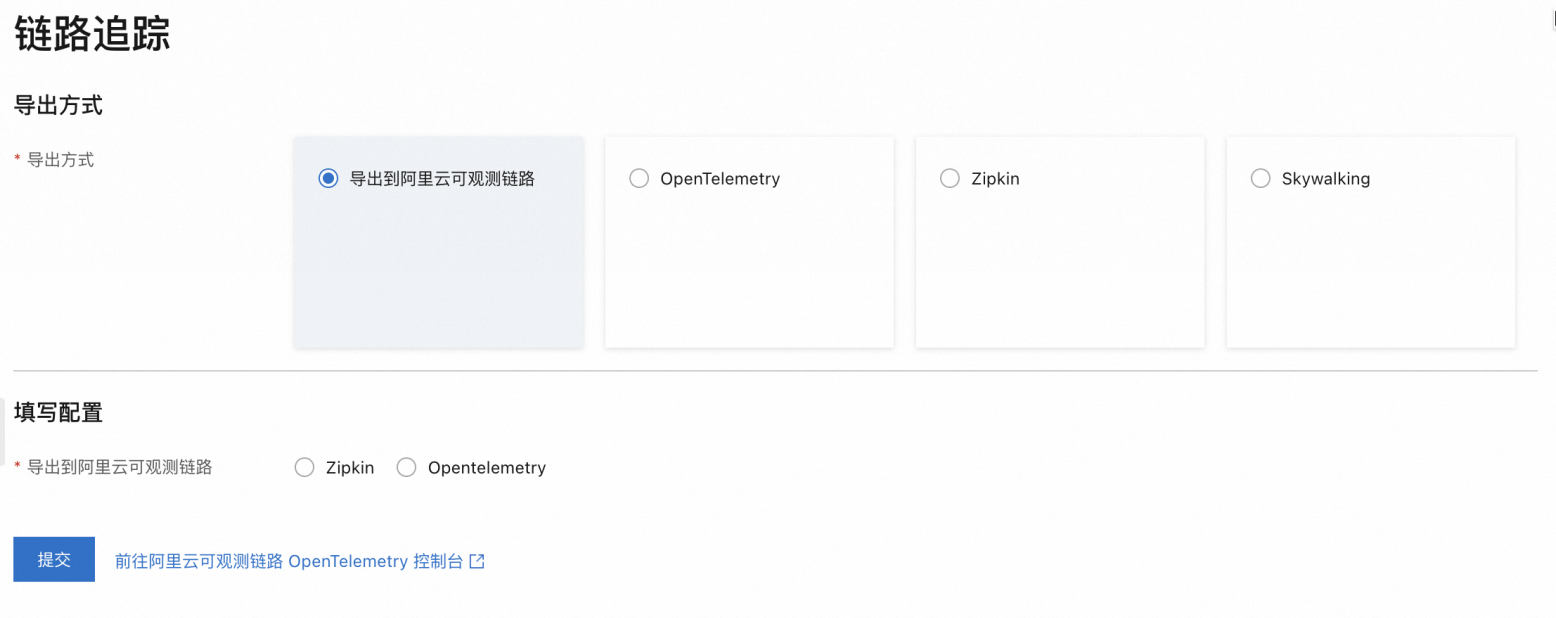

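On the Tracing page, select the export method that exports to Alibaba Cloud Managed Service for OpenTelemetry, select a reporting method such as Zipkin in the configuration, and then click Submit.

Click Go to the Managed Service for OpenTelemetry Console to view the trace information. For more information about tracing, see What is Managed Service for OpenTelemetry.

Note

If you no longer need this feature, click Disable Export on the Tracing page, and then click OK in the confirmation dialog box.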

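Export ASM trace data to a self-managed system

The supported methods for exporting trace data to a self-managed system differ by ASM instance version. Follow the section that matches the version of your instance.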

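Earlier than 1.18.0.124

ASM instance earlier than 1.17.2.28: Log on to the ASM console. On the Basic Information page of the target instance, click Feature Settings, select Enable Tracing, configure the settings as needed, and then click OK.

ASM instance 1.17.2.28 or later, and earlier than 1.18.0.124: For how to enable tracing, see Description of tracing settings.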

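1.18.0.124 or later, earlier than 1.22.6.89

In this version range, the console supports reporting to a self-managed system only over OpenTelemetry. Log on to the ASM console and configure the settings on the corresponding page of the target instance.

Configuration description

Configuration item | Description |
OpenTelemetry service domain name (full FQDN) | The domain name of the self-managed system's service. It must be a full FQDN. |
OpenTelemetry service port | The port of the self-managed system's service. |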

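1.22.6.89 or later

Log on to the ASM console and, on the corresponding page of the target instance, select your self-managed tracing system and configure it.

When you configure trace reporting to a self-managed system, make sure that the system is a service inside the mesh. If the self-managed system is outside the mesh, you can register it in the mesh by using a ServiceEntry. For details, see the description of the ServiceEntry CRD for services outside the cluster.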
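For a self-managed system that runs outside the mesh, the registration might look like the following. This is a minimal sketch that uses the Istio `ServiceEntry` API; the resource name, namespace, host `otel-collector.example.com`, and port 4317 are all hypothetical placeholders, and the file name is chosen only for illustration.

```shell
# Writes a sketch of a ServiceEntry that registers an external tracing backend.
cat > tracing-backend-serviceentry.yaml <<'EOF'
apiVersion: networking.istio.io/v1beta1
kind: ServiceEntry
metadata:
  name: external-tracing-backend     # hypothetical name
  namespace: istio-system            # assumed namespace
spec:
  hosts:
  - otel-collector.example.com       # hypothetical FQDN of the self-managed system
  location: MESH_EXTERNAL            # the service runs outside the mesh
  resolution: DNS                    # resolve the host through DNS
  ports:
  - number: 4317                     # assumed OTLP gRPC port
    name: grpc-otlp
    protocol: GRPC
EOF
```

After the resource is applied to the ASM instance, the host and port in it would be the values to enter as the service domain name and service port in the console. How the resource is applied (for example, with kubectl and the ASM instance's kubeconfig) is an assumption to verify against the ServiceEntry topic referenced above.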

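Configuration description

Report to OpenTelemetry

Configuration item | Sub-item | Description |
Service domain name (full FQDN) | | The domain name of the self-managed system's service. It must be a full FQDN. |
Service port | | The port of the self-managed system's service. |
Report over gRPC | Timeout | Optional. The request timeout for reporting trace data, in seconds. |
Report over gRPC | Request headers | Optional. The request headers to send when reporting trace data. |
Report over HTTP | Request path | The request path for reporting trace data. |
Report over HTTP | Timeout | Optional. The request timeout for reporting trace data, in seconds. |
Report over HTTP | Request headers | Optional. The request headers to send when reporting trace data. |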

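Report to Zipkin

Configuration item | Description |
Service domain name (full FQDN) | The domain name of the self-managed system's service. It must be a full FQDN. |
Service port | The port of the self-managed system's service. |
Request path | The request path for reporting trace data. |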

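Report to SkyWalking

Configuration item | Description |
Service domain name (full FQDN) | The domain name of the self-managed system's service. It must be a full FQDN. |
Service port | The port of the self-managed system's service. |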

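Verify trace data reporting

Deploy applications

Deploy the sample application.

Create a file named bookinfo.yaml with the following content.

Deploy the Bookinfo application to the data plane cluster.

kubectl --kubeconfig=${DATA_PLANE_KUBECONFIG} apply -f bookinfo.yaml

Deploy the sleep application.

Create a file named sleep.yaml with the following content.

Deploy the sleep application to the data plane cluster.

kubectl --kubeconfig=${DATA_PLANE_KUBECONFIG} apply -f sleep.yaml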

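Run the test

Run the following command to send 100 test requests from the sleep application to the productpage service.

kubectl exec -it deploy/sleep -- sh -c 'for i in $(seq 1 100); do curl -s productpage:9080/productpage > /dev/null; done'

View the reported data

The following example shows the result of reporting data to Managed Service for OpenTelemetry.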

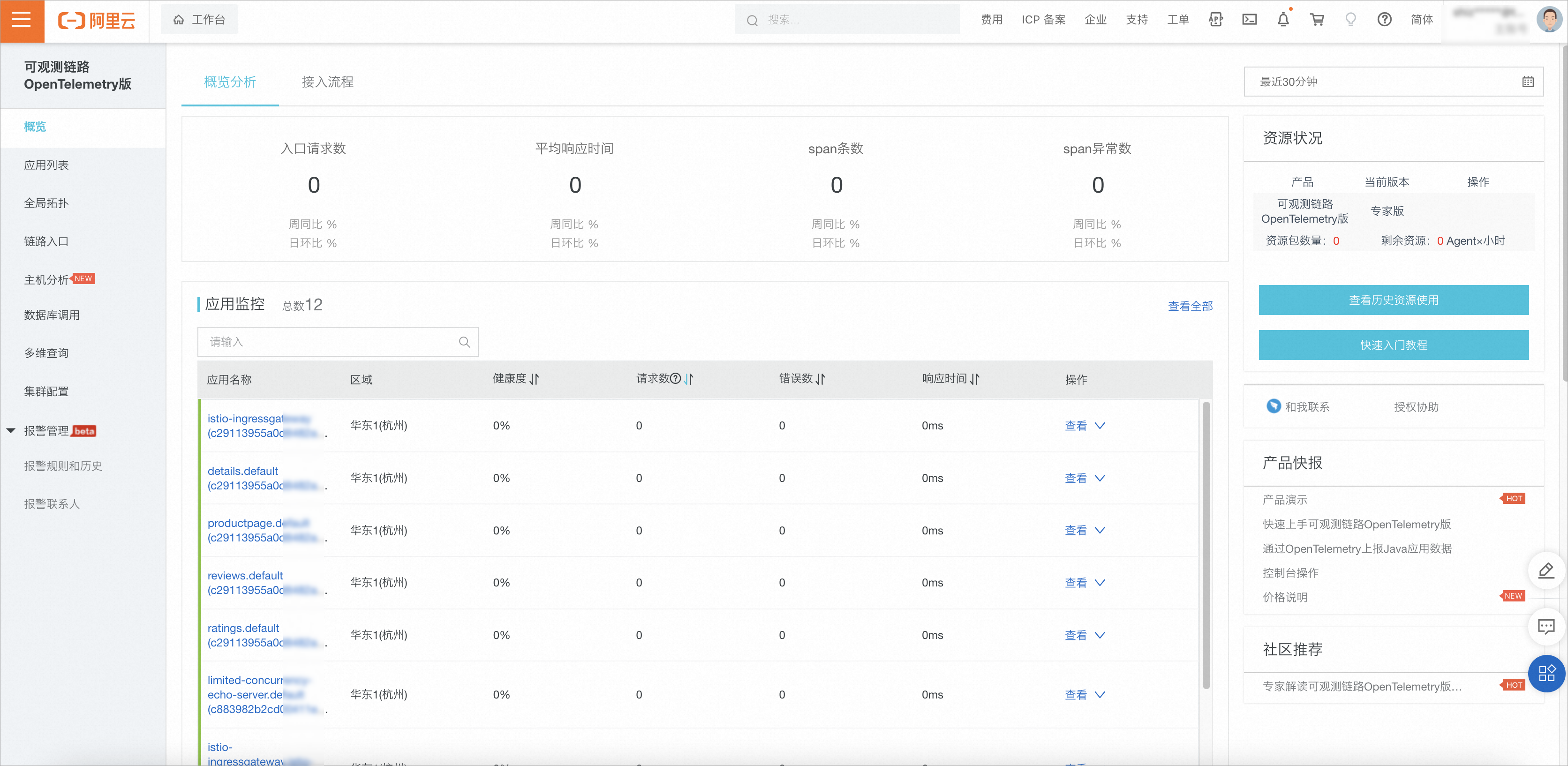

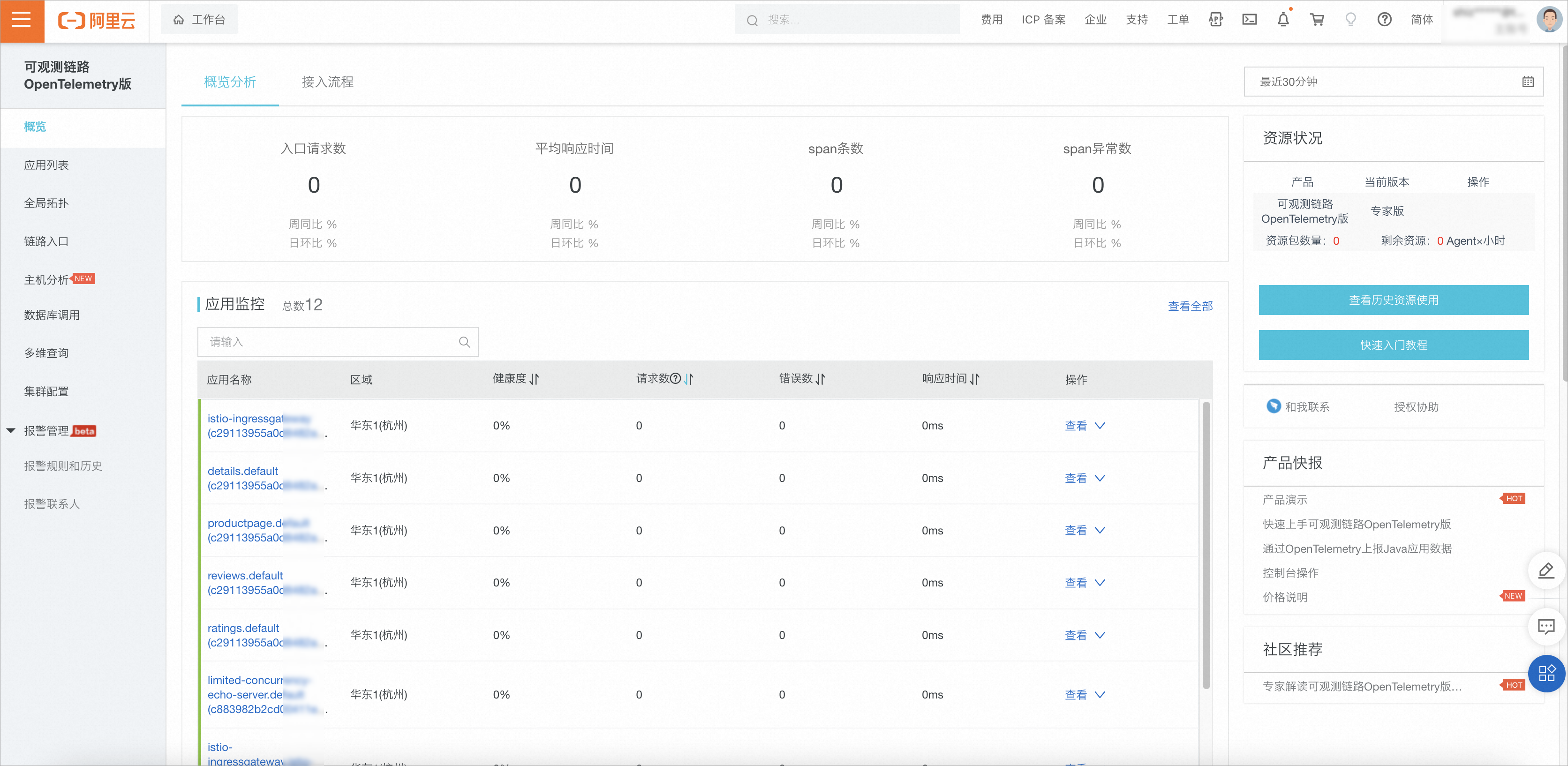

In the left-side navigation pane, click Applications. Data similar to the following figure is displayed.