Large language model (LLM) deployment

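EAS provides scenario-based deployment. You configure only a few parameters to deploy a popular open-source large language model (LLM) as a service in one click and use it for inference. This topic describes how to deploy and call an LLM service in EAS.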

Overview

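As large models such as ChatGPT and Qwen (Tongyi Qianwen) have been widely adopted, LLM inference has become one of the most popular workloads. EAS offers a convenient and efficient way to deploy LLMs and provides two deployment versions: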

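Open-source model one-click deployment: deploy many open-source models as services in one click, including DeepSeek-R1, DeepSeek-V3, QVQ-72B-Preview, QwQ-32B-Preview, Llama, Qwen, Marco, internlm3, Qwen2-VL, and AlphaFold2. Three deployment methods are supported: standard deployment, accelerated deployment (BladeLLM), and accelerated deployment (vLLM).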

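High-performance deployment: uses BladeLLM, an inference engine developed in-house by PAI, to serve LLMs with lower latency and higher throughput. It supports both open-source public models and custom models; choose this version if you need to deploy a custom model.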

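The two versions differ as follows:

| Difference | Open-source model one-click deployment | High-performance deployment |
|---|---|---|
| Model configuration | Open-source public models | Open-source public models and custom models |
| Acceleration framework | None (standard deployment), BladeLLM, or vLLM | BladeLLM |
| Invocation method | WebUI and API (accelerated deployments support API only) | API |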

This topic uses open-source model one-click deployment as an example. For high-performance deployment, see BladeLLM Quick Start.

Deploy an EAS service

Log on to the PAI console. Select the target region at the top of the page, select the target workspace on the right, and then click Enter EAS.

On the Elastic Algorithm Service (EAS) page, click Deploy Service. In the Scenario-based Model Deployment section, click LLM Deployment.

On the LLM Deployment page, configure the following key parameters.

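| Category | Parameter | Description |
|---|---|---|
| Basic information | Service name | A custom name for the model service. |
| | Version | Select Open-source model one-click deployment. For high-performance deployment, see BladeLLM Quick Start. |
| | Model type | Select a model type. |
| | Deployment method | Different model types may support different deployment methods: accelerated deployment (BladeLLM), accelerated deployment (vLLM), or standard deployment (no acceleration framework). You can see which methods a model type supports when you deploy the service. Accelerated deployments support API inference only. |
| Resource deployment | Resource type | Public resources are selected by default. To deploy the service on dedicated resources, select an EAS resource group or a resource quota. For how to purchase a resource group or create a resource quota, see Work with dedicated resource groups and Lingjun AI computing resource quotas. Note: Resource quotas are supported only in the China (Ulanqab) and Singapore regions. |
| | Deployment resources | When you use public resources, the system automatically recommends a suitable instance type after you select a model type. |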

Click Deploy.

Call the EAS service

The calling method depends on the deployment method you used. Choose the method that matches your deployment.

Standard deployment

Call the EAS service through the WebUI

In the Service Type column of the target service, click View Web App.

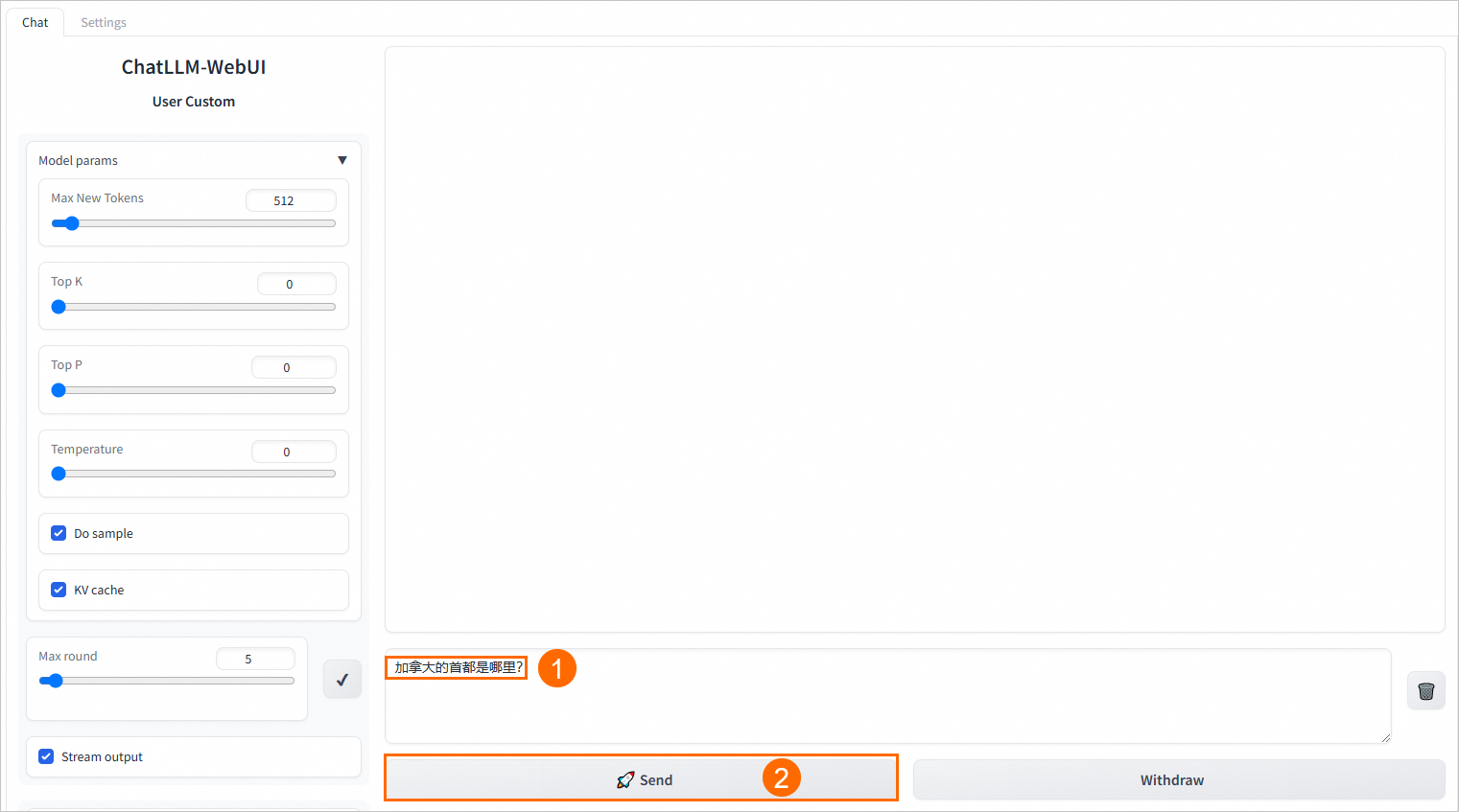

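On the WebUI page, verify model inference.

On the ChatLLM-WebUI page, enter a message in the text box, for example, What is the capital of Canada?, and click Send to start the conversation.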

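Call the EAS service through the API

Obtain the service endpoint and token.

Go to Elastic Algorithm Service (EAS): select a workspace, and then enter EAS.

Click the name of the target service to open the service details page.

In the Basic Information section, click View Endpoint Information. On the Public Endpoint tab, obtain the service token and endpoint.

Call the API to run model inference.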

Call the service over HTTP

Non-streaming calls

The client uses standard HTTP. From the command line, you can send either of the following two types of request:

Send a string request

```shell
curl $host -H "Authorization: $authorization" --data-binary @chatllm_data.txt -v
```

Where:

$authorization: replace with the service token.

$host: replace with the service endpoint.

chatllm_data.txt: a plain-text file that contains the question, for example, What is the capital of Canada?

Send a structured request

```shell
curl $host -H "Authorization: $authorization" -H "Content-Type: application/json" --data-binary @chatllm_data.json -v -H "Connection: close"
```

The chatllm_data.json file sets the inference parameters. Its format is as follows:

```json
{
    "max_new_tokens": 4096,
    "use_stream_chat": false,
    "prompt": "What is the capital of Canada?",
    "system_prompt": "Act like you are a knowledgeable assistant who can provide information on geography and related topics.",
    "history": [
        [
            "Can you tell me what's the capital of France?",
            "The capital of France is Paris."
        ]
    ],
    "temperature": 0.8,
    "top_k": 10,
    "top_p": 0.8,
    "do_sample": true,
    "use_cache": true
}
```

The parameters are described below. Add or remove them as needed.

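| Parameter | Description | Default |
|---|---|---|
| max_new_tokens | Maximum number of tokens to generate. | 2048 |
| use_stream_chat | Whether to return the output as a stream. | true |
| prompt | The user prompt. | "" |
| system_prompt | The system prompt. | "" |
| history | The conversation history, of type List[Tuple[str, str]]. | [()] |
| temperature | Controls the randomness of the output: higher values give more random output, and 0 gives deterministic output. Float in the range 0 to 1. | 0.95 |
| top_k | Number of highest-probability candidate tokens to sample from. | 30 |
| top_p | Cumulative-probability threshold: candidates are drawn from the smallest set of tokens whose probabilities add up to top_p. Float in the range 0 to 1. | 0.8 |
| do_sample | Whether to enable output sampling. | true |
| use_cache | Whether to enable the KV cache. | true |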
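The two request types above can also be built from Python without extra dependencies. The following is a minimal sketch using only `urllib.request`; it assumes the plain-text and JSON request formats shown above, sends only the fields you pass (so the server-side defaults in the table apply to the rest), rejects field names that are not in the table, and checks that `temperature` and `top_p` fall in the documented 0 to 1 range. The helper names `build_text_request` and `build_json_request` are hypothetical, and the requests are only constructed here, not sent.

```python
import json
import urllib.request

# Request fields documented in the parameter table above.
KNOWN_FIELDS = {
    "max_new_tokens", "use_stream_chat", "prompt", "system_prompt",
    "history", "temperature", "top_k", "top_p", "do_sample", "use_cache",
}


def build_text_request(host: str, token: str, question: str) -> urllib.request.Request:
    """String request: the body is the raw question text."""
    return urllib.request.Request(
        host,
        data=question.encode("utf-8"),
        headers={"Authorization": token},
        method="POST",
    )


def build_json_request(host: str, token: str, **fields) -> urllib.request.Request:
    """Structured request: only the given fields are sent; omitted
    fields fall back to the server-side defaults."""
    unknown = set(fields) - KNOWN_FIELDS
    if unknown:
        raise ValueError(f"unknown fields: {sorted(unknown)}")
    for name in ("temperature", "top_p"):
        if name in fields and not 0 <= fields[name] <= 1:
            raise ValueError(f"{name} must be between 0 and 1")
    body = json.dumps(fields, ensure_ascii=False).encode("utf-8")
    return urllib.request.Request(
        host,
        data=body,
        headers={"Authorization": token, "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_json_request(
        "http://example.com/", "<service token>",
        prompt="What is the capital of Canada?",
        temperature=0.8, top_p=0.8, use_stream_chat=False,
    )
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To send it against a real endpoint: urllib.request.urlopen(req).read()
```

Omitting unset fields, rather than copying the defaults into the client, keeps the client correct if the service defaults change.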

You can build your own client with the Python requests library, as in the following example. Use the --prompt command-line argument to specify the request content, for example: python xxx.py --prompt "What is the capital of Canada?".

```python
import argparse
import json
from typing import Tuple

import requests


def post_http_request(prompt: str,
                      system_prompt: str,
                      history: list,
                      host: str,
                      authorization: str,
                      max_new_tokens: int = 2048,
                      temperature: float = 0.95,
                      top_k: int = 1,
                      top_p: float = 0.8,
                      langchain: bool = False,
                      use_stream_chat: bool = False) -> requests.Response:
    headers = {
        "User-Agent": "Test Client",
        "Authorization": f"{authorization}"
    }
    # If no history is supplied, this sample injects one example turn.
    if not history:
        history = [
            (
                "San Francisco is a",
                "city located in the state of California in the United States. "
                "It is known for its iconic landmarks, such as the Golden Gate Bridge "
                "and Alcatraz Island, as well as its vibrant culture, diverse population, "
                "and tech industry. The city is also home to many famous companies and "
                "startups, including Google, Apple, and Twitter."
            )
        ]
    pload = {
        "prompt": prompt,
        "system_prompt": system_prompt,
        "top_k": top_k,
        "top_p": top_p,
        "temperature": temperature,
        "max_new_tokens": max_new_tokens,
        "use_stream_chat": use_stream_chat,
        "history": history
    }
    if langchain:
        pload["langchain"] = langchain
    response = requests.post(host, headers=headers, json=pload, stream=use_stream_chat)
    return response


# The server returns a JSON response that contains the inference result and the conversation history.
def get_response(response: requests.Response) -> Tuple[str, list]:
    response.raise_for_status()
    data = json.loads(response.content)
    output = data["response"]
    history = data["history"]
    return output, history


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--top-k", type=int, default=4)
    parser.add_argument("--top-p", type=float, default=0.8)
    parser.add_argument("--max-new-tokens", type=int, default=2048)
    parser.add_argument("--temperature", type=float, default=0.95)
    parser.add_argument("--prompt", type=str, default="How can I get there?")
    parser.add_argument("--langchain", action="store_true")
    args = parser.parse_args()

    prompt = args.prompt
    top_k = args.top_k
    top_p = args.top_p
    use_stream_chat = False
    temperature = args.temperature
    langchain = args.langchain
    max_new_tokens = args.max_new_tokens

    host = "<EAS service public endpoint>"
    authorization = "<EAS service public token>"

    print(f"Prompt: {prompt!r}\n", flush=True)
    # The system prompt of the language model can be set in the client request.
    system_prompt = "Act like you are a programmer with 5+ years of experience."

    # The client keeps the current user's conversation history and sends it with each
    # request to enable multi-round conversations. Normally you reuse the history
    # returned by the previous round; its format is List[Tuple[str, str]].
    history = []
    response = post_http_request(
        prompt, system_prompt, history,
        host, authorization,
        max_new_tokens, temperature, top_k, top_p,
        langchain=langchain, use_stream_chat=use_stream_chat)
    output, history = get_response(response)
    print(f" --- output: {output} \n --- history: {history}", flush=True)
```

Where:

host: set to the service endpoint.

authorization: set to the service token.
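The comments in the sample above note that the client keeps the conversation history and normally reuses the `history` returned by the previous round. A minimal sketch of that loop follows. `send` stands in for a wrapper around `post_http_request` and `get_response`; here it is replaced by a hypothetical `fake_send` so that the loop runs offline, and `run_conversation` is likewise an illustrative name, not part of the service.

```python
from typing import Callable, List, Tuple

History = List[Tuple[str, str]]
# send(prompt, history) -> (output, new_history), for example a wrapper around
# post_http_request(...) followed by get_response(...).
SendFn = Callable[[str, History], Tuple[str, History]]


def run_conversation(send: SendFn, prompts: List[str]) -> Tuple[List[str], History]:
    """Send prompts one after another, feeding each round's returned
    history into the next request so the service sees the whole dialogue."""
    history: History = []
    outputs: List[str] = []
    for prompt in prompts:
        output, history = send(prompt, history)
        outputs.append(output)
    return outputs, history


def fake_send(prompt: str, history: History) -> Tuple[str, History]:
    # Hypothetical stand-in for the EAS service: echoes the prompt and
    # reports how many earlier turns it received.
    output = f"echo({len(history)}): {prompt}"
    return output, history + [(prompt, output)]


if __name__ == "__main__":
    outputs, history = run_conversation(fake_send, ["Hi", "How can I get there?"])
    print(outputs)
    print(history)
```

With the sample client above, an empty history makes `post_http_request` inject its example San Francisco turn, so the first real round is not sent with an empty history. The history returned by the service arrives as JSON lists rather than tuples.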

Streaming calls

Streaming calls use HTTP SSE; all other settings are the same as for non-streaming calls. Reference code follows. Use the --prompt command-line argument to specify the request content, for example: python xxx.py --prompt "What is the capital of Canada?".

```python
import argparse
import json
from typing import Iterator, Tuple

import requests


def post_http_request(prompt: str,
                      system_prompt: str,
                      history: list,
                      host: str,
                      authorization: str,
                      max_new_tokens: int = 2048,
                      temperature: float = 0.95,
                      top_k: int = 1,
                      top_p: float = 0.8,
                      langchain: bool = False,
                      use_stream_chat: bool = False) -> requests.Response:
    headers = {
        "User-Agent": "Test Client",
        "Authorization": f"{authorization}"
    }
    # If no history is supplied, this sample injects one example turn.
    if not history:
        history = [
            (
                "San Francisco is a",
                "city located in the state of California in the United States. "
                "It is known for its iconic landmarks, such as the Golden Gate Bridge "
                "and Alcatraz Island, as well as its vibrant culture, diverse population, "
                "and tech industry. The city is also home to many famous companies and "
                "startups, including Google, Apple, and Twitter."
            )
        ]
    pload = {
        "prompt": prompt,
        "system_prompt": system_prompt,
        "top_k": top_k,
        "top_p": top_p,
        "temperature": temperature,
        "max_new_tokens": max_new_tokens,
        "use_stream_chat": use_stream_chat,
        "history": history
    }
    if langchain:
        pload["langchain"] = langchain
    # stream=True lets requests hand out the body as it arrives.
    response = requests.post(host, headers=headers, json=pload, stream=use_stream_chat)
    return response


def get_streaming_response(response: requests.Response) -> Iterator[Tuple[str, list]]:
    # Each streamed message is a JSON object terminated by a NUL byte.
    for chunk in response.iter_lines(chunk_size=8192,
                                      decode_unicode=False,
                                      delimiter=b"\0"):
        if chunk:
            data = json.loads(chunk.decode("utf-8"))
            output = data["response"]
            history = data["history"]
            yield output, history


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--top-k", type=int, default=4)
    parser.add_argument("--top-p", type=float, default=0.8)
    parser.add_argument("--max-new-tokens", type=int, default=2048)
    parser.add_argument("--temperature", type=float, default=0.95)
    parser.add_argument("--prompt", type=str, default="How can I get there?")
    parser.add_argument("--langchain", action="store_true")
    args = parser.parse_args()

    prompt = args.prompt
    top_k = args.top_k
    top_p = args.top_p
    use_stream_chat = True
    temperature = args.temperature
    langchain = args.langchain
    max_new_tokens = args.max_new_tokens

    host = "<EAS service endpoint>"
    authorization = "<EAS service token>"

    print(f"Prompt: {prompt!r}\n", flush=True)
    system_prompt = "Act like you are a programmer with 5+ years of experience."
    history = []
    response = post_http_request(
        prompt, system_prompt, history,
        host, authorization,
        max_new_tokens, temperature, top_k, top_p,
        langchain=langchain, use_stream_chat=use_stream_chat)

    for h, history in get_streaming_response(response):
        print(f" --- stream line: {h} \n --- history: {history}", flush=True)
```

Where:

host: set to the service endpoint.

authorization: set to the service token.
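The streaming sample splits the response body on the NUL byte (`delimiter=b"\0"`) and parses each piece as a JSON object with `response` and `history` fields. If you read the body in raw chunks yourself, for example without `requests`, a single message can be split across two reads. The following minimal sketch buffers partial messages; it assumes the NUL-delimited framing used by the sample above and is not an official parser. The name `iter_frames` is hypothetical.

```python
import json
from typing import Iterable, Iterator, Tuple


def iter_frames(chunks: Iterable[bytes]) -> Iterator[Tuple[str, list]]:
    """Yield (response, history) from NUL-delimited JSON messages,
    even when a message is split across several chunks."""
    buffer = b""
    for chunk in chunks:
        buffer += chunk
        # Splitting on bytes before decoding is safe: b"\0" never occurs
        # inside a multi-byte UTF-8 sequence. The last piece is incomplete.
        *frames, buffer = buffer.split(b"\0")
        for frame in frames:
            if frame.strip():
                data = json.loads(frame.decode("utf-8"))
                yield data["response"], data["history"]
    # A final message may arrive without a trailing delimiter.
    if buffer.strip():
        data = json.loads(buffer.decode("utf-8"))
        yield data["response"], data["history"]


if __name__ == "__main__":
    # Hand-written chunks for illustration, cut at arbitrary points.
    stream = [b'{"response": "Ott', b'awa", "history": []}\0{"respo',
              b'nse": "Ottawa.", "history": []}\0']
    for text, _ in iter_frames(stream):
        print(text)
```

With `requests`, the chunks could come from `response.iter_content(chunk_size=8192)` on a response obtained with `stream=True`.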

Call the service over WebSocket

To maintain the user's conversation state more easily, you can also keep a WebSocket connection open to the service for single-round or multi-round conversations. Sample code:

```python
import json
import os
import struct
import time
from multiprocessing import Process

import websocket  # provided by the websocket-client package

questions = 0  # answers completed on this connection (multi-round test)


def on_message_1(ws, message):
    if message == "<EOS>":
        print('pid-{} timestamp-({}) receives end message: {}'.format(os.getpid(), time.time(), message), flush=True)
        ws.send(struct.pack('!H', 1000), websocket.ABNF.OPCODE_CLOSE)
    else:
        print("{}".format(time.time()))
        print('pid-{} timestamp-({}) --- message received: {}'.format(os.getpid(), time.time(), message), flush=True)


def on_message_2(ws, message):
    global questions
    print('pid-{} --- message received: {}'.format(os.getpid(), message))
    # Each answer ends with <EOS>; close the connection after all 5 questions are answered.
    if message == "<EOS>":
        questions = questions + 1
        if questions == 5:
            ws.send(struct.pack('!H', 1000), websocket.ABNF.OPCODE_CLOSE)


def on_message_3(ws, message):
    print('pid-{} --- message received: {}'.format(os.getpid(), message))
    # Non-streaming request: the first message is the whole answer, so close the connection.
    ws.send(struct.pack('!H', 1000), websocket.ABNF.OPCODE_CLOSE)


def on_error(ws, error):
    print('error happened: ', str(error))


def on_close(ws, close_status_code, close_msg):
    print("### closed ###", close_status_code, close_msg)


def on_pong(ws, pong):
    print('pong:', pong)


# Streaming chat validation test.
def on_open_1(ws):
    print('Opening Websocket connection to the server ... ')
    params_dict = {}
    params_dict['prompt'] = """Show me a golang code example: """
    params_dict['temperature'] = 0.9
    params_dict['top_p'] = 0.1
    params_dict['top_k'] = 30
    params_dict['max_new_tokens'] = 2048
    params_dict['do_sample'] = True
    raw_req = json.dumps(params_dict, ensure_ascii=False).encode('utf8')
    ws.send(raw_req)


# Multi-round conversation validation test.
def on_open_2(ws):
    print('Opening Websocket connection to the server ... ')
    params_dict = {"max_new_tokens": 6144}
    params_dict['temperature'] = 0.9
    params_dict['top_p'] = 0.1
    params_dict['top_k'] = 30
    params_dict['use_stream_chat'] = True
    params_dict['system_prompt'] = "Act like you are a programmer with 5+ years of experience."
    # Send the questions one after another on the same connection.
    for prompt in ["Hello!",
                   "Please write a sorting algorithm in Python.",
                   "Please rewrite it in Java.",
                   "Please introduce yourself.",
                   "Please summarize the conversation above."]:
        params_dict['prompt'] = prompt
        raw_req = json.dumps(params_dict, ensure_ascii=False).encode('utf8')
        ws.send(raw_req)


# LangChain validation test.
def on_open_3(ws):
    print('Opening Websocket connection to the server ... ')
    params_dict = {}
    params_dict['prompt'] = """Can you tell me what's the MNN?"""
    params_dict['temperature'] = 0.9
    params_dict['top_p'] = 0.1
    params_dict['top_k'] = 30
    params_dict['max_new_tokens'] = 2048
    params_dict['use_stream_chat'] = False
    params_dict['langchain'] = True
    raw_req = json.dumps(params_dict, ensure_ascii=False).encode('utf8')
    ws.send(raw_req)


authorization = "<EAS service token>"
host = "ws://" + "<EAS service endpoint without the http:// prefix>"


def single_call(on_open_func, on_message_func, on_close_func=on_close):
    ws = websocket.WebSocketApp(
        host,
        on_open=on_open_func,
        on_message=on_message_func,
        on_error=on_error,
        on_pong=on_pong,
        on_close=on_close_func,
        header=['Authorization: ' + authorization],
    )
    # Send a ping every 2 seconds to keep the long-lived connection open.
    ws.run_forever(ping_interval=2)


if __name__ == "__main__":
    for i in range(5):
        p1 = Process(target=single_call, args=(on_open_1, on_message_1))
        p2 = Process(target=single_call, args=(on_open_2, on_message_2))
        p3 = Process(target=single_call, args=(on_open_3, on_message_3))
        p1.start()
        p2.start()
        p3.start()
        p1.join()
        p2.join()
        p3.join()
```

Where:

authorization: set to the service token.

host: set to the service endpoint, replacing the leading http of the endpoint with ws.

use_stream_chat: request parameter that controls whether the output is streamed. The default value is True, which means the server returns streaming data.

To implement multi-round conversations, follow the on_open_2 function in the sample code above.
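Following the pattern of `on_open_2`, a multi-round conversation sends one JSON message per question on the same connection and closes the connection once it has received as many `<EOS>` markers as it sent questions. The following minimal sketch separates that bookkeeping from the network code so it can be checked offline. `build_round_frames` and `EosCounter` are hypothetical helpers, not part of the service API.

```python
import json
from typing import Dict, List


def build_round_frames(system_prompt: str, prompts: List[str],
                       params: Dict) -> List[bytes]:
    """Build one UTF-8 JSON message per question, all sharing the same
    system prompt and sampling parameters (as on_open_2 does)."""
    frames = []
    for prompt in prompts:
        # dict(...) copies params, so the caller's dictionary is not modified.
        request = dict(params, system_prompt=system_prompt, prompt=prompt)
        frames.append(json.dumps(request, ensure_ascii=False).encode("utf-8"))
    return frames


class EosCounter:
    """Count '<EOS>' markers; feed() returns True once every question
    has been answered, which is when on_message_2 closes the socket."""

    def __init__(self, expected: int) -> None:
        self.expected = expected
        self.seen = 0

    def feed(self, message: str) -> bool:
        if message == "<EOS>":
            self.seen += 1
        return self.seen >= self.expected
```

In `on_open`, each element of `build_round_frames(...)` would be passed to `ws.send`; in `on_message`, the connection would be closed when `feed(message)` returns True.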

Accelerated deployment: BladeLLM

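Only API calls are supported. To call the service:

View the service endpoint and token.

On the Elastic Algorithm Service (EAS) page, click Invocation Information in the Service Type column of the target service.

In the Invocation Information dialog box, view the service endpoint and token.

Run the following command in a terminal to call the service and receive the generated text as a stream.

```shell
# Call the EAS service
curl -X POST \
    -H "Content-Type: application/json" \
    -H "Authorization: AUTH_TOKEN_FOR_EAS" \
    -d '{"prompt":"What is the capital of Canada?", "stream":true}' \
    <service_url>/v1/completions
```

Where:

Authorization: replace AUTH_TOKEN_FOR_EAS with the service token obtained in the previous step.

<service_url>: replace with the service endpoint obtained in the previous step.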

Sample output:

```
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"","index":0,"logprobs":null,"text":" The"}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":1,"total_tokens":8},"error_info":null}
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"","index":0,"logprobs":null,"text":" capital"}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":2,"total_tokens":9},"error_info":null}
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"","index":0,"logprobs":null,"text":" of"}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":3,"total_tokens":10},"error_info":null}
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"","index":0,"logprobs":null,"text":" Canada"}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":4,"total_tokens":11},"error_info":null}
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"","index":0,"logprobs":null,"text":" is"}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":5,"total_tokens":12},"error_info":null}
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"","index":0,"logprobs":null,"text":" Ottawa"}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":6,"total_tokens":13},"error_info":null}
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"","index":0,"logprobs":null,"text":"."}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":7,"total_tokens":14},"error_info":null}
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"stop","index":0,"logprobs":null,"text":""}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":8,"total_tokens":15},"error_info":null}
data: [DONE]
```
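Each event in the output above is a `data:` line that carries a JSON object, and the stream ends with `data: [DONE]`. The following minimal sketch joins the `text` fields of the first choice into the full answer. It assumes one event per line as in the sample, and it treats a non-null `error_info` as a failure; that interpretation of `error_info` is an assumption, since the sample only shows it as `null`. The function name `join_completion_stream` is hypothetical.

```python
import json
from typing import Iterable, Union


def join_completion_stream(lines: Iterable[Union[str, bytes]]) -> str:
    """Concatenate choices[0].text from SSE 'data:' lines until 'data: [DONE]'."""
    parts = []
    for line in lines:
        if isinstance(line, bytes):
            line = line.decode("utf-8")
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank lines and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        event = json.loads(payload)
        # Assumption: a non-null error_info signals a server-side error.
        if event.get("error_info"):
            raise RuntimeError(f"service error: {event['error_info']}")
        choices = event.get("choices") or []
        if choices:
            parts.append(choices[0].get("text") or "")
    return "".join(parts)
```

With `requests`, the lines could come from `response.iter_lines()` on a `requests.post(..., stream=True)` response; byte lines are decoded as UTF-8 by the function.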

Accelerated deployment: vLLM

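Only API calls are supported. To call the service:

View the service endpoint and token.

On the Elastic Algorithm Service (EAS) page, click Invocation Information in the Service Type column of the target service.

In the Invocation Information dialog box, view the service endpoint and token.

Run the following code in a terminal to call the service.

Python

```python
from openai import OpenAI

##### API configuration #####
openai_api_key = "<EAS API KEY>"
openai_api_base = "<EAS API Endpoint>/v1"

client = OpenAI(
    api_key=openai_api_key,
    base_url=openai_api_base,
)

# Use the first model reported by the service.
models = client.models.list()
model = models.data[0].id
print(model)


def main():
    stream = True

    chat_completion = client.chat.completions.create(
        messages=[
            {
                "role": "user",
                "content": [
                    {
                        "type": "text",
                        "text": "What is the capital of Canada?",
                    }
                ],
            }
        ],
        model=model,
        max_completion_tokens=2048,
        stream=stream,
    )

    if stream:
        for chunk in chat_completion:
            # Skip chunks without choices and deltas whose content is None.
            if chunk.choices and chunk.choices[0].delta.content:
                print(chunk.choices[0].delta.content, end="")
        print()
    else:
        result = chat_completion.choices[0].message.content
        print(result)


if __name__ == "__main__":
    main()
```

Where:

<EAS API KEY>: replace with the service token you obtained.

<EAS API Endpoint>: replace with the service endpoint you obtained.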
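In OpenAI-compatible streams, `delta.content` can be `None`, for example in a chunk that only carries the finish reason, and a server may send a trailing chunk with an empty `choices` list when usage reporting is enabled; printing such values directly would write `None` or raise an `IndexError`. The following minimal sketch applies the same guarded accumulation to raw JSON chunks, as they would appear in the `data:` lines of the HTTP stream. The function name `collect_stream_text` is hypothetical, and the chunk dictionaries in the test are hand-written for illustration.

```python
from typing import Iterable, Mapping


def collect_stream_text(chunks: Iterable[Mapping]) -> str:
    """Join choices[0].delta.content from chat.completion.chunk objects,
    skipping chunks with no choices and deltas whose content is None."""
    parts = []
    for chunk in chunks:
        choices = chunk.get("choices") or []
        if not choices:
            continue  # for example, a trailing usage-only chunk
        content = (choices[0].get("delta") or {}).get("content")
        if content:
            parts.append(content)
    return "".join(parts)
```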

Command line

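```shell
curl -X POST <service_url>/v1/chat/completions \
    -H "Content-Type: application/json" \
    -H "Authorization: <your-token>" \
    -d '{
        "model": "Qwen2.5-7B-Instruct",
        "messages": [
            {
                "role": "system",
                "content": [{"type": "text", "text": "You are a helpful and harmless assistant."}]
            },
            {
                "role": "user",
                "content": "What is the capital of Canada?"
            }
        ]
    }'
```

Where:

<service_url>: replace with the service endpoint you obtained.

<your-token>: replace with the service token you obtained.

model: replace Qwen2.5-7B-Instruct with the name of the model your service deploys (the Python example obtains it from client.models.list()).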
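Without `"stream": true`, the request above returns a single JSON object in the OpenAI chat-completions format, with the answer in `choices[0].message.content`. The following minimal sketch reads it in Python and also returns `finish_reason`, where `length` means the output was cut off by the token limit. The function name `extract_answer` is hypothetical, and the response body in the test is hand-written for illustration, not captured from the service.

```python
import json
from typing import Optional, Tuple


def extract_answer(body: str) -> Tuple[str, Optional[str]]:
    """Return (assistant text, finish_reason) from a non-streaming
    OpenAI-compatible chat completion response body."""
    data = json.loads(body)
    if not data.get("choices"):
        # Error responses carry no choices (typically an "error" object instead).
        raise RuntimeError(f"unexpected response: {data}")
    choice = data["choices"][0]
    content = (choice.get("message") or {}).get("content") or ""
    return content, choice.get("finish_reason")
```

For example, save the curl output with `-o response.json` and pass the file contents to `extract_answer`.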

Related documents

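You can use EAS to deploy, in one click, a chatbot service that combines an LLM with retrieval-augmented generation (RAG) and retrieves information from a local knowledge base. After you integrate your LangChain business data in the WebUI, you can verify model inference through the WebUI or the API. For details, see RAG-based LLM chatbot.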