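This solution brings together high-quality open-source algorithms for image restoration, denoising, colorization, and related tasks, and uses Stable Diffusion WebUI for interactive image restoration. You can adjust parameters and combine different processing methods as needed to get the best restoration results. This topic describes how to perform interactive image restoration in Alibaba Cloud DSW.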

Prepare the environment and resources

Create a workspace. For more information, see Create a workspace.

Create a DSW instance with the following key parameters. For more information, see Create and manage DSW instances.

Instance type: ecs.gn7i-c8g1.2xlarge.

Image: from the official images, select stable-diffusion-webui-env:pytorch1.13-gpu-py310-cu117-ubuntu22.04.

Step 1: Open the tutorial file in DSW

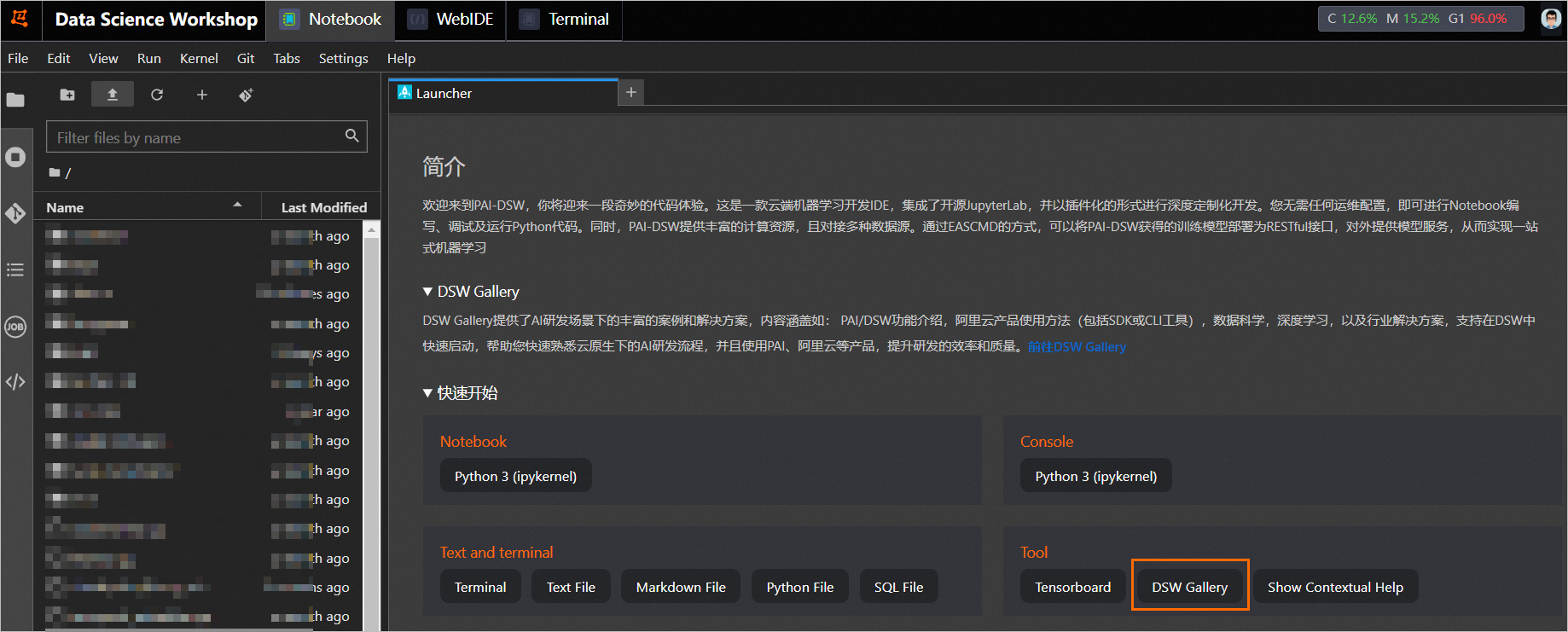

Go to the PAI-DSW development environment.

Log on to the PAI console.

In the upper-left corner of the page, select the region where the DSW instance resides.

In the left-side navigation pane, click Workspaces. On the Workspaces page, click the name of the default workspace to open it.

In the left-side navigation pane, choose Model Development and Training > Interactive Modeling (DSW).

Find the instance that you want to open and click Open in the Actions column to enter the PAI-DSW development environment.

On the Launcher page of the Notebook tab, click DSW Gallery under Tool in the Quick Start section to open the DSW Gallery page.

On the DSW Gallery page, search for the 用AI重燃亚运经典 ("Reignite Asian Games classics with AI") tutorial, and click Open in DSW on the tutorial card.

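The resources and tutorial files that this tutorial needs are then downloaded to the DSW instance automatically. The tutorial file opens automatically when the download completes.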

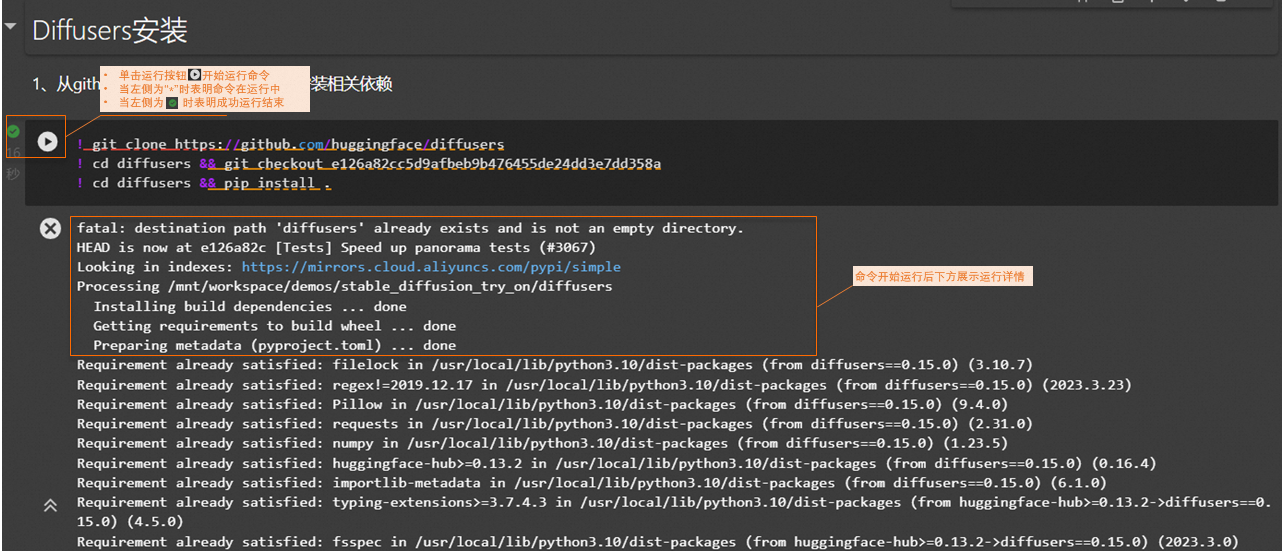

Step 2: Run the tutorial file

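The tutorial file image_restoration.ipynb contains the tutorial text, and you can run the commands for each step directly in it. Run the commands of the next step only after the current step finishes successfully. The steps in this tutorial and the result of each step are as follows.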

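Import the photos to be restored. Run the commands in the data preparation section in order. They download the provided archival Asian Games photos and extract them to the input folder.

Install the tools. Sample output: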

```text
Get:1 http://mirrors.cloud.aliyuncs.com/ubuntu jammy InRelease [270 kB]
Get:2 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-updates InRelease [119 kB]
Get:3 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-backports InRelease [109 kB]
Get:4 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-security InRelease [110 kB]
Get:5 http://mirrors.cloud.aliyuncs.com/ubuntu jammy/multiverse Sources [361 kB]
Get:6 http://mirrors.cloud.aliyuncs.com/ubuntu jammy/main Sources [1668 kB]
Get:7 http://mirrors.cloud.aliyuncs.com/ubuntu jammy/restricted Sources [28.2 kB]
Get:8 http://mirrors.cloud.aliyuncs.com/ubuntu jammy/universe Sources [22.0 MB]
Get:9 http://mirrors.cloud.aliyuncs.com/ubuntu jammy/universe amd64 Packages [17.5 MB]
Get:10 http://mirrors.cloud.aliyuncs.com/ubuntu jammy/multiverse amd64 Packages [266 kB]
Get:11 http://mirrors.cloud.aliyuncs.com/ubuntu jammy/restricted amd64 Packages [164 kB]
Get:12 http://mirrors.cloud.aliyuncs.com/ubuntu jammy/main amd64 Packages [1792 kB]
Get:13 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-updates/multiverse Sources [21.0 kB]
Get:14 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-updates/universe Sources [347 kB]
Get:15 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-updates/main Sources [531 kB]
Get:16 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-updates/restricted Sources [56.3 kB]
Get:17 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-updates/restricted amd64 Packages [1015 kB]
Get:18 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-updates/main amd64 Packages [1185 kB]
Get:19 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-updates/universe amd64 Packages [1251 kB]
Get:20 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-updates/multiverse amd64 Packages [49.8 kB]
Get:21 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-backports/main Sources [9392 B]
Get:22 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-backports/universe Sources [10.5 kB]
Get:23 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-backports/main amd64 Packages [50.3 kB]
Get:24 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-backports/universe amd64 Packages [28.1 kB]
Get:25 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-security/restricted Sources [53.4 kB]
Get:26 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-security/universe Sources [202 kB]
Get:27 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-security/multiverse Sources [11.3 kB]
Get:28 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-security/main Sources [270 kB]
Get:29 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-security/main amd64 Packages [915 kB]
Get:30 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-security/multiverse amd64 Packages [44.0 kB]
Get:31 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-security/restricted amd64 Packages [995 kB]
Get:32 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-security/universe amd64 Packages [990 kB]
Fetched 52.4 MB in 2s (26.0 MB/s)
Reading package lists... Done
Reading package lists... Done
Building dependency tree... Done
Reading state information... Done
Suggested packages:
 zip
The following NEW packages will be installed:
 unzip
0 upgraded, 1 newly installed, 0 to remove and 99 not upgraded.
Need to get 174 kB of archives.
After this operation, 385 kB of additional disk space will be used.
Get:1 http://mirrors.cloud.aliyuncs.com/ubuntu jammy-updates/main amd64 unzip amd64 6.0-26ubuntu3.1 [174 kB]
Fetched 174 kB in 0s (7160 kB/s)
debconf: delaying package configuration, since apt-utils is not installed
Selecting previously unselected package unzip.
(Reading database ... 20089 files and directories currently installed.)
Preparing to unpack .../unzip_6.0-26ubuntu3.1_amd64.deb ...
Unpacking unzip (6.0-26ubuntu3.1) ...
Setting up unzip (6.0-26ubuntu3.1) ...
```

Using the internal-network download link speeds up the download.
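The download URLs in this step use an OSS internal endpoint, which is the host ending in `-internal.aliyuncs.com`. Internal endpoints are reachable only from Alibaba Cloud resources in the same region as the bucket. The following minimal sketch shows how such an object URL is composed under virtual-hosted-style addressing.

A few assumptions apply:
- The helper name `oss_object_url` is hypothetical.
- It is also an assumption that the `pai-vision-data-hz2` bucket allows anonymous reads through its public endpoint.
- Instances in other regions may be served from different buckets.

```python
def oss_object_url(bucket: str, region: str, key: str, internal: bool = True) -> str:
    """Compose a virtual-hosted-style OSS object URL (hypothetical helper)."""
    # Internal endpoints have the form oss-<region>-internal.aliyuncs.com and are only
    # reachable from Alibaba Cloud resources in the same region as the bucket.
    if internal:
        endpoint = f"oss-{region}-internal.aliyuncs.com"
    else:
        endpoint = f"oss-{region}.aliyuncs.com"
    return f"http://{bucket}.{endpoint}/{key.lstrip('/')}"


url = oss_object_url("pai-vision-data-hz2", "cn-hangzhou",
                     "aigc-data/restoration/img/input.zip")
print(url)
# → http://pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com/aigc-data/restoration/img/input.zip
```

Pass `internal=False` only when you run the code outside the bucket's region, and only if the bucket actually permits public reads.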

Download the image data and extract it to the input directory. Sample output:

```text
http://pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com/aigc-data/restoration/img/input.zip cn-hangzhou
--2023-09-04 11:20:55-- http://pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com/aigc-data/restoration/img/input.zip
Resolving pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com (pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com)... 100.118.28.49, 100.118.28.45, 100.118.28.44, ...
Connecting to pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com (pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com)|100.118.28.49|:80... connected.
HTTP request sent, awaiting response... 200 OK
Length: 1657458 (1.6M) [application/zip]
Saving to: ‘input.zip’

input.zip 100%[===================>] 1.58M --.-KB/s in 0.09s

2023-09-04 11:20:56 (16.8 MB/s) - ‘input.zip’ saved [1657458/1657458]

Archive: input.zip
 creating: input/
 inflating: input/54.jpg
 inflating: input/20.jpg
 inflating: input/10.jpg
 inflating: input/50.jpg
 inflating: input/2.jpg
 inflating: input/40.jpg
 inflating: input/4.png
 inflating: input/70.jpg
 inflating: input/34.jpg
 inflating: input/64.jpg
```
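You may want to reproduce the data preparation outside the notebook. The following sketch downloads the archive with `urllib` and extracts it with `zipfile`, so this step does not need the separately installed `unzip` tool.

It makes these assumptions:
- The archive keeps its top-level `input/` folder, as the log above shows.
- File names should be sorted in natural order, so `2.jpg` comes before `10.jpg`.
- `download`, `extract_and_list`, and `natural_key` are hypothetical names, not functions from the tutorial notebook.

```python
import re
import shutil
import urllib.request
import zipfile
from pathlib import Path

IMAGE_EXTS = {".jpg", ".jpeg", ".png"}


def natural_key(name: str) -> list:
    # re.split with a capture group alternates text and digit runs, e.g.
    # "10.jpg" -> ["", "10", ".jpg"]; digit runs are compared as integers.
    return [int(tok) if tok.isdigit() else tok.lower() for tok in re.split(r"(\d+)", name)]


def download(url: str, dest: Path) -> Path:
    """Stream a URL to a local file (hypothetical helper)."""
    with urllib.request.urlopen(url) as resp, open(dest, "wb") as f:
        shutil.copyfileobj(resp, f)
    return Path(dest)


def extract_and_list(zip_path: Path, dest_root=".", folder: str = "input") -> list[str]:
    """Extract the archive and return its image file names in natural order."""
    with zipfile.ZipFile(zip_path) as zf:
        # The archive is assumed to already contain the top-level input/ folder.
        zf.extractall(dest_root)
    img_dir = Path(dest_root) / folder
    names = [p.name for p in img_dir.iterdir()
             if p.is_file() and p.suffix.lower() in IMAGE_EXTS]
    return sorted(names, key=natural_key)
```

In the notebook, a call might look like `extract_and_list(download(url, Path("input.zip")))`, where `url` is the input.zip address printed above.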

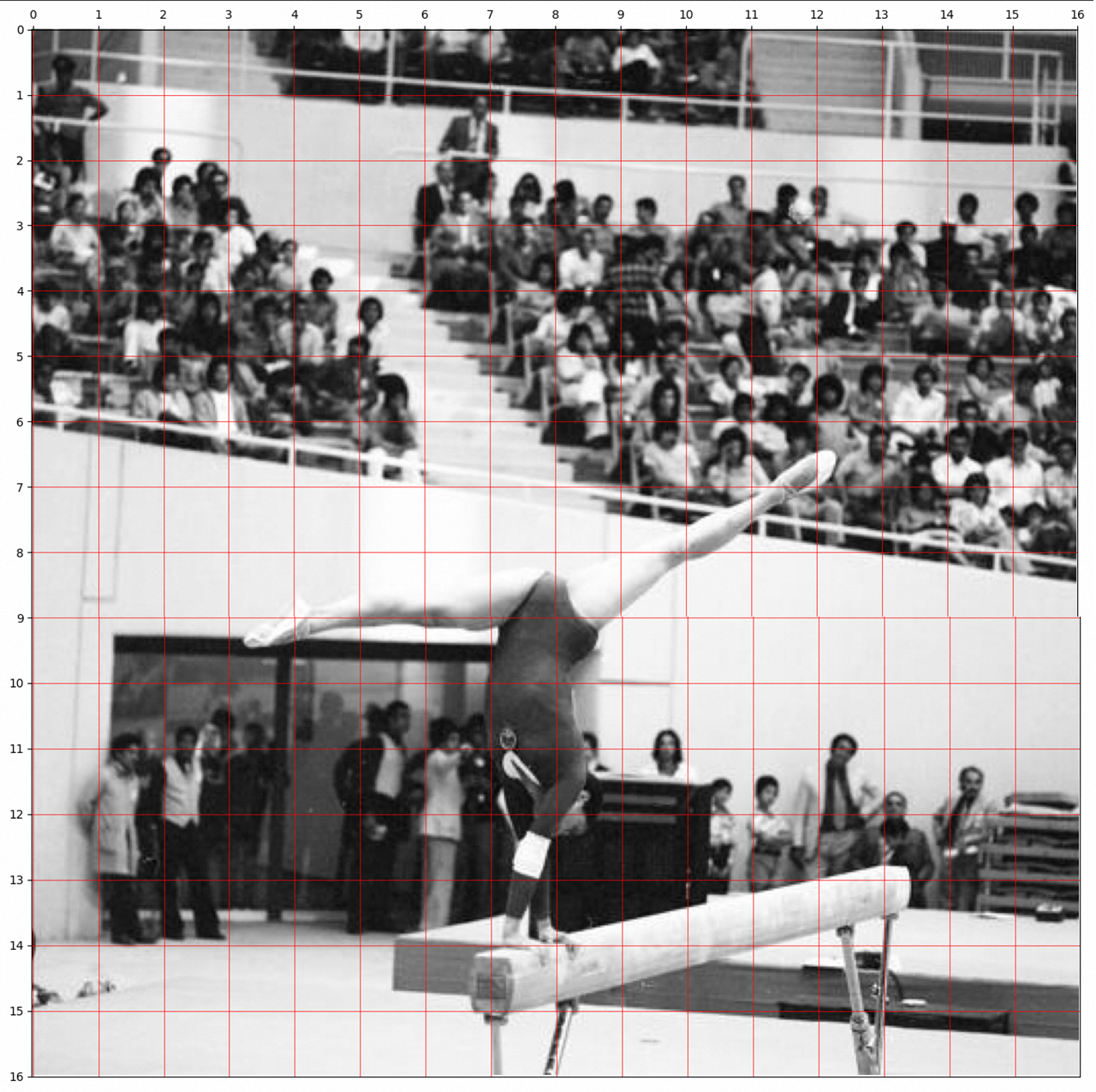

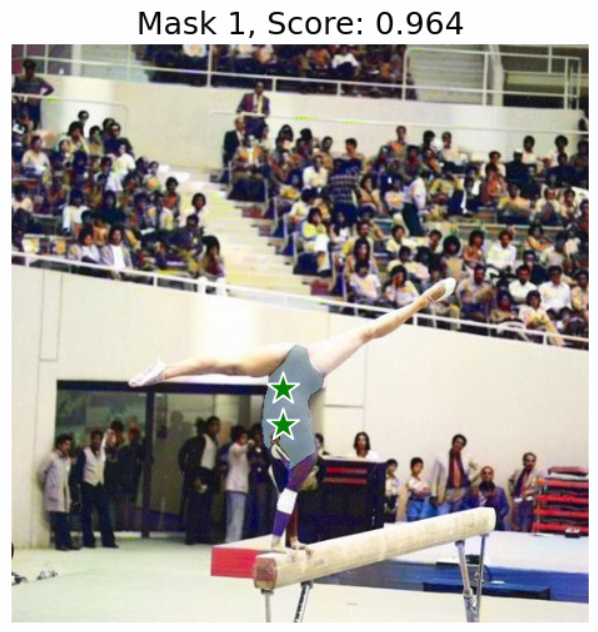

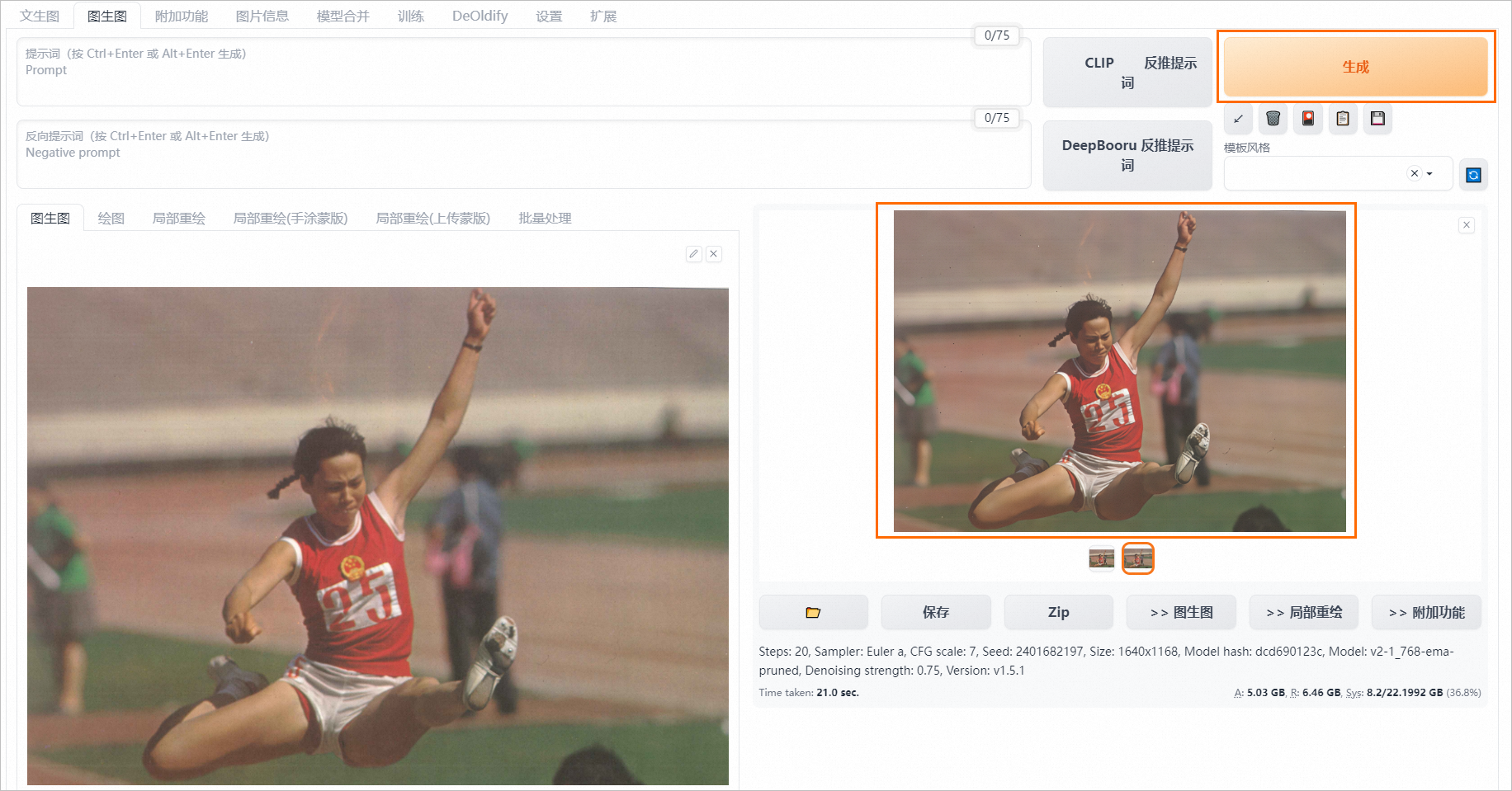

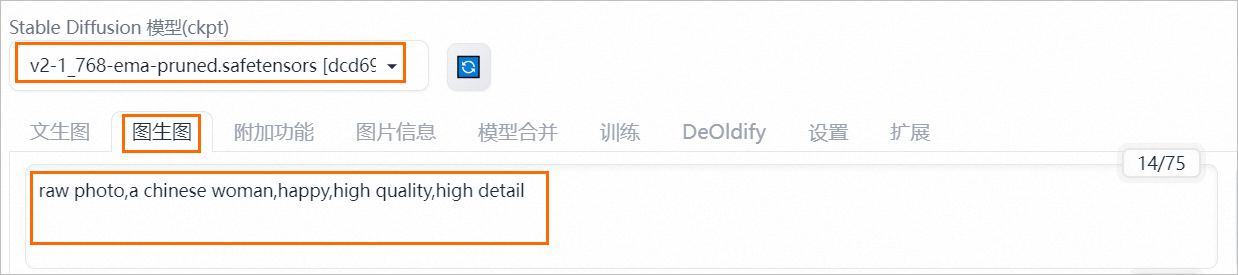

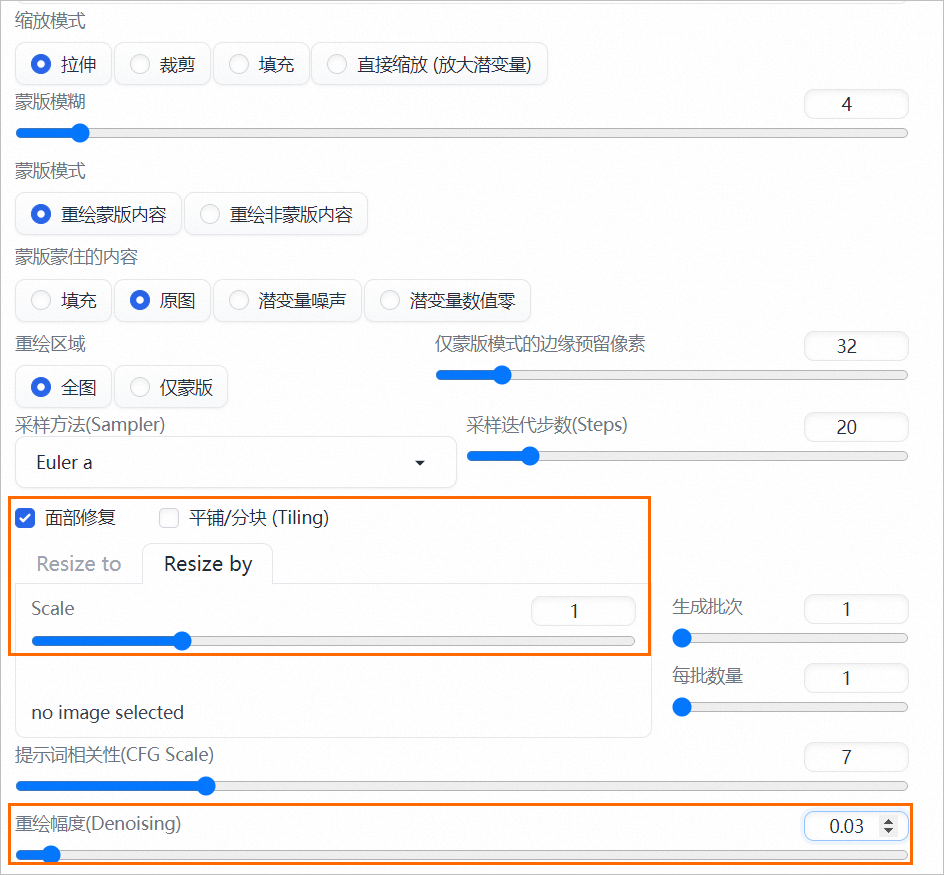

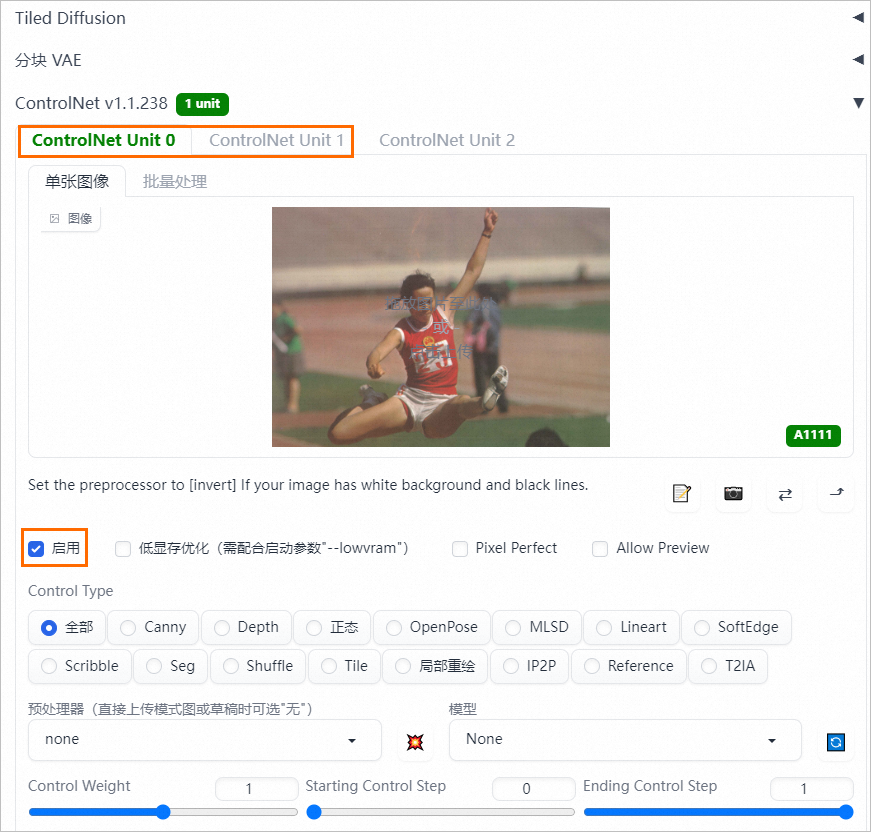

For the given images, you can restore the old photos with either of the following two methods, or combine them.
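Combining methods amounts to feeding one stage's output folder into the next stage. The following sketch shows that folder-to-folder chaining. The stage names and the `run_chain` and `copy_stage` helpers are hypothetical. `copy_stage` is only a placeholder that copies files unchanged; a real stage would invoke Restormer, NAFNet, or another model on every image in its input folder.

```python
import shutil
from pathlib import Path
from typing import Callable, Sequence

# A stage reads every image in in_dir and writes its results to out_dir.
Stage = Callable[[Path, Path], None]


def copy_stage(in_dir: Path, out_dir: Path) -> None:
    """Placeholder stage: copies files unchanged (stand-in for a real model)."""
    for p in sorted(Path(in_dir).iterdir()):
        if p.is_file():
            shutil.copy2(p, Path(out_dir) / p.name)


def run_chain(stages: Sequence[tuple[str, Stage]], input_dir: Path, work_root: Path) -> Path:
    """Run stages in order; each stage reads the previous stage's output folder."""
    current = Path(input_dir)
    for name, stage in stages:
        out_dir = Path(work_root) / name
        out_dir.mkdir(parents=True, exist_ok=True)
        stage(current, out_dir)
        current = out_dir  # the next stage consumes this stage's output
    return current
```

Keeping every intermediate folder lets you compare the effect of each stage and reorder stages without rerunning everything.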

Restore images with source code

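In the Gallery, PAI integrates many open-source algorithms and pretrained models from related fields that you can use with one click. You can also further optimize or develop your own old-photo restoration algorithms in the notebook. Depending on how the models process images, PAI divides the old-photo restoration task roughly into the following steps: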

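Image denoising: removes noise, blur, and similar degradation from images. Two algorithms are supported, and you can use either one to process the images.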

Restormer

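Download the code and pretrained files. After the download and extraction complete, you can view the algorithm's source code in the ./Restormer folder. Sample output: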

http://pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com/aigc-data/restoration/repo/restormer.zip cn-hangzhou --2023-09-04 11:30:30-- http://pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com/aigc-data/restoration/repo/restormer.zip Resolving pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com (pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com)... 100.118.28.49, 100.118.28.45, 100.118.28.44, ... Connecting to pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com (pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com)|100.118.28.49|:80... connected. HTTP request sent, awaiting response... 200 OK Length: 2859106485 (2.7G) [application/zip] Saving to: ‘restormer.zip’ restormer.zip 100%[===================>] 2.66G 14.2MB/s in 3m 18s 2023-09-04 11:33:48 (13.8 MB/s) - ‘restormer.zip’ saved [2859106485/2859106485] Archive: restormer.zip creating: Restormer/ creating: Restormer/.ipynb_checkpoints/ inflating: Restormer/.ipynb_checkpoints/demo-checkpoint.py inflating: Restormer/setup.cfg creating: Restormer/Denoising/ inflating: Restormer/Denoising/test_real_denoising_dnd.py creating: Restormer/Denoising/pretrained_models/ inflating: Restormer/Denoising/pretrained_models/gaussian_gray_denoising_sigma25.pth inflating: Restormer/Denoising/pretrained_models/real_denoising.pth inflating: Restormer/Denoising/pretrained_models/gaussian_color_denoising_blind.pth inflating: Restormer/Denoising/pretrained_models/gaussian_gray_denoising_sigma15.pth inflating: Restormer/Denoising/pretrained_models/gaussian_color_denoising_sigma50.pth inflating: Restormer/Denoising/pretrained_models/gaussian_color_denoising_sigma25.pth inflating: Restormer/Denoising/pretrained_models/gaussian_color_denoising_sigma15.pth inflating: Restormer/Denoising/pretrained_models/gaussian_gray_denoising_blind.pth inflating: Restormer/Denoising/pretrained_models/gaussian_gray_denoising_sigma50.pth inflating: Restormer/Denoising/test_gaussian_color_denoising.py inflating: 
Restormer/Denoising/evaluate_gaussian_gray_denoising.py inflating: Restormer/Denoising/test_real_denoising_sidd.py creating: Restormer/Denoising/Datasets/ inflating: Restormer/Denoising/Datasets/README.md inflating: Restormer/Denoising/generate_patches_sidd.py inflating: Restormer/Denoising/generate_patches_dfwb.py creating: Restormer/Denoising/Options/ inflating: Restormer/Denoising/Options/GaussianColorDenoising_RestormerSigma50.yml inflating: Restormer/Denoising/Options/GaussianGrayDenoising_Restormer.yml inflating: Restormer/Denoising/Options/GaussianColorDenoising_Restormer.yml inflating: Restormer/Denoising/Options/GaussianColorDenoising_RestormerSigma15.yml inflating: Restormer/Denoising/Options/GaussianGrayDenoising_RestormerSigma25.yml inflating: Restormer/Denoising/Options/GaussianGrayDenoising_RestormerSigma15.yml inflating: Restormer/Denoising/Options/GaussianGrayDenoising_RestormerSigma50.yml inflating: Restormer/Denoising/Options/GaussianColorDenoising_RestormerSigma25.yml inflating: Restormer/Denoising/Options/RealDenoising_Restormer.yml inflating: Restormer/Denoising/evaluate_gaussian_color_denoising.py inflating: Restormer/Denoising/README.md inflating: Restormer/Denoising/test_gaussian_gray_denoising.py inflating: Restormer/Denoising/evaluate_sidd.m inflating: Restormer/Denoising/download_data.py inflating: Restormer/Denoising/utils.py extracting: Restormer/hat.zip creating: Restormer/Deraining/ creating: Restormer/Deraining/pretrained_models/ inflating: Restormer/Deraining/pretrained_models/deraining.pth inflating: Restormer/Deraining/pretrained_models/README.md inflating: Restormer/Deraining/test.py creating: Restormer/Deraining/Datasets/ inflating: Restormer/Deraining/Datasets/README.md creating: Restormer/Deraining/Options/ inflating: Restormer/Deraining/Options/Deraining_Restormer.yml inflating: Restormer/Deraining/README.md inflating: Restormer/Deraining/evaluate_PSNR_SSIM.m inflating: Restormer/Deraining/download_data.py inflating: 
Restormer/Deraining/utils.py creating: Restormer/demo/ creating: Restormer/demo/restored/ creating: Restormer/demo/restored/Single_Image_Defocus_Deblurring/ inflating: Restormer/demo/restored/Single_Image_Defocus_Deblurring/couple.png inflating: Restormer/demo/restored/Single_Image_Defocus_Deblurring/engagement.png inflating: Restormer/demo/restored/Single_Image_Defocus_Deblurring/portrait.png creating: Restormer/demo/degraded/ inflating: Restormer/demo/degraded/couple.jpg inflating: Restormer/demo/degraded/portrait.jpg inflating: Restormer/demo/degraded/engagement.jpg inflating: Restormer/.gitignore inflating: Restormer/LICENSE.md inflating: Restormer/demo.py extracting: Restormer/Resformer_pretrain.zip creating: Restormer/Motion_Deblurring/ inflating: Restormer/Motion_Deblurring/evaluate_gopro_hide.m creating: Restormer/Motion_Deblurring/pretrained_models/ inflating: Restormer/Motion_Deblurring/pretrained_models/motion_deblurring.pth inflating: Restormer/Motion_Deblurring/pretrained_models/README.md inflating: Restormer/Motion_Deblurring/test.py creating: Restormer/Motion_Deblurring/Datasets/ inflating: Restormer/Motion_Deblurring/Datasets/README.md creating: Restormer/Motion_Deblurring/Options/ inflating: Restormer/Motion_Deblurring/Options/Deblurring_Restormer.yml inflating: Restormer/Motion_Deblurring/README.md inflating: Restormer/Motion_Deblurring/evaluate_realblur.py inflating: Restormer/Motion_Deblurring/generate_patches_gopro.py inflating: Restormer/Motion_Deblurring/download_data.py inflating: Restormer/Motion_Deblurring/utils.py creating: Restormer/basicsr/ creating: Restormer/basicsr/models/ inflating: Restormer/basicsr/models/base_model.py creating: Restormer/basicsr/models/archs/ inflating: Restormer/basicsr/models/archs/restormer_arch.py inflating: Restormer/basicsr/models/archs/arch_util.py inflating: Restormer/basicsr/models/archs/__init__.py inflating: Restormer/basicsr/models/lr_scheduler.py creating: Restormer/basicsr/models/losses/ inflating: 
Restormer/basicsr/models/losses/loss_util.py inflating: Restormer/basicsr/models/losses/__init__.py inflating: Restormer/basicsr/models/losses/losses.py inflating: Restormer/basicsr/models/__init__.py inflating: Restormer/basicsr/models/image_restoration_model.py inflating: Restormer/basicsr/train.py inflating: Restormer/basicsr/version.py inflating: Restormer/basicsr/test.py creating: Restormer/basicsr/utils/ inflating: Restormer/basicsr/utils/bundle_submissions.py inflating: Restormer/basicsr/utils/file_client.py inflating: Restormer/basicsr/utils/face_util.py inflating: Restormer/basicsr/utils/create_lmdb.py inflating: Restormer/basicsr/utils/logger.py inflating: Restormer/basicsr/utils/options.py inflating: Restormer/basicsr/utils/img_util.py inflating: Restormer/basicsr/utils/matlab_functions.py inflating: Restormer/basicsr/utils/download_util.py inflating: Restormer/basicsr/utils/__init__.py inflating: Restormer/basicsr/utils/misc.py inflating: Restormer/basicsr/utils/dist_util.py inflating: Restormer/basicsr/utils/lmdb_util.py inflating: Restormer/basicsr/utils/flow_util.py creating: Restormer/basicsr/metrics/ inflating: Restormer/basicsr/metrics/fid.py inflating: Restormer/basicsr/metrics/metric_util.py inflating: Restormer/basicsr/metrics/niqe_pris_params.npz inflating: Restormer/basicsr/metrics/psnr_ssim.py inflating: Restormer/basicsr/metrics/niqe.py inflating: Restormer/basicsr/metrics/__init__.py creating: Restormer/basicsr/data/ creating: Restormer/basicsr/data/meta_info/ inflating: Restormer/basicsr/data/meta_info/meta_info_Vimeo90K_test_medium_GT.txt inflating: Restormer/basicsr/data/meta_info/meta_info_REDS4_test_GT.txt inflating: Restormer/basicsr/data/meta_info/meta_info_Vimeo90K_test_GT.txt inflating: Restormer/basicsr/data/meta_info/meta_info_DIV2K800sub_GT.txt inflating: Restormer/basicsr/data/meta_info/meta_info_REDS_GT.txt inflating: Restormer/basicsr/data/meta_info/meta_info_Vimeo90K_test_slow_GT.txt inflating: 
Restormer/basicsr/data/meta_info/meta_info_REDSofficial4_test_GT.txt inflating: Restormer/basicsr/data/meta_info/meta_info_Vimeo90K_test_fast_GT.txt inflating: Restormer/basicsr/data/meta_info/meta_info_Vimeo90K_train_GT.txt inflating: Restormer/basicsr/data/meta_info/meta_info_REDSval_official_test_GT.txt inflating: Restormer/basicsr/data/paired_image_dataset.py inflating: Restormer/basicsr/data/data_util.py inflating: Restormer/basicsr/data/reds_dataset.py inflating: Restormer/basicsr/data/video_test_dataset.py inflating: Restormer/basicsr/data/single_image_dataset.py inflating: Restormer/basicsr/data/vimeo90k_dataset.py inflating: Restormer/basicsr/data/__init__.py inflating: Restormer/basicsr/data/prefetch_dataloader.py inflating: Restormer/basicsr/data/ffhq_dataset.py inflating: Restormer/basicsr/data/transforms.py inflating: Restormer/basicsr/data/data_sampler.py creating: Restormer/pretrained_models_demotion/ inflating: Restormer/pretrained_models_demotion/motion_deblurring.pth creating: Restormer/.git/ creating: Restormer/.git/logs/ creating: Restormer/.git/logs/refs/ creating: Restormer/.git/logs/refs/remotes/ creating: Restormer/.git/logs/refs/remotes/origin/ inflating: Restormer/.git/logs/refs/remotes/origin/HEAD creating: Restormer/.git/logs/refs/heads/ inflating: Restormer/.git/logs/refs/heads/main inflating: Restormer/.git/logs/HEAD inflating: Restormer/.git/config creating: Restormer/.git/refs/ creating: Restormer/.git/refs/remotes/ creating: Restormer/.git/refs/remotes/origin/ extracting: Restormer/.git/refs/remotes/origin/HEAD creating: Restormer/.git/refs/heads/ extracting: Restormer/.git/refs/heads/main creating: Restormer/.git/refs/tags/ extracting: Restormer/.git/HEAD creating: Restormer/.git/hooks/ inflating: Restormer/.git/hooks/push-to-checkout.sample inflating: Restormer/.git/hooks/commit-msg.sample inflating: Restormer/.git/hooks/applypatch-msg.sample inflating: Restormer/.git/hooks/pre-receive.sample inflating: 
Restormer/.git/hooks/pre-push.sample inflating: Restormer/.git/hooks/fsmonitor-watchman.sample inflating: Restormer/.git/hooks/post-update.sample inflating: Restormer/.git/hooks/update.sample inflating: Restormer/.git/hooks/pre-merge-commit.sample inflating: Restormer/.git/hooks/pre-commit.sample inflating: Restormer/.git/hooks/pre-applypatch.sample inflating: Restormer/.git/hooks/prepare-commit-msg.sample inflating: Restormer/.git/hooks/pre-rebase.sample creating: Restormer/.git/info/ inflating: Restormer/.git/info/exclude creating: Restormer/.git/objects/ creating: Restormer/.git/objects/pack/ inflating: Restormer/.git/objects/pack/pack-c5acb539fe9f4f374066c96759a798aee30d3def.pack inflating: Restormer/.git/objects/pack/pack-c5acb539fe9f4f374066c96759a798aee30d3def.idx creating: Restormer/.git/objects/info/ inflating: Restormer/.git/packed-refs inflating: Restormer/.git/index inflating: Restormer/.git/description creating: Restormer/pretrained_models_defocus_deblur/ inflating: Restormer/pretrained_models_defocus_deblur/single_image_defocus_deblurring.pth inflating: Restormer/pretrained_models_defocus_deblur/dual_pixel_defocus_deblurring.pth creating: Restormer/Defocus_Deblurring/ creating: Restormer/Defocus_Deblurring/pretrained_models/ inflating: Restormer/Defocus_Deblurring/pretrained_models/single_image_defocus_deblurring.pth inflating: Restormer/Defocus_Deblurring/pretrained_models/README.md inflating: Restormer/Defocus_Deblurring/generate_patches_dpdd.py creating: Restormer/Defocus_Deblurring/Datasets/ inflating: Restormer/Defocus_Deblurring/Datasets/README.md creating: Restormer/Defocus_Deblurring/Options/ inflating: Restormer/Defocus_Deblurring/Options/DefocusDeblur_DualPixel_16bit_Restormer.yml inflating: Restormer/Defocus_Deblurring/Options/DefocusDeblur_Single_8bit_Restormer.yml inflating: Restormer/Defocus_Deblurring/README.md inflating: Restormer/Defocus_Deblurring/test_single_image_defocus_deblur.py inflating: 
Restormer/Defocus_Deblurring/download_data.py inflating: Restormer/Defocus_Deblurring/utils.py inflating: Restormer/Defocus_Deblurring/test_dual_pixel_defocus_deblur.py inflating: Restormer/README.md extracting: Restormer/VERSION inflating: Restormer/setup.py creating: Restormer/pretrained_models_derain/ inflating: Restormer/pretrained_models_derain/deraining.pth inflating: Restormer/train.sh inflating: Restormer/INSTALL.md creating: Restormer/__MACOSX/ creating: Restormer/__MACOSX/pretrained_models_demotion/ inflating: Restormer/__MACOSX/pretrained_models_demotion/._motion_deblurring.pth creating: Restormer/__MACOSX/pretrained_models_defocus_deblur/ inflating: Restormer/__MACOSX/pretrained_models_defocus_deblur/._single_image_defocus_deblurring.pth inflating: Restormer/__MACOSX/pretrained_models_defocus_deblur/._dual_pixel_defocus_deblurring.pth inflating: Restormer/__MACOSX/._pretrained_models_denoise inflating: Restormer/__MACOSX/._pretrained_models_defocus_deblur creating: Restormer/__MACOSX/pretrained_models_derain/ inflating: Restormer/__MACOSX/pretrained_models_derain/._deraining.pth inflating: Restormer/__MACOSX/._pretrained_models_derain inflating: Restormer/__MACOSX/._pretrained_models_demotion creating: Restormer/__MACOSX/pretrained_models_denoise/ inflating: Restormer/__MACOSX/pretrained_models_denoise/._gaussian_gray_denoising_sigma25.pth inflating: Restormer/__MACOSX/pretrained_models_denoise/._gaussian_gray_denoising_sigma50.pth inflating: Restormer/__MACOSX/pretrained_models_denoise/._gaussian_color_denoising_sigma50.pth inflating: Restormer/__MACOSX/pretrained_models_denoise/._gaussian_color_denoising_sigma25.pth inflating: Restormer/__MACOSX/pretrained_models_denoise/._gaussian_gray_denoising_sigma15.pth inflating: Restormer/__MACOSX/pretrained_models_denoise/._gaussian_gray_denoising_blind.pth inflating: Restormer/__MACOSX/pretrained_models_denoise/._gaussian_color_denoising_blind.pth inflating: 
Restormer/__MACOSX/pretrained_models_denoise/._real_denoising.pth inflating: Restormer/__MACOSX/pretrained_models_denoise/._gaussian_color_denoising_sigma15.pth

Install the additional environment packages. Sample output:

```text
Looking in indexes: https://mirrors.cloud.aliyuncs.com/pypi/simple
Collecting natsort
 Downloading https://mirrors.cloud.aliyuncs.com/pypi/packages/ef/82/7a9d0550484a62c6da82858ee9419f3dd1ccc9aa1c26a1e43da3ecd20b0d/natsort-8.4.0-py3-none-any.whl (38 kB)
Installing collected packages: natsort
Successfully installed natsort-8.4.0
WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv

[notice] A new release of pip is available: 23.0.1 -> 23.2.1
[notice] To update, run: python3 -m pip install --upgrade pip
```

Run the inference tasks you need, such as motion deblurring, defocus deblurring, deraining, and denoising. For the available settings, see the related parameters in the notebook. Specify the input and output folders, then run the corresponding algorithm to restore the images. After the command succeeds, the restored images are in ./results/{task_name}. Sample output:

```text
==> Running Motion_Deblurring with weights /mnt/workspace/Restormer/Motion_Deblurring/pretrained_models/motion_deblurring.pth
100%|███████████████████████████████████████████| 10/10 [00:28<00:00, 2.81s/it]
Restored images are saved at results/Motion_Deblurring
==> Running Single_Image_Defocus_Deblurring with weights /mnt/workspace/Restormer/Defocus_Deblurring/pretrained_models/single_image_defocus_deblurring.pth
100%|███████████████████████████████████████████| 10/10 [00:26<00:00, 2.64s/it]
Restored images are saved at results/Single_Image_Defocus_Deblurring
==> Running Deraining with weights /mnt/workspace/Restormer/Deraining/pretrained_models/deraining.pth
100%|███████████████████████████████████████████| 10/10 [00:26<00:00, 2.65s/it]
Restored images are saved at results/Deraining
==> Running Real_Denoising with weights /mnt/workspace/Restormer/Denoising/pretrained_models/real_denoising.pth
100%|███████████████████████████████████████████| 10/10 [00:24<00:00, 2.48s/it]
Restored images are saved at results/Real_Denoising
==> Running Gaussian_Gray_Denoising with weights /mnt/workspace/Restormer/Denoising/pretrained_models/gaussian_gray_denoising_blind.pth
100%|███████████████████████████████████████████| 10/10 [00:24<00:00, 2.45s/it]
Restored images are saved at results/Gaussian_Gray_Denoising
==> Running Gaussian_Color_Denoising with weights /mnt/workspace/Restormer/Denoising/pretrained_models/gaussian_color_denoising_blind.pth
100%|███████████████████████████████████████████| 10/10 [00:24<00:00, 2.50s/it]
Restored images are saved at results/Gaussian_Color_Denoising
```
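To run several Restormer tasks in one go, you can script the calls. The following sketch makes these assumptions:
- The bundled `demo.py` accepts `--task`, `--input_dir`, and `--result_dir`, as the script in the upstream Restormer repository does.
- It writes to `<result_dir>/<task>`, which matches the `results/{task_name}` paths in the log above.
- `build_commands` and `run_all` are hypothetical helpers.

Before you rely on these flags, check them with `python demo.py -h` in the notebook.

```python
import subprocess
import sys
from pathlib import Path

# Task names taken from the run log above; upstream Restormer's demo.py offers the
# same names as --task choices, and the bundled copy is assumed to match.
RESTORMER_TASKS = (
    "Motion_Deblurring",
    "Single_Image_Defocus_Deblurring",
    "Deraining",
    "Real_Denoising",
    "Gaussian_Gray_Denoising",
    "Gaussian_Color_Denoising",
)


def build_commands(tasks, input_dir="input", result_dir="results", script="Restormer/demo.py"):
    """Return (argv, output_dir) pairs, one per task (hypothetical helper)."""
    input_dir = Path(input_dir).resolve()
    result_dir = Path(result_dir).resolve()
    script = Path(script).resolve()
    jobs = []
    for task in tasks:
        if task not in RESTORMER_TASKS:
            raise ValueError(f"unknown Restormer task: {task}")
        argv = [sys.executable, str(script), "--task", task,
                "--input_dir", str(input_dir), "--result_dir", str(result_dir)]
        # demo.py is assumed to write into <result_dir>/<task>, as the log shows.
        jobs.append((argv, result_dir / task))
    return jobs


def run_all(jobs, cwd="Restormer"):
    """Run each job in turn; raises CalledProcessError as soon as one fails."""
    for argv, out_dir in jobs:
        # Running from the repository directory keeps any relative weight paths valid.
        subprocess.run(argv, cwd=cwd, check=True)
        print(f"{argv[3]}: restored images in {out_dir}")
```

For example, running `run_all(build_commands(["Deraining", "Real_Denoising"]))` from /mnt/workspace would run only the deraining and real-noise denoising tasks. The paths are resolved to absolute form up front, so changing the working directory to Restormer does not break the input and output locations.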

NAFNet

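Download the code and pretrained files (based on ModelScope). After the download and extraction complete, you can view the algorithm's source code in the corresponding folder. Sample output: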

Looking in indexes: https://mirrors.cloud.aliyuncs.com/pypi/simple Collecting modelscope Downloading https://mirrors.cloud.aliyuncs.com/pypi/packages/2b/17/53845f398e340217ecb2169033f547f640db3663ffb0fb5a6218c5f3bfec/modelscope-1.8.4-py3-none-any.whl (4.9 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 4.9/4.9 MB 42.9 MB/s eta 0:00:0000:0100:01 Collecting gast>=0.2.2 Downloading https://mirrors.cloud.aliyuncs.com/pypi/packages/fa/39/5aae571e5a5f4de9c3445dae08a530498e5c53b0e74410eeeb0991c79047/gast-0.5.4-py3-none-any.whl (19 kB) Requirement already satisfied: urllib3>=1.26 in /usr/local/lib/python3.10/dist-packages (from modelscope) (1.26.15) Requirement already satisfied: pyyaml in /usr/local/lib/python3.10/dist-packages (from modelscope) (6.0) Requirement already satisfied: tqdm>=4.64.0 in /usr/local/lib/python3.10/dist-packages (from modelscope) (4.65.0) Collecting sortedcontainers>=1.5.9 Downloading https://mirrors.cloud.aliyuncs.com/pypi/packages/32/46/9cb0e58b2deb7f82b84065f37f3bffeb12413f947f9388e4cac22c4621ce/sortedcontainers-2.4.0-py2.py3-none-any.whl (29 kB) Requirement already satisfied: Pillow>=6.2.0 in /usr/local/lib/python3.10/dist-packages (from modelscope) (9.4.0) Requirement already satisfied: pyarrow!=9.0.0,>=6.0.0 in /usr/local/lib/python3.10/dist-packages (from modelscope) (11.0.0) Collecting oss2 Downloading https://mirrors.cloud.aliyuncs.com/pypi/packages/4a/e7/08b90651a435acde68c537eebff970011422f61c465f6d1c88c4b3af6774/oss2-2.18.1.tar.gz (274 kB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 274.3/274.3 kB 73.3 MB/s eta 0:00:00 Preparing metadata (setup.py) ... 
done Requirement already satisfied: setuptools in /usr/lib/python3/dist-packages (from modelscope) (59.6.0) Requirement already satisfied: yapf in /usr/local/lib/python3.10/dist-packages (from modelscope) (0.32.0) Requirement already satisfied: attrs in /usr/local/lib/python3.10/dist-packages (from modelscope) (22.2.0) Requirement already satisfied: numpy in /usr/local/lib/python3.10/dist-packages (from modelscope) (1.23.3) Requirement already satisfied: python-dateutil>=2.1 in /usr/local/lib/python3.10/dist-packages (from modelscope) (2.8.2) Collecting simplejson>=3.3.0 Downloading https://mirrors.cloud.aliyuncs.com/pypi/packages/b8/00/9720ea26c0da200a39b89e25721970f50f4b80bb2ab6de0199324f93c4ca/simplejson-3.19.1-cp310-cp310-manylinux_2_5_x86_64.manylinux1_x86_64.manylinux_2_17_x86_64.manylinux2014_x86_64.whl (137 kB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 137.9/137.9 kB 48.0 MB/s eta 0:00:00 Requirement already satisfied: datasets<=2.13.0,>=2.8.0 in /usr/local/lib/python3.10/dist-packages (from modelscope) (2.11.0) Requirement already satisfied: einops in /usr/local/lib/python3.10/dist-packages (from modelscope) (0.4.1) Requirement already satisfied: addict in /usr/local/lib/python3.10/dist-packages (from modelscope) (2.4.0) Requirement already satisfied: requests>=2.25 in /usr/local/lib/python3.10/dist-packages (from modelscope) (2.25.1) Requirement already satisfied: pandas in /usr/local/lib/python3.10/dist-packages (from modelscope) (1.5.3) Requirement already satisfied: filelock>=3.3.0 in /usr/local/lib/python3.10/dist-packages (from modelscope) (3.10.7) Requirement already satisfied: scipy in /usr/local/lib/python3.10/dist-packages (from modelscope) (1.10.1) Requirement already satisfied: huggingface-hub<1.0.0,>=0.11.0 in /usr/local/lib/python3.10/dist-packages (from datasets<=2.13.0,>=2.8.0->modelscope) (0.13.3) Requirement already satisfied: packaging in /usr/local/lib/python3.10/dist-packages (from datasets<=2.13.0,>=2.8.0->modelscope) (23.0) Requirement 
already satisfied: multiprocess in /usr/local/lib/python3.10/dist-packages (from datasets<=2.13.0,>=2.8.0->modelscope) (0.70.14) Requirement already satisfied: xxhash in /usr/local/lib/python3.10/dist-packages (from datasets<=2.13.0,>=2.8.0->modelscope) (3.2.0) Requirement already satisfied: dill<0.3.7,>=0.3.0 in /usr/local/lib/python3.10/dist-packages (from datasets<=2.13.0,>=2.8.0->modelscope) (0.3.6) Requirement already satisfied: aiohttp in /usr/local/lib/python3.10/dist-packages (from datasets<=2.13.0,>=2.8.0->modelscope) (3.8.4) Requirement already satisfied: fsspec[http]>=2021.11.1 in /usr/local/lib/python3.10/dist-packages (from datasets<=2.13.0,>=2.8.0->modelscope) (2023.3.0) Requirement already satisfied: responses<0.19 in /usr/local/lib/python3.10/dist-packages (from datasets<=2.13.0,>=2.8.0->modelscope) (0.18.0) Requirement already satisfied: six>=1.5 in /usr/local/lib/python3.10/dist-packages (from python-dateutil>=2.1->modelscope) (1.16.0) Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.10/dist-packages (from requests>=2.25->modelscope) (2022.12.7) Requirement already satisfied: chardet<5,>=3.0.2 in /usr/local/lib/python3.10/dist-packages (from requests>=2.25->modelscope) (4.0.0) Requirement already satisfied: idna<3,>=2.5 in /usr/local/lib/python3.10/dist-packages (from requests>=2.25->modelscope) (2.10) Collecting aliyun-python-sdk-core>=2.13.12 Downloading https://mirrors.cloud.aliyuncs.com/pypi/packages/55/5a/6eec6c6e78817e5ca2afee661f2bbb33dbcfa2ce09a2980b52223323bd2e/aliyun-python-sdk-core-2.13.36.tar.gz (440 kB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 440.5/440.5 kB 26.0 MB/s eta 0:00:00 Preparing metadata (setup.py) ... 
done Collecting aliyun-python-sdk-kms>=2.4.1 Downloading https://mirrors.cloud.aliyuncs.com/pypi/packages/13/90/02d05478df643ceac0021bd3db4f19b42dd06c2b73e082569d0d340de70c/aliyun_python_sdk_kms-2.16.1-py2.py3-none-any.whl (70 kB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 70.8/70.8 kB 28.1 MB/s eta 0:00:00 Collecting crcmod>=1.7 Downloading https://mirrors.cloud.aliyuncs.com/pypi/packages/6b/b0/e595ce2a2527e169c3bcd6c33d2473c1918e0b7f6826a043ca1245dd4e5b/crcmod-1.7.tar.gz (89 kB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 89.7/89.7 kB 31.2 MB/s eta 0:00:00 Preparing metadata (setup.py) ... done Requirement already satisfied: pycryptodome>=3.4.7 in /usr/local/lib/python3.10/dist-packages (from oss2->modelscope) (3.17) Requirement already satisfied: pytz>=2020.1 in /usr/local/lib/python3.10/dist-packages (from pandas->modelscope) (2023.3) Requirement already satisfied: cryptography>=2.6.0 in /usr/local/lib/python3.10/dist-packages (from aliyun-python-sdk-core>=2.13.12->oss2->modelscope) (40.0.1) Collecting jmespath<1.0.0,>=0.9.3 Downloading https://mirrors.cloud.aliyuncs.com/pypi/packages/07/cb/5f001272b6faeb23c1c9e0acc04d48eaaf5c862c17709d20e3469c6e0139/jmespath-0.10.0-py2.py3-none-any.whl (24 kB) Requirement already satisfied: frozenlist>=1.1.1 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets<=2.13.0,>=2.8.0->modelscope) (1.3.3) Requirement already satisfied: yarl<2.0,>=1.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets<=2.13.0,>=2.8.0->modelscope) (1.8.2) Requirement already satisfied: charset-normalizer<4.0,>=2.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets<=2.13.0,>=2.8.0->modelscope) (3.1.0) Requirement already satisfied: multidict<7.0,>=4.5 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets<=2.13.0,>=2.8.0->modelscope) (6.0.4) Requirement already satisfied: async-timeout<5.0,>=4.0.0a3 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets<=2.13.0,>=2.8.0->modelscope) (4.0.2) 
Requirement already satisfied: aiosignal>=1.1.2 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets<=2.13.0,>=2.8.0->modelscope) (1.3.1) Requirement already satisfied: typing-extensions>=3.7.4.3 in /usr/local/lib/python3.10/dist-packages (from huggingface-hub<1.0.0,>=0.11.0->datasets<=2.13.0,>=2.8.0->modelscope) (4.5.0) Requirement already satisfied: cffi>=1.12 in /usr/local/lib/python3.10/dist-packages (from cryptography>=2.6.0->aliyun-python-sdk-core>=2.13.12->oss2->modelscope) (1.15.1) Requirement already satisfied: pycparser in /usr/local/lib/python3.10/dist-packages (from cffi>=1.12->cryptography>=2.6.0->aliyun-python-sdk-core>=2.13.12->oss2->modelscope) (2.21) Building wheels for collected packages: oss2, aliyun-python-sdk-core, crcmod Building wheel for oss2 (setup.py) ... done Created wheel for oss2: filename=oss2-2.18.1-py3-none-any.whl size=115202 sha256=f9143856d3968608c696d2556f1f64953f89a31f7931d695e5f09ffe96a10fe4 Stored in directory: /root/.cache/pip/wheels/2b/e2/2d/a55f3aabf369a023a14d1fc570dd3a3824cdd2223f0f73e902 Building wheel for aliyun-python-sdk-core (setup.py) ... done Created wheel for aliyun-python-sdk-core: filename=aliyun_python_sdk_core-2.13.36-py3-none-any.whl size=533196 sha256=5ec4012574fc4cb6e83ed02aebc59fe9747c1a536e346e07e7eed5073e3a3be9 Stored in directory: /root/.cache/pip/wheels/0b/4f/1c/459b3309c6370bdaa926bb358dbc1b42aa0e0a26c0476ac401 Building wheel for crcmod (setup.py) ... 
done Created wheel for crcmod: filename=crcmod-1.7-cp310-cp310-linux_x86_64.whl size=31428 sha256=5078b5859e75ffe6d90a36bac0f4db76801b4db5a04e0f5a5523912ee5e65447 Stored in directory: /root/.cache/pip/wheels/3d/1b/33/88c98fcba7f84f7d448c44de21caf3e98deaebae12cb5104ba Successfully built oss2 aliyun-python-sdk-core crcmod Installing collected packages: sortedcontainers, crcmod, simplejson, jmespath, gast, aliyun-python-sdk-core, aliyun-python-sdk-kms, oss2, modelscope Successfully installed aliyun-python-sdk-core-2.13.36 aliyun-python-sdk-kms-2.16.1 crcmod-1.7 gast-0.5.4 jmespath-0.10.0 modelscope-1.8.4 oss2-2.18.1 simplejson-3.19.1 sortedcontainers-2.4.0 WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv [notice] A new release of pip is available: 23.0.1 -> 23.2.1 [notice] To update, run: python3 -m pip install --upgrade pip http://pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com/aigc-data/restoration/repo/nafnet.zip cn-hangzhou --2023-09-04 11:45:09-- http://pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com/aigc-data/restoration/repo/nafnet.zip Resolving pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com (pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com)... 100.118.28.49, 100.118.28.44, 100.118.28.45, ... Connecting to pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com (pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com)|100.118.28.49|:80... connected. HTTP request sent, awaiting response... 
200 OK Length: 943903968 (900M) [application/zip] Saving to: ‘nafnet.zip’ nafnet.zip 100%[===================>] 900.18M 10.7MB/s in 84s 2023-09-04 11:46:33 (10.7 MB/s) - ‘nafnet.zip’ saved [943903968/943903968] Archive: nafnet.zip creating: NAFNet/ creating: NAFNet/pretrain_model/ creating: NAFNet/pretrain_model/cv_nafnet_image-deblur_reds/ inflating: NAFNet/pretrain_model/cv_nafnet_image-deblur_reds/configuration.json inflating: NAFNet/pretrain_model/cv_nafnet_image-deblur_reds/README.md creating: NAFNet/pretrain_model/cv_nafnet_image-deblur_reds/data/ inflating: NAFNet/pretrain_model/cv_nafnet_image-deblur_reds/data/deblur.gif inflating: NAFNet/pretrain_model/cv_nafnet_image-deblur_reds/data/nafnet_arch.png inflating: NAFNet/pretrain_model/cv_nafnet_image-deblur_reds/data/blurry.jpg extracting: NAFNet/pretrain_model/cv_nafnet_image-deblur_reds/.mdl inflating: NAFNet/pretrain_model/cv_nafnet_image-deblur_reds/.msc inflating: NAFNet/pretrain_model/cv_nafnet_image-deblur_reds/pytorch_model.pt creating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/ inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/configuration.json inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/README.md creating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/ creating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/ creating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0003_001_S6_00100_00060_3200_H/ inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0003_001_S6_00100_00060_3200_H/0003_GT_SRGB_010.PNG inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0003_001_S6_00100_00060_3200_H/0003_NOISY_SRGB_010.PNG inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0003_001_S6_00100_00060_3200_H/0003_GT_SRGB_011.PNG inflating: 
NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0003_001_S6_00100_00060_3200_H/0003_NOISY_SRGB_011.PNG creating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0001_001_S6_00100_00060_3200_L/ inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0001_001_S6_00100_00060_3200_L/0001_NOISY_SRGB_010.PNG inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0001_001_S6_00100_00060_3200_L/0001_GT_SRGB_010.PNG inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0001_001_S6_00100_00060_3200_L/0001_GT_SRGB_011.PNG inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0001_001_S6_00100_00060_3200_L/0001_NOISY_SRGB_011.PNG creating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0002_001_S6_00100_00020_3200_N/ inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0002_001_S6_00100_00020_3200_N/0002_GT_SRGB_011.PNG inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0002_001_S6_00100_00020_3200_N/0002_NOISY_SRGB_010.PNG inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0002_001_S6_00100_00020_3200_N/0002_NOISY_SRGB_011.PNG inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0002_001_S6_00100_00020_3200_N/0002_GT_SRGB_010.PNG creating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0004_001_S6_00100_00060_4400_L/ inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0004_001_S6_00100_00060_4400_L/0004_NOISY_SRGB_010.PNG inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0004_001_S6_00100_00060_4400_L/0004_GT_SRGB_010.PNG inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0004_001_S6_00100_00060_4400_L/0004_GT_SRGB_011.PNG inflating: 
NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/SIDD_example/0004_001_S6_00100_00060_4400_L/0004_NOISY_SRGB_011.PNG inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/noisy-demo-1.png inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/nafnet_arch.png inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/noisy-demo-0.png inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/data/denoise.gif extracting: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/.mdl inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/.msc inflating: NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/pytorch_model.pt creating: NAFNet/pretrain_model/cv_nafnet_image-deblur_gopro/ inflating: NAFNet/pretrain_model/cv_nafnet_image-deblur_gopro/configuration.json inflating: NAFNet/pretrain_model/cv_nafnet_image-deblur_gopro/README.md creating: NAFNet/pretrain_model/cv_nafnet_image-deblur_gopro/data/ inflating: NAFNet/pretrain_model/cv_nafnet_image-deblur_gopro/data/deblur.gif inflating: NAFNet/pretrain_model/cv_nafnet_image-deblur_gopro/data/nafnet_arch.png inflating: NAFNet/pretrain_model/cv_nafnet_image-deblur_gopro/data/blurry.jpg extracting: NAFNet/pretrain_model/cv_nafnet_image-deblur_gopro/.mdl inflating: NAFNet/pretrain_model/cv_nafnet_image-deblur_gopro/.msc inflating: NAFNet/pretrain_model/cv_nafnet_image-deblur_gopro/pytorch_model.pt inflating: NAFNet/demo.py

Run the inference tasks you need: deblurring, denoising, and motion deblurring. When the commands finish successfully, the restored images are in ./results/{task_name}.

2023-09-04 11:47:14,618 - modelscope - INFO - PyTorch version 1.13.1+cu117 Found. 2023-09-04 11:47:14,619 - modelscope - INFO - Loading ast index from /root/.cache/modelscope/ast_indexer 2023-09-04 11:47:14,619 - modelscope - INFO - No valid ast index found from /root/.cache/modelscope/ast_indexer, generating ast index from prebuilt! 2023-09-04 11:47:14,695 - modelscope - INFO - Loading done! Current index file version is 1.8.4, with md5 80fa9349fc3e7b04fcfad511918062b1 and a total number of 902 components indexed /mnt/workspace/NAFNet 2023-09-04 11:47:15,604 - modelscope - INFO - initiate model from /mnt/workspace/NAFNet/pretrain_model/cv_nafnet_image-deblur_gopro 2023-09-04 11:47:15,604 - modelscope - INFO - initiate model from location /mnt/workspace/NAFNet/pretrain_model/cv_nafnet_image-deblur_gopro. 2023-09-04 11:47:15,605 - modelscope - INFO - initialize model from /mnt/workspace/NAFNet/pretrain_model/cv_nafnet_image-deblur_gopro 2023-09-04 11:47:16,267 - modelscope - INFO - Loading NAFNet model from /mnt/workspace/NAFNet/pretrain_model/cv_nafnet_image-deblur_gopro/pytorch_model.pt, with param key: [params]. 2023-09-04 11:47:16,368 - modelscope - INFO - load model done. 2023-09-04 11:47:16,400 - modelscope - INFO - load image denoise model done Total Image: 10 2023-09-04 11:47:17,517 - modelscope - WARNING - task image-deblurring input definition is missing 2023-09-04 11:47:18,893 - modelscope - WARNING - task image-deblurring output keys are missing 0/10 saved at results/nafnet_deblur/64.jpg 1/10 saved at results/nafnet_deblur/2.jpg 2/10 saved at results/nafnet_deblur/34.jpg 3/10 saved at results/nafnet_deblur/54.jpg 4/10 saved at results/nafnet_deblur/40.jpg 5/10 saved at results/nafnet_deblur/10.jpg 6/10 saved at results/nafnet_deblur/70.jpg 7/10 saved at results/nafnet_deblur/4.png 8/10 saved at results/nafnet_deblur/20.jpg 9/10 saved at results/nafnet_deblur/50.jpg 2023-09-04 11:47:23,689 - modelscope - INFO - PyTorch version 1.13.1+cu117 Found. 
2023-09-04 11:47:23,690 - modelscope - INFO - Loading ast index from /root/.cache/modelscope/ast_indexer 2023-09-04 11:47:23,755 - modelscope - INFO - Loading done! Current index file version is 1.8.4, with md5 80fa9349fc3e7b04fcfad511918062b1 and a total number of 902 components indexed /mnt/workspace/NAFNet 2023-09-04 11:47:24,316 - modelscope - INFO - initiate model from /mnt/workspace/NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd 2023-09-04 11:47:24,316 - modelscope - INFO - initiate model from location /mnt/workspace/NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd. 2023-09-04 11:47:24,317 - modelscope - INFO - initialize model from /mnt/workspace/NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd 2023-09-04 11:47:24,627 - modelscope - INFO - Loading NAFNet model from /mnt/workspace/NAFNet/pretrain_model/cv_nafnet_image-denoise_sidd/pytorch_model.pt, with param key: [params]. 2023-09-04 11:47:24,685 - modelscope - INFO - load model done. 2023-09-04 11:47:24,707 - modelscope - INFO - load image denoise model done Total Image: 10 0/10 saved at results/nafnet_denoise/64.jpg 1/10 saved at results/nafnet_denoise/2.jpg 2/10 saved at results/nafnet_denoise/34.jpg 3/10 saved at results/nafnet_denoise/54.jpg 4/10 saved at results/nafnet_denoise/40.jpg 5/10 saved at results/nafnet_denoise/10.jpg 6/10 saved at results/nafnet_denoise/70.jpg 7/10 saved at results/nafnet_denoise/4.png 8/10 saved at results/nafnet_denoise/20.jpg 9/10 saved at results/nafnet_denoise/50.jpg 2023-09-04 11:47:30,906 - modelscope - INFO - PyTorch version 1.13.1+cu117 Found. 2023-09-04 11:47:30,907 - modelscope - INFO - Loading ast index from /root/.cache/modelscope/ast_indexer 2023-09-04 11:47:30,969 - modelscope - INFO - Loading done! 
Current index file version is 1.8.4, with md5 80fa9349fc3e7b04fcfad511918062b1 and a total number of 902 components indexed /mnt/workspace/NAFNet 2023-09-04 11:47:31,522 - modelscope - INFO - initiate model from /mnt/workspace/NAFNet/pretrain_model/cv_nafnet_image-deblur_reds 2023-09-04 11:47:31,522 - modelscope - INFO - initiate model from location /mnt/workspace/NAFNet/pretrain_model/cv_nafnet_image-deblur_reds. 2023-09-04 11:47:31,523 - modelscope - INFO - initialize model from /mnt/workspace/NAFNet/pretrain_model/cv_nafnet_image-deblur_reds 2023-09-04 11:47:32,187 - modelscope - INFO - Loading NAFNet model from /mnt/workspace/NAFNet/pretrain_model/cv_nafnet_image-deblur_reds/pytorch_model.pt, with param key: [params]. 2023-09-04 11:47:32,296 - modelscope - INFO - load model done. 2023-09-04 11:47:32,320 - modelscope - INFO - load image denoise model done Total Image: 10 2023-09-04 11:47:33,402 - modelscope - WARNING - task image-deblurring input definition is missing 2023-09-04 11:47:34,753 - modelscope - WARNING - task image-deblurring output keys are missing 0/10 saved at results/nafnet_de_motion_blur/64.jpg 1/10 saved at results/nafnet_de_motion_blur/2.jpg 2/10 saved at results/nafnet_de_motion_blur/34.jpg 3/10 saved at results/nafnet_de_motion_blur/54.jpg 4/10 saved at results/nafnet_de_motion_blur/40.jpg 5/10 saved at results/nafnet_de_motion_blur/10.jpg 6/10 saved at results/nafnet_de_motion_blur/70.jpg 7/10 saved at results/nafnet_de_motion_blur/4.png 8/10 saved at results/nafnet_de_motion_blur/20.jpg 9/10 saved at results/nafnet_de_motion_blur/50.jpg
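The sample log above shows which pretrained checkpoint wrote to each output folder. cv_nafnet_image-deblur_gopro wrote to results/nafnet_deblur, cv_nafnet_image-denoise_sidd to results/nafnet_denoise, and cv_nafnet_image-deblur_reds to results/nafnet_de_motion_blur. Each run saved the same ten images. You can check your own outputs from Python with the minimal sketch below. It makes two assumptions, and neither is part of the tutorial's code: the helper name `list_results` is hypothetical, and it assumes results/ is relative to the directory you run it from.

```python
from pathlib import Path

# Output folder -> NAFNet checkpoint that produced it, as read from the sample
# log in this tutorial (illustrative mapping; verify against your own run).
NAFNET_TASKS = {
    "nafnet_deblur": "cv_nafnet_image-deblur_gopro",
    "nafnet_denoise": "cv_nafnet_image-denoise_sidd",
    "nafnet_de_motion_blur": "cv_nafnet_image-deblur_reds",
}

# The log shows both .jpg and .png outputs (the input format is preserved).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def list_results(task_name, root="results"):
    """Return the restored images saved under <root>/<task_name>, sorted by name.

    Hypothetical helper; returns an empty list if the task folder does not exist.
    """
    task_dir = Path(root) / task_name
    if not task_dir.is_dir():
        return []
    return sorted(
        p for p in task_dir.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


if __name__ == "__main__":
    for task, model in NAFNET_TASKS.items():
        files = list_results(task)
        print(f"{task} ({model}): {len(files)} image(s)")
```

For this sample run, each of the three folders would report 10 images.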

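Image super-resolution increases an image's resolution and sharpness. The following algorithms are supported; you can choose any one of them to process your images.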

RealESRGAN

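Download the code and pretrained files. After the archive has been downloaded and extracted, you can find the algorithm's source code in the ./Real-ESRGAN folder.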
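Judging from the run logs in this tutorial, each download step fetches a zip archive with wget and then unpacks it with unzip. The archive comes from an OSS -internal endpoint in cn-hangzhou. Internal OSS endpoints are normally reachable only from Alibaba Cloud resources in the same region, such as this DSW instance. If you want to script the same step in Python, here is a minimal standard-library sketch. The helper name `fetch_and_extract` is hypothetical, and the URL is copied from the log.

```python
import posixpath
import shutil
import urllib.parse
import urllib.request
import zipfile
from pathlib import Path

# URL copied from the sample log. The "-internal" OSS endpoint is typically
# reachable only from Alibaba Cloud resources in the same region (cn-hangzhou).
REALESRGAN_URL = (
    "http://pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com"
    "/aigc-data/restoration/repo/realesrgan.zip"
)


def fetch_and_extract(url, dest_dir="."):
    """Download the zip archive at `url` and extract it into `dest_dir`.

    Hypothetical helper mirroring the tutorial's wget + unzip step.
    Returns the sorted top-level entries contained in the archive.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    archive = dest / posixpath.basename(urllib.parse.urlparse(url).path)
    # Stream to disk rather than reading into memory: nafnet.zip in the log
    # is about 900 MB.
    with urllib.request.urlopen(url) as resp, open(archive, "wb") as out:
        shutil.copyfileobj(resp, out)
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(dest)
        top_level = sorted({name.split("/", 1)[0] for name in zf.namelist()})
    return top_level
```

On a DSW instance in cn-hangzhou, `fetch_and_extract(REALESRGAN_URL)` would be expected to return `['Real-ESRGAN']`, matching the top-level folder unzip creates in the log.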

http://pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com/aigc-data/restoration/repo/realesrgan.zip cn-hangzhou --2023-09-05 01:29:05-- http://pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com/aigc-data/restoration/repo/realesrgan.zip Resolving pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com (pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com)... 100.118.28.50, 100.118.28.49, 100.118.28.44, ... Connecting to pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com (pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com)|100.118.28.50|:80... connected. HTTP request sent, awaiting response... 200 OK Length: 151719684 (145M) [application/zip] Saving to: ‘realesrgan.zip’ realesrgan.zip 100%[===================>] 144.69M 11.3MB/s in 13s 2023-09-05 01:29:17 (11.5 MB/s) - ‘realesrgan.zip’ saved [151719684/151719684] Archive: realesrgan.zip creating: Real-ESRGAN/ creating: Real-ESRGAN/.ipynb_checkpoints/ inflating: Real-ESRGAN/.ipynb_checkpoints/inference_realesrgan-checkpoint.py inflating: Real-ESRGAN/setup.cfg creating: Real-ESRGAN/tests/ inflating: Real-ESRGAN/tests/test_discriminator_arch.py inflating: Real-ESRGAN/tests/test_model.py inflating: Real-ESRGAN/tests/test_utils.py creating: Real-ESRGAN/tests/data/ inflating: Real-ESRGAN/tests/data/meta_info_pair.txt inflating: Real-ESRGAN/tests/data/test_realesrnet_model.yml inflating: Real-ESRGAN/tests/data/test_realesrgan_dataset.yml creating: Real-ESRGAN/tests/data/gt.lmdb/ inflating: Real-ESRGAN/tests/data/gt.lmdb/meta_info.txt inflating: Real-ESRGAN/tests/data/gt.lmdb/lock.mdb inflating: Real-ESRGAN/tests/data/gt.lmdb/data.mdb inflating: Real-ESRGAN/tests/data/test_realesrgan_model.yml inflating: Real-ESRGAN/tests/data/meta_info_gt.txt inflating: Real-ESRGAN/tests/data/test_realesrgan_paired_dataset.yml creating: Real-ESRGAN/tests/data/gt/ inflating: Real-ESRGAN/tests/data/gt/comic.png inflating: Real-ESRGAN/tests/data/gt/baboon.png creating: Real-ESRGAN/tests/data/lq.lmdb/ 
inflating: Real-ESRGAN/tests/data/lq.lmdb/meta_info.txt inflating: Real-ESRGAN/tests/data/lq.lmdb/lock.mdb inflating: Real-ESRGAN/tests/data/lq.lmdb/data.mdb creating: Real-ESRGAN/tests/data/lq/ extracting: Real-ESRGAN/tests/data/lq/comic.png inflating: Real-ESRGAN/tests/data/lq/baboon.png inflating: Real-ESRGAN/tests/test_dataset.py inflating: Real-ESRGAN/requirements.txt creating: Real-ESRGAN/assets/ inflating: Real-ESRGAN/assets/teaser.jpg inflating: Real-ESRGAN/assets/realesrgan_logo_ai.png inflating: Real-ESRGAN/assets/realesrgan_logo_gv.png inflating: Real-ESRGAN/assets/realesrgan_logo.png inflating: Real-ESRGAN/assets/realesrgan_logo_gi.png inflating: Real-ESRGAN/assets/teaser-text.png inflating: Real-ESRGAN/assets/realesrgan_logo_av.png creating: Real-ESRGAN/pretrained_model/ inflating: Real-ESRGAN/.gitignore creating: Real-ESRGAN/.vscode/ inflating: Real-ESRGAN/.vscode/settings.json inflating: Real-ESRGAN/demo.py creating: Real-ESRGAN/experiments/ creating: Real-ESRGAN/experiments/pretrained_models/ extracting: Real-ESRGAN/experiments/pretrained_models/README.md inflating: Real-ESRGAN/cog.yaml inflating: Real-ESRGAN/LICENSE creating: Real-ESRGAN/inputs/ inflating: Real-ESRGAN/inputs/wolf_gray.jpg inflating: Real-ESRGAN/inputs/0030.jpg creating: Real-ESRGAN/inputs/video/ inflating: Real-ESRGAN/inputs/video/onepiece_demo.mp4 inflating: Real-ESRGAN/inputs/0014.jpg inflating: Real-ESRGAN/inputs/OST_009.png inflating: Real-ESRGAN/inputs/tree_alpha_16bit.png inflating: Real-ESRGAN/inputs/children-alpha.png inflating: Real-ESRGAN/inputs/00003.png inflating: Real-ESRGAN/inputs/00017_gray.png inflating: Real-ESRGAN/inputs/ADE_val_00000114.jpg inflating: Real-ESRGAN/MANIFEST.in inflating: Real-ESRGAN/.pre-commit-config.yaml creating: Real-ESRGAN/.git/ creating: Real-ESRGAN/.git/logs/ creating: Real-ESRGAN/.git/logs/refs/ creating: Real-ESRGAN/.git/logs/refs/remotes/ creating: Real-ESRGAN/.git/logs/refs/remotes/origin/ inflating: 
Real-ESRGAN/.git/logs/refs/remotes/origin/HEAD creating: Real-ESRGAN/.git/logs/refs/heads/ inflating: Real-ESRGAN/.git/logs/refs/heads/master inflating: Real-ESRGAN/.git/logs/HEAD inflating: Real-ESRGAN/.git/config creating: Real-ESRGAN/.git/refs/ creating: Real-ESRGAN/.git/refs/remotes/ creating: Real-ESRGAN/.git/refs/remotes/origin/ extracting: Real-ESRGAN/.git/refs/remotes/origin/HEAD creating: Real-ESRGAN/.git/refs/heads/ extracting: Real-ESRGAN/.git/refs/heads/master creating: Real-ESRGAN/.git/refs/tags/ extracting: Real-ESRGAN/.git/HEAD creating: Real-ESRGAN/.git/hooks/ inflating: Real-ESRGAN/.git/hooks/push-to-checkout.sample inflating: Real-ESRGAN/.git/hooks/commit-msg.sample inflating: Real-ESRGAN/.git/hooks/applypatch-msg.sample inflating: Real-ESRGAN/.git/hooks/pre-receive.sample inflating: Real-ESRGAN/.git/hooks/pre-push.sample inflating: Real-ESRGAN/.git/hooks/fsmonitor-watchman.sample inflating: Real-ESRGAN/.git/hooks/post-update.sample inflating: Real-ESRGAN/.git/hooks/update.sample inflating: Real-ESRGAN/.git/hooks/pre-merge-commit.sample inflating: Real-ESRGAN/.git/hooks/pre-commit.sample inflating: Real-ESRGAN/.git/hooks/pre-applypatch.sample inflating: Real-ESRGAN/.git/hooks/prepare-commit-msg.sample inflating: Real-ESRGAN/.git/hooks/pre-rebase.sample creating: Real-ESRGAN/.git/info/ inflating: Real-ESRGAN/.git/info/exclude creating: Real-ESRGAN/.git/objects/ creating: Real-ESRGAN/.git/objects/pack/ inflating: Real-ESRGAN/.git/objects/pack/pack-4d4a54ddcab2e146413d01c2262bbf138200efd1.pack inflating: Real-ESRGAN/.git/objects/pack/pack-4d4a54ddcab2e146413d01c2262bbf138200efd1.idx creating: Real-ESRGAN/.git/objects/info/ inflating: Real-ESRGAN/.git/packed-refs inflating: Real-ESRGAN/.git/index inflating: Real-ESRGAN/.git/description inflating: Real-ESRGAN/inference_realesrgan_video.py inflating: Real-ESRGAN/CODE_OF_CONDUCT.md inflating: Real-ESRGAN/README.md creating: Real-ESRGAN/realesrgan/ creating: Real-ESRGAN/realesrgan/.ipynb_checkpoints/ 
inflating: Real-ESRGAN/realesrgan/.ipynb_checkpoints/__init__-checkpoint.py creating: Real-ESRGAN/realesrgan/models/ inflating: Real-ESRGAN/realesrgan/models/realesrnet_model.py creating: Real-ESRGAN/realesrgan/models/__pycache__/ inflating: Real-ESRGAN/realesrgan/models/__pycache__/__init__.cpython-310.pyc inflating: Real-ESRGAN/realesrgan/models/__pycache__/realesrgan_model.cpython-310.pyc inflating: Real-ESRGAN/realesrgan/models/__pycache__/realesrnet_model.cpython-310.pyc inflating: Real-ESRGAN/realesrgan/models/realesrgan_model.py inflating: Real-ESRGAN/realesrgan/models/__init__.py inflating: Real-ESRGAN/realesrgan/train.py creating: Real-ESRGAN/realesrgan/__pycache__/ inflating: Real-ESRGAN/realesrgan/__pycache__/__init__.cpython-310.pyc inflating: Real-ESRGAN/realesrgan/__pycache__/utils.cpython-310.pyc creating: Real-ESRGAN/realesrgan/archs/ inflating: Real-ESRGAN/realesrgan/archs/discriminator_arch.py creating: Real-ESRGAN/realesrgan/archs/__pycache__/ inflating: Real-ESRGAN/realesrgan/archs/__pycache__/__init__.cpython-310.pyc inflating: Real-ESRGAN/realesrgan/archs/__pycache__/srvgg_arch.cpython-310.pyc inflating: Real-ESRGAN/realesrgan/archs/__pycache__/discriminator_arch.cpython-310.pyc inflating: Real-ESRGAN/realesrgan/archs/__init__.py inflating: Real-ESRGAN/realesrgan/archs/srvgg_arch.py creating: Real-ESRGAN/realesrgan/data/ creating: Real-ESRGAN/realesrgan/data/__pycache__/ inflating: Real-ESRGAN/realesrgan/data/__pycache__/realesrgan_paired_dataset.cpython-310.pyc inflating: Real-ESRGAN/realesrgan/data/__pycache__/__init__.cpython-310.pyc inflating: Real-ESRGAN/realesrgan/data/__pycache__/realesrgan_dataset.cpython-310.pyc inflating: Real-ESRGAN/realesrgan/data/realesrgan_dataset.py inflating: Real-ESRGAN/realesrgan/data/realesrgan_paired_dataset.py inflating: Real-ESRGAN/realesrgan/data/__init__.py inflating: Real-ESRGAN/realesrgan/__init__.py inflating: Real-ESRGAN/realesrgan/utils.py inflating: Real-ESRGAN/README_CN.md extracting: 
Real-ESRGAN/VERSION inflating: Real-ESRGAN/setup.py creating: Real-ESRGAN/.github/ creating: Real-ESRGAN/.github/workflows/ inflating: Real-ESRGAN/.github/workflows/no-response.yml inflating: Real-ESRGAN/.github/workflows/release.yml inflating: Real-ESRGAN/.github/workflows/pylint.yml inflating: Real-ESRGAN/.github/workflows/publish-pip.yml creating: Real-ESRGAN/docs/ inflating: Real-ESRGAN/docs/anime_comparisons.md inflating: Real-ESRGAN/docs/FAQ.md inflating: Real-ESRGAN/docs/ncnn_conversion.md inflating: Real-ESRGAN/docs/Training_CN.md inflating: Real-ESRGAN/docs/CONTRIBUTING.md inflating: Real-ESRGAN/docs/anime_video_model.md inflating: Real-ESRGAN/docs/feedback.md inflating: Real-ESRGAN/docs/anime_model.md inflating: Real-ESRGAN/docs/Training.md inflating: Real-ESRGAN/docs/model_zoo.md inflating: Real-ESRGAN/docs/anime_comparisons_CN.md inflating: Real-ESRGAN/cog_predict.py creating: Real-ESRGAN/scripts/ inflating: Real-ESRGAN/scripts/pytorch2onnx.py inflating: Real-ESRGAN/scripts/extract_subimages.py inflating: Real-ESRGAN/scripts/generate_meta_info.py inflating: Real-ESRGAN/scripts/generate_multiscale_DF2K.py inflating: Real-ESRGAN/scripts/generate_meta_info_pairdata.py creating: Real-ESRGAN/weights/ inflating: Real-ESRGAN/weights/RealESRNet_x4plus.pth inflating: Real-ESRGAN/weights/README.md inflating: Real-ESRGAN/weights/RealESRGAN_x4plus.pth inflating: Real-ESRGAN/weights/RealESRGAN_x4plus_anime_6B.pth creating: Real-ESRGAN/options/ inflating: Real-ESRGAN/options/train_realesrnet_x2plus.yml inflating: Real-ESRGAN/options/finetune_realesrgan_x4plus_pairdata.yml inflating: Real-ESRGAN/options/train_realesrnet_x4plus.yml inflating: Real-ESRGAN/options/train_realesrgan_x2plus.yml inflating: Real-ESRGAN/options/train_realesrgan_x4plus.yml inflating: Real-ESRGAN/options/finetune_realesrgan_x4plus.yml

Run the inference tasks you need. When the commands finish successfully, the restored images are in ./results/{task_name}.

Testing 0 10 Tile 1/1 Testing 1 2 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 2 20 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 3 34 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 4 4 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 5 40 Tile 1/1 Testing 6 50 Tile 1/1 Testing 7 54 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 8 64 Tile 1/1 Testing 9 70 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 0 10 Tile 1/1 Testing 1 2 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 2 20 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 3 34 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 4 4 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 5 40 Tile 1/1 Testing 6 50 Tile 1/1 Testing 7 54 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 8 64 Tile 1/1 Testing 9 70 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 0 10 Tile 1/1 Testing 1 2 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 2 20 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 3 34 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 4 4 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 5 40 Tile 1/1 Testing 6 50 Tile 1/1 Testing 7 54 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4 Testing 8 64 Tile 1/1 Testing 9 70 Tile 1/4 Tile 2/4 Tile 3/4 Tile 4/4
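In the Real-ESRGAN run output above, each "Testing <index> <image name>" line is followed by "Tile k/N" progress lines. These appear because large inputs are split into tiles to limit GPU memory use. In the upstream Real-ESRGAN code (RealESRGANer.tile_process), N is the number of tile columns times the number of tile rows. Each is the ceiling of the input width or height, plus optional reflection pre-padding, divided by the tile size.

The sketch below reproduces only that count. The helper names are hypothetical, and the tile size used by this tutorial's demo.py is not shown, so the 400 px in the examples is an assumption. Image 4 shows two rounds of Tile 1/4 to Tile 4/4. This is likely because it is the only PNG input and its alpha channel is upscaled in a separate pass, but that is an inference from the log rather than something the tutorial states.

```python
import math


def tile_count(width, height, tile_size, pre_pad=0):
    """Number of tiles one tiled Real-ESRGAN pass splits an input image into.

    Mirrors the ceiling division in RealESRGANer.tile_process; `pre_pad`
    models the optional reflection padding added to the right and bottom
    edges before tiling. A tile_size of 0 disables tiling upstream, so it
    is rejected here.
    """
    if tile_size <= 0:
        raise ValueError("tile_size must be positive; 0 disables tiling")
    tiles_x = math.ceil((width + pre_pad) / tile_size)
    tiles_y = math.ceil((height + pre_pad) / tile_size)
    return tiles_x * tiles_y


def tile_progress(width, height, tile_size, pre_pad=0):
    """Yield the 'Tile k/N' progress strings in the order the log prints them."""
    total = tile_count(width, height, tile_size, pre_pad)
    for k in range(1, total + 1):
        yield f"Tile {k}/{total}"
```

For example, a 640 × 480 input with 400 px tiles gives 2 × 2 = 4 tiles, the same pattern as the Tile 1/4 to Tile 4/4 lines above. An image no larger than one tile prints only Tile 1/1. The actual sizes of the tutorial photos are not given, so treat these numbers as illustrative.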

SwinIR

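Download the code and pretrained files. After the archive has been downloaded and extracted, you can find the algorithm's source code in the ./SwinIR folder.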

http://pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com/aigc-data/restoration/repo/swinir.zip cn-hangzhou --2023-09-05 01:34:02-- http://pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com/aigc-data/restoration/repo/swinir.zip Resolving pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com (pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com)... 100.118.28.44, 100.118.28.49, 100.118.28.45, ... Connecting to pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com (pai-vision-data-hz2.oss-cn-hangzhou-internal.aliyuncs.com)|100.118.28.44|:80... connected. HTTP request sent, awaiting response... 200 OK Length: 580693761 (554M) [application/zip] Saving to: ‘swinir.zip’ swinir.zip 100%[===================>] 553.79M 11.4MB/s in 51s 2023-09-05 01:34:54 (10.8 MB/s) - ‘swinir.zip’ saved [580693761/580693761] Archive: swinir.zip creating: SwinIR/ creating: SwinIR/.ipynb_checkpoints/ inflating: SwinIR/.ipynb_checkpoints/main_test_swinir-checkpoint.py inflating: SwinIR/.ipynb_checkpoints/predict-checkpoint.py creating: SwinIR/models/ creating: SwinIR/models/__pycache__/ inflating: SwinIR/models/__pycache__/network_swinir.cpython-310.pyc inflating: SwinIR/models/network_swinir.py creating: SwinIR/pretrained_model/ inflating: SwinIR/pretrained_model/004_grayDN_DFWB_s128w8_SwinIR-M_noise50.pth inflating: SwinIR/pretrained_model/006_colorCAR_DFWB_s126w7_SwinIR-M_jpeg10.pth inflating: SwinIR/pretrained_model/004_grayDN_DFWB_s128w8_SwinIR-M_noise25.pth inflating: SwinIR/pretrained_model/005_colorDN_DFWB_s128w8_SwinIR-M_noise50.pth inflating: SwinIR/pretrained_model/001_classicalSR_DF2K_s64w8_SwinIR-M_x4.pth inflating: SwinIR/pretrained_model/006_colorCAR_DFWB_s126w7_SwinIR-M_jpeg40.pth inflating: SwinIR/pretrained_model/006_colorCAR_DFWB_s126w7_SwinIR-M_jpeg20.pth inflating: SwinIR/pretrained_model/006_colorCAR_DFWB_s126w7_SwinIR-M_jpeg30.pth inflating: SwinIR/pretrained_model/005_colorDN_DFWB_s128w8_SwinIR-M_noise15.pth inflating: 
SwinIR/pretrained_model/004_grayDN_DFWB_s128w8_SwinIR-M_noise15.pth inflating: SwinIR/pretrained_model/003_realSR_BSRGAN_DFO_s64w8_SwinIR-M_x4_GAN.pth inflating: SwinIR/pretrained_model/005_colorDN_DFWB_s128w8_SwinIR-M_noise25.pth inflating: SwinIR/demo.py inflating: SwinIR/cog.yaml inflating: SwinIR/LICENSE inflating: SwinIR/download-weights.sh creating: SwinIR/utils/ creating: SwinIR/utils/__pycache__/ inflating: SwinIR/utils/__pycache__/util_calculate_psnr_ssim.cpython-310.pyc inflating: SwinIR/utils/util_calculate_psnr_ssim.py creating: SwinIR/.git/ creating: SwinIR/.git/logs/ creating: SwinIR/.git/logs/refs/ creating: SwinIR/.git/logs/refs/remotes/ creating: SwinIR/.git/logs/refs/remotes/origin/ inflating: SwinIR/.git/logs/refs/remotes/origin/HEAD creating: SwinIR/.git/logs/refs/heads/ inflating: SwinIR/.git/logs/refs/heads/main inflating: SwinIR/.git/logs/HEAD inflating: SwinIR/.git/config creating: SwinIR/.git/refs/ creating: SwinIR/.git/refs/remotes/ creating: SwinIR/.git/refs/remotes/origin/ extracting: SwinIR/.git/refs/remotes/origin/HEAD creating: SwinIR/.git/refs/heads/ extracting: SwinIR/.git/refs/heads/main creating: SwinIR/.git/refs/tags/ extracting: SwinIR/.git/HEAD creating: SwinIR/.git/hooks/ inflating: SwinIR/.git/hooks/push-to-checkout.sample inflating: SwinIR/.git/hooks/commit-msg.sample inflating: SwinIR/.git/hooks/applypatch-msg.sample inflating: SwinIR/.git/hooks/pre-receive.sample inflating: SwinIR/.git/hooks/pre-push.sample inflating: SwinIR/.git/hooks/fsmonitor-watchman.sample inflating: SwinIR/.git/hooks/post-update.sample inflating: SwinIR/.git/hooks/update.sample inflating: SwinIR/.git/hooks/pre-merge-commit.sample inflating: SwinIR/.git/hooks/pre-commit.sample inflating: SwinIR/.git/hooks/pre-applypatch.sample inflating: SwinIR/.git/hooks/prepare-commit-msg.sample inflating: SwinIR/.git/hooks/pre-rebase.sample creating: SwinIR/.git/info/ inflating: SwinIR/.git/info/exclude creating: SwinIR/.git/objects/ creating: 
SwinIR/.git/objects/pack/ inflating: SwinIR/.git/objects/pack/pack-04d185ace674ba8b24be5a9b5fb3304d4c6a4e74.pack inflating: SwinIR/.git/objects/pack/pack-04d185ace674ba8b24be5a9b5fb3304d4c6a4e74.idx creating: SwinIR/.git/objects/info/ inflating: SwinIR/.git/packed-refs inflating: SwinIR/.git/index inflating: SwinIR/.git/description inflating: SwinIR/README.md creating: SwinIR/testsets/ creating: SwinIR/testsets/McMaster/ inflating: SwinIR/testsets/McMaster/17.tif inflating: SwinIR/testsets/McMaster/9.tif inflating: SwinIR/testsets/McMaster/3.tif inflating: SwinIR/testsets/McMaster/5.tif inflating: SwinIR/testsets/McMaster/16.tif inflating: SwinIR/testsets/McMaster/4.tif inflating: SwinIR/testsets/McMaster/12.tif inflating: SwinIR/testsets/McMaster/6.tif inflating: SwinIR/testsets/McMaster/14.tif inflating: SwinIR/testsets/McMaster/7.tif inflating: SwinIR/testsets/McMaster/8.tif inflating: SwinIR/testsets/McMaster/13.tif inflating: SwinIR/testsets/McMaster/1.tif inflating: SwinIR/testsets/McMaster/18.tif inflating: SwinIR/testsets/McMaster/11.tif inflating: SwinIR/testsets/McMaster/2.tif inflating: SwinIR/testsets/McMaster/15.tif inflating: SwinIR/testsets/McMaster/10.tif creating: SwinIR/testsets/classic5/ inflating: SwinIR/testsets/classic5/lena.bmp inflating: SwinIR/testsets/classic5/baboon.bmp inflating: SwinIR/testsets/classic5/barbara.bmp inflating: SwinIR/testsets/classic5/boats.bmp inflating: SwinIR/testsets/classic5/peppers.bmp creating: SwinIR/testsets/Set12/ inflating: SwinIR/testsets/Set12/06.png inflating: SwinIR/testsets/Set12/04.png inflating: SwinIR/testsets/Set12/03.png inflating: SwinIR/testsets/Set12/02.png inflating: SwinIR/testsets/Set12/11.png inflating: SwinIR/testsets/Set12/07.png inflating: SwinIR/testsets/Set12/09.png inflating: SwinIR/testsets/Set12/10.png inflating: SwinIR/testsets/Set12/12.png inflating: SwinIR/testsets/Set12/05.png inflating: SwinIR/testsets/Set12/08.png inflating: SwinIR/testsets/Set12/01.png creating: 
SwinIR/testsets/RealSRSet+5images/ inflating: SwinIR/testsets/RealSRSet+5images/building.png inflating: SwinIR/testsets/RealSRSet+5images/0030.jpg inflating: SwinIR/testsets/RealSRSet+5images/painting.png inflating: SwinIR/testsets/RealSRSet+5images/comic1.png inflating: SwinIR/testsets/RealSRSet+5images/0014.jpg inflating: SwinIR/testsets/RealSRSet+5images/OST_009.png inflating: SwinIR/testsets/RealSRSet+5images/comic2.png inflating: SwinIR/testsets/RealSRSet+5images/pattern.png inflating: SwinIR/testsets/RealSRSet+5images/foreman.png inflating: SwinIR/testsets/RealSRSet+5images/oldphoto3.png inflating: SwinIR/testsets/RealSRSet+5images/oldphoto6.png inflating: SwinIR/testsets/RealSRSet+5images/dog.png inflating: SwinIR/testsets/RealSRSet+5images/Lincoln.png inflating: SwinIR/testsets/RealSRSet+5images/oldphoto2.png inflating: SwinIR/testsets/RealSRSet+5images/00003.png inflating: SwinIR/testsets/RealSRSet+5images/frog.png inflating: SwinIR/testsets/RealSRSet+5images/butterfly.png inflating: SwinIR/testsets/RealSRSet+5images/ppt3.png inflating: SwinIR/testsets/RealSRSet+5images/butterfly2.png inflating: SwinIR/testsets/RealSRSet+5images/computer.png extracting: SwinIR/testsets/RealSRSet+5images/chip.png inflating: SwinIR/testsets/RealSRSet+5images/comic3.png inflating: SwinIR/testsets/RealSRSet+5images/dped_crop00061.png inflating: SwinIR/testsets/RealSRSet+5images/tiger.png inflating: SwinIR/testsets/RealSRSet+5images/ADE_val_00000114.jpg creating: SwinIR/testsets/Set5/ creating: SwinIR/testsets/Set5/HR/ inflating: SwinIR/testsets/Set5/HR/woman.png inflating: SwinIR/testsets/Set5/HR/butterfly.png inflating: SwinIR/testsets/Set5/HR/head.png inflating: SwinIR/testsets/Set5/HR/baby.png inflating: SwinIR/testsets/Set5/HR/bird.png creating: SwinIR/testsets/Set5/LR_bicubic/ creating: SwinIR/testsets/Set5/LR_bicubic/X8/ extracting: SwinIR/testsets/Set5/LR_bicubic/X8/womanx8.png extracting: SwinIR/testsets/Set5/LR_bicubic/X8/headx8.png extracting: 
SwinIR/testsets/Set5/LR_bicubic/X8/birdx8.png
extracting: SwinIR/testsets/Set5/LR_bicubic/X8/babyx8.png
extracting: SwinIR/testsets/Set5/LR_bicubic/X8/butterflyx8.png
creating: SwinIR/testsets/Set5/LR_bicubic/X4/
extracting: SwinIR/testsets/Set5/LR_bicubic/X4/birdx4.png
extracting: SwinIR/testsets/Set5/LR_bicubic/X4/butterflyx4.png
extracting: SwinIR/testsets/Set5/LR_bicubic/X4/babyx4.png
extracting: SwinIR/testsets/Set5/LR_bicubic/X4/womanx4.png
extracting: SwinIR/testsets/Set5/LR_bicubic/X4/headx4.png
creating: SwinIR/testsets/Set5/LR_bicubic/X3/
extracting: SwinIR/testsets/Set5/LR_bicubic/X3/butterflyx3.png
inflating: SwinIR/testsets/Set5/LR_bicubic/X3/babyx3.png
extracting: SwinIR/testsets/Set5/LR_bicubic/X3/womanx3.png
extracting: SwinIR/testsets/Set5/LR_bicubic/X3/birdx3.png
extracting: SwinIR/testsets/Set5/LR_bicubic/X3/headx3.png
creating: SwinIR/testsets/Set5/LR_bicubic/X2/
inflating: SwinIR/testsets/Set5/LR_bicubic/X2/womanx2.png
inflating: SwinIR/testsets/Set5/LR_bicubic/X2/headx2.png
inflating: SwinIR/testsets/Set5/LR_bicubic/X2/butterflyx2.png
inflating: SwinIR/testsets/Set5/LR_bicubic/X2/birdx2.png
inflating: SwinIR/testsets/Set5/LR_bicubic/X2/babyx2.png
creating: SwinIR/figs/
inflating: SwinIR/figs/ETH_SwinIR-L.png
inflating: SwinIR/figs/OST_009_crop_realESRGAN.png
inflating: SwinIR/figs/ETH_realESRGAN.jpg
inflating: SwinIR/figs/SwinIR_archi.png
inflating: SwinIR/figs/OST_009_crop_LR.png
inflating: SwinIR/figs/jepg_compress_artfact_reduction.png
inflating: SwinIR/figs/classic_image_sr.png
inflating: SwinIR/figs/ETH_SwinIR.png
inflating: SwinIR/figs/OST_009_crop_SwinIR.png
inflating: SwinIR/figs/ETH_BSRGAN.png
inflating: SwinIR/figs/color_image_denoising.png
inflating: SwinIR/figs/classic_image_sr_visual.png
inflating: SwinIR/figs/lightweight_image_sr.png
inflating: SwinIR/figs/gray_image_denoising.png
inflating: SwinIR/figs/OST_009_crop_SwinIR-L.png
inflating: SwinIR/figs/OST_009_crop_BSRGAN.png
inflating: SwinIR/figs/real_world_image_sr.png
inflating: SwinIR/figs/ETH_LR.png
creating: SwinIR/model_zoo/
inflating: SwinIR/model_zoo/README.md
inflating: SwinIR/predict.py

Run the inference tasks that suit your needs. After the commands finish successfully, you can view the restored images in ./results/{task_name}.
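The result folders in the log below follow a naming pattern. Each name combines the task with its scale, noise level, or JPEG quality, for example results//swinir_classical_sr_x4 or results//swinir_gray_dn_noise15.

The sketch below rebuilds those names and lists the restored images in a given ./results/{task_name} folder. It rests on several assumptions:

- The helpers result_dir_name and list_results are hypothetical.
- The naming patterns are inferred from this tutorial's log, not taken from SwinIR documentation.
- Only the five tasks that appear in the log are covered.

```python
from pathlib import Path

# Hypothetical mapping inferred from this tutorial's log, e.g.
# results//swinir_classical_sr_x4 and results//swinir_gray_dn_noise15.
# It is not an official SwinIR API.
SR_TASKS = {"classical_sr", "real_sr"}   # folder suffix: _x{scale}
DN_TASKS = {"gray_dn", "color_dn"}       # folder suffix: _noise{noise}
CAR_TASKS = {"color_jpeg_car"}           # folder suffix: _jpeg{jpeg}

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".bmp"}


def result_dir_name(task: str, scale: int = 4, noise: int = 15, jpeg: int = 10) -> str:
    """Build a result folder name such as 'swinir_classical_sr_x4'."""
    if task in SR_TASKS:
        return f"swinir_{task}_x{scale}"
    if task in DN_TASKS:
        return f"swinir_{task}_noise{noise}"
    if task in CAR_TASKS:
        return f"swinir_{task}_jpeg{jpeg}"
    raise ValueError(f"unknown task: {task!r}")


def list_results(task: str, root: str = "./results", **params) -> list[Path]:
    """Return the restored images in root/<task folder>, sorted by file name.

    Returns an empty list if the folder does not exist yet.
    """
    folder = Path(root) / result_dir_name(task, **params)
    if not folder.is_dir():
        return []
    return sorted(p for p in folder.iterdir()
                  if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES)


if __name__ == "__main__":
    # Print how many restored images each task folder currently holds.
    for task in ["classical_sr", "real_sr", "gray_dn", "color_dn", "color_jpeg_car"]:
        print(f"{result_dir_name(task)}: {len(list_results(task))} image(s)")
```

For example, list_results("gray_dn", noise=15) returns the sorted image paths under ./results/swinir_gray_dn_noise15. If that task has not been run yet, it returns an empty list.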

loading model from /mnt/workspace/SwinIR/pretrained_model/001_classicalSR_DF2K_s64w8_SwinIR-M_x4.pth
/usr/local/lib/python3.10/dist-packages/torch/functional.py:504: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at ../aten/src/ATen/native/TensorShape.cpp:3190.)
return _VF.meshgrid(tensors, **kwargs) # type: ignore[attr-defined]
results//swinir_classical_sr_x4
Testing 0 10
Testing 1 2
Testing 2 20
Testing 3 34
Testing 4 4
Testing 5 40
Testing 6 50
Testing 7 54
Testing 8 64
Testing 9 70
loading model from /mnt/workspace/SwinIR/pretrained_model/003_realSR_BSRGAN_DFO_s64w8_SwinIR-M_x4_GAN.pth
/usr/local/lib/python3.10/dist-packages/torch/functional.py:504: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at ../aten/src/ATen/native/TensorShape.cpp:3190.)
return _VF.meshgrid(tensors, **kwargs) # type: ignore[attr-defined]
results//swinir_real_sr_x4
Testing 0 10
Testing 1 2
Testing 2 20
Testing 3 34
Testing 4 4
Testing 5 40
Testing 6 50
Testing 7 54
Testing 8 64
Testing 9 70
loading model from /mnt/workspace/SwinIR/pretrained_model/004_grayDN_DFWB_s128w8_SwinIR-M_noise15.pth
/usr/local/lib/python3.10/dist-packages/torch/functional.py:504: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at ../aten/src/ATen/native/TensorShape.cpp:3190.)
return _VF.meshgrid(tensors, **kwargs) # type: ignore[attr-defined]
results//swinir_gray_dn_noise15
Testing 0 10 - PSNR: 33.61 dB; SSIM: 0.9556; PSNRB: 0.00 dB;PSNR_Y: 0.00 dB; SSIM_Y: 0.0000; PSNRB_Y: 0.00 dB.
Testing 1 2 - PSNR: 33.54 dB; SSIM: 0.9048; PSNRB: 0.00 dB;PSNR_Y: 0.00 dB; SSIM_Y: 0.0000; PSNRB_Y: 0.00 dB.
Testing 2 20 - PSNR: 32.79 dB; SSIM: 0.9033; PSNRB: 0.00 dB;PSNR_Y: 0.00 dB; SSIM_Y: 0.0000; PSNRB_Y: 0.00 dB.
Testing 3 34 - PSNR: 32.80 dB; SSIM: 0.8973; PSNRB: 0.00 dB;PSNR_Y: 0.00 dB; SSIM_Y: 0.0000; PSNRB_Y: 0.00 dB.
Testing 4 4 - PSNR: 39.13 dB; SSIM: 0.9357; PSNRB: 0.00 dB;PSNR_Y: 0.00 dB; SSIM_Y: 0.0000; PSNRB_Y: 0.00 dB.
Testing 5 40 - PSNR: 31.12 dB; SSIM: 0.9500; PSNRB: 0.00 dB;PSNR_Y: 0.00 dB; SSIM_Y: 0.0000; PSNRB_Y: 0.00 dB.
Testing 6 50 - PSNR: 31.57 dB; SSIM: 0.9437; PSNRB: 0.00 dB;PSNR_Y: 0.00 dB; SSIM_Y: 0.0000; PSNRB_Y: 0.00 dB.
Testing 7 54 - PSNR: 36.47 dB; SSIM: 0.9115; PSNRB: 0.00 dB;PSNR_Y: 0.00 dB; SSIM_Y: 0.0000; PSNRB_Y: 0.00 dB.
Testing 8 64 - PSNR: 35.19 dB; SSIM: 0.9507; PSNRB: 0.00 dB;PSNR_Y: 0.00 dB; SSIM_Y: 0.0000; PSNRB_Y: 0.00 dB.
Testing 9 70 - PSNR: 31.88 dB; SSIM: 0.8856; PSNRB: 0.00 dB;PSNR_Y: 0.00 dB; SSIM_Y: 0.0000; PSNRB_Y: 0.00 dB.
results//swinir_gray_dn_noise15
-- Average PSNR/SSIM(RGB): 33.81 dB; 0.9238
loading model from /mnt/workspace/SwinIR/pretrained_model/005_colorDN_DFWB_s128w8_SwinIR-M_noise15.pth
/usr/local/lib/python3.10/dist-packages/torch/functional.py:504: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at ../aten/src/ATen/native/TensorShape.cpp:3190.)
return _VF.meshgrid(tensors, **kwargs) # type: ignore[attr-defined]
results//swinir_color_dn_noise15
Testing 0 10 - PSNR: 36.38 dB; SSIM: 0.9705; PSNRB: 0.00 dB;PSNR_Y: 37.74 dB; SSIM_Y: 0.9749; PSNRB_Y: 0.00 dB.
Testing 1 2 - PSNR: 35.28 dB; SSIM: 0.9327; PSNRB: 0.00 dB;PSNR_Y: 37.29 dB; SSIM_Y: 0.9463; PSNRB_Y: 0.00 dB.
Testing 2 20 - PSNR: 35.06 dB; SSIM: 0.9315; PSNRB: 0.00 dB;PSNR_Y: 36.52 dB; SSIM_Y: 0.9432; PSNRB_Y: 0.00 dB.
Testing 3 34 - PSNR: 35.23 dB; SSIM: 0.9307; PSNRB: 0.00 dB;PSNR_Y: 36.58 dB; SSIM_Y: 0.9418; PSNRB_Y: 0.00 dB.
Testing 4 4 - PSNR: 39.07 dB; SSIM: 0.9320; PSNRB: 0.00 dB;PSNR_Y: 41.84 dB; SSIM_Y: 0.9568; PSNRB_Y: 0.00 dB.
Testing 5 40 - PSNR: 34.48 dB; SSIM: 0.9716; PSNRB: 0.00 dB;PSNR_Y: 35.83 dB; SSIM_Y: 0.9751; PSNRB_Y: 0.00 dB.
Testing 6 50 - PSNR: 34.92 dB; SSIM: 0.9648; PSNRB: 0.00 dB;PSNR_Y: 36.27 dB; SSIM_Y: 0.9702; PSNRB_Y: 0.00 dB.
Testing 7 54 - PSNR: 38.24 dB; SSIM: 0.9331; PSNRB: 0.00 dB;PSNR_Y: 39.60 dB; SSIM_Y: 0.9463; PSNRB_Y: 0.00 dB.
Testing 8 64 - PSNR: 37.77 dB; SSIM: 0.9678; PSNRB: 0.00 dB;PSNR_Y: 39.14 dB; SSIM_Y: 0.9733; PSNRB_Y: 0.00 dB.
Testing 9 70 - PSNR: 34.51 dB; SSIM: 0.9226; PSNRB: 0.00 dB;PSNR_Y: 35.85 dB; SSIM_Y: 0.9349; PSNRB_Y: 0.00 dB.
results//swinir_color_dn_noise15
-- Average PSNR/SSIM(RGB): 36.10 dB; 0.9457
-- Average PSNR_Y/SSIM_Y: 37.67 dB; 0.9563
loading model from /mnt/workspace/SwinIR/pretrained_model/006_colorCAR_DFWB_s126w7_SwinIR-M_jpeg10.pth
/usr/local/lib/python3.10/dist-packages/torch/functional.py:504: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at ../aten/src/ATen/native/TensorShape.cpp:3190.)
return _VF.meshgrid(tensors, **kwargs) # type: ignore[attr-defined]
results//swinir_color_jpeg_car_jpeg10
Testing 0 10
Testing 1 2
Testing 2 20
Testing 3 34
Testing 4 4
Testing 5 40
Testing 6 50
Testing 7 54
Testing 8 64
Testing 9 70
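For the denoising tasks, the log above reports a PSNR value for each image and an average over the test images. The sketch below shows two things:

- How PSNR is defined: PSNR = 10·log10(MAX²/MSE).
- That the "Average PSNR" line is the arithmetic mean of the per-image values.

This is a plain-Python illustration with these assumptions:

- The function names psnr and average are hypothetical.
- Images are 8-bit (MAX = 255) and flattened into equal-length pixel sequences.
- SwinIR's own evaluation code also handles details such as border cropping and the Y-channel variant (PSNR_Y). Those details are not reproduced here.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], restored: Sequence[float],
         max_val: float = 255.0) -> float:
    """PSNR in dB: 10 * log10(max_val**2 / MSE) over equal-length pixel sequences.

    Assumes 8-bit data by default (max_val=255). Identical inputs have
    MSE == 0 and therefore infinite PSNR.
    """
    if not reference or len(reference) != len(restored):
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixel values.
    mse = sum((a - b) ** 2 for a, b in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf
    return 10 * math.log10(max_val ** 2 / mse)


def average(values: Sequence[float]) -> float:
    """Arithmetic mean, as in the 'Average PSNR/SSIM(RGB)' summary line."""
    return sum(values) / len(values)


# Per-image PSNR values (dB) copied from the gray_dn_noise15 log above.
gray_dn_psnr = [33.61, 33.54, 32.79, 32.80, 39.13, 31.12, 31.57, 36.47, 35.19, 31.88]
print(f"{average(gray_dn_psnr):.2f} dB")  # the log reports 33.81 dB
```

Averaging the ten gray_dn values gives 33.81 dB, which matches the reported average. The printed per-image values are rounded, however, so recomputing other averages this way can differ in the last digit. For example, the color_dn values give 36.09 instead of the reported 36.10.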

HAT

下载代码和预训练文件。下载解压完成后,您可以在

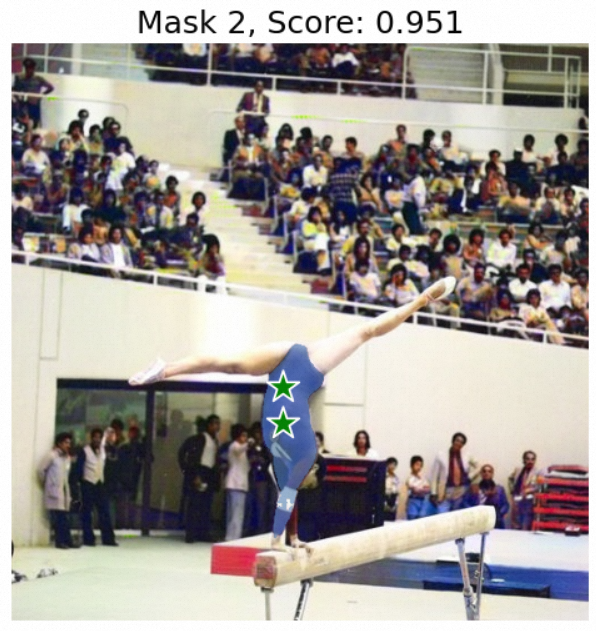

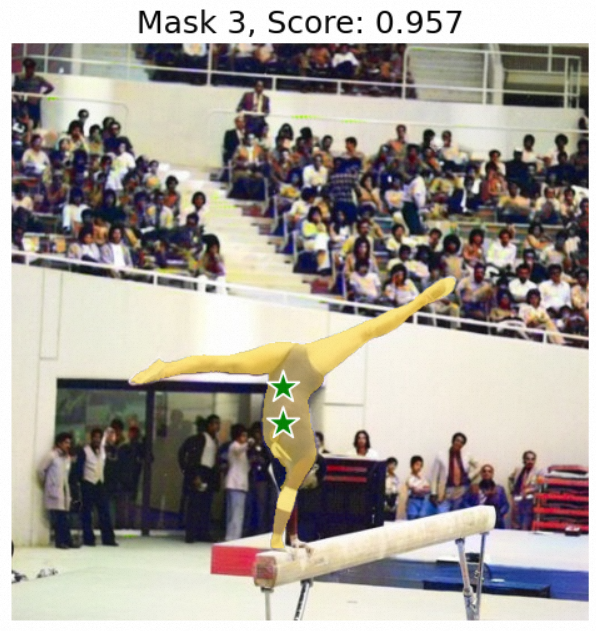

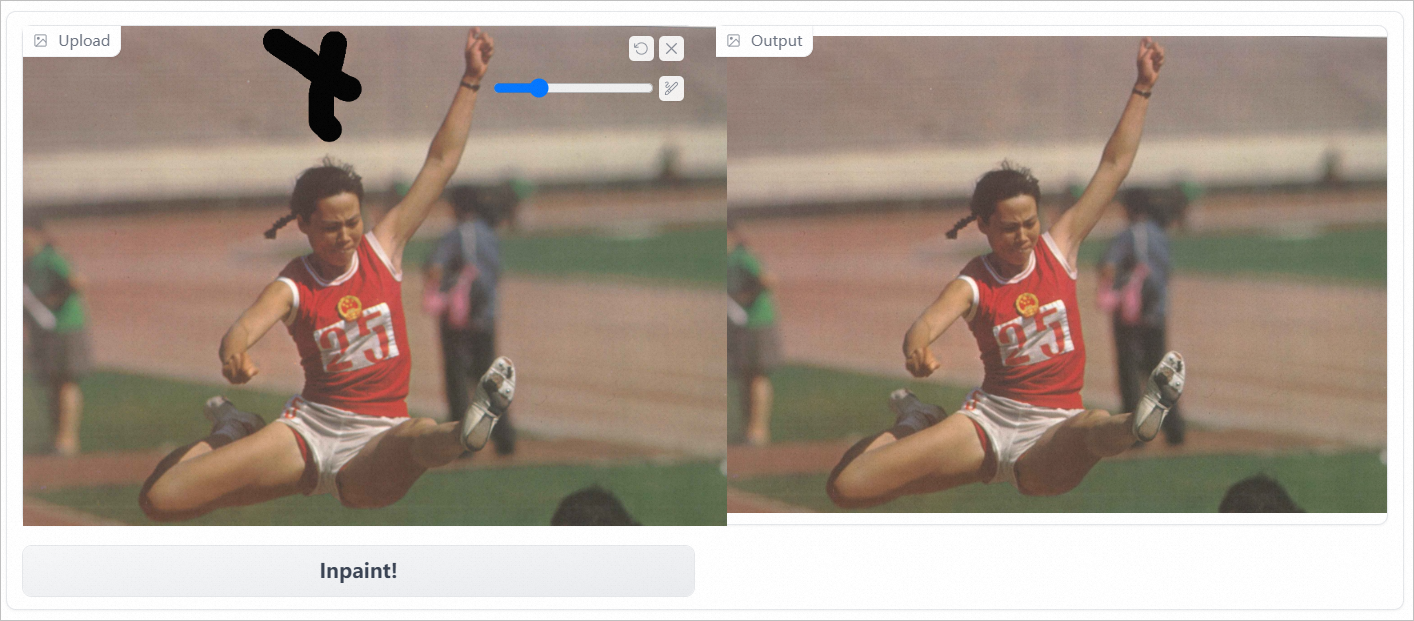

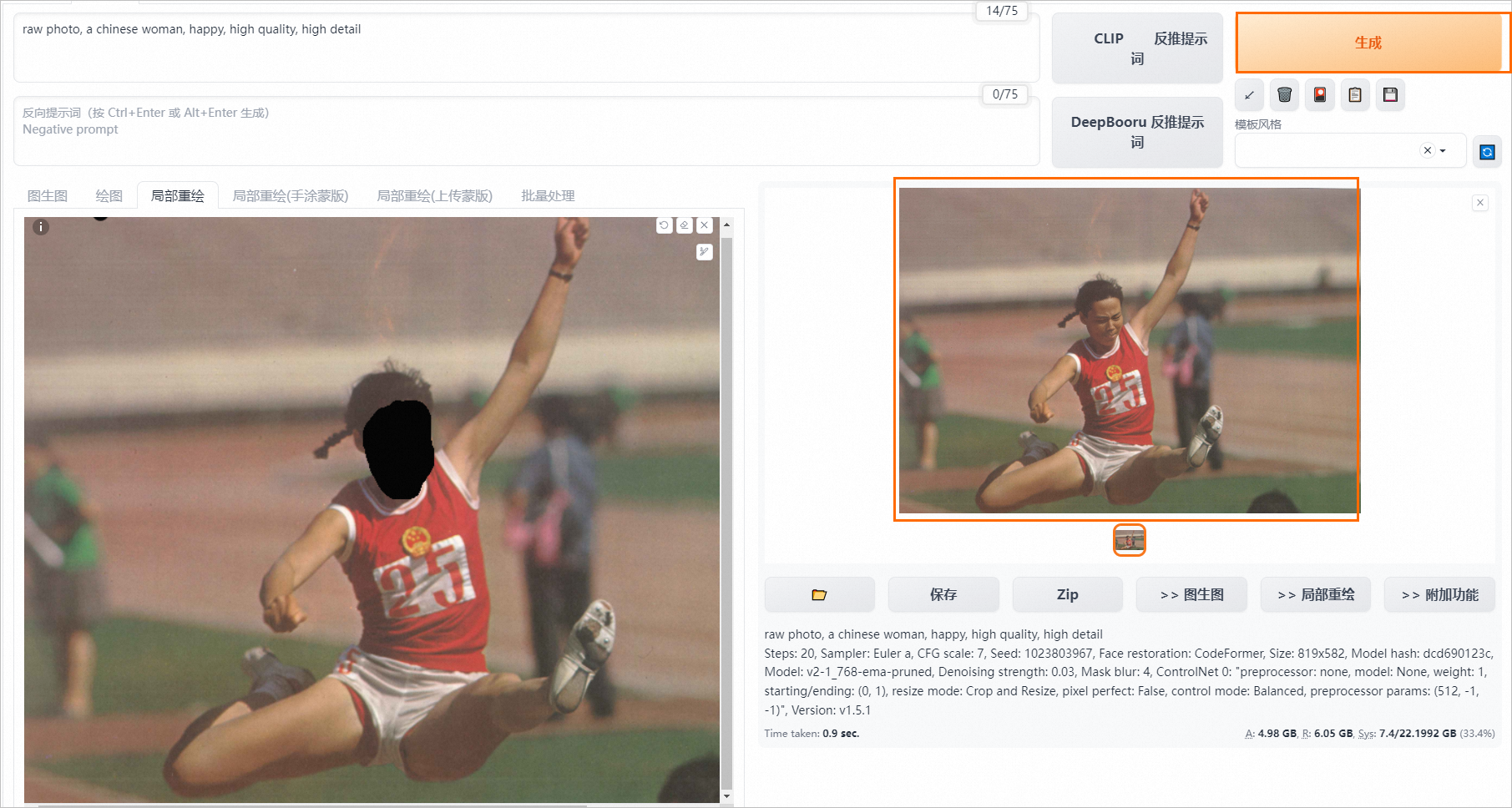

./HAT文件夹中查看该算法的源代码。单击此处查看运行结果
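To confirm that the HAT download and extraction succeeded, you can check the ./HAT folder from the notebook. The sketch below rests on these assumptions:

- The helper names find_checkpoints and count_sources are hypothetical.
- The pretrained weights are PyTorch .pth files somewhere under ./HAT, as the SwinIR weights in the log above are.
- The tutorial does not state the exact subfolder, so the search is recursive.

```python
from pathlib import Path


def find_checkpoints(repo_dir: str = "./HAT", pattern: str = "*.pth") -> list[Path]:
    """Recursively list weight files matching `pattern` under repo_dir, sorted.

    Assumption: the pretrained files are .pth files somewhere under ./HAT;
    change `pattern` if the downloaded files use another extension.
    """
    root = Path(repo_dir)
    if not root.is_dir():
        raise FileNotFoundError(f"{root} not found; rerun the download/extract step")
    return sorted(p for p in root.rglob(pattern) if p.is_file())


def count_sources(repo_dir: str = "./HAT") -> int:
    """Count the Python source files under repo_dir."""
    return sum(1 for p in Path(repo_dir).rglob("*.py") if p.is_file())


if __name__ == "__main__":
    try:
        ckpts = find_checkpoints()
    except FileNotFoundError as err:
        print(err)
    else:
        print(f"{count_sources()} Python file(s), {len(ckpts)} checkpoint(s)")
        for p in ckpts:
            print(" ", p)
```

Searching recursively keeps the check independent of HAT's internal folder layout. The cost is that it may also pick up unrelated .pth files placed inside ./HAT.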