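Prefill/Decode disaggregation (PD disaggregation) is a mainstream LLM inference optimization technique. LLM inference has two core stages, prefill and decode. PD disaggregation decouples them and deploys them on separate GPUs, which avoids resource contention between the stages. This significantly reduces TPOT (time per output token) and improves system throughput. Using the Qwen3-32B model as an example, this topic demonstrates how to use Gateway with Inference Extension to configure inference routing for an SGLang PD-disaggregated inference service deployed in ACK.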

Before reading this topic, make sure that you understand the concepts of InferencePool and InferenceModel.

For more background on PD disaggregation, see Deploy an SGLang PD-disaggregated inference service.

This topic requires Gateway with Inference Extension 1.4.0 or later. Make sure that Enable Gateway API Inference Extension is selected when you install the component.

Prerequisites

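An ACK cluster that runs Kubernetes 1.22 or later has been created, and GPU nodes have been added to the cluster. For more information, see Create an ACK managed cluster and Add GPU nodes to a cluster.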

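The cluster must have at least 6 GPUs, and each GPU must have at least 32 GB of memory. The SGLang PD disaggregation framework relies on GPU Direct RDMA (GDR) for data transfer, so the selected instance type must support Elastic RDMA (eRDMA). We recommend the ecs.ebmgn8is.32xlarge instance type. For more instance types, see Instance families of ECS Bare Metal Instances.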

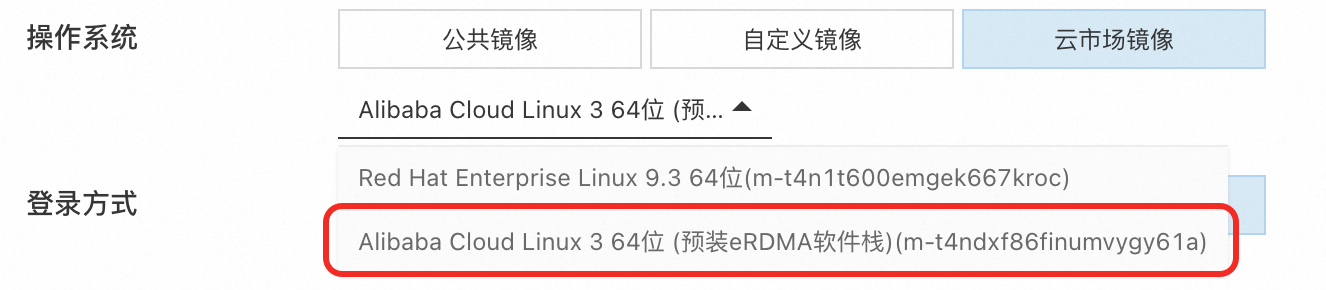

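Node OS image: eRDMA requires a supporting software stack. When you create the node pool, we recommend that you go to Operating System > Marketplace Image and select the Alibaba Cloud Linux 3 64-bit (pre-installed eRDMA software stack) image. For more information, see Add eRDMA nodes in ACK.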

The ACK eRDMA Controller component has been installed and configured in the cluster. For more information, see Use eRDMA to accelerate container networking.

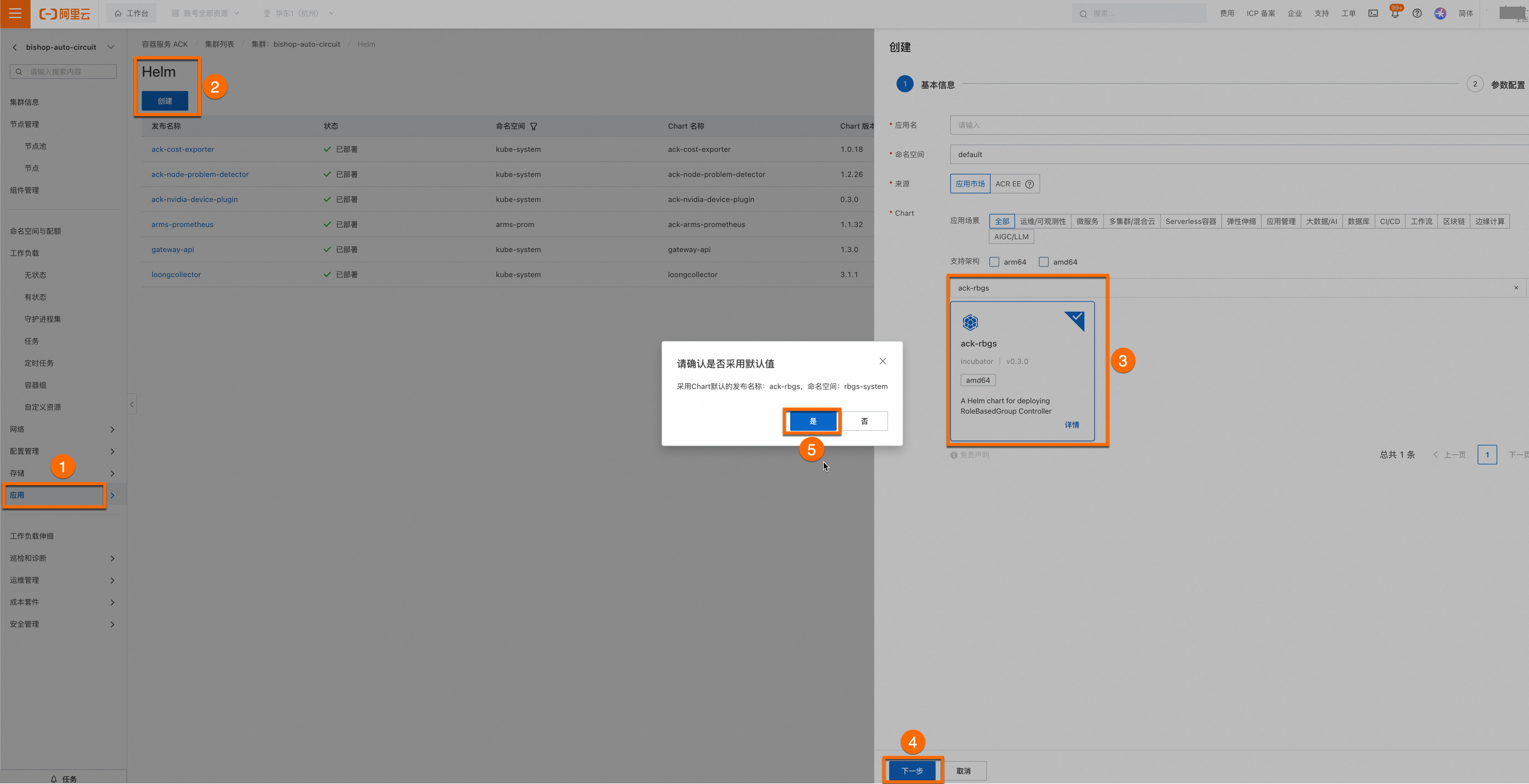

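The ack-rbgs component has been installed. To install it, perform the following steps.

Log on to the ACK console. In the left-side navigation pane, click Clusters. Click the name of the target cluster to open its details page, and use Helm to install the ack-rbgs component in the cluster. You do not need to configure an application name or namespace. After you click Next, a confirmation dialog box appears. Click Yes to use the default application name (ack-rbgs) and namespace (rbgs-system). Then select the latest chart version and click OK to complete the installation.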

Model deployment

Step 1: Prepare the Qwen3-32B model files

Download the Qwen3-32B model from ModelScope.

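Make sure that the git-lfs extension is installed. If it is not, run `yum install git-lfs` or `apt-get install git-lfs`. For other installation methods, see Install git-lfs.

```shell
git lfs install
GIT_LFS_SKIP_SMUDGE=1 git clone https://www.modelscope.cn/Qwen/Qwen3-32B.git
cd Qwen3-32B/
git lfs pull
```

Create a directory in OSS and upload the model to OSS.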
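The clone uses `GIT_LFS_SKIP_SMUDGE=1`, so the weight files start out as small Git LFS pointer stubs. They are replaced with real content only by `git lfs pull`, and an interrupted pull can leave stubs behind that would then be uploaded to OSS. Before you upload, you can check for leftover stubs. The following is a minimal POSIX shell sketch. It assumes that a stub can be identified by the `version https://git-lfs.github.com/spec/v1` line defined in the Git LFS pointer format. The function name `find_lfs_pointers` is hypothetical.

```shell
# Hypothetical helper (not part of git-lfs): list files under a model
# directory that are still Git LFS pointer stubs instead of real content.
# Returns 0 if no stubs remain, 1 if at least one stub was found.
find_lfs_pointers() {
    # Skip the .git directory. Stubs are small text files, so only files
    # matched by -size -2k are scanned for the pointer version line.
    pointers=$(find "$1" -name .git -prune -o -type f -size -2k \
        -exec grep -l -x -F 'version https://git-lfs.github.com/spec/v1' {} +) || true
    if [ -n "$pointers" ]; then
        printf '%s\n' "$pointers"
        return 1
    fi
    return 0
}
```

For example, run `find_lfs_pointers .` inside the Qwen3-32B directory after `git lfs pull`. If it prints any paths, run `git lfs pull` again before you upload the model.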

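For information about how to install and use ossutil, see Install ossutil. Run the following commands from the parent directory of Qwen3-32B.

```shell
ossutil mkdir oss://<your-bucket-name>/Qwen3-32B
ossutil cp -r ./Qwen3-32B oss://<your-bucket-name>/Qwen3-32B
```

Create a PV and a PVC. Configure a persistent volume (PV) and a persistent volume claim (PVC), both named llm-model, for the target cluster. For more information, see Use ossfs 1.0 statically provisioned volumes. Create a file named llm-model.yaml that contains the Secret, the static PV, and the static PVC.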

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: oss-secret
stringData:
  akId: <your-oss-ak> # AccessKey ID used to access OSS
  akSecret: <your-oss-sk> # AccessKey secret used to access OSS
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: llm-model
  labels:
    alicloud-pvname: llm-model
spec:
  capacity:
    storage: 30Gi
  accessModes:
    - ReadOnlyMany
  persistentVolumeReclaimPolicy: Retain
  csi:
    driver: ossplugin.csi.alibabacloud.com
    volumeHandle: llm-model
    nodePublishSecretRef:
      name: oss-secret
      namespace: default
    volumeAttributes:
      bucket: <your-bucket-name> # Bucket name
      url: <your-bucket-endpoint> # Endpoint, for example oss-cn-hangzhou-internal.aliyuncs.com
      otherOpts: "-o umask=022 -o max_stat_cache_size=0 -o allow_other"
      path: <your-model-path> # /Qwen3-32B/ in this example
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: llm-model
spec:
  accessModes:
    - ReadOnlyMany
  resources:
    requests:
      storage: 30Gi
  selector:
    matchLabels:
      alicloud-pvname: llm-model
```

Create the Secret, the static PV, and the static PVC.

```shell
kubectl create -f llm-model.yaml
```
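The PVC binds to the statically provisioned PV through the `alicloud-pvname: llm-model` label selector. If the PVC stays unbound, the PVC and PV usually do not match, for example in the label or the access modes. The following POSIX shell sketch is a one-shot check that reads the PVC phase and its bound volume with `kubectl get -o jsonpath`. The function name and its default arguments are illustrative assumptions.

```shell
# Hypothetical one-shot check: confirm that a PVC is Bound to the expected PV.
# Usage: check_pvc_bound [pvc-name] [pv-name]   (both default to llm-model)
check_pvc_bound() {
    pvc="${1:-llm-model}"
    pv="${2:-llm-model}"
    phase=$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}') || return 1
    # .spec.volumeName records which PV the claim is bound to.
    bound_to=$(kubectl get pvc "$pvc" -o jsonpath='{.spec.volumeName}') || return 1
    if [ "$phase" = "Bound" ] && [ "$bound_to" = "$pv" ]; then
        echo "PVC $pvc is Bound to PV $pv"
        return 0
    fi
    echo "PVC $pvc: phase=${phase:-unknown}, volumeName=${bound_to:-none}; expected Bound to $pv" >&2
    return 1
}
```

If the check fails, `kubectl describe pvc llm-model` shows the events that explain why binding did not happen.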

Step 2: Deploy the SGLang PD-disaggregated inference service

Create a file named sglang_pd.yaml.

Deploy the SGLang PD-disaggregated inference service.

```shell
kubectl create -f sglang_pd.yaml
```
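Loading the model weights from the OSS-backed volume can take several minutes. Also, `kubectl wait` with a label selector exits with an error if no pod matches yet. The following sketch therefore polls until matching pods exist and then waits for them to become Ready. It assumes that sglang_pd.yaml labels both the prefill and decode pods with `alibabacloud.com/inference_backend: sglang`, the label that the InferencePool in the next section selects on. The function name and timeouts are hypothetical.

```shell
# Hypothetical helper: wait until pods matching a label selector exist and are Ready.
# Usage: wait_for_sglang_pods [selector] [timeout-seconds] [interval-seconds]
wait_for_sglang_pods() {
    selector="${1:-alibabacloud.com/inference_backend=sglang}"
    timeout="${2:-1800}"
    interval="${3:-10}"
    elapsed=0
    # Poll until at least one pod matches, because kubectl wait fails on no match.
    while [ -z "$(kubectl get pods -l "$selector" -o name 2>/dev/null)" ]; do
        if [ "$elapsed" -ge "$timeout" ]; then
            echo "no pods match $selector after ${timeout}s" >&2
            return 1
        fi
        sleep "$interval"
        elapsed=$((elapsed + interval))
    done
    remaining=$((timeout - elapsed))
    if [ "$remaining" -lt 1 ]; then remaining=1; fi
    # kubectl wait only covers pods that exist when it starts; rerun it if
    # more pods are created afterward.
    kubectl wait --for=condition=Ready pod -l "$selector" --timeout="${remaining}s"
}
```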

Inference routing deployment

Step 1: Deploy the inference routing policy

Create a file named inference-policy.yaml.

```yaml
# The InferencePool enables inference routing for the workloads
apiVersion: inference.networking.x-k8s.io/v1alpha2
kind: InferencePool
metadata:
  name: qwen-inference-pool
spec:
  targetPortNumber: 8000
  selector:
    alibabacloud.com/inference_backend: sglang # Selects both the prefill and decode workloads
---
# The InferenceTrafficPolicy specifies the traffic policy applied to the InferencePool
apiVersion: inferenceextension.alibabacloud.com/v1alpha1
kind: InferenceTrafficPolicy
metadata:
  name: inference-policy
spec:
  poolRef:
    name: qwen-inference-pool
  modelServerRuntime: sglang # The backend serving framework is SGLang
  profile:
    pd: # The backend is deployed with PD disaggregation
      pdRoleLabelName: rolebasedgroup.workloads.x-k8s.io/role # Pod label that distinguishes the prefill and decode roles in the InferencePool
      kvTransfer:
        bootstrapPort: 34000 # Bootstrap port used for KV cache transfer in SGLang PD disaggregation; must match the disaggregation-bootstrap-port argument in the RoleBasedGroup deployment
```

Deploy the inference routing policy.

```shell
kubectl apply -f inference-policy.yaml
```
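The PD profile uses the `rolebasedgroup.workloads.x-k8s.io/role` pod label to tell prefill pods from decode pods among the pods selected by `alibabacloud.com/inference_backend: sglang`. The following sketch counts the selected pods per role value, so you can confirm that both roles are present. The role names `prefill` and `decode` are assumptions. If sglang_pd.yaml defines different role names, pass those names as arguments.

```shell
# Hypothetical check: count the pods selected by the InferencePool per PD role.
# Usage: check_pd_roles [role ...]   (defaults to the assumed roles prefill decode)
POOL_SELECTOR='alibabacloud.com/inference_backend=sglang'
# Dots inside the label key are escaped for kubectl's JSONPath syntax.
ROLE_JSONPATH='{range .items[*]}{.metadata.labels.rolebasedgroup\.workloads\.x-k8s\.io/role}{"\n"}{end}'

check_pd_roles() {
    roles=$(kubectl get pods -l "$POOL_SELECTOR" -o jsonpath="$ROLE_JSONPATH") || return 1
    if [ "$#" -eq 0 ]; then set -- prefill decode; fi
    rc=0
    for role in "$@"; do
        # grep -c prints 0 (and exits 1) when no line matches the role exactly.
        n=$(printf '%s\n' "$roles" | grep -c -x "$role") || true
        echo "$role: $n pod(s)"
        if [ "$n" -eq 0 ]; then rc=1; fi
    done
    return "$rc"
}
```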

Step 2: Deploy the gateway and gateway routing rules

Create a file named inference-gateway.yaml. It contains the gateway, the gateway routing rule, and a backend timeout rule for the gateway.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: inference-gateway
spec:
  gatewayClassName: ack-gateway
  listeners:
    - name: http-llm
      protocol: HTTP
      port: 8080
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: inference-route
spec:
  parentRefs:
    - name: inference-gateway
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /v1
      backendRefs:
        - name: qwen-inference-pool
          kind: InferencePool
          group: inference.networking.x-k8s.io
---
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: BackendTrafficPolicy
metadata:
  name: backend-timeout
spec:
  timeout:
    http:
      requestTimeout: 24h
  targetRef:
    group: gateway.networking.k8s.io
    kind: Gateway
    name: inference-gateway
```

Deploy the gateway and routing rules.

```shell
kubectl apply -f inference-gateway.yaml
```
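The gateway address may not be assigned right after the apply. If you read `.status.addresses[0].value` too early, `GATEWAY_IP` can be empty. The following POSIX shell sketch polls the Gateway status until an address appears. The function name and default timeout are hypothetical.

```shell
# Hypothetical helper: poll a Gateway until an address is assigned, then print it.
# Usage: wait_for_gateway_ip [gateway-name] [timeout-seconds] [interval-seconds]
wait_for_gateway_ip() {
    gw="${1:-inference-gateway}"
    timeout="${2:-300}"
    interval="${3:-5}"
    elapsed=0
    while :; do
        ip=$(kubectl get "gateway/$gw" -o jsonpath='{.status.addresses[0].value}' 2>/dev/null) || ip=""
        if [ -n "$ip" ]; then
            printf '%s\n' "$ip"
            return 0
        fi
        if [ "$elapsed" -ge "$timeout" ]; then
            echo "gateway/$gw has no address after ${timeout}s" >&2
            return 1
        fi
        sleep "$interval"
        elapsed=$((elapsed + interval))
    done
}
```

For example, `GATEWAY_IP=$(wait_for_gateway_ip) && export GATEWAY_IP` exports the variable only when an address was actually found. A plain `export GATEWAY_IP=$(...)` would hide the failure, because it reports the exit status of `export`, not of the command.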

Step 3: Verify inference routing for the SGLang PD-disaggregated service

Obtain the IP address of the gateway.

```shell
export GATEWAY_IP=$(kubectl get gateway/inference-gateway -o jsonpath='{.status.addresses[0].value}')
```

Verify that the gateway routes plain HTTP requests on port 8080 to the inference service.

```shell
curl http://$GATEWAY_IP:8080/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "/models/Qwen3-32B",
    "messages": [
      {"role": "user", "content": "你好,这是一个测试"}
    ],
    "max_tokens": 50
  }'
```

Expected output:

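```json
{
  "id": "02ceade4e6f34aeb98c2819b8a2545d6",
  "object": "chat.completion",
  "created": 1755589644,
  "model": "/models/Qwen3-32B",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "<think>\n好的,用户发来“你好,这是一个测试”,看起来是在测试我的反应。首先,我需要确认用户的需求是什么。可能的情况是他们想看看我的回复是否符合预期,或者检查是否有错误。我应该保持友好和",
        "reasoning_content": null,
        "tool_calls": null
      },
      "logprobs": null,
      "finish_reason": "length",
      "matched_stop": null
    }
  ],
  "usage": {
    "prompt_tokens": 12,
    "total_tokens": 62,
    "completion_tokens": 50,
    "prompt_tokens_details": null
  }
}
```

The output shows that Gateway with Inference Extension correctly schedules requests sent to the SGLang PD-disaggregated inference service, and that the service generates a response for the given input. The reply is truncated and `finish_reason` is `length` because generation stopped at the `max_tokens` limit of 50 (`completion_tokens` is 50).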
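A single request goes through only one routing decision. To send a few more requests through the gateway and confirm that they all succeed, you can use a loop like the following sketch. It reuses the request format above with a shorter prompt. It assumes that `GATEWAY_IP` has been exported. The function name, prompt, and request count are hypothetical.

```shell
# Hypothetical smoke test: send several small chat requests through the gateway
# and report how many returned HTTP 200. Requires GATEWAY_IP to be set.
smoke_test_gateway() {
    if [ -z "${GATEWAY_IP:-}" ]; then
        echo "GATEWAY_IP is not set" >&2
        return 1
    fi
    total="${1:-5}"
    ok=0
    i=0
    while [ "$i" -lt "$total" ]; do
        # -w prints only the HTTP status code; the response body is discarded.
        code=$(curl -s -o /dev/null -w '%{http_code}' \
            "http://${GATEWAY_IP}:8080/v1/chat/completions" \
            -H "Content-Type: application/json" \
            -d '{"model": "/models/Qwen3-32B", "messages": [{"role": "user", "content": "ping"}], "max_tokens": 8}') || code=000
        if [ "$code" = "200" ]; then ok=$((ok + 1)); fi
        i=$((i + 1))
    done
    echo "$ok/$total requests returned HTTP 200"
    [ "$ok" -eq "$total" ]
}
```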