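This topic describes how to upgrade the EMRHOOK component in a Gateway environment.

Background

Scenario: you run compute jobs in an EMR Gateway environment that was custom-deployed with EMR-CLI, and you use either the access-count metric in the Data Lake Formation (DLF) data overview or the lifecycle rules based on data access frequency. In this case, you must upgrade the EMRHOOK component manually.

The upgrade does not affect compute jobs that are already running. Jobs started after the upgrade completes use the upgraded component automatically.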

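Applicable conditions

The EMR cluster uses Data Lake Formation (DLF) for metadata management.

The following operations apply only to Gateway clusters that were custom-deployed with EMR-CLI.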

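Upgrade procedure (for EMR-5.10.1 or later in the 5.x line, and EMR-3.44.1 or later in the 3.x line)

Step 1: Upgrade the JAR packages

Log on to the Gateway over SSH and run the following script (root privileges are required). Replace ${region} with the current region, for example cn-hangzhou.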

sudo mkdir -p /opt/apps/EMRHOOK/upgrade/

sudo wget https://dlf-repo-${region}.oss-${region}-internal.aliyuncs.com/emrhook/latest/emrhook.tar.gz -P /opt/apps/EMRHOOK/upgrade

sudo tar -p -zxf /opt/apps/EMRHOOK/upgrade/emrhook.tar.gz -C /opt/apps/EMRHOOK/upgrade/

sudo cp -p /opt/apps/EMRHOOK/upgrade/emrhook/* /opt/apps/EMRHOOK/emrhook-current/

Step 2: Modify the Hive configuration

The value of ${hive-jar} depends on the Hive version: for Hive2, use hive-hook-hive23.jar; for Hive3, use hive-hook-hive31.jar.
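The version-to-JAR mapping described here can be captured in a small helper. This is a minimal sketch under stated assumptions: the function name `hive_hook_jar` and the convention of passing the Hive version as an argument are hypothetical and are not part of EMRHOOK or EMR-CLI. Only the two JAR names come from this topic.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of EMRHOOK): map a Hive version string
# to the hook JAR name that this topic gives for that major version.
hive_hook_jar() {
  case "$1" in
    2|2.*) echo "hive-hook-hive23.jar" ;;
    3|3.*) echo "hive-hook-hive31.jar" ;;
    *) echo "unsupported Hive version: $1" >&2; return 1 ;;
  esac
}

# Usage: resolve the value to substitute for ${hive-jar}.
# hive_jar="$(hive_hook_jar 3.1.3)"
```

Failing loudly on an unrecognized version avoids writing an empty JAR name into the configuration.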

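Configuration file | Configuration item | Configuration value |
hive-site.xml (/etc/taihao-apps/hive-conf/hive-site.xml) | | Append to the end of the existing value. Note: the separator is a comma. |
| | Add. |
hive-env.sh (/etc/taihao-apps/hive-conf/hive-env.sh) | | Append to the end of the existing value. Note: the separator is a comma. |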

Step 3: Modify the Spark configuration

The value of ${spark-jar} depends on the Spark version: for Spark2, use spark-hook-spark24.jar; for Spark3, use spark-hook-spark30.jar.
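Before editing the Spark configuration, it can help to confirm that the selected JAR was actually copied in step 1. The sketch below is illustrative only: the function names `spark_hook_jar` and `spark_hook_jar_path` are hypothetical, and the default directory is the emrhook-current path used by the copy command in step 1.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of EMRHOOK): map a Spark version string
# to the hook JAR name that this topic gives for that major version.
spark_hook_jar() {
  case "$1" in
    2|2.*) echo "spark-hook-spark24.jar" ;;
    3|3.*) echo "spark-hook-spark30.jar" ;;
    *) echo "unsupported Spark version: $1" >&2; return 1 ;;
  esac
}

# Hypothetical check: print the full path of the selected JAR if it exists.
# The directory defaults to the target of the copy in step 1; pass another
# directory as the second argument when trying this out elsewhere.
spark_hook_jar_path() {
  local version="$1"
  local dir="${2:-/opt/apps/EMRHOOK/emrhook-current}"
  local jar
  jar="$(spark_hook_jar "$version")" || return 1
  if [ -f "$dir/$jar" ]; then
    echo "$dir/$jar"
  else
    echo "missing: $dir/$jar" >&2
    return 1
  fi
}

# Usage on the Gateway:
# spark_hook_jar_path 3.3.1
```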

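Configuration file | Configuration item | Configuration value |
spark-defaults.conf (/etc/taihao-apps/spark-conf/spark-defaults.conf) | | Append to the end of the existing value. Note: the separator is a colon. |
| | Append to the end of the existing value. Note: the separator is a colon. |
| | Add. |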

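Upgrade procedure (for versions earlier than EMR-5.10.1 in the 5.x line, and earlier than EMR-3.44.1 in the 3.x line)

Step 1: Upgrade the JAR packages

Log on to the Gateway over SSH and run the following script (root privileges are required) to download and extract the latest EMRHOOK JAR packages. Replace ${region} with the current region, for example cn-hangzhou. After extraction, complete the JAR upgrade as described in the rest of this step.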

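sudo mkdir -p /opt/apps/EMRHOOK/upgrade/

sudo wget https://dlf-repo-${region}.oss-${region}-internal.aliyuncs.com/emrhook/latest/emrhook.tar.gz -P /opt/apps/EMRHOOK/upgrade

sudo tar -p -zxf /opt/apps/EMRHOOK/upgrade/emrhook.tar.gz -C /opt/apps/EMRHOOK/upgrade/

The EMRHOOK minor version differs between EMR versions. Before replacing the JAR packages, manually rename the extracted files so that their names contain the EMRHOOK version that is currently installed, and then copy them over the existing files.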

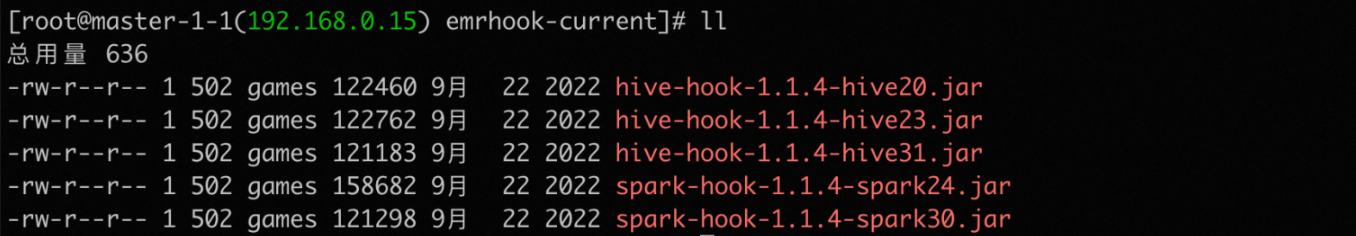

For example, in EMR-3.43.1 the EMRHOOK minor version is 1.1.4 and the JAR naming rule is hive-hook-${version}-hive20.jar, so the extracted JAR packages must be renamed to follow the same rule.

cd /opt/apps/EMRHOOK/upgrade/emrhook

mv hive-hook-hive20.jar hive-hook-1.1.4-hive20.jar

mv hive-hook-hive23.jar hive-hook-1.1.4-hive23.jar

mv hive-hook-hive31.jar hive-hook-1.1.4-hive31.jar

mv spark-hook-spark24.jar spark-hook-1.1.4-spark24.jar

mv spark-hook-spark30.jar spark-hook-1.1.4-spark30.jar
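The rename commands above hard-code version 1.1.4. For a different EMRHOOK version, the same renaming can be sketched as a loop that inserts the version after `-hook-` in each file name. The function name `rename_hook_jars` and its arguments are hypothetical; the naming rule is the one stated in this topic, and the loop assumes the extracted files follow the unversioned names shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of EMRHOOK): insert the EMRHOOK version into
# every extracted hook JAR name, for example
#   hive-hook-hive20.jar -> hive-hook-1.1.4-hive20.jar
rename_hook_jars() {
  local dir="$1"
  local version="$2"
  local f base prefix suffix
  for f in "$dir"/*-hook-*.jar; do
    [ -e "$f" ] || continue          # the glob matched nothing
    base="${f##*/}"                  # file name without the directory
    prefix="${base%%-hook-*}"        # "hive" or "spark"
    suffix="${base#*-hook-}"         # "hive20.jar", "spark24.jar", ...
    case "$suffix" in
      [0-9]*) continue ;;            # name already carries a version; skip it
    esac
    mv "$f" "$dir/$prefix-hook-$version-$suffix"
  done
}

# Usage on the Gateway (1.1.4 is the example version from this topic):
# rename_hook_jars /opt/apps/EMRHOOK/upgrade/emrhook 1.1.4
```

Skipping names whose suffix already starts with a digit makes the loop safe to run a second time.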

After renaming the files, run the following command.

sudo cp -p /opt/apps/EMRHOOK/upgrade/emrhook/* /opt/apps/EMRHOOK/emrhook-current/

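Step 2: Modify the Hive configuration

The value of ${hive-jar} depends on the Hive version: for Hive2, use hive-hook-${emrhook-version}-hive23.jar; for Hive3, use hive-hook-${emrhook-version}-hive31.jar. Replace ${emrhook-version} with the component version, for example hive-hook-1.1.4-hive23.jar.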

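Configuration file | Configuration item | Configuration value |
hive-site.xml (/etc/taihao-apps/hive-conf/hive-site.xml) | | Append to the end of the existing value. Note: the separator is a comma. |
| | Add. |
hive-env.sh (/etc/taihao-apps/hive-conf/hive-env.sh) | | Append to the end of the existing value. Note: the separator is a comma. |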

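Step 3: Modify the Spark configuration

The value of ${spark-jar} depends on the Spark version: for Spark2, use spark-hook-${emrhook-version}-spark24.jar; for Spark3, use spark-hook-${emrhook-version}-spark30.jar. Replace ${emrhook-version} with the component version, for example spark-hook-1.1.4-spark24.jar.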

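Configuration file | Configuration item | Configuration value |
spark-defaults.conf (/etc/taihao-apps/spark-conf/spark-defaults.conf) | | Append to the end of the existing value. Note: the separator is a colon. |
| | Append to the end of the existing value. Note: the separator is a colon. |
| | Add. |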