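Qwen-OCR is a vision understanding model dedicated to text extraction. It extracts text or parses structured data from images such as scanned documents, tables, and receipts, and it supports multiple languages. Through specific task instructions it can also perform advanced tasks such as information extraction, table parsing, and formula recognition.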

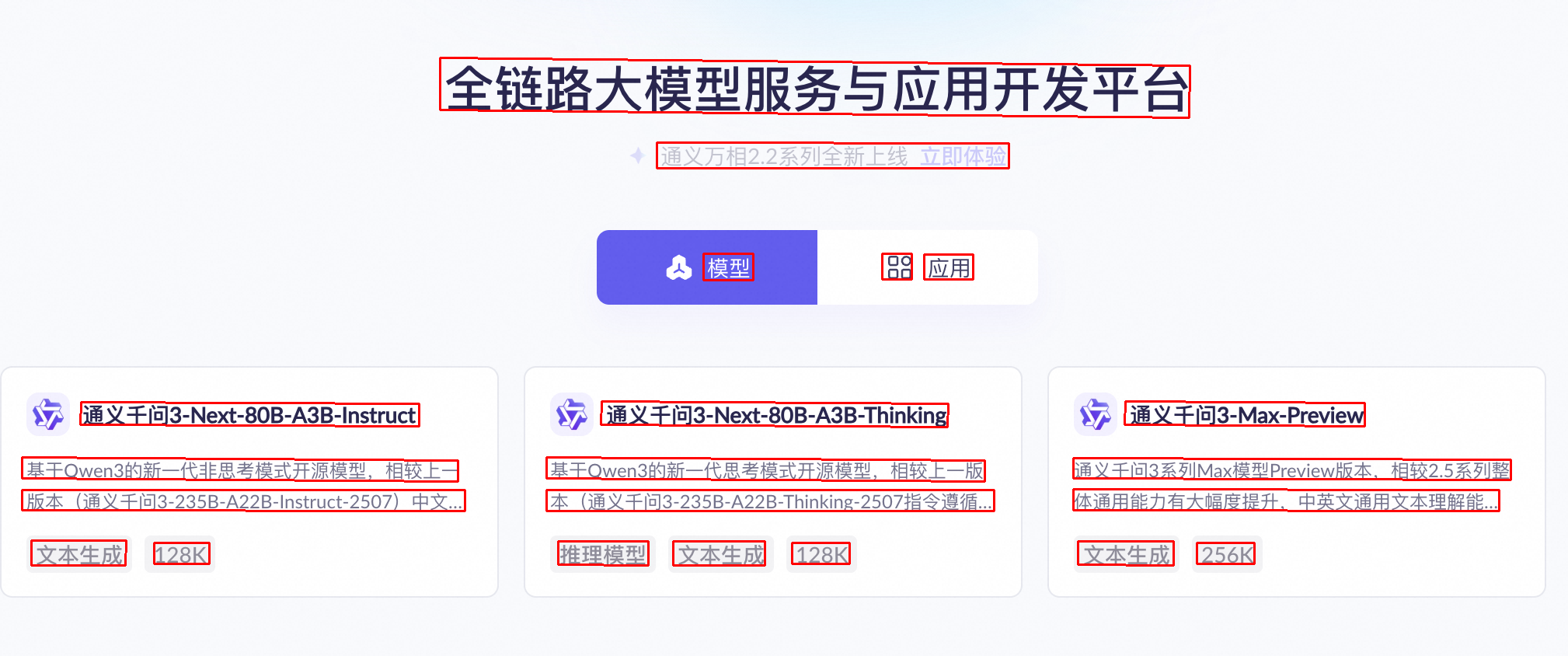

Try it online: Alibaba Cloud Model Studio (Beijing), Alibaba Cloud Model Studio (Singapore), or Alibaba Cloud Model Studio (Virginia).

效果示例

输入图像 | 识别结果 |

识别多种语言

|

|

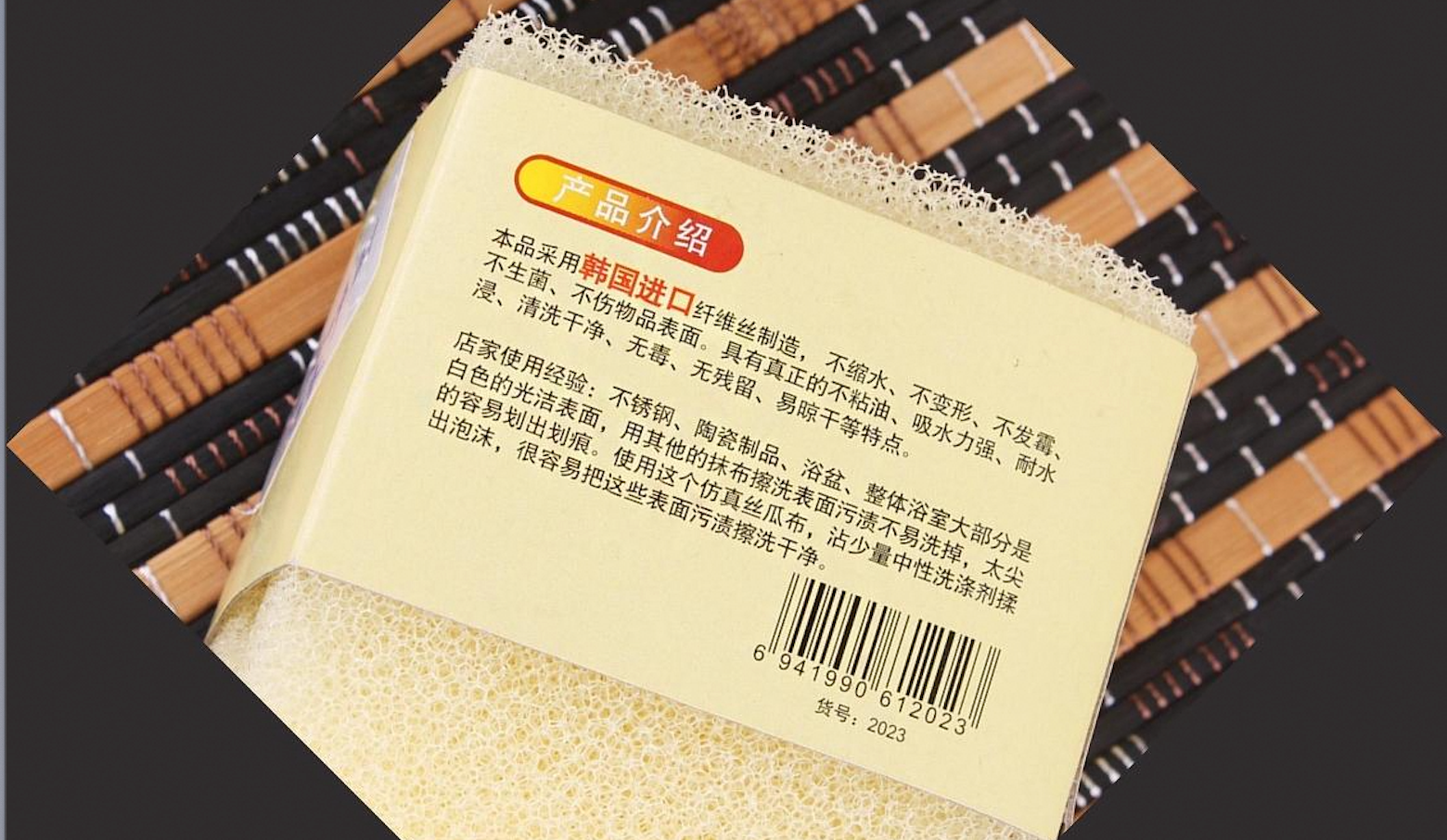

识别倾斜图像

| 产品介绍 本品采用韩国进口纤维丝制造,不缩水、不变形、不发霉、不生菌、不伤物品表面。具有真正的不粘油、吸水力强、耐水浸、清洗干净、无毒、无残留、易晾干等特点。 店家使用经验:不锈钢、陶瓷制品、浴盆、整体浴室大部分是白色的光洁表面,用其他的抹布擦洗表面污渍不易洗掉,太尖的容易划出划痕。使用这个仿真丝瓜布,沾少量中性洗涤剂揉出泡沫,很容易把这些表面污渍擦洗干净。 6941990612023 货号:2023 |

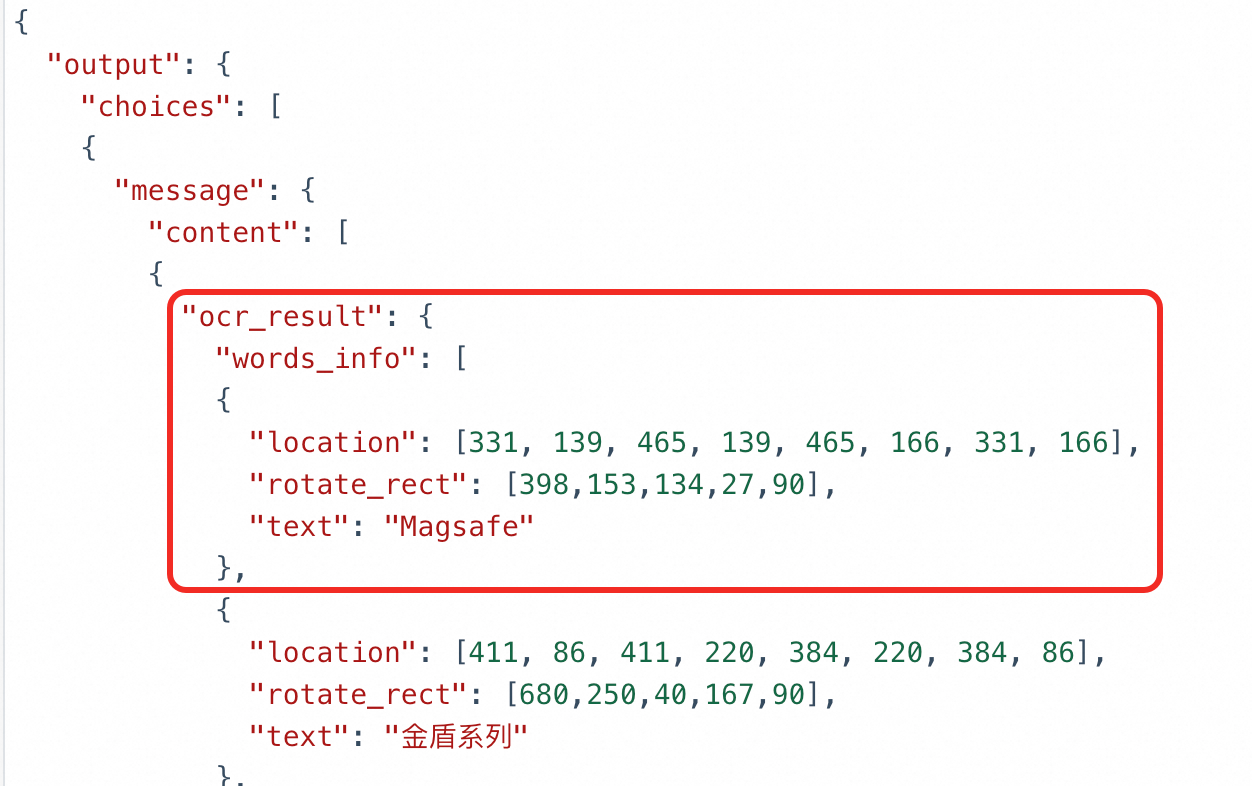

定位文字位置

高精识别任务支持文字定位功能。 | 可视化定位效果

可参见常见问题将每行文本的边界框绘制到原图上。 |

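Scope

Supported regions

Supported models

Chinese Mainland

In Chinese Mainland deployment mode, the endpoint and data storage are both located in the Beijing region, and model inference compute resources are restricted to the Chinese Mainland.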

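| Model | Version | Context length (tokens) | Max input (tokens) | Max output (tokens) | Input price (per million tokens) | Output price (per million tokens) | Free quota |
|---|---|---|---|---|---|---|---|
| qwen-vl-ocr (currently identical in capability to qwen-vl-ocr-2025-11-20; Batch calls are half price) | Stable | 38,192 | 30,000 (up to 30,000 per image) | 8,192 | 0.3 CNY | 0.5 CNY | 1 million tokens each, valid for 90 days after activating Model Studio |
| qwen-vl-ocr-latest (always identical in capability to the latest version; Batch calls are half price) | Latest | 38,192 | 30,000 (up to 30,000 per image) | 8,192 | 0.3 CNY | 0.5 CNY | 1 million tokens each, valid for 90 days after activating Model Studio |
| qwen-vl-ocr-2025-11-20 (based on the Qwen3-VL architecture, with significantly improved document parsing and text localization) | Snapshot | 38,192 | 30,000 (up to 30,000 per image) | 8,192 | 0.3 CNY | 0.5 CNY | 1 million tokens each, valid for 90 days after activating Model Studio |
| qwen-vl-ocr-2025-08-28 (also called qwen-vl-ocr-0828) | Snapshot | 34,096 | 30,000 (up to 30,000 per image) | 4,096 | 5 CNY | 5 CNY | 1 million tokens each, valid for 90 days after activating Model Studio |
| qwen-vl-ocr-2025-04-13 (also called qwen-vl-ocr-0413) | Snapshot | 34,096 | 30,000 (up to 30,000 per image) | 4,096 | 5 CNY | 5 CNY | 1 million tokens each, valid for 90 days after activating Model Studio |
| qwen-vl-ocr-2024-10-28 (also called qwen-vl-ocr-1028) | Snapshot | 34,096 | 30,000 (up to 30,000 per image) | 4,096 | 5 CNY | 5 CNY | 1 million tokens each, valid for 90 days after activating Model Studio |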
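To illustrate how the per-million-token prices above translate into cost, the sketch below estimates the cost of a single call from its token counts. The function name is hypothetical, and treating Batch calls as exactly half price is a simplification. The sketch also ignores the free quota and any rounding the billing system may apply. Take the prices from the table row for your model and region.

```python
def estimate_cost_cny(input_tokens, output_tokens, input_price_per_m, output_price_per_m, batch=False):
    """Estimate the cost (CNY) of one call from token counts and per-million-token prices.

    Hypothetical helper: ignores free quota and billing-side rounding.
    Batch calls are modeled as half price, as the table notes for qwen-vl-ocr and qwen-vl-ocr-latest.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    cost = (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000
    return cost / 2 if batch else cost


# Example: 2,000 input tokens and 500 output tokens at the Chinese Mainland prices of qwen-vl-ocr
print(estimate_cost_cny(2_000, 500, 0.3, 0.5))
```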

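Global

In Global deployment mode, the endpoint and data storage are both located in the US (Virginia) region, and model inference compute resources are dynamically scheduled worldwide.

| Model | Version | Context length (tokens) | Max input (tokens) | Max output (tokens) | Input price (per million tokens) | Output price (per million tokens) | Free quota |
|---|---|---|---|---|---|---|---|
| qwen-vl-ocr (currently identical in capability to qwen-vl-ocr-2025-11-20) | Stable | 38,192 | 30,000 (up to 30,000 per image) | 8,192 | 0.514 CNY | 1.174 CNY | None |
| qwen-vl-ocr-2025-11-20 (also called qwen-vl-ocr-1120; based on the Qwen3-VL architecture, with significantly improved document parsing and text localization) | Snapshot | 38,192 | 30,000 (up to 30,000 per image) | 8,192 | 0.514 CNY | 1.174 CNY | None |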

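International

In International deployment mode, the endpoint and data storage are both located in the Singapore region, and model inference compute resources are dynamically scheduled worldwide (excluding the Chinese Mainland).

| Model | Version | Context length (tokens) | Max input (tokens) | Max output (tokens) | Input price (per million tokens) | Output price (per million tokens) | Free quota |
|---|---|---|---|---|---|---|---|
| qwen-vl-ocr (currently identical in capability to qwen-vl-ocr-2025-11-20) | Stable | 38,192 | 30,000 (up to 30,000 per image) | 8,192 | 0.514 CNY | 1.174 CNY | None |
| qwen-vl-ocr-2025-11-20 (also called qwen-vl-ocr-1120; based on the Qwen3-VL architecture, with significantly improved document parsing and text localization) | Snapshot | 38,192 | 30,000 (up to 30,000 per image) | 8,192 | 0.514 CNY | 1.174 CNY | None |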

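For the qwen-vl-ocr, qwen-vl-ocr-2025-04-13, and qwen-vl-ocr-2025-08-28 models, the max_tokens parameter (maximum output length) defaults to 4096. To raise it to a value between 4097 and 8192, email modelstudio@service.aliyun.com with the following information:

- your primary account ID
- the image type (for example, document images, e-commerce images, or contracts)
- the model name
- the expected QPS and total daily requests
- the proportion of requests whose output exceeds 4096 tokens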
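The code samples in this topic switch regions by changing the host in the base URL. The sketch below collects those hosts in one place, using only URLs that appear in this topic. The region keys and the endpoints() helper are hypothetical names chosen for illustration. Remember that API keys also differ by region.

```python
# Hosts for each deployment mode, as listed in this topic's code comments
REGION_HOSTS = {
    "beijing": "https://dashscope.aliyuncs.com",         # Chinese Mainland
    "virginia": "https://dashscope-us.aliyuncs.com",     # Global
    "singapore": "https://dashscope-intl.aliyuncs.com",  # International
}


def endpoints(region):
    """Return the base URLs used by each calling method for a region (hypothetical helper)."""
    try:
        host = REGION_HOSTS[region]
    except KeyError:
        raise ValueError(f"unknown region {region!r}; expected one of {sorted(REGION_HOSTS)}") from None
    return {
        # base_url for the OpenAI-compatible SDK
        "openai_compatible": f"{host}/compatible-mode/v1",
        # dashscope.base_http_api_url / Constants.baseHttpApiUrl for the DashScope SDK
        "dashscope_sdk": f"{host}/api/v1",
        # Raw HTTP endpoint for DashScope multimodal generation (curl examples)
        "dashscope_http": f"{host}/api/v1/services/aigc/multimodal-generation/generation",
    }


print(endpoints("singapore")["openai_compatible"])
```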

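Preparation

If you call the model through the OpenAI SDK or the DashScope SDK, install the latest version of the SDK first. The DashScope Python SDK must be version 1.22.2 or later, and the DashScope Java SDK must be version 2.21.8 or later.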
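To fail fast when the installed DashScope Python SDK is older than the minimum version above, you could check it at startup. This is a sketch. It reads the installed version with importlib.metadata and compares only the leading numeric parts, so it is not a full PEP 440 comparison; for example, it treats a pre-release such as 1.22.2rc1 as equal to 1.22.2. The third-party packaging library handles those cases properly. The helper names are hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version

MIN_DASHSCOPE_PY = "1.22.2"  # minimum DashScope Python SDK version stated above


def _numeric_parts(v):
    """Turn '1.22.2' into [1, 22, 2]; non-digit suffixes inside a part (e.g. 'rc1') are ignored."""
    parts = []
    for piece in v.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    return parts


def is_at_least(installed, minimum):
    a, b = _numeric_parts(installed), _numeric_parts(minimum)
    width = max(len(a), len(b))
    # Pad with zeros so that '1.22' compares equal to '1.22.0'
    return tuple(a + [0] * (width - len(a))) >= tuple(b + [0] * (width - len(b)))


def dashscope_sdk_ok():
    """Return True if the 'dashscope' package is installed and new enough."""
    try:
        return is_at_least(version("dashscope"), MIN_DASHSCOPE_PY)
    except PackageNotFoundError:
        return False


if __name__ == "__main__":
    print("dashscope SDK OK:", dashscope_sdk_ok())
```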

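DashScope SDK

Advantages: supports all advanced features, such as automatic image rotation correction and built-in OCR tasks. It offers more complete functionality and a simpler calling style.

Use case: projects that need the full feature set.

OpenAI-compatible SDK

Advantages: lets users who already use the OpenAI SDK or its ecosystem tools migrate quickly.

Limitations: the advanced features (image rotation correction and built-in OCR tasks) cannot be invoked directly through parameters. You must emulate them manually with more complex prompts and parse the output yourself.

Use case: projects that already integrate with OpenAI and do not depend on advanced features available only in DashScope.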

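Quick start

The following examples extract key information from a train ticket image (passed by URL) and return it in JSON format. See also: how to pass local files, and image limits.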
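For a local image, one common approach with the OpenAI-compatible API is to send the image inline as a Base64 data URL in the image_url field. The sketch below assumes the endpoint accepts data URLs of the form data:<mime>;base64,<data>. Check the image limits and the local-file guide for the supported formats and sizes. image_to_data_url is a hypothetical helper name.

```python
import base64
import mimetypes
from pathlib import Path


def image_to_data_url(path):
    """Read a local image file and return it as a Base64 data URL (hypothetical helper)."""
    mime, _ = mimetypes.guess_type(str(path))
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"cannot determine an image MIME type for {path}")
    encoded = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return f"data:{mime};base64,{encoded}"


# Usage: pass the result where the examples pass an image URL, for example
# {"type": "image_url", "image_url": {"url": image_to_data_url("ticket.jpg")}}
```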

OpenAI compatible

Python

```python
import os

from openai import OpenAI

# Prompt (in Chinese): extract the invoice number, train number, departure and arrival stations,
# departure date and time, seat number, seat class, fare, ID card number, and passenger name.
# Do not omit or fabricate information; replace a single blurred or glare-obscured character with "?".
# Return the result as JSON in the given format.
PROMPT_TICKET_EXTRACTION = """
请提取车票图像中的发票号码、车次、起始站、终点站、发车日期和时间点、座位号、席别类型、票价、身份证号码、购票人姓名。
要求准确无误的提取上述关键信息、不要遗漏和捏造虚假信息,模糊或者强光遮挡的单个文字可以用英文问号?代替。
返回数据格式以json方式输出,格式为:{'发票号码':'xxx', '车次':'xxx', '起始站':'xxx', '终点站':'xxx', '发车日期和时间点':'xxx', '座位号':'xxx', '席别类型':'xxx','票价':'xxx', '身份证号码':'xxx', '购票人姓名':'xxx'}
"""

try:
    client = OpenAI(
        # If the environment variable is not set, replace the next line with your Model Studio API key: api_key="sk-xxx"
        # API keys differ by region. Get an API key: https://help.aliyun.com/zh/model-studio/get-api-key
        api_key=os.getenv("DASHSCOPE_API_KEY"),
        # Beijing-region base_url. For Virginia-region models, use https://dashscope-us.aliyuncs.com/compatible-mode/v1
        # For Singapore-region models, use https://dashscope-intl.aliyuncs.com/compatible-mode/v1
        base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",
    )
    completion = client.chat.completions.create(
        model="qwen-vl-ocr-latest",
        messages=[
            {
                "role": "user",
                "content": [
                    {
                        "type": "image_url",
                        "image_url": {"url": "https://img.alicdn.com/imgextra/i2/O1CN01ktT8451iQutqReELT_!!6000000004408-0-tps-689-487.jpg"},
                        # Minimum pixel threshold: smaller images are upscaled until their total pixel count exceeds min_pixels
                        "min_pixels": 32 * 32 * 3,
                        # Maximum pixel threshold: larger images are downscaled until their total pixel count falls below max_pixels
                        "max_pixels": 32 * 32 * 8192,
                    },
                    # You can pass a prompt in the text field. If omitted, the default prompt is used:
                    # "Please output only the text content from the image without any additional descriptions or formatting."
                    {"type": "text", "text": PROMPT_TICKET_EXTRACTION},
                ],
            }
        ],
    )
    print(completion.choices[0].message.content)
except Exception as e:
    print(f"Error: {e}")
```

Node.js

```javascript
import OpenAI from 'openai';

// Prompt for extracting train ticket information (in Chinese): extract the listed fields,
// do not omit or fabricate information, use "?" for a single unreadable character, and return JSON
const PROMPT_TICKET_EXTRACTION = `
请提取车票图像中的发票号码、车次、起始站、终点站、发车日期和时间点、座位号、席别类型、票价、身份证号码、购票人姓名。
要求准确无误的提取上述关键信息、不要遗漏和捏造虚假信息,模糊或者强光遮挡的单个文字可以用英文问号?代替。
返回数据格式以json方式输出,格式为:{'发票号码':'xxx', '车次':'xxx', '起始站':'xxx', '终点站':'xxx', '发车日期和时间点':'xxx', '座位号':'xxx', '席别类型':'xxx','票价':'xxx', '身份证号码':'xxx', '购票人姓名':'xxx'}
`;

// Initialize the OpenAI client
const client = new OpenAI({
  // If the environment variable is not set, replace the next line with your Model Studio API key: apiKey: "sk-xxx",
  // API keys differ by region. Get an API key: https://help.aliyun.com/zh/model-studio/get-api-key
  apiKey: process.env.DASHSCOPE_API_KEY,
  // Beijing-region base_url. For Virginia-region models, use https://dashscope-us.aliyuncs.com/compatible-mode/v1
  // For Singapore-region models, use https://dashscope-intl.aliyuncs.com/compatible-mode/v1
  baseURL: "https://dashscope.aliyuncs.com/compatible-mode/v1",
});

async function main() {
  try {
    // Create the chat completion request
    const completion = await client.chat.completions.create({
      model: "qwen-vl-ocr-latest",
      messages: [
        {
          role: "user",
          content: [
            {
              type: "image_url",
              image_url: {
                url: "https://img.alicdn.com/imgextra/i2/O1CN01ktT8451iQutqReELT_!!6000000004408-0-tps-689-487.jpg",
              },
              // Minimum pixel threshold: smaller images are upscaled until their total pixel count exceeds min_pixels
              min_pixels: 32 * 32 * 3,
              // Maximum pixel threshold: larger images are downscaled until their total pixel count falls below max_pixels
              max_pixels: 32 * 32 * 8192,
            },
            // You can pass a prompt in the text field. If omitted, the default prompt is used:
            // "Please output only the text content from the image without any additional descriptions or formatting."
            { type: "text", text: PROMPT_TICKET_EXTRACTION },
          ],
        },
      ],
    });
    // Print the result
    console.log(completion.choices[0].message.content);
  } catch (error) {
    console.log(`Error: ${error}`);
  }
}

main();
```

curl

```bash
# ======= Important =======
# API keys differ by region. Get an API key: https://help.aliyun.com/zh/model-studio/get-api-key
# Beijing-region base_url. For Virginia-region models, use https://dashscope-us.aliyuncs.com/compatible-mode/v1/chat/completions
# For Singapore-region models, use https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions
# === Delete these comments before running ===

curl -X POST https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions \
-H "Authorization: Bearer $DASHSCOPE_API_KEY" \
-H "Content-Type: application/json" \
-d '{
  "model": "qwen-vl-ocr-latest",
  "messages": [
    {
      "role": "user",
      "content": [
        {
          "type": "image_url",
          "image_url": {"url": "https://img.alicdn.com/imgextra/i2/O1CN01ktT8451iQutqReELT_!!6000000004408-0-tps-689-487.jpg"},
          "min_pixels": 3072,
          "max_pixels": 8388608
        },
        {"type": "text", "text": "请提取车票图像中的发票号码、车次、起始站、终点站、发车日期和时间点、座位号、席别类型、票价、身份证号码、购票人姓名。要求准确无误的提取上述关键信息、不要遗漏和捏造虚假信息,模糊或者强光遮挡的单个文字可以用英文问号?代替。返回数据格式以json方式输出,格式为:{\"发票号码\":\"xxx\", \"车次\":\"xxx\", \"起始站\":\"xxx\", \"终点站\":\"xxx\", \"发车日期和时间点\":\"xxx\", \"座位号\":\"xxx\", \"席别类型\":\"xxx\",\"票价\":\"xxx\", \"身份证号码\":\"xxx\", \"购票人姓名\":\"xxx\"}"}
      ]
    }
  ]
}'
```
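The ticket prompt asks the model to answer in JSON, but the reply is still free text. It may be wrapped in a Markdown code fence, and because the template in the prompt uses single-quoted keys, the model may echo Python-style quotes. The defensive parser sketched below works under those assumptions: it strips an optional fence and falls back from json.loads to ast.literal_eval. parse_model_json is a hypothetical helper and is not part of either SDK.

```python
import ast
import json
import re

FENCE = "`" * 3  # built at runtime so this snippet contains no literal fence
# Matches an optional Markdown code fence (optionally tagged "json") and captures its body
FENCE_RE = re.compile(re.escape(FENCE) + r"(?:json)?\s*(.*?)" + re.escape(FENCE), re.DOTALL)


def parse_model_json(text):
    """Parse a JSON object from model output; raises ValueError or SyntaxError if it cannot."""
    match = FENCE_RE.search(text)
    payload = (match.group(1) if match else text).strip()
    try:
        return json.loads(payload)
    except json.JSONDecodeError:
        # Fall back to Python literal syntax, which also accepts single-quoted strings
        return ast.literal_eval(payload)


# Usage with the OpenAI-compatible example:
# data = parse_model_json(completion.choices[0].message.content)
# print(data.get("车次"))
```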

DashScope

Python

```python
import os

import dashscope

# Beijing-region base_url. For Virginia-region models, use https://dashscope-us.aliyuncs.com/api/v1
# For Singapore-region models, use https://dashscope-intl.aliyuncs.com/api/v1
dashscope.base_http_api_url = "https://dashscope.aliyuncs.com/api/v1"

# Prompt (in Chinese): extract the invoice number, train number, departure and arrival stations,
# departure date and time, seat number, seat class, fare, ID card number, and passenger name.
# Do not omit or fabricate information; replace a single blurred or glare-obscured character with "?".
# Return the result as JSON in the given format.
PROMPT_TICKET_EXTRACTION = """
请提取车票图像中的发票号码、车次、起始站、终点站、发车日期和时间点、座位号、席别类型、票价、身份证号码、购票人姓名。
要求准确无误的提取上述关键信息、不要遗漏和捏造虚假信息,模糊或者强光遮挡的单个文字可以用英文问号?代替。
返回数据格式以json方式输出,格式为:{'发票号码':'xxx', '车次':'xxx', '起始站':'xxx', '终点站':'xxx', '发车日期和时间点':'xxx', '座位号':'xxx', '席别类型':'xxx','票价':'xxx', '身份证号码':'xxx', '购票人姓名':'xxx'}
"""

try:
    response = dashscope.MultiModalConversation.call(
        model='qwen-vl-ocr-latest',
        # If the environment variable is not set, replace the next line with your Model Studio API key: api_key="sk-xxx",
        # API keys differ by region. Get an API key: https://help.aliyun.com/zh/model-studio/get-api-key
        api_key=os.getenv('DASHSCOPE_API_KEY'),
        messages=[{
            'role': 'user',
            'content': [
                {
                    'image': 'https://img.alicdn.com/imgextra/i2/O1CN01ktT8451iQutqReELT_!!6000000004408-0-tps-689-487.jpg',
                    # Minimum pixel threshold: smaller images are upscaled until their total pixel count exceeds min_pixels
                    "min_pixels": 32 * 32 * 3,
                    # Maximum pixel threshold: larger images are downscaled until their total pixel count falls below max_pixels
                    "max_pixels": 32 * 32 * 8192,
                    # Whether to enable automatic image rotation correction
                    "enable_rotate": False,
                },
                # When no built-in task is set, you can pass a prompt in the text field. If omitted, the default prompt is used:
                # "Please output only the text content from the image without any additional descriptions or formatting."
                {'text': PROMPT_TICKET_EXTRACTION},
            ],
        }],
    )
    print(response.output.choices[0].message.content[0]['text'])
except Exception as e:
    print(f"An error occurred: {e}")
```

Java

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;
import com.alibaba.dashscope.common.MultiModalMessage;
import com.alibaba.dashscope.common.Role;
import com.alibaba.dashscope.exception.ApiException;
import com.alibaba.dashscope.exception.NoApiKeyException;
import com.alibaba.dashscope.exception.UploadFileException;
import com.alibaba.dashscope.utils.Constants;

public class Main {
    // Beijing-region base_url. For Virginia-region models, change base_url to https://dashscope-us.aliyuncs.com/api/v1
    // For Singapore-region models, change base_url to https://dashscope-intl.aliyuncs.com/api/v1
    static {Constants.baseHttpApiUrl = "https://dashscope.aliyuncs.com/api/v1";}

    public static void simpleMultiModalConversationCall()
            throws ApiException, NoApiKeyException, UploadFileException {
        MultiModalConversation conv = new MultiModalConversation();
        Map<String, Object> map = new HashMap<>();
        map.put("image", "https://img.alicdn.com/imgextra/i2/O1CN01ktT8451iQutqReELT_!!6000000004408-0-tps-689-487.jpg");
        // Maximum pixel threshold: larger images are downscaled until their total pixel count falls below max_pixels
        map.put("max_pixels", 8388608);
        // Minimum pixel threshold: smaller images are upscaled until their total pixel count exceeds min_pixels
        map.put("min_pixels", 3072);
        // Whether to enable automatic image rotation correction
        map.put("enable_rotate", false);
        MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())
                .content(Arrays.asList(
                        map,
                        // When no built-in task is set, you can pass a prompt in the text field. If omitted, the default prompt is used:
                        // "Please output only the text content from the image without any additional descriptions or formatting."
                        // The prompt below is the same Chinese ticket-extraction prompt as in the Python example.
                        Collections.singletonMap("text", "请提取车票图像中的发票号码、车次、起始站、终点站、发车日期和时间点、座位号、席别类型、票价、身份证号码、购票人姓名。要求准确无误的提取上述关键信息、不要遗漏和捏造虚假信息,模糊或者强光遮挡的单个文字可以用英文问号?代替。返回数据格式以json方式输出,格式为:{'发票号码':'xxx', '车次':'xxx', '起始站':'xxx', '终点站':'xxx', '发车日期和时间点':'xxx', '座位号':'xxx', '席别类型':'xxx','票价':'xxx', '身份证号码':'xxx', '购票人姓名':'xxx'}"))).build();
        MultiModalConversationParam param = MultiModalConversationParam.builder()
                // If the environment variable is not set, replace the next line with your Model Studio API key: .apiKey("sk-xxx")
                .apiKey(System.getenv("DASHSCOPE_API_KEY"))
                .model("qwen-vl-ocr-latest")
                .message(userMessage)
                .build();
        MultiModalConversationResult result = conv.call(param);
        System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));
    }

    public static void main(String[] args) {
        try {
            simpleMultiModalConversationCall();
        } catch (ApiException | NoApiKeyException | UploadFileException e) {
            System.out.println(e.getMessage());
        }
        System.exit(0);
    }
}
```

curl

```bash
# ======= Important =======
# API keys differ by region. Get an API key: https://help.aliyun.com/zh/model-studio/get-api-key
# Beijing-region base_url. For Virginia-region models, use https://dashscope-us.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# For Singapore-region models, use https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# === Delete these comments before running ===

curl --location 'https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation' \
--header "Authorization: Bearer $DASHSCOPE_API_KEY" \
--header 'Content-Type: application/json' \
--data '{
  "model": "qwen-vl-ocr-latest",
  "input": {
    "messages": [
      {
        "role": "user",
        "content": [
          {
            "image": "https://img.alicdn.com/imgextra/i2/O1CN01ktT8451iQutqReELT_!!6000000004408-0-tps-689-487.jpg",
            "min_pixels": 3072,
            "max_pixels": 8388608,
            "enable_rotate": false
          },
          {
            "text": "请提取车票图像中的发票号码、车次、起始站、终点站、发车日期和时间点、座位号、席别类型、票价、身份证号码、购票人姓名。要求准确无误的提取上述关键信息、不要遗漏和捏造虚假信息,模糊或者强光遮挡的单个文字可以用英文问号?代替。返回数据格式以json方式输出,格式为:{\"发票号码\":\"xxx\", \"车次\":\"xxx\", \"起始站\":\"xxx\", \"终点站\":\"xxx\", \"发车日期和时间点\":\"xxx\", \"座位号\":\"xxx\", \"席别类型\":\"xxx\",\"票价\":\"xxx\", \"身份证号码\":\"xxx\", \"购票人姓名\":\"xxx\"}"
          }
        ]
      }
    ]
  }
}'
```

Calling built-in tasks

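To simplify calls in specific scenarios, the models (except qwen-vl-ocr-2024-10-28) provide several built-in tasks.

Usage:

DashScope SDK: you do not need to design or pass a prompt, because the model uses a fixed internal prompt. Set the ocr_options parameter to invoke a built-in task.

OpenAI-compatible SDK: you must manually enter the prompt specified for the task.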
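With the DashScope HTTP API, the built-in task is selected through parameters.ocr_options. The examples in this topic also read the result from different fields: ocr_result for information extraction and text for the other tasks. The sketch below builds such a request body and reads the result from a parsed JSON response. The helper names are hypothetical, and the field layout is taken from the examples in this topic.

```python
def build_request_body(image_url, task, result_schema=None, model="qwen-vl-ocr-latest"):
    """Build a DashScope multimodal-generation request body that invokes a built-in OCR task."""
    ocr_options = {"task": task}
    if result_schema is not None:
        # Only meaningful for key_information_extraction (custom field extraction)
        ocr_options["task_config"] = {"result_schema": result_schema}
    return {
        "model": model,
        "input": {
            "messages": [
                {
                    "role": "user",
                    "content": [
                        {"image": image_url, "min_pixels": 3072, "max_pixels": 8388608, "enable_rotate": False}
                    ],
                }
            ]
        },
        "parameters": {"ocr_options": ocr_options},
    }


def read_task_result(response_json, task):
    """Return the result field that this topic's examples read for the given task."""
    content = response_json["output"]["choices"][0]["message"]["content"][0]
    field = "ocr_result" if task == "key_information_extraction" else "text"
    return content[field]


# Usage: send json.dumps(build_request_body(url, "table_parsing")) as the curl --data payload,
# then call read_task_result(json.loads(response_text), "table_parsing").
```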

The tables below list, for each built-in task, the task value, the specified prompt, and the output format with an example:

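Advanced recognition

For the advanced recognition task, we recommend qwen-vl-ocr-2025-08-28, a later snapshot, or the latest model. The task does the following:

Recognizes text content (extracts the text)

Detects text positions (locates text lines and outputs their coordinates)

After you obtain the bounding-box coordinates, see the FAQ for how to draw the boxes on the original image.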
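This task's prompt asks for text lines as rotated rectangles. If a rectangle is reported as a center point, width, height, and rotation angle, you need its four corners before you can draw it. The sketch below performs that conversion. The [cx, cy, width, height, angle] layout, degrees as the unit, and the rotation direction are all assumptions, so compare them against an actual response and the FAQ before relying on the sketch.

```python
import math


def rotated_rect_corners(cx, cy, width, height, angle_deg):
    """Return the 4 corners [(x, y), ...] of a width x height box centered at (cx, cy), rotated by angle_deg.

    Assumed convention: the angle is in degrees and rotates from +x toward +y
    (clockwise on screen, where y grows downward). Corners start at the top-left
    of the unrotated box and go around clockwise on screen.
    """
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    half_w, half_h = width / 2, height / 2
    corners = []
    for dx, dy in ((-half_w, -half_h), (half_w, -half_h), (half_w, half_h), (-half_w, half_h)):
        # Rotate the offset from the center, then translate back to the center
        corners.append((cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t))
    return corners
```

With Pillow installed, the corners can be drawn with ImageDraw.Draw(image).polygon(corners, outline="red").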

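| task value | Specified prompt | Output format and example |
|---|---|---|
| advanced_recognition | 定位所有的文字行,并且返回旋转矩形 (Locate all text lines and return rotated rectangles) | Plain text; see the example below |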

import os

import dashscope

# 以下为北京地域base_url,若使用弗吉尼亚地域模型,需要将base_url换成 https://dashscope-us.aliyuncs.com/api/v1

# 若使用新加坡地域的模型,需将base_url替换为:https://dashscope-intl.aliyuncs.com/api/v1

dashscope.base_http_api_url = "https://dashscope.aliyuncs.com/api/v1"

messages = [{

"role": "user",

"content": [{

"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/ctdzex/biaozhun.jpg",

# 输入图像的最小像素阈值,小于该值图像会进行放大,直到总像素大于min_pixels

"min_pixels": 32 * 32 * 3,

# 输入图像的最大像素阈值,超过该值图像会进行缩小,直到总像素低于max_pixels

"max_pixels": 32 * 32 * 8192,

# 是否开启图像自动转正功能

"enable_rotate": False}]

}]

response = dashscope.MultiModalConversation.call(

# 若没有配置环境变量,请用百炼API Key将下行替换为:api_key="sk-xxx",

# 各地域的API Key不同。获取API Key:https://help.aliyun.com/zh/model-studio/get-api-key

api_key=os.getenv('DASHSCOPE_API_KEY'),

model='qwen-vl-ocr-latest',

messages=messages,

# 设置内置任务为高精识别

ocr_options={"task": "advanced_recognition"}

)

# 高精识别任务以纯文本返回结果

print(response["output"]["choices"][0]["message"].content[0]["text"])// dashscope SDK的版本 >= 2.21.8

import java.util.Arrays;

import java.util.Collections;

import java.util.Map;

import java.util.HashMap;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.aigc.multimodalconversation.OcrOptions;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

import com.alibaba.dashscope.utils.Constants;

public class Main {

// 以下为北京地域base_url,若使用弗吉尼亚地域模型,需要将base_url换成 https://dashscope-us.aliyuncs.com/api/v1

// 若使用新加坡地域的模型,需将base_url替换为:https://dashscope-intl.aliyuncs.com/api/v1

static {Constants.baseHttpApiUrl="https://dashscope.aliyuncs.com/api/v1";}

public static void simpleMultiModalConversationCall()

throws ApiException, NoApiKeyException, UploadFileException {

MultiModalConversation conv = new MultiModalConversation();

Map<String, Object> map = new HashMap<>();

map.put("image", "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/ctdzex/biaozhun.jpg");

// 输入图像的最大像素阈值,超过该值图像会进行缩小,直到总像素低于max_pixels

map.put("max_pixels", 8388608);

// 输入图像的最小像素阈值,小于该值图像会进行放大,直到总像素大于min_pixels

map.put("min_pixels", 3072);

// 是否开启图像自动转正功能

map.put("enable_rotate", false);

// 配置内置的OCR任务

OcrOptions ocrOptions = OcrOptions.builder()

.task(OcrOptions.Task.ADVANCED_RECOGNITION)

.build();

MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())

.content(Arrays.asList(

map

)).build();

MultiModalConversationParam param = MultiModalConversationParam.builder()

// 若没有配置环境变量,请用百炼API Key将下行替换为:.apiKey("sk-xxx")

// 各地域的API Key不同。获取API Key:https://help.aliyun.com/zh/model-studio/get-api-key

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model("qwen-vl-ocr-latest")

.message(userMessage)

.ocrOptions(ocrOptions)

.build();

MultiModalConversationResult result = conv.call(param);

System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));

}

public static void main(String[] args) {

try {

simpleMultiModalConversationCall();

} catch (ApiException | NoApiKeyException | UploadFileException e) {

System.out.println(e.getMessage());

}

System.exit(0);

}

}# ======= 重要提示 =======

# 各地域的API Key不同。获取API Key:https://help.aliyun.com/zh/model-studio/get-api-key

# 以下为北京地域base_url,若使用弗吉尼亚地域模型,需要将base_url换成:https://dashscope-us.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation

# 若使用新加坡地域的模型,需要将base_url换成:https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation

# === 执行时请删除该注释 ===

curl --location 'https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation' \

--header "Authorization: Bearer $DASHSCOPE_API_KEY" \

--header 'Content-Type: application/json' \

--data '

{

"model": "qwen-vl-ocr-latest",

"input": {

"messages": [

{

"role": "user",

"content": [

{

"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/ctdzex/biaozhun.jpg",

"min_pixels": 3072,

"max_pixels": 8388608,

"enable_rotate": false

}

]

}

]

},

"parameters": {

"ocr_options": {

"task": "advanced_recognition"

}

}

}

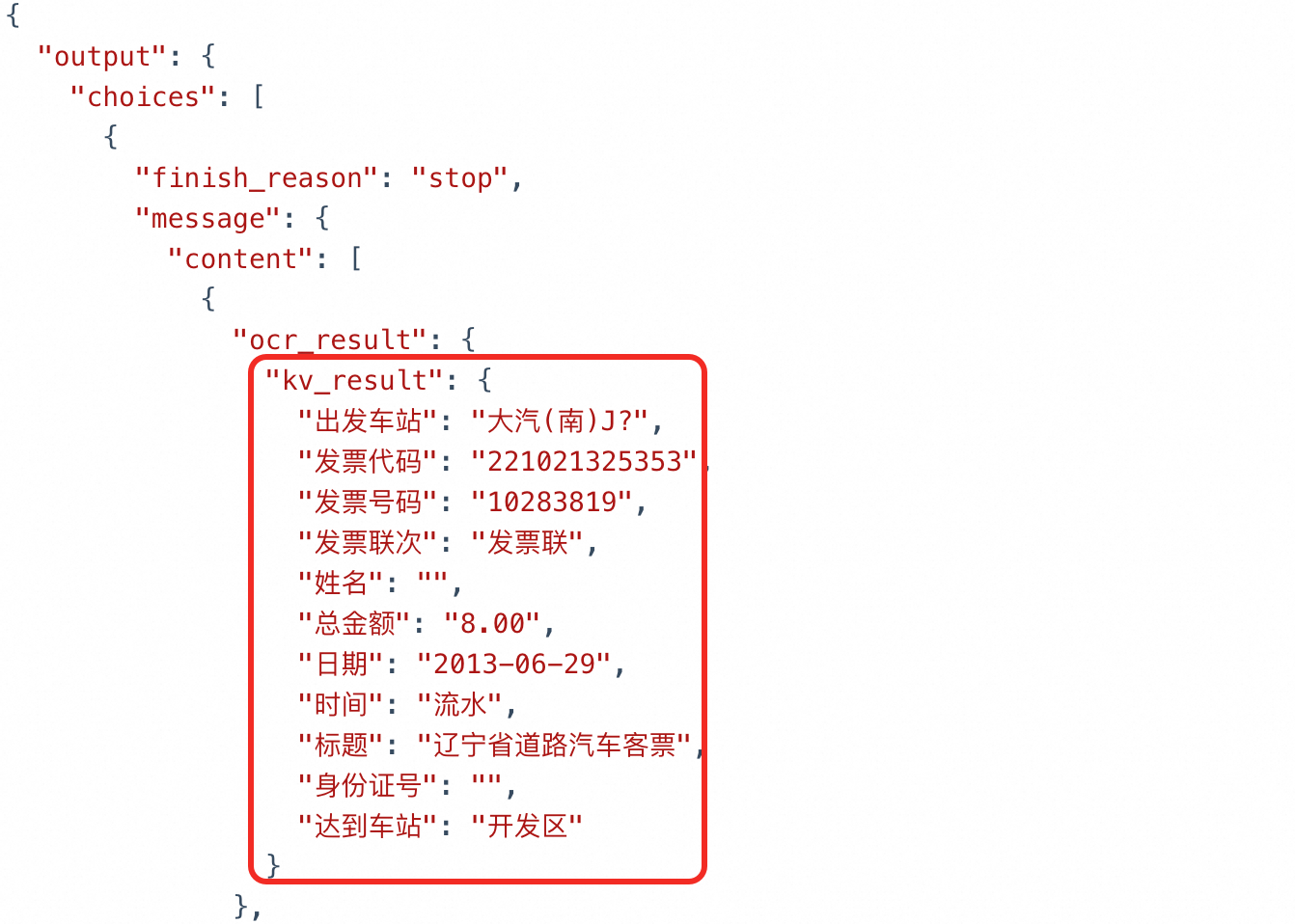

'信息抽取

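The model can extract structured information from documents such as receipts, certificates, and forms and return the result in JSON format. Two modes are available:

Custom field extraction: specify the fields to extract by setting a custom JSON template (result_schema) in the ocr_options.task_config parameter. The template defines the field names (keys) to extract, and the model fills in the corresponding values. The template supports up to 3 levels of nesting.

Full field extraction: if the result_schema parameter is not specified, the model extracts all fields in the image.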
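Because the result_schema template supports at most 3 levels of nesting, it can help to validate a schema locally before sending it. The sketch below counts nested JSON objects (Python dicts) as levels. How the service counts levels, and whether arrays count toward the limit, are assumptions here. The helper names are hypothetical.

```python
MAX_SCHEMA_DEPTH = 3  # documented nesting limit for result_schema


def schema_depth(node):
    """Number of nested dict levels: {'a': 'x'} -> 1, {'a': {'b': 'x'}} -> 2."""
    if not isinstance(node, dict) or not node:
        return 0
    return 1 + max(schema_depth(value) for value in node.values())


def check_result_schema(schema):
    """Raise ValueError if the schema is not a non-empty dict or is nested too deeply; return its depth."""
    if not isinstance(schema, dict) or not schema:
        raise ValueError("result_schema must be a non-empty JSON object")
    depth = schema_depth(schema)
    if depth > MAX_SCHEMA_DEPTH:
        raise ValueError(f"result_schema is nested {depth} levels deep; the limit is {MAX_SCHEMA_DEPTH}")
    return depth


# Example: a two-level schema with a hypothetical "销售方" (seller) group of two fields
print(check_result_schema({"发票号码": "...", "销售方": {"名称": "...", "税号": "..."}}))  # 2
```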

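The two modes use different prompts:

| task value | Specified prompt | Output format and example |
|---|---|---|
| key_information_extraction | Custom field extraction: | |
| key_information_extraction | Full field extraction: | |

The following sample code calls the task through the DashScope SDK and over HTTP: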

Python

```python
# Run pip install -U dashscope to update the SDK
import os

import dashscope
from dashscope import MultiModalConversation

# Beijing-region base_url. For Virginia-region models, use https://dashscope-us.aliyuncs.com/api/v1
# For Singapore-region models, use https://dashscope-intl.aliyuncs.com/api/v1
dashscope.base_http_api_url = "https://dashscope.aliyuncs.com/api/v1"

messages = [
    {
        "role": "user",
        "content": [
            {
                "image": "http://duguang-labelling.oss-cn-shanghai.aliyuncs.com/demo_ocr/receipt_zh_demo.jpg",
                "min_pixels": 32 * 32 * 3,
                "max_pixels": 32 * 32 * 8192,
                "enable_rotate": False,
            }
        ],
    }
]

# Fields to extract. Keys are field names (ride date, invoice code, invoice number);
# values describe, in Chinese, how each field should be read and formatted.
params = {
    "ocr_options": {
        "task": "key_information_extraction",
        "task_config": {
            "result_schema": {
                "乘车日期": "对应图中乘车日期时间,格式为年-月-日,比如2025-03-05",
                "发票代码": "提取图中的发票代码,通常为一组数字或字母组合",
                "发票号码": "提取发票上的号码,通常由纯数字组成。",
            }
        },
    }
}

response = MultiModalConversation.call(
    model='qwen-vl-ocr-latest',
    messages=messages,
    **params,
    # API keys differ by region. Get an API key: https://help.aliyun.com/zh/model-studio/get-api-key
    api_key=os.getenv('DASHSCOPE_API_KEY'),
)

# The information extraction task returns its result in the ocr_result field
print(response.output.choices[0].message.content[0]["ocr_result"])
```

Java

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;
import com.alibaba.dashscope.aigc.multimodalconversation.OcrOptions;
import com.alibaba.dashscope.common.MultiModalMessage;
import com.alibaba.dashscope.common.Role;
import com.alibaba.dashscope.exception.ApiException;
import com.alibaba.dashscope.exception.NoApiKeyException;
import com.alibaba.dashscope.exception.UploadFileException;
import com.google.gson.JsonObject;
import com.alibaba.dashscope.utils.Constants;

public class Main {
    // Beijing-region base_url. For Virginia-region models, change base_url to https://dashscope-us.aliyuncs.com/api/v1
    // For Singapore-region models, change base_url to https://dashscope-intl.aliyuncs.com/api/v1
    static {Constants.baseHttpApiUrl = "https://dashscope.aliyuncs.com/api/v1";}

    public static void simpleMultiModalConversationCall()
            throws ApiException, NoApiKeyException, UploadFileException {
        MultiModalConversation conv = new MultiModalConversation();
        Map<String, Object> map = new HashMap<>();
        map.put("image", "http://duguang-labelling.oss-cn-shanghai.aliyuncs.com/demo_ocr/receipt_zh_demo.jpg");
        // Maximum pixel threshold: larger images are downscaled until their total pixel count falls below max_pixels
        map.put("max_pixels", 8388608);
        // Minimum pixel threshold: smaller images are upscaled until their total pixel count exceeds min_pixels
        map.put("min_pixels", 3072);
        // Whether to enable automatic image rotation correction
        map.put("enable_rotate", false);
        MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())
                .content(Arrays.asList(map)).build();
        // Build the result_schema JSON object: field name -> description of the expected value
        JsonObject resultSchema = new JsonObject();
        resultSchema.addProperty("乘车日期", "对应图中乘车日期时间,格式为年-月-日,比如2025-03-05");
        resultSchema.addProperty("发票代码", "提取图中的发票代码,通常为一组数字或字母组合");
        resultSchema.addProperty("发票号码", "提取发票上的号码,通常由纯数字组成。");
        // Configure the built-in OCR task
        OcrOptions ocrOptions = OcrOptions.builder()
                .task(OcrOptions.Task.KEY_INFORMATION_EXTRACTION)
                .taskConfig(OcrOptions.TaskConfig.builder()
                        .resultSchema(resultSchema)
                        .build())
                .build();
        MultiModalConversationParam param = MultiModalConversationParam.builder()
                // If the environment variable is not set, replace the next line with your Model Studio API key: .apiKey("sk-xxx")
                // API keys differ by region. Get an API key: https://help.aliyun.com/zh/model-studio/get-api-key
                .apiKey(System.getenv("DASHSCOPE_API_KEY"))
                .model("qwen-vl-ocr-latest")
                .message(userMessage)
                .ocrOptions(ocrOptions)
                .build();
        MultiModalConversationResult result = conv.call(param);
        System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("ocr_result"));
    }

    public static void main(String[] args) {
        try {
            simpleMultiModalConversationCall();
        } catch (ApiException | NoApiKeyException | UploadFileException e) {
            System.out.println(e.getMessage());
        }
        System.exit(0);
    }
}
```

curl

```bash
# ======= Important =======
# API keys differ by region. Get an API key: https://help.aliyun.com/zh/model-studio/get-api-key
# Beijing-region base_url. For Virginia-region models, use https://dashscope-us.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# For Singapore-region models, use https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# === Delete these comments before running ===

curl --location 'https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation' \
--header "Authorization: Bearer $DASHSCOPE_API_KEY" \
--header 'Content-Type: application/json' \
--data '
{
  "model": "qwen-vl-ocr-latest",
  "input": {
    "messages": [
      {
        "role": "user",
        "content": [
          {
            "image": "http://duguang-labelling.oss-cn-shanghai.aliyuncs.com/demo_ocr/receipt_zh_demo.jpg",
            "min_pixels": 3072,
            "max_pixels": 8388608,
            "enable_rotate": false
          }
        ]
      }
    ]
  },
  "parameters": {
    "ocr_options": {
      "task": "key_information_extraction",
      "task_config": {
        "result_schema": {
          "乘车日期": "对应图中乘车日期时间,格式为年-月-日,比如2025-03-05",
          "发票代码": "提取图中的发票代码,通常为一组数字或字母组合",
          "发票号码": "提取发票上的号码,通常由纯数字组成。"
        }
      }
    }
  }
}
'
```

When you call the model through the OpenAI-compatible SDK or HTTP API, append the custom JSON schema to the end of the prompt string.
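Here is a minimal sketch of that approach with the OpenAI Python SDK. The specified prompt for information extraction is not reproduced here, so BASE_PROMPT is a placeholder that you must replace with the prompt specified for the task. The helper names are hypothetical. Passing ensure_ascii=False to json.dumps keeps the Chinese field names readable in the prompt.

```python
import json
import os

# Placeholder: replace with the prompt specified for custom field extraction
BASE_PROMPT = "<prompt specified for custom field extraction>"

RESULT_SCHEMA = {
    "乘车日期": "对应图中乘车日期时间,格式为年-月-日,比如2025-03-05",
    "发票代码": "提取图中的发票代码,通常为一组数字或字母组合",
    "发票号码": "提取发票上的号码,通常由纯数字组成。",
}


def build_kie_prompt(schema, base_prompt=BASE_PROMPT):
    """Append the JSON schema to the end of the prompt string."""
    return base_prompt + json.dumps(schema, ensure_ascii=False)


def extract_fields(image_url, schema):
    """Call qwen-vl-ocr-latest through the OpenAI-compatible API with the schema appended to the prompt."""
    from openai import OpenAI  # imported here so the prompt helper works without the SDK installed

    client = OpenAI(
        api_key=os.getenv("DASHSCOPE_API_KEY"),
        # Beijing-region base_url; switch the host for other regions as in the examples above
        base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",
    )
    completion = client.chat.completions.create(
        model="qwen-vl-ocr-latest",
        messages=[{
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": image_url}},
                {"type": "text", "text": build_kie_prompt(schema)},
            ],
        }],
    )
    return completion.choices[0].message.content


# Usage:
# print(extract_fields("http://duguang-labelling.oss-cn-shanghai.aliyuncs.com/demo_ocr/receipt_zh_demo.jpg", RESULT_SCHEMA))
```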

Table parsing

The model parses the table elements in an image and returns the recognition result as text in HTML format.
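Because the result is HTML, you can convert it into rows with Python's standard html.parser module. The sketch below is a minimal parser. It collects the text of each td/th cell, one list per tr row, and ignores colspan and rowspan. It also does not separate nested or multiple tables, so a full HTML library would be more robust. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class _TableRows(HTMLParser):
    """Collect the text of <td>/<th> cells, one list per <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row being read
        self._cell = None  # text fragments of the cell being read

    def _finish_cell(self):
        if self._cell is not None and self._row is not None:
            self._row.append("".join(self._cell).strip())
        self._cell = None

    def _finish_row(self):
        self._finish_cell()
        if self._row is not None:
            self.rows.append(self._row)
        self._row = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._finish_row()
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._finish_cell()  # tolerate an unclosed previous cell
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._finish_cell()
        elif tag in ("tr", "table"):
            self._finish_row()

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def html_table_to_rows(html):
    """Return the table's cell texts as a list of rows (lists of strings)."""
    parser = _TableRows()
    parser.feed(html)
    parser.close()
    parser._finish_row()  # flush a row left open at the end of the input
    return parser.rows


# Usage: rows = html_table_to_rows(response.output.choices[0].message.content[0]["text"])
```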

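| task value | Specified prompt | Output format and example |
|---|---|---|
| table_parsing | | |

The following sample code calls the task through the DashScope SDK and over HTTP: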

Python

```python
import os

import dashscope

# Beijing-region base_url. For Virginia-region models, use https://dashscope-us.aliyuncs.com/api/v1
# For Singapore-region models, use https://dashscope-intl.aliyuncs.com/api/v1
dashscope.base_http_api_url = "https://dashscope.aliyuncs.com/api/v1"

messages = [{
    "role": "user",
    "content": [{
        "image": "http://duguang-llm.oss-cn-hangzhou.aliyuncs.com/llm_data_keeper/data/doc_parsing/tables/photo/eng/17.jpg",
        # Minimum pixel threshold: smaller images are upscaled until their total pixel count exceeds min_pixels
        "min_pixels": 32 * 32 * 3,
        # Maximum pixel threshold: larger images are downscaled until their total pixel count falls below max_pixels
        "max_pixels": 32 * 32 * 8192,
        # Whether to enable automatic image rotation correction
        "enable_rotate": False,
    }],
}]

response = dashscope.MultiModalConversation.call(
    # API keys differ by region. Get an API key: https://help.aliyun.com/zh/model-studio/get-api-key
    # If the environment variable is not set, replace the next line with your Model Studio API key: api_key="sk-xxx",
    api_key=os.getenv('DASHSCOPE_API_KEY'),
    model='qwen-vl-ocr-latest',
    messages=messages,
    # Set the built-in task to table parsing
    ocr_options={"task": "table_parsing"},
)

# The table parsing task returns its result in HTML format
print(response.output.choices[0].message.content[0]["text"])
```

Java

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;
import com.alibaba.dashscope.aigc.multimodalconversation.OcrOptions;
import com.alibaba.dashscope.common.MultiModalMessage;
import com.alibaba.dashscope.common.Role;
import com.alibaba.dashscope.exception.ApiException;
import com.alibaba.dashscope.exception.NoApiKeyException;
import com.alibaba.dashscope.exception.UploadFileException;
import com.alibaba.dashscope.utils.Constants;

public class Main {
    // Beijing-region base_url. For Virginia-region models, change base_url to https://dashscope-us.aliyuncs.com/api/v1
    // For Singapore-region models, change base_url to https://dashscope-intl.aliyuncs.com/api/v1
    static {Constants.baseHttpApiUrl = "https://dashscope.aliyuncs.com/api/v1";}

    public static void simpleMultiModalConversationCall()
            throws ApiException, NoApiKeyException, UploadFileException {
        MultiModalConversation conv = new MultiModalConversation();
        Map<String, Object> map = new HashMap<>();
        map.put("image", "https://duguang-llm.oss-cn-hangzhou.aliyuncs.com/llm_data_keeper/data/doc_parsing/tables/photo/eng/17.jpg");
        // Maximum pixel threshold: larger images are downscaled until their total pixel count falls below max_pixels
        map.put("max_pixels", 8388608);
        // Minimum pixel threshold: smaller images are upscaled until their total pixel count exceeds min_pixels
        map.put("min_pixels", 3072);
        // Whether to enable automatic image rotation correction
        map.put("enable_rotate", false);
        // Configure the built-in OCR task
        OcrOptions ocrOptions = OcrOptions.builder()
                .task(OcrOptions.Task.TABLE_PARSING)
                .build();
        MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())
                .content(Arrays.asList(map)).build();
        MultiModalConversationParam param = MultiModalConversationParam.builder()
                // If the environment variable is not set, replace the next line with your Model Studio API key: .apiKey("sk-xxx")
                // API keys differ by region. Get an API key: https://help.aliyun.com/zh/model-studio/get-api-key
                .apiKey(System.getenv("DASHSCOPE_API_KEY"))
                .model("qwen-vl-ocr-latest")
                .message(userMessage)
                .ocrOptions(ocrOptions)
                .build();
        MultiModalConversationResult result = conv.call(param);
        System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));
    }

    public static void main(String[] args) {
        try {
            simpleMultiModalConversationCall();
        } catch (ApiException | NoApiKeyException | UploadFileException e) {
            System.out.println(e.getMessage());
        }
        System.exit(0);
    }
}
```

curl

```bash
# ======= Important =======
# API keys differ by region. Get an API key: https://help.aliyun.com/zh/model-studio/get-api-key
# Beijing-region base_url. For Virginia-region models, use https://dashscope-us.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# For Singapore-region models, use https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# === Delete these comments before running ===

curl --location 'https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation' \
--header "Authorization: Bearer $DASHSCOPE_API_KEY" \
--header 'Content-Type: application/json' \
--data '
{
  "model": "qwen-vl-ocr-latest",
  "input": {
    "messages": [
      {
        "role": "user",
        "content": [
          {
            "image": "http://duguang-llm.oss-cn-hangzhou.aliyuncs.com/llm_data_keeper/data/doc_parsing/tables/photo/eng/17.jpg",
            "min_pixels": 3072,
            "max_pixels": 8388608,
            "enable_rotate": false
          }
        ]
      }
    ]
  },
  "parameters": {
    "ocr_options": {
      "task": "table_parsing"
    }
  }
}
'
```

Document parsing

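The model can parse scanned documents or PDF documents stored as images. It recognizes elements such as titles, abstracts, and labels and returns the recognition result as text in LaTeX format.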

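| task value | Specified prompt | Output format and example |
|---|---|---|
| document_parsing | | |

The following sample code calls the task through the DashScope SDK and over HTTP: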

Python

```python
import os

import dashscope

# Beijing-region base_url. For Virginia-region models, use https://dashscope-us.aliyuncs.com/api/v1
# For Singapore-region models, use https://dashscope-intl.aliyuncs.com/api/v1
dashscope.base_http_api_url = "https://dashscope.aliyuncs.com/api/v1"

messages = [{
    "role": "user",
    "content": [{
        "image": "https://img.alicdn.com/imgextra/i1/O1CN01ukECva1cisjyK6ZDK_!!6000000003635-0-tps-1500-1734.jpg",
        # Minimum pixel threshold: smaller images are upscaled until their total pixel count exceeds min_pixels
        "min_pixels": 32 * 32 * 3,
        # Maximum pixel threshold: larger images are downscaled until their total pixel count falls below max_pixels
        "max_pixels": 32 * 32 * 8192,
        # Whether to enable automatic image rotation correction
        "enable_rotate": False,
    }],
}]

response = dashscope.MultiModalConversation.call(
    # If the environment variable is not set, replace the next line with your Model Studio API key: api_key="sk-xxx",
    # API keys differ by region. Get an API key: https://help.aliyun.com/zh/model-studio/get-api-key
    api_key=os.getenv('DASHSCOPE_API_KEY'),
    model='qwen-vl-ocr-latest',
    messages=messages,
    # Set the built-in task to document parsing
    ocr_options={"task": "document_parsing"},
)

# The document parsing task returns its result in LaTeX format
print(response.output.choices[0].message.content[0]["text"])
```

Java

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;
import com.alibaba.dashscope.aigc.multimodalconversation.OcrOptions;
import com.alibaba.dashscope.common.MultiModalMessage;
import com.alibaba.dashscope.common.Role;
import com.alibaba.dashscope.exception.ApiException;
import com.alibaba.dashscope.exception.NoApiKeyException;
import com.alibaba.dashscope.exception.UploadFileException;
import com.alibaba.dashscope.utils.Constants;

public class Main {
    // Beijing-region base_url. For Virginia-region models, change base_url to https://dashscope-us.aliyuncs.com/api/v1
    // For Singapore-region models, change base_url to https://dashscope-intl.aliyuncs.com/api/v1
    static {Constants.baseHttpApiUrl = "https://dashscope.aliyuncs.com/api/v1";}

    public static void simpleMultiModalConversationCall()
            throws ApiException, NoApiKeyException, UploadFileException {
        MultiModalConversation conv = new MultiModalConversation();
        Map<String, Object> map = new HashMap<>();
        map.put("image", "https://img.alicdn.com/imgextra/i1/O1CN01ukECva1cisjyK6ZDK_!!6000000003635-0-tps-1500-1734.jpg");
        // Maximum pixel threshold: larger images are downscaled until their total pixel count falls below max_pixels
        map.put("max_pixels", 8388608);
        // Minimum pixel threshold: smaller images are upscaled until their total pixel count exceeds min_pixels
        map.put("min_pixels", 3072);
        // Whether to enable automatic image rotation correction
        map.put("enable_rotate", false);
        // Configure the built-in OCR task
        OcrOptions ocrOptions = OcrOptions.builder()
                .task(OcrOptions.Task.DOCUMENT_PARSING)
                .build();
        MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())
                .content(Arrays.asList(map)).build();
        MultiModalConversationParam param = MultiModalConversationParam.builder()
                // If the environment variable is not set, replace the next line with your Model Studio API key: .apiKey("sk-xxx")
                // API keys differ by region. Get an API key: https://help.aliyun.com/zh/model-studio/get-api-key
                .apiKey(System.getenv("DASHSCOPE_API_KEY"))
                .model("qwen-vl-ocr-latest")
                .message(userMessage)
                .ocrOptions(ocrOptions)
                .build();
        MultiModalConversationResult result = conv.call(param);
        System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));
    }

    public static void main(String[] args) {
        try {
            simpleMultiModalConversationCall();
        } catch (ApiException | NoApiKeyException | UploadFileException e) {
            System.out.println(e.getMessage());
        }
        System.exit(0);
    }
}
```

curl

```bash
# ======= Important =======
# API keys differ by region. Get an API key: https://help.aliyun.com/zh/model-studio/get-api-key
# Beijing-region base_url. For Virginia-region models, use https://dashscope-us.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# For Singapore-region models, use https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# === Delete these comments before running ===

curl --location 'https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation' \
--header "Authorization: Bearer $DASHSCOPE_API_KEY" \
--header 'Content-Type: application/json' \
--data '{
  "model": "qwen-vl-ocr-latest",
  "input": {
    "messages": [
      {
        "role": "user",
        "content": [{
          "image": "https://img.alicdn.com/imgextra/i1/O1CN01ukECva1cisjyK6ZDK_!!6000000003635-0-tps-1500-1734.jpg",
          "min_pixels": 3072,
          "max_pixels": 8388608,
          "enable_rotate": false
        }]
      }
    ]
  },
  "parameters": {
    "ocr_options": {
      "task": "document_parsing"
    }
  }
}
'
```

Formula recognition

The model can parse formulas in images and returns the recognition result as text in LaTeX format.

| Value of `task` | Output format |
| --- | --- |
| `formula_recognition` | Text in LaTeX format |

The following sample code calls this task through the DashScope SDK and over HTTP:

```python
import os
import dashscope

# The following base_url is for the Beijing region. For models in the Virginia region, replace it with https://dashscope-us.aliyuncs.com/api/v1
# For models in the Singapore region, replace it with https://dashscope-intl.aliyuncs.com/api/v1
dashscope.base_http_api_url = "https://dashscope.aliyuncs.com/api/v1"

messages = [{
    "role": "user",
    "content": [{
        "image": "http://duguang-llm.oss-cn-hangzhou.aliyuncs.com/llm_data_keeper/data/formula_handwriting/test/inline_5_4.jpg",
        # Minimum pixel threshold: smaller images are upscaled until the total pixel count exceeds min_pixels
        "min_pixels": 32 * 32 * 3,
        # Maximum pixel threshold: larger images are downscaled until the total pixel count falls below max_pixels
        "max_pixels": 32 * 32 * 8192,
        # Whether to enable automatic image rotation correction
        "enable_rotate": False}]
}]

response = dashscope.MultiModalConversation.call(
    # If the environment variable is not set, replace the next line with your Model Studio API Key: api_key="sk-xxx"
    api_key=os.getenv('DASHSCOPE_API_KEY'),
    model='qwen-vl-ocr-latest',
    messages=messages,
    # Set the built-in task to formula recognition
    ocr_options={"task": "formula_recognition"}
)

# The formula recognition task returns the result in LaTeX format
print(response["output"]["choices"][0]["message"].content[0]["text"])
```

```java
import java.util.Arrays;
import java.util.Map;
import java.util.HashMap;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;
import com.alibaba.dashscope.aigc.multimodalconversation.OcrOptions;
import com.alibaba.dashscope.common.MultiModalMessage;
import com.alibaba.dashscope.common.Role;
import com.alibaba.dashscope.exception.ApiException;
import com.alibaba.dashscope.exception.NoApiKeyException;
import com.alibaba.dashscope.exception.UploadFileException;
import com.alibaba.dashscope.utils.Constants;

public class Main {
    // The following base_url is for the Beijing region. For models in the Virginia region, replace it with https://dashscope-us.aliyuncs.com/api/v1
    // For models in the Singapore region, replace it with https://dashscope-intl.aliyuncs.com/api/v1
    static {Constants.baseHttpApiUrl = "https://dashscope.aliyuncs.com/api/v1";}

    public static void simpleMultiModalConversationCall()
            throws ApiException, NoApiKeyException, UploadFileException {
        MultiModalConversation conv = new MultiModalConversation();
        Map<String, Object> map = new HashMap<>();
        map.put("image", "http://duguang-llm.oss-cn-hangzhou.aliyuncs.com/llm_data_keeper/data/formula_handwriting/test/inline_5_4.jpg");
        // Maximum pixel threshold: larger images are downscaled until the total pixel count falls below max_pixels
        map.put("max_pixels", 8388608);
        // Minimum pixel threshold: smaller images are upscaled until the total pixel count exceeds min_pixels
        map.put("min_pixels", 3072);
        // Whether to enable automatic image rotation correction
        map.put("enable_rotate", false);
        // Configure the built-in OCR task
        OcrOptions ocrOptions = OcrOptions.builder()
                .task(OcrOptions.Task.FORMULA_RECOGNITION)
                .build();
        MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())
                .content(Arrays.asList(
                        map
                )).build();
        MultiModalConversationParam param = MultiModalConversationParam.builder()
                // If the environment variable is not set, replace the next line with your Model Studio API Key: .apiKey("sk-xxx")
                // API Keys differ by region. Get an API Key: https://help.aliyun.com/zh/model-studio/get-api-key
                .apiKey(System.getenv("DASHSCOPE_API_KEY"))
                .model("qwen-vl-ocr-latest")
                .message(userMessage)
                .ocrOptions(ocrOptions)
                .build();
        MultiModalConversationResult result = conv.call(param);
        System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));
    }

    public static void main(String[] args) {
        try {
            simpleMultiModalConversationCall();
        } catch (ApiException | NoApiKeyException | UploadFileException e) {
            System.out.println(e.getMessage());
        }
        System.exit(0);
    }
}
```

```shell
# ======= Important =======
# API Keys differ by region. Get an API Key: https://help.aliyun.com/zh/model-studio/get-api-key
# The URL below is for the Beijing region. For models in the Virginia region, replace it with: https://dashscope-us.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# For models in the Singapore region, replace it with: https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# === Delete these comments before running ===
curl --location 'https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation' \
--header "Authorization: Bearer $DASHSCOPE_API_KEY" \
--header 'Content-Type: application/json' \
--data '{
  "model": "qwen-vl-ocr-latest",
  "input": {
    "messages": [
      {
        "role": "user",
        "content": [
          {
            "image": "http://duguang-llm.oss-cn-hangzhou.aliyuncs.com/llm_data_keeper/data/formula_handwriting/test/inline_5_4.jpg",
            "min_pixels": 3072,
            "max_pixels": 8388608,
            "enable_rotate": false
          }
        ]
      }
    ]
  },
  "parameters": {
    "ocr_options": {
      "task": "formula_recognition"
    }
  }
}'
```

General text recognition

General text recognition is intended for Chinese and English text and returns the recognition result as plain text.

| Value of `task` | Output format |
| --- | --- |
| `text_recognition` | Plain text |

The following sample code calls this task through the DashScope SDK and over HTTP:

```python
import os
import dashscope

# The following base_url is for the Beijing region. For models in the Virginia region, replace it with https://dashscope-us.aliyuncs.com/api/v1
# For models in the Singapore region, replace it with https://dashscope-intl.aliyuncs.com/api/v1
dashscope.base_http_api_url = "https://dashscope.aliyuncs.com/api/v1"

messages = [{
    "role": "user",
    "content": [{
        "image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/ctdzex/biaozhun.jpg",
        # Minimum pixel threshold: smaller images are upscaled until the total pixel count exceeds min_pixels
        "min_pixels": 32 * 32 * 3,
        # Maximum pixel threshold: larger images are downscaled until the total pixel count falls below max_pixels
        "max_pixels": 32 * 32 * 8192,
        # Whether to enable automatic image rotation correction
        "enable_rotate": False}]
}]

response = dashscope.MultiModalConversation.call(
    # If the environment variable is not set, replace the next line with your Model Studio API Key: api_key="sk-xxx"
    # API Keys differ by region. Get an API Key: https://help.aliyun.com/zh/model-studio/get-api-key
    api_key=os.getenv('DASHSCOPE_API_KEY'),
    model='qwen-vl-ocr-latest',
    messages=messages,
    # Set the built-in task to general text recognition
    ocr_options={"task": "text_recognition"}
)

# The general text recognition task returns the result as plain text
print(response["output"]["choices"][0]["message"].content[0]["text"])
```

```java
import java.util.Arrays;
import java.util.Map;
import java.util.HashMap;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;
import com.alibaba.dashscope.aigc.multimodalconversation.OcrOptions;
import com.alibaba.dashscope.common.MultiModalMessage;
import com.alibaba.dashscope.common.Role;
import com.alibaba.dashscope.exception.ApiException;
import com.alibaba.dashscope.exception.NoApiKeyException;
import com.alibaba.dashscope.exception.UploadFileException;
import com.alibaba.dashscope.utils.Constants;

public class Main {
    // The following base_url is for the Beijing region. For models in the Virginia region, replace it with https://dashscope-us.aliyuncs.com/api/v1
    // For models in the Singapore region, replace it with https://dashscope-intl.aliyuncs.com/api/v1
    static {Constants.baseHttpApiUrl = "https://dashscope.aliyuncs.com/api/v1";}

    public static void simpleMultiModalConversationCall()
            throws ApiException, NoApiKeyException, UploadFileException {
        MultiModalConversation conv = new MultiModalConversation();
        Map<String, Object> map = new HashMap<>();
        map.put("image", "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/ctdzex/biaozhun.jpg");
        // Maximum pixel threshold: larger images are downscaled until the total pixel count falls below max_pixels
        map.put("max_pixels", 8388608);
        // Minimum pixel threshold: smaller images are upscaled until the total pixel count exceeds min_pixels
        map.put("min_pixels", 3072);
        // Whether to enable automatic image rotation correction
        map.put("enable_rotate", false);
        // Configure the built-in task
        OcrOptions ocrOptions = OcrOptions.builder()
                .task(OcrOptions.Task.TEXT_RECOGNITION)
                .build();
        MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())
                .content(Arrays.asList(
                        map
                )).build();
        MultiModalConversationParam param = MultiModalConversationParam.builder()
                // If the environment variable is not set, replace the next line with your Model Studio API Key: .apiKey("sk-xxx")
                // API Keys differ by region. Get an API Key: https://help.aliyun.com/zh/model-studio/get-api-key
                .apiKey(System.getenv("DASHSCOPE_API_KEY"))
                .model("qwen-vl-ocr-latest")
                .message(userMessage)
                .ocrOptions(ocrOptions)
                .build();
        MultiModalConversationResult result = conv.call(param);
        System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));
    }

    public static void main(String[] args) {
        try {
            simpleMultiModalConversationCall();
        } catch (ApiException | NoApiKeyException | UploadFileException e) {
            System.out.println(e.getMessage());
        }
        System.exit(0);
    }
}
```

```shell
# ======= Important =======
# API Keys differ by region. Get an API Key: https://help.aliyun.com/zh/model-studio/get-api-key
# The URL below is for the Beijing region. For models in the Virginia region, replace it with: https://dashscope-us.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# For models in the Singapore region, replace it with: https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# === Delete these comments before running ===
curl --location 'https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation' \
--header "Authorization: Bearer $DASHSCOPE_API_KEY" \
--header 'Content-Type: application/json' \
--data '{
  "model": "qwen-vl-ocr-latest",
  "input": {
    "messages": [
      {
        "role": "user",
        "content": [{
          "image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/ctdzex/biaozhun.jpg",
          "min_pixels": 3072,
          "max_pixels": 8388608,
          "enable_rotate": false
        }]
      }
    ]
  },
  "parameters": {
    "ocr_options": {
      "task": "text_recognition"
    }
  }
}'
```

Multilingual recognition

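Multilingual recognition is intended for languages other than Chinese and English. Supported languages: Arabic, French, German, Italian, Japanese, Korean, Portuguese, Russian, Spanish, and Vietnamese. The recognition result is returned as plain text.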

| Value of `task` | Output format |
| --- | --- |
| `multi_lan` | Plain text |

The following sample code calls this task through the DashScope SDK and over HTTP:

```python
import os
import dashscope

# The following base_url is for the Beijing region. For models in the Virginia region, replace it with https://dashscope-us.aliyuncs.com/api/v1
# For models in the Singapore region, replace it with https://dashscope-intl.aliyuncs.com/api/v1
dashscope.base_http_api_url = "https://dashscope.aliyuncs.com/api/v1"

messages = [{
    "role": "user",
    "content": [{
        "image": "https://img.alicdn.com/imgextra/i2/O1CN01VvUMNP1yq8YvkSDFY_!!6000000006629-2-tps-6000-3000.png",
        # Minimum pixel threshold: smaller images are upscaled until the total pixel count exceeds min_pixels
        "min_pixels": 32 * 32 * 3,
        # Maximum pixel threshold: larger images are downscaled until the total pixel count falls below max_pixels
        "max_pixels": 32 * 32 * 8192,
        # Whether to enable automatic image rotation correction
        "enable_rotate": False}]
}]

response = dashscope.MultiModalConversation.call(
    # If the environment variable is not set, replace the next line with your Model Studio API Key: api_key="sk-xxx"
    # API Keys differ by region. Get an API Key: https://help.aliyun.com/zh/model-studio/get-api-key
    api_key=os.getenv('DASHSCOPE_API_KEY'),
    model='qwen-vl-ocr-latest',
    messages=messages,
    # Set the built-in task to multilingual recognition
    ocr_options={"task": "multi_lan"}
)

# The multilingual recognition task returns the result as plain text
print(response["output"]["choices"][0]["message"].content[0]["text"])
```

```java
import java.util.Arrays;
import java.util.Map;
import java.util.HashMap;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;
import com.alibaba.dashscope.aigc.multimodalconversation.OcrOptions;
import com.alibaba.dashscope.common.MultiModalMessage;
import com.alibaba.dashscope.common.Role;
import com.alibaba.dashscope.exception.ApiException;
import com.alibaba.dashscope.exception.NoApiKeyException;
import com.alibaba.dashscope.exception.UploadFileException;
import com.alibaba.dashscope.utils.Constants;

public class Main {
    // The following base_url is for the Beijing region. For models in the Virginia region, replace it with https://dashscope-us.aliyuncs.com/api/v1
    // For models in the Singapore region, replace it with https://dashscope-intl.aliyuncs.com/api/v1
    static {Constants.baseHttpApiUrl = "https://dashscope.aliyuncs.com/api/v1";}

    public static void simpleMultiModalConversationCall()
            throws ApiException, NoApiKeyException, UploadFileException {
        MultiModalConversation conv = new MultiModalConversation();
        Map<String, Object> map = new HashMap<>();
        map.put("image", "https://img.alicdn.com/imgextra/i2/O1CN01VvUMNP1yq8YvkSDFY_!!6000000006629-2-tps-6000-3000.png");
        // Maximum pixel threshold: larger images are downscaled until the total pixel count falls below max_pixels
        map.put("max_pixels", 8388608);
        // Minimum pixel threshold: smaller images are upscaled until the total pixel count exceeds min_pixels
        map.put("min_pixels", 3072);
        // Whether to enable automatic image rotation correction
        map.put("enable_rotate", false);
        // Configure the built-in OCR task
        OcrOptions ocrOptions = OcrOptions.builder()
                .task(OcrOptions.Task.MULTI_LAN)
                .build();
        MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())
                .content(Arrays.asList(
                        map
                )).build();
        MultiModalConversationParam param = MultiModalConversationParam.builder()
                // If the environment variable is not set, replace the next line with your Model Studio API Key: .apiKey("sk-xxx")
                // API Keys differ by region. Get an API Key: https://help.aliyun.com/zh/model-studio/get-api-key
                .apiKey(System.getenv("DASHSCOPE_API_KEY"))
                .model("qwen-vl-ocr-latest")
                .message(userMessage)
                .ocrOptions(ocrOptions)
                .build();
        MultiModalConversationResult result = conv.call(param);
        System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));
    }

    public static void main(String[] args) {
        try {
            simpleMultiModalConversationCall();
        } catch (ApiException | NoApiKeyException | UploadFileException e) {
            System.out.println(e.getMessage());
        }
        System.exit(0);
    }
}
```

```shell
# ======= Important =======
# API Keys differ by region. Get an API Key: https://help.aliyun.com/zh/model-studio/get-api-key
# The URL below is for the Beijing region. For models in the Virginia region, replace it with: https://dashscope-us.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# For models in the Singapore region, replace it with: https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# === Delete these comments before running ===
curl --location 'https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation' \
--header "Authorization: Bearer $DASHSCOPE_API_KEY" \
--header 'Content-Type: application/json' \
--data '{
  "model": "qwen-vl-ocr-latest",
  "input": {
    "messages": [
      {
        "role": "user",
        "content": [
          {
            "image": "https://img.alicdn.com/imgextra/i2/O1CN01VvUMNP1yq8YvkSDFY_!!6000000006629-2-tps-6000-3000.png",
            "min_pixels": 3072,
            "max_pixels": 8388608,
            "enable_rotate": false
          }
        ]
      }
    ]
  },
  "parameters": {
    "ocr_options": {
      "task": "multi_lan"
    }
  }
}'
```

Passing local files (Base64 encoding or file path)

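Qwen-VL models support two ways to upload local files: Base64 encoding and passing the file path directly. Choose a method based on file size and SDK type (for recommendations, see How do I choose a file upload method?). Either way, the file must meet the requirements in Image limits.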

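Base64 upload

Convert the file to a Base64-encoded string and pass that string to the model. This works with the OpenAI-compatible API, the DashScope SDKs, and HTTP calls.

File path upload

Pass the local file path directly to the model. Only the DashScope Python and Java SDKs support this; DashScope HTTP calls and the OpenAI-compatible API do not.

Specify the file path according to your programming language and operating system, as shown in the table below.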

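Passing a file path

Passing a file path is supported only when calling through the DashScope Python and Java SDKs; DashScope HTTP calls and the OpenAI-compatible API are not supported.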
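The Python and Java examples in this section turn an absolute local path into an image reference by prefixing it with `file://`. A minimal sketch of that step for POSIX-style paths; `to_file_url` is a hypothetical helper, not part of the DashScope SDK, and Windows paths are not handled here:

```python
import os


def to_file_url(local_path: str) -> str:
    """Return a file:// reference for a local image, as in f"file://{local_path}".

    Hypothetical helper: the path is made absolute first, and a missing file is
    reported before any request is built.
    """
    abs_path = os.path.abspath(local_path)
    if not os.path.isfile(abs_path):
        raise FileNotFoundError(abs_path)
    # "/home/user/test.jpg" becomes "file:///home/user/test.jpg"
    return f"file://{abs_path}"
```

Resolving the path up front means a relative path such as `xxx/test.jpg` is interpreted against the current working directory, which is the usual source of "file not found" surprises.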

Python

```python
import os
import dashscope
from dashscope import MultiModalConversation

# The following base_url is for the Beijing region. For models in the Virginia region, replace it with https://dashscope-us.aliyuncs.com/api/v1
# For models in the Singapore region, replace it with https://dashscope-intl.aliyuncs.com/api/v1
dashscope.base_http_api_url = "https://dashscope.aliyuncs.com/api/v1"

# Replace xxx/test.jpg with the absolute path of your local image
local_path = "xxx/test.jpg"
image_path = f"file://{local_path}"
messages = [
    {
        "role": "user",
        "content": [
            {
                "image": image_path,
                # Minimum pixel threshold: smaller images are upscaled until the total pixel count exceeds min_pixels
                "min_pixels": 32 * 32 * 3,
                # Maximum pixel threshold: larger images are downscaled until the total pixel count falls below max_pixels
                "max_pixels": 32 * 32 * 8192,
                # Whether to enable automatic image rotation correction
                "enable_rotate": False,
            },
            # When no built-in task is set, you can pass a prompt in the text field; if omitted, the default prompt is used: Please output only the text content from the image without any additional descriptions or formatting.
            # The Chinese prompt below asks the model to extract 10 fields (invoice number, train number, stations, departure time, seat, seat class, fare, ID number, passenger name) from a train ticket as JSON, using ? for illegible characters.
            {
                "text": "请提取车票图像中的发票号码、车次、起始站、终点站、发车日期和时间点、座位号、席别类型、票价、身份证号码、购票人姓名。要求准确无误的提取上述关键信息、不要遗漏和捏造虚假信息,模糊或者强光遮挡的单个文字可以用英文问号?代替。返回数据格式以json方式输出,格式为:{'发票号码':'xxx', '车次':'xxx', '起始站':'xxx', '终点站':'xxx', '发车日期和时间点':'xxx', '座位号':'xxx', '席别类型':'xxx','票价':'xxx', '身份证号码':'xxx', '购票人姓名':'xxx'}"
            },
        ],
    }
]

response = MultiModalConversation.call(
    # If the environment variable is not set, replace the next line with your Model Studio API Key: api_key="sk-xxx"
    # API Keys differ by region. Get an API Key: https://help.aliyun.com/zh/model-studio/get-api-key
    api_key=os.getenv("DASHSCOPE_API_KEY"),
    model="qwen-vl-ocr-latest",
    messages=messages,
)

print(response["output"]["choices"][0]["message"].content[0]["text"])
```

Java

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.Map;
import java.util.HashMap;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;
import com.alibaba.dashscope.common.MultiModalMessage;
import com.alibaba.dashscope.common.Role;
import com.alibaba.dashscope.exception.ApiException;
import com.alibaba.dashscope.exception.NoApiKeyException;
import com.alibaba.dashscope.exception.UploadFileException;
import com.alibaba.dashscope.utils.Constants;

public class Main {
    // The following base_url is for the Beijing region. For models in the Virginia region, replace it with https://dashscope-us.aliyuncs.com/api/v1
    // For models in the Singapore region, replace it with https://dashscope-intl.aliyuncs.com/api/v1
    static {Constants.baseHttpApiUrl = "https://dashscope.aliyuncs.com/api/v1";}

    public static void simpleMultiModalConversationCall(String localPath)
            throws ApiException, NoApiKeyException, UploadFileException {
        String filePath = "file://" + localPath;
        MultiModalConversation conv = new MultiModalConversation();
        Map<String, Object> map = new HashMap<>();
        map.put("image", filePath);
        // Maximum pixel threshold: larger images are downscaled until the total pixel count falls below max_pixels
        map.put("max_pixels", 8388608);
        // Minimum pixel threshold: smaller images are upscaled until the total pixel count exceeds min_pixels
        map.put("min_pixels", 3072);
        // Whether to enable automatic image rotation correction
        map.put("enable_rotate", false);
        MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())
                .content(Arrays.asList(
                        map,
                        // When no built-in task is set, you can pass a prompt in the text field; if omitted, the default prompt is used: Please output only the text content from the image without any additional descriptions or formatting.
                        // The Chinese prompt below asks the model to extract 10 fields from a train ticket as JSON, using ? for illegible characters.
                        Collections.singletonMap("text", "请提取车票图像中的发票号码、车次、起始站、终点站、发车日期和时间点、座位号、席别类型、票价、身份证号码、购票人姓名。要求准确无误的提取上述关键信息、不要遗漏和捏造虚假信息,模糊或者强光遮挡的单个文字可以用英文问号?代替。返回数据格式以json方式输出,格式为:{'发票号码':'xxx', '车次':'xxx', '起始站':'xxx', '终点站':'xxx', '发车日期和时间点':'xxx', '座位号':'xxx', '席别类型':'xxx','票价':'xxx', '身份证号码':'xxx', '购票人姓名':'xxx'}"))).build();
        MultiModalConversationParam param = MultiModalConversationParam.builder()
                // If the environment variable is not set, replace the next line with your Model Studio API Key: .apiKey("sk-xxx")
                // API Keys differ by region. Get an API Key: https://help.aliyun.com/zh/model-studio/get-api-key
                .apiKey(System.getenv("DASHSCOPE_API_KEY"))
                .model("qwen-vl-ocr-latest")
                .message(userMessage)
                .build();
        MultiModalConversationResult result = conv.call(param);
        System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));
    }

    public static void main(String[] args) {
        try {
            // Replace xxx/test.jpg with the absolute path of your local image
            simpleMultiModalConversationCall("xxx/test.jpg");
        } catch (ApiException | NoApiKeyException | UploadFileException e) {
            System.out.println(e.getMessage());
        }
        System.exit(0);
    }
}
```

Passing Base64

OpenAI compatible

Python

```python
from openai import OpenAI
import os
import base64


# Read a local file and encode it as Base64
def encode_image(image_path):
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode("utf-8")


# Replace xxx/test.png with the absolute path of your local image
base64_image = encode_image("xxx/test.png")

client = OpenAI(
    # If the environment variable is not set, replace the next line with your Model Studio API Key: api_key="sk-xxx"
    # API Keys differ by region. Get an API Key: https://help.aliyun.com/zh/model-studio/get-api-key
    api_key=os.getenv("DASHSCOPE_API_KEY"),
    # The following base_url is for the Beijing region. For models in the Virginia region, replace it with https://dashscope-us.aliyuncs.com/compatible-mode/v1
    # For models in the Singapore region, replace it with https://dashscope-intl.aliyuncs.com/compatible-mode/v1
    base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",
)

completion = client.chat.completions.create(
    model="qwen-vl-ocr-latest",
    messages=[
        {
            "role": "user",
            "content": [
                {
                    "type": "image_url",
                    # Note: when passing Base64, the image format (image/{format}) must match a Content Type in the supported image formats list. The f prefix marks an f-string.
                    # PNG image:  f"data:image/png;base64,{base64_image}"
                    # JPEG image: f"data:image/jpeg;base64,{base64_image}"
                    # WEBP image: f"data:image/webp;base64,{base64_image}"
                    "image_url": {"url": f"data:image/png;base64,{base64_image}"},
                    # Minimum pixel threshold: smaller images are upscaled until the total pixel count exceeds min_pixels
                    "min_pixels": 32 * 32 * 3,
                    # Maximum pixel threshold: larger images are downscaled until the total pixel count falls below max_pixels
                    "max_pixels": 32 * 32 * 8192
                },
                # You can pass a prompt in the text field below; if omitted, the default prompt is used: Please output only the text content from the image without any additional descriptions or formatting.
                # The Chinese prompt below asks the model to extract 10 fields from a train ticket as JSON, using ? for illegible characters.
                {"type": "text", "text": "请提取车票图像中的发票号码、车次、起始站、终点站、发车日期和时间点、座位号、席别类型、票价、身份证号码、购票人姓名。要求准确无误的提取上述关键信息、不要遗漏和捏造虚假信息,模糊或者强光遮挡的单个文字可以用英文问号?代替。返回数据格式以json方式输出,格式为:{'发票号码':'xxx', '车次':'xxx', '起始站':'xxx', '终点站':'xxx', '发车日期和时间点':'xxx', '座位号':'xxx', '席别类型':'xxx','票价':'xxx', '身份证号码':'xxx', '购票人姓名':'xxx'}"},
            ],
        }
    ],
)

print(completion.choices[0].message.content)
```

Node.js

```javascript
import OpenAI from "openai";
import { readFileSync } from "fs";

// Initialize the OpenAI client
const client = new OpenAI({
    // If the environment variable is not set, replace the next line with your Model Studio API Key: apiKey: "sk-xxx",
    // API Keys differ by region. Get an API Key: https://help.aliyun.com/zh/model-studio/get-api-key
    apiKey: process.env.DASHSCOPE_API_KEY,
    // The following base_url is for the Beijing region. For models in the Virginia region, replace it with https://dashscope-us.aliyuncs.com/compatible-mode/v1
    // For models in the Singapore region, replace it with https://dashscope-intl.aliyuncs.com/compatible-mode/v1
    baseURL: "https://dashscope.aliyuncs.com/compatible-mode/v1",
});

// Read a local file and encode it as Base64
const encodeImage = (imagePath) => {
    const imageFile = readFileSync(imagePath);
    return imageFile.toString("base64");
};

// Replace xxx/test.jpg with the absolute path of your local image
const base64Image = encodeImage("xxx/test.jpg");

async function main() {
    const completion = await client.chat.completions.create({
        model: "qwen-vl-ocr-latest",
        messages: [{
            "role": "user",
            "content": [{
                "type": "image_url",
                "image_url": {
                    // Note: when passing Base64, the image format (image/{format}) must match a Content Type in the supported image formats list.
                    // PNG image:  data:image/png;base64,${base64Image}
                    // JPEG image: data:image/jpeg;base64,${base64Image}
                    // WEBP image: data:image/webp;base64,${base64Image}
                    "url": `data:image/jpeg;base64,${base64Image}`
                },
                // Minimum pixel threshold: smaller images are upscaled until the total pixel count exceeds min_pixels
                "min_pixels": 32 * 32 * 3,
                // Maximum pixel threshold: larger images are downscaled until the total pixel count falls below max_pixels
                "max_pixels": 32 * 32 * 8192
            },
            // You can pass a prompt in the text field below; if omitted, the default prompt is used: Please output only the text content from the image without any additional descriptions or formatting.
            // The Chinese prompt below asks the model to extract 10 fields from a train ticket as JSON, using ? for illegible characters.
            {
                "type": "text",
                "text": "请提取车票图像中的发票号码、车次、起始站、终点站、发车日期和时间点、座位号、席别类型、票价、身份证号码、购票人姓名。要求准确无误的提取上述关键信息、不要遗漏和捏造虚假信息,模糊或者强光遮挡的单个文字可以用英文问号?代替。返回数据格式以json方式输出,格式为:{'发票号码':'xxx', '车次':'xxx', '起始站':'xxx', '终点站':'xxx', '发车日期和时间点':'xxx', '座位号':'xxx', '席别类型':'xxx','票价':'xxx', '身份证号码':'xxx', '购票人姓名':'xxx'}"
            }]
        }]
    });
    console.log(completion.choices[0].message.content);
}

main();
```

curl

For how to convert a file into a Base64-encoded string, see the sample code.

For readability, the Base64 string in the code, "data:image/jpeg;base64,/9j/4AAQSkZJRgABAQAAAQABAAD/2wBDAA...", is truncated. In actual use, be sure to pass the complete encoded string. The `/9j/` prefix is how a JPEG file begins once Base64-encoded, so the data URL declares `image/jpeg`.

```shell
# ======= Important =======
# API Keys differ by region. Get an API Key: https://help.aliyun.com/zh/model-studio/get-api-key
# The URL below is for the Beijing region. For models in the Virginia region, replace it with https://dashscope-us.aliyuncs.com/compatible-mode/v1/chat/completions
# For models in the Singapore region, replace it with https://dashscope-intl.aliyuncs.com/compatible-mode/v1/chat/completions
# Inside the single-quoted --data payload, quotes in the prompt are written as \" so that the shell does not end the string early.
# === Delete these comments before running ===
curl --location 'https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions' \
--header "Authorization: Bearer $DASHSCOPE_API_KEY" \
--header 'Content-Type: application/json' \
--data '{
  "model": "qwen-vl-ocr-latest",
  "messages": [
    {
      "role": "user",
      "content": [
        {"type": "image_url", "image_url": {"url": "data:image/jpeg;base64,/9j/4AAQSkZJRgABAQAAAQABAAD/2wBDAA..."}},
        {"type": "text", "text": "请提取车票图像中的发票号码、车次、起始站、终点站、发车日期和时间点、座位号、席别类型、票价、身份证号码、购票人姓名。要求准确无误的提取上述关键信息、不要遗漏和捏造虚假信息,模糊或者强光遮挡的单个文字可以用英文问号?代替。返回数据格式以json方式输出,格式为:{\"发票号码\":\"xxx\", \"车次\":\"xxx\", \"起始站\":\"xxx\", \"终点站\":\"xxx\", \"发车日期和时间点\":\"xxx\", \"座位号\":\"xxx\", \"席别类型\":\"xxx\",\"票价\":\"xxx\", \"身份证号码\":\"xxx\", \"购票人姓名\":\"xxx\"}"}
      ]
    }
  ]
}'
```

DashScope

Python

```python
import os
import base64
import dashscope
from dashscope import MultiModalConversation

# The following base_url is for the Beijing region. For models in the Virginia region, replace it with https://dashscope-us.aliyuncs.com/api/v1
# For models in the Singapore region, replace it with https://dashscope-intl.aliyuncs.com/api/v1
dashscope.base_http_api_url = "https://dashscope.aliyuncs.com/api/v1"


# Read a local file and encode it as Base64
def encode_image(image_path):
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode("utf-8")


# Replace xxx/test.jpg with the absolute path of your local image
base64_image = encode_image("xxx/test.jpg")

messages = [
    {
        "role": "user",
        "content": [
            {
                # Note: when passing Base64, the image format (image/{format}) must match a Content Type in the supported image formats list. The f prefix marks an f-string.
                # PNG image:  f"data:image/png;base64,{base64_image}"
                # JPEG image: f"data:image/jpeg;base64,{base64_image}"
                # WEBP image: f"data:image/webp;base64,{base64_image}"
                "image": f"data:image/jpeg;base64,{base64_image}",
                # Minimum pixel threshold: smaller images are upscaled until the total pixel count exceeds min_pixels
                "min_pixels": 32 * 32 * 3,
                # Maximum pixel threshold: larger images are downscaled until the total pixel count falls below max_pixels
                "max_pixels": 32 * 32 * 8192,
                # Whether to enable automatic image rotation correction
                "enable_rotate": False,
            },
            # When no built-in task is set, you can pass a prompt in the text field; if omitted, the default prompt is used: Please output only the text content from the image without any additional descriptions or formatting.
            # The Chinese prompt below asks the model to extract 10 fields from a train ticket as JSON, using ? for illegible characters.
            {
                "text": "请提取车票图像中的发票号码、车次、起始站、终点站、发车日期和时间点、座位号、席别类型、票价、身份证号码、购票人姓名。要求准确无误的提取上述关键信息、不要遗漏和捏造虚假信息,模糊或者强光遮挡的单个文字可以用英文问号?代替。返回数据格式以json方式输出,格式为:{'发票号码':'xxx', '车次':'xxx', '起始站':'xxx', '终点站':'xxx', '发车日期和时间点':'xxx', '座位号':'xxx', '席别类型':'xxx','票价':'xxx', '身份证号码':'xxx', '购票人姓名':'xxx'}"
            },
        ],
    }
]

response = MultiModalConversation.call(
    # If the environment variable is not set, replace the next line with your Model Studio API Key: api_key="sk-xxx"
    # API Keys differ by region. Get an API Key: https://help.aliyun.com/zh/model-studio/get-api-key
    api_key=os.getenv("DASHSCOPE_API_KEY"),
    model="qwen-vl-ocr-latest",
    messages=messages,
)

print(response["output"]["choices"][0]["message"].content[0]["text"])
```

Java

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.Base64;
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;
import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;
import com.alibaba.dashscope.common.MultiModalMessage;
import com.alibaba.dashscope.common.Role;
import com.alibaba.dashscope.exception.ApiException;
import com.alibaba.dashscope.exception.NoApiKeyException;
import com.alibaba.dashscope.exception.UploadFileException;
import com.alibaba.dashscope.utils.Constants;

public class Main {
    // The following base_url is for the Beijing region. For models in the Virginia region, replace it with https://dashscope-us.aliyuncs.com/api/v1
    // For models in the Singapore region, replace it with https://dashscope-intl.aliyuncs.com/api/v1
    static {Constants.baseHttpApiUrl = "https://dashscope.aliyuncs.com/api/v1";}

    // Read a local file and encode it as Base64
    private static String encodeImageToBase64(String imagePath) throws IOException {
        Path path = Paths.get(imagePath);
        byte[] imageBytes = Files.readAllBytes(path);
        return Base64.getEncoder().encodeToString(imageBytes);
    }

    public static void simpleMultiModalConversationCall(String localPath)
            throws ApiException, NoApiKeyException, UploadFileException, IOException {
        String base64Image = encodeImageToBase64(localPath); // Base64 encoding
        MultiModalConversation conv = new MultiModalConversation();
        Map<String, Object> map = new HashMap<>();
        // The MIME type in the data URL must match the image format; this example assumes a JPEG file
        map.put("image", "data:image/jpeg;base64," + base64Image);
        // Maximum pixel threshold: larger images are downscaled until the total pixel count falls below max_pixels
        map.put("max_pixels", 8388608);
        // Minimum pixel threshold: smaller images are upscaled until the total pixel count exceeds min_pixels
        map.put("min_pixels", 3072);
        // Whether to enable automatic image rotation correction
        map.put("enable_rotate", false);
        MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())
                .content(Arrays.asList(
                        map,
                        // When no built-in task is set, you can pass a prompt in the text field; if omitted, the default prompt is used: Please output only the text content from the image without any additional descriptions or formatting.
                        // The Chinese prompt below asks the model to extract 10 fields from a train ticket as JSON, using ? for illegible characters.
                        Collections.singletonMap("text", "请提取车票图像中的发票号码、车次、起始站、终点站、发车日期和时间点、座位号、席别类型、票价、身份证号码、购票人姓名。要求准确无误的提取上述关键信息、不要遗漏和捏造虚假信息,模糊或者强光遮挡的单个文字可以用英文问号?代替。返回数据格式以json方式输出,格式为:{'发票号码':'xxx', '车次':'xxx', '起始站':'xxx', '终点站':'xxx', '发车日期和时间点':'xxx', '座位号':'xxx', '席别类型':'xxx','票价':'xxx', '身份证号码':'xxx', '购票人姓名':'xxx'}"))).build();
        MultiModalConversationParam param = MultiModalConversationParam.builder()
                // If the environment variable is not set, replace the next line with your Model Studio API Key: .apiKey("sk-xxx")
                .apiKey(System.getenv("DASHSCOPE_API_KEY"))
                .model("qwen-vl-ocr-latest")
                .message(userMessage)
                .build();
        MultiModalConversationResult result = conv.call(param);
        System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));
    }

    public static void main(String[] args) {
        try {
            // Replace xxx/test.jpg with the absolute path of your local image
            simpleMultiModalConversationCall("xxx/test.jpg");
        } catch (ApiException | NoApiKeyException | UploadFileException | IOException e) {
            System.out.println(e.getMessage());
        }
        System.exit(0);
    }
}
```

curl

For how to convert a file into a Base64-encoded string, see the sample code.

For readability, the Base64 string in the code, "data:image/jpeg;base64,/9j/4AAQSkZJRgABAQAAAQABAAD/2wBDAA...", is truncated. In actual use, be sure to pass the complete encoded string. The `/9j/` prefix is how a JPEG file begins once Base64-encoded, so the data URL declares `image/jpeg`.

```shell
# ======= Important =======
# API Keys differ by region. Get an API Key: https://help.aliyun.com/zh/model-studio/get-api-key
# The URL below is for the Beijing region. For models in the Virginia region, replace it with: https://dashscope-us.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# For models in the Singapore region, replace it with: https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation
# Inside the single-quoted -d payload, quotes in the prompt are written as \" so that the shell does not end the string early.
# === Delete these comments before running ===
curl -X POST https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation \
-H "Authorization: Bearer $DASHSCOPE_API_KEY" \
-H 'Content-Type: application/json' \
-d '{
  "model": "qwen-vl-ocr-latest",
  "input": {
    "messages": [
      {
        "role": "user",
        "content": [
          {"image": "data:image/jpeg;base64,/9j/4AAQSkZJRgABAQAAAQABAAD/2wBDAA..."},
          {"text": "请提取车票图像中的发票号码、车次、起始站、终点站、发车日期和时间点、座位号、席别类型、票价、身份证号码、购票人姓名。要求准确无误的提取上述关键信息、不要遗漏和捏造虚假信息,模糊或者强光遮挡的单个文字可以用英文问号?代替。返回数据格式以json方式输出,格式为:{\"发票号码\":\"xxx\", \"车次\":\"xxx\", \"起始站\":\"xxx\", \"终点站\":\"xxx\", \"发车日期和时间点\":\"xxx\", \"座位号\":\"xxx\", \"席别类型\":\"xxx\",\"票价\":\"xxx\", \"身份证号码\":\"xxx\", \"购票人姓名\":\"xxx\"}"}
        ]
      }
    ]
  }
}'
```

More usage

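Limitations

Image limits

Dimensions and aspect ratio: the width and height of the image must both be greater than 10 pixels, and the aspect ratio must not exceed 200:1 or 1:200.

Total pixels: there is no strict limit on the total number of pixels, because the model scales images automatically; we recommend keeping images under 15.68 million pixels.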
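Both rules above can be checked locally before a request is sent. A minimal sketch, assuming you already know the image's width and height (for example from an image library); `check_image_dimensions` is a hypothetical helper, and the 15.68 million figure is treated as a soft recommendation rather than a hard limit, as the text describes:

```python
from typing import List

MIN_SIDE = 10                        # width and height must each be greater than 10 px
MAX_ASPECT_RATIO = 200               # at most 200:1 or 1:200
RECOMMENDED_MAX_PIXELS = 15_680_000  # recommendation only; the model rescales larger images


def check_image_dimensions(width: int, height: int) -> List[str]:
    """Return the problems found; entries starting with "error" break a hard limit."""
    problems = []
    if width <= MIN_SIDE or height <= MIN_SIDE:
        problems.append(f"error: {width}x{height}, both sides must exceed {MIN_SIDE} px")
    elif max(width, height) / min(width, height) > MAX_ASPECT_RATIO:
        problems.append(f"error: {width}x{height} exceeds the {MAX_ASPECT_RATIO}:1 aspect ratio limit")
    if width * height > RECOMMENDED_MAX_PIXELS:
        problems.append(f"warning: {width * height} pixels is above the recommended {RECOMMENDED_MAX_PIXELS}")
    return problems


print(check_image_dimensions(1500, 1734))  # []
```

A 6000x3000 image, for instance, passes the hard limits but yields a single "warning" entry, since 18 million pixels is above the recommendation.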

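Supported image formats

For images below 4K (3840x2160) resolution, the following formats are supported:

| Image format | Common extensions | MIME type |
| --- | --- | --- |
| BMP | .bmp | image/bmp |
| JPEG | .jpe, .jpeg, .jpg | image/jpeg |
| PNG | .png | image/png |
| TIFF | .tif, .tiff | image/tiff |
| WEBP | .webp | image/webp |
| HEIC | .heic | image/heic |

For images with a resolution from 4K (3840x2160) to 8K (7680x4320), only JPEG (.jpg, .jpeg) and PNG are supported.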
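When an image is sent as Base64, the `image/{format}` part of the data URL must match the MIME type in the table above. A minimal sketch that derives the MIME type from the file extension and applies the 4K to 8K restriction; `mime_for` and `to_data_url` are hypothetical helpers, and comparing the total pixel count against 3840x2160 is an assumption about how "above 4K" is measured:

```python
import base64
import os

# Extension to MIME type, per the supported-formats table
MIME_BY_EXT = {
    ".bmp": "image/bmp",
    ".jpe": "image/jpeg", ".jpeg": "image/jpeg", ".jpg": "image/jpeg",
    ".png": "image/png",
    ".tif": "image/tiff", ".tiff": "image/tiff",
    ".webp": "image/webp",
    ".heic": "image/heic",
}
FOUR_K_PIXELS = 3840 * 2160
HIGH_RES_MIME = {"image/jpeg", "image/png"}  # the only formats accepted between 4K and 8K


def mime_for(path: str, width: int, height: int) -> str:
    ext = os.path.splitext(path)[1].lower()
    if ext not in MIME_BY_EXT:
        raise ValueError(f"unsupported image extension: {ext or path}")
    mime = MIME_BY_EXT[ext]
    if width * height > FOUR_K_PIXELS and mime not in HIGH_RES_MIME:
        raise ValueError(f"{mime} is not accepted above 4K; convert to JPEG or PNG")
    return mime


def to_data_url(data: bytes, mime: str) -> str:
    # Same shape as f"data:image/png;base64,{base64_image}" in the examples
    return f"data:{mime};base64,{base64.b64encode(data).decode('ascii')}"
```

Deriving the MIME type from the same table the service documents avoids the common mistake of hard-coding `image/png` for a JPEG file.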

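Image size:

- When passed as a public URL or local path: a single image must not exceed 10 MB.
- When passed as Base64: the encoded string must not exceed 10 MB.

To reduce file size, see How do I compress an image or video to meet the size requirements?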
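Base64 encoding turns every 3 bytes into 4 characters, so a file that fits the URL or path limit can still exceed the limit once encoded. A minimal sketch of that arithmetic, assuming "10 MB" means 10 × 1024 × 1024 bytes and ignoring the few extra bytes of the `data:image/...;base64,` prefix; the helper names are hypothetical:

```python
LIMIT_BYTES = 10 * 1024 * 1024  # assumption: "10 MB" read as 10 MiB


def base64_length(raw_size: int) -> int:
    # Padded Base64 emits 4 characters for every started group of 3 bytes
    return 4 * ((raw_size + 2) // 3)


def max_raw_size_for_base64(limit: int = LIMIT_BYTES) -> int:
    # Largest file whose encoded form still fits: 3 raw bytes per 4 characters
    return (limit // 4) * 3


def within_size_limit(raw_size: int, as_base64: bool) -> bool:
    size = base64_length(raw_size) if as_base64 else raw_size
    return size <= LIMIT_BYTES


print(max_raw_size_for_base64())  # 7864320
```

In other words, under this reading a file larger than about 7.5 MiB should be compressed, or sent by URL or file path instead, before Base64 encoding.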

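Model limits

System Message: Qwen-OCR does not support a custom System Message. The model uses a fixed internal System Message, so all instructions must be passed in the User Message.

No multi-turn conversation: multi-turn conversation is not currently supported; the model answers only the user's latest question.

Hallucination risk: when the text in an image is too small or the resolution is low, the model may hallucinate. Answers to questions unrelated to text extraction are also not guaranteed to be accurate.

Cannot process text files: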

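Billing and rate limiting

Billing: Qwen-OCR is a multimodal model. Total cost = input tokens × input unit price + output tokens × output unit price. For how image tokens are counted, see Image token conversion. You can view bills or top up your account on the Expenses and Costs page of the Alibaba Cloud console.

Rate limits: for the rate limit conditions of Qwen-OCR, see Rate limits.

Free quota (Beijing region only): Qwen-OCR provides a free quota of 1 million tokens, valid for 90 days from the date you activate Model Studio or your model application is approved.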
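The billing formula above can be written out directly. A minimal sketch using `Decimal` to avoid floating-point rounding in money amounts; prices are per million tokens, the example figures are the 0.3 and 0.5 yuan listed for qwen-vl-ocr in the China mainland pricing table, and the free quota and Batch discount are deliberately not modeled. `ocr_cost` is a hypothetical helper:

```python
from decimal import Decimal

TOKENS_PER_UNIT = Decimal(1_000_000)  # unit prices are quoted per million tokens


def ocr_cost(input_tokens: int, output_tokens: int,
             input_price: str, output_price: str) -> Decimal:
    """Total = input tokens x input price + output tokens x output price (prices per million tokens)."""
    return (input_tokens * Decimal(input_price)
            + output_tokens * Decimal(output_price)) / TOKENS_PER_UNIT


# 1,000,000 input tokens and 500,000 output tokens at 0.3 / 0.5 yuan per million
print(ocr_cost(1_000_000, 500_000, "0.3", "0.5"))  # 0.55
```

Prices are passed as strings so that `Decimal` stores them exactly; image tokens count toward `input_tokens`.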

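Using in production

Processing multi-page documents (such as PDFs):

- Split: use a library such as pdf2image for Python to convert the PDF into one high-quality image per page.
- Submit: send the page images for recognition using multi-image input.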
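The two steps above can be sketched as follows. `pdf_to_page_images` uses `convert_from_path` from the third-party pdf2image package (which requires the Poppler utilities) and saves pages as PNG to avoid lossy compression; `build_multi_image_message` assumes that multi-image input means several `image` entries in one DashScope user message, which you should confirm against the multi-image input documentation. Both helper names are hypothetical:

```python
import os
from typing import Dict, List, Optional


def pdf_to_page_images(pdf_path: str, out_dir: str, dpi: int = 200) -> List[str]:
    """Render each PDF page to a PNG file and return the file paths in page order."""
    from pdf2image import convert_from_path  # third-party; needs Poppler installed

    os.makedirs(out_dir, exist_ok=True)
    paths = []
    for number, page in enumerate(convert_from_path(pdf_path, dpi=dpi), start=1):
        path = os.path.join(out_dir, f"page_{number:03d}.png")
        page.save(path, "PNG")  # lossless, to avoid compression artifacts
        paths.append(path)
    return paths


def build_multi_image_message(image_refs: List[str], prompt: Optional[str] = None) -> List[Dict]:
    """Assumed message shape: one user turn with an image entry per page, then an optional prompt."""
    content: List[Dict] = [{"image": ref} for ref in image_refs]
    if prompt:
        content.append({"text": prompt})
    return [{"role": "user", "content": content}]


# Usage (requires a real PDF and Poppler):
# pages = pdf_to_page_images("contract.pdf", "pages")
# messages = build_multi_image_message([f"file://{os.path.abspath(p)}" for p in pages])
```

Every page adds image tokens, so a long document may need to be split across several requests to stay within the model's context length.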

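Image preprocessing:

Make sure input images are sharp and evenly lit, and avoid over-compression:

- Avoid information loss: prefer lossless formats (such as PNG) for storing and transmitting images.
- Improve sharpness: for noisy images, apply a denoising algorithm (such as mean or median filtering) to smooth the noise.
- Correct lighting: for unevenly lit images, adjust brightness and contrast with an algorithm such as adaptive histogram equalization.

For skewed images: setting the DashScope SDK parameter enable_rotate: true can significantly improve recognition.

For very small or very large images: use the min_pixels and max_pixels parameters to control how an image is scaled before processing.

- min_pixels: ensures that small images are upscaled so details can be recognized; keeping the default value is usually sufficient.
- max_pixels: prevents very large images from consuming excessive resources. The default works for most scenarios. If some small text is not recognized clearly, try raising max_pixels, but note that this increases token consumption.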
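To see roughly how min_pixels and max_pixels interact, here is a sketch modeled on the open-source `smart_resize` function in the `qwen-vl-utils` package. The service's exact resizing and token accounting are not documented here, so the 32-pixel rounding factor and the estimate of one token per 32×32 block are assumptions suggested by the `32 * 32 * N` defaults in the examples:

```python
import math
from typing import Tuple


def scaled_size(width: int, height: int,
                min_pixels: int = 32 * 32 * 3,
                max_pixels: int = 32 * 32 * 8192,
                factor: int = 32) -> Tuple[int, int]:
    """Approximate the pre-processing resize; both sides end up as multiples of factor."""
    # Snap each side to the nearest multiple of the patch size
    w = max(factor, round(width / factor) * factor)
    h = max(factor, round(height / factor) * factor)
    if w * h > max_pixels:
        # Too large: shrink both sides by the same ratio, rounding down to stay under max_pixels
        beta = math.sqrt(width * height / max_pixels)
        w = max(factor, math.floor(width / beta / factor) * factor)
        h = max(factor, math.floor(height / beta / factor) * factor)
    elif w * h < min_pixels:
        # Too small: enlarge both sides by the same ratio, rounding up to reach min_pixels
        beta = math.sqrt(min_pixels / (width * height))
        w = math.ceil(width * beta / factor) * factor
        h = math.ceil(height * beta / factor) * factor
    return w, h


def estimated_image_tokens(width: int, height: int, factor: int = 32, **limits: int) -> int:
    # Assumption: roughly one token per factor x factor block after resizing
    w, h = scaled_size(width, height, factor=factor, **limits)
    return (w // factor) * (h // factor)


print(scaled_size(6000, 3000))             # (4096, 2048)
print(estimated_image_tokens(6000, 3000))  # 8192
print(scaled_size(20, 20))                 # (64, 64)
```

The 6000x3000 case shows why raising max_pixels costs tokens: with the default cap the image is shrunk to exactly 32 × 32 × 8192 pixels, and a higher cap keeps more detail at a proportionally higher token count.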

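Result verification: recognition results may contain errors. For critical business processes, add a manual review step or validation rules (such as format checks for ID card and bank card numbers) to verify the accuracy of the model output.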
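As an example of such rules, the sketch below checks the check character of an 18-digit mainland China resident ID number (the weighted mod-11 scheme of GB 11643-1999) and applies the Luhn checksum used by most bank card numbers. A number that fails is certainly misread; one that passes is only well-formed (the birth-date segment, for instance, is not checked). The helper names are hypothetical:

```python
import re

_ID_WEIGHTS = (7, 9, 10, 5, 8, 4, 2, 1, 6, 3, 7, 9, 10, 5, 8, 4, 2)
_ID_CHECK_CHARS = "10X98765432"  # indexed by (weighted sum mod 11)


def is_valid_cn_id(number: str) -> bool:
    """Verify the 18th (check) character of a resident ID number; the birth date is not validated."""
    number = number.strip().upper()
    if not re.fullmatch(r"[0-9]{17}[0-9X]", number):
        return False  # also rejects "?" placeholders for illegible characters
    total = sum(int(digit) * weight for digit, weight in zip(number[:17], _ID_WEIGHTS))
    return _ID_CHECK_CHARS[total % 11] == number[17]


def passes_luhn(card_number: str) -> bool:
    """Luhn checksum over a 12 to 19 digit card number; spaces and hyphens are ignored."""
    digits = re.sub(r"[\s-]", "", card_number)
    if not re.fullmatch(r"[0-9]{12,19}", digits):
        return False
    total = 0
    for position, char in enumerate(reversed(digits)):
        value = int(char)
        if position % 2 == 1:  # double every second digit from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0
```

Routing fields that fail these checks to manual review keeps the human effort focused on the results most likely to be wrong.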

Batch calls: for large-scale, non-real-time workloads, use the Batch API to process tasks asynchronously at a lower cost.
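A minimal sketch of preparing a batch input file, assuming the OpenAI-style Batch JSONL layout (one request per line with `custom_id`, `method`, `url`, and `body`) and the `/v1/chat/completions` endpoint; confirm the exact fields and supported models in the Batch API documentation. The helper names are hypothetical, and the default model `qwen-vl-ocr` is one of the models listed with Batch pricing:

```python
import json
from typing import Iterable, Tuple


def batch_request_line(custom_id: str, image_url: str, prompt: str,
                       model: str = "qwen-vl-ocr") -> str:
    """One request in the assumed OpenAI-style Batch format, serialized as a single JSON line."""
    body = {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": image_url}},
                {"type": "text", "text": prompt},
            ],
        }],
    }
    request = {"custom_id": custom_id, "method": "POST",
               "url": "/v1/chat/completions", "body": body}
    # ensure_ascii=False keeps Chinese prompts readable; json.dumps escapes embedded newlines
    return json.dumps(request, ensure_ascii=False)


def write_batch_file(path: str, items: Iterable[Tuple[str, str, str]]) -> int:
    """Write (custom_id, image_url, prompt) tuples as JSONL; returns the number of lines written."""
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for custom_id, image_url, prompt in items:
            f.write(batch_request_line(custom_id, image_url, prompt) + "\n")
            count += 1
    return count
```

A unique `custom_id` per line is what lets you match each result in the output file back to its source image.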

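FAQ

How do I choose a file upload method?

After the model outputs text localization results, how do I draw the bounding boxes on the original image?

API reference

For the input and output parameters of Qwen-OCR, see the Qwen-OCR API reference.

Error codes

If a model call fails and returns an error message, see Error messages for troubleshooting.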