Visual understanding (Qwen-VL)

The Qwen-VL models answer questions based on the images you pass in.

Use cases

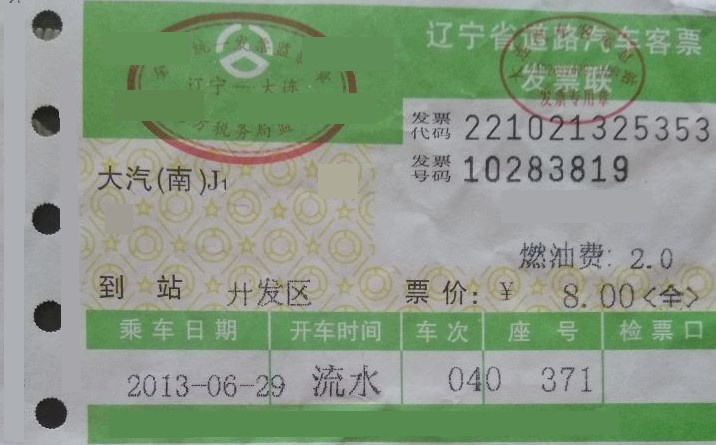

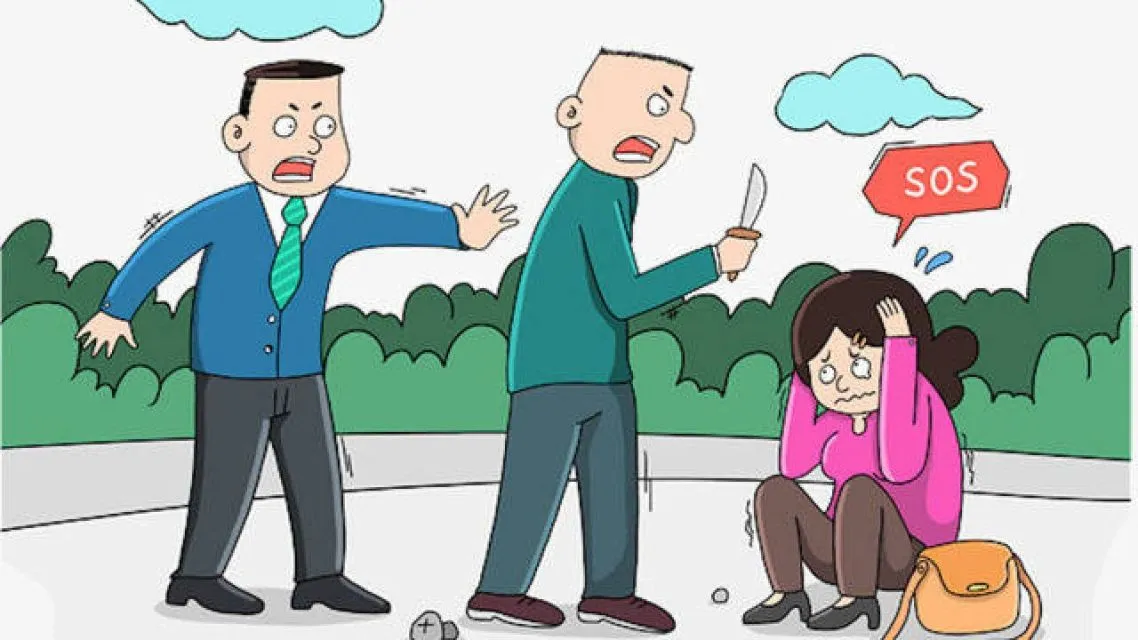

图像问答:描述图像中的内容或者对其进行分类打标,如识别人物、地点、花鸟鱼虫等。

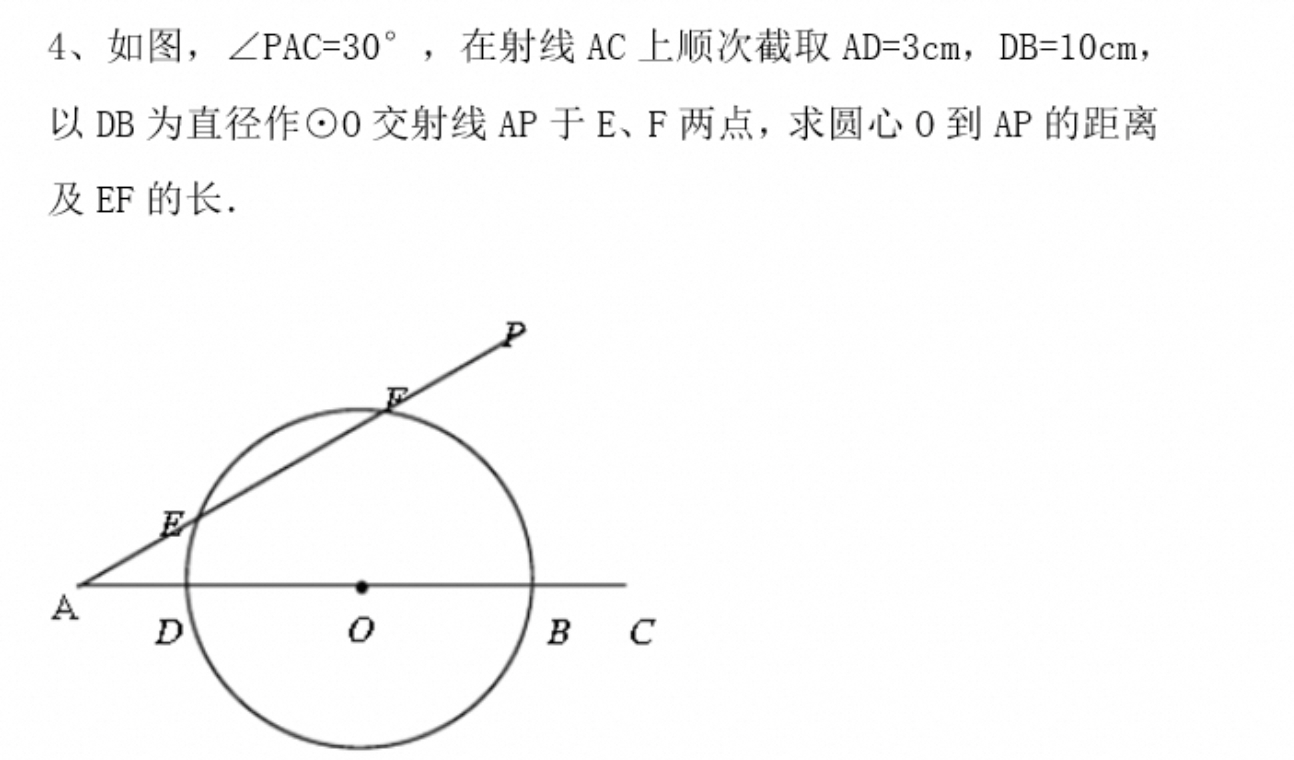

数学题目解答:解答图像中的数学问题,适用于中小学、大学以及成人教育阶段。

视频理解:分析视频内容,如对具体事件进行定位并获取时间戳,或生成关键时间段的摘要。

物体定位:定位图像中的物体,返回外边界矩形框的左上角、右下角坐标或者中心点坐标。

文档解析:将图像类的文档(如扫描件/图片PDF)解析为 QwenVL HTML格式,该格式不仅能精准识别文本,还能获取图像、表格等元素的位置信息。

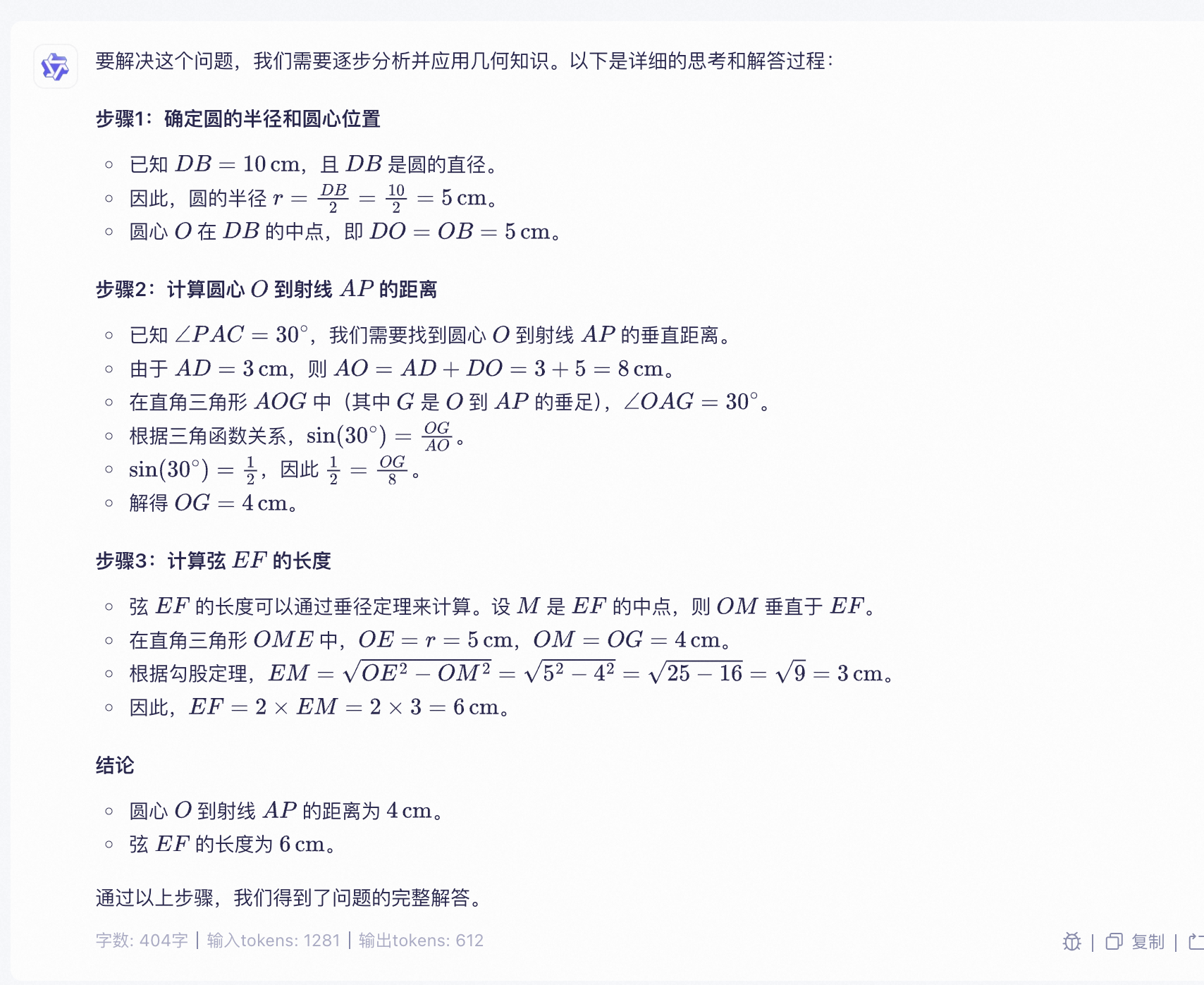

文字识别与信息抽取:识别图像中的文字、公式,或者抽取票据、证件、表单中的信息,支持格式化输出文本;可识别的语言有中文、英语、日语、韩语、阿拉伯语、越南语、法语、德语、意大利语、西班牙语和俄语。

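Models and billing

Alibaba Cloud Model Studio (Bailian) offers both commercial and open-source models. Unlike the open-source models, the commercial models are continuously updated and upgraded, so they carry the latest capabilities.

The qwen-vl-max and qwen-vl-plus models support context cache and structured output.

Commercial models

Qwen-VL-Max series

The most capable models in the Qwen-VL family.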

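| Model | Version | Context length (tokens) | Max input (tokens) | Max output (tokens) | Input cost (per 1,000 tokens) | Output cost (per 1,000 tokens) | Free quota |
|---|---|---|---|---|---|---|---|
| qwen-vl-max. Improves further on qwen-vl-plus in visual reasoning and instruction following, and gives the best performance on more complex tasks. Currently equivalent to qwen-vl-max-2025-04-08. | Stable | 131,072 | 129,024 (up to 16,384 per image) | 8,192 | CNY 0.003 (half price for Batch calls) | CNY 0.009 (half price for Batch calls) | 1 million tokens each; valid for 180 days after activating Model Studio |
| qwen-vl-max-latest. Always equivalent to the latest snapshot. | Latest | 131,072 | 129,024 (up to 16,384 per image) | 8,192 | CNY 0.0016 (half price for Batch calls) | CNY 0.004 (half price for Batch calls) | 1 million tokens each; valid for 180 days after activating Model Studio |
| qwen-vl-max-2025-08-13 (also called qwen-vl-max-0813). Visual understanding metrics improved across the board, with markedly stronger math, reasoning, object recognition, and multilingual processing. | Snapshot | 131,072 | 129,024 (up to 16,384 per image) | 8,192 | CNY 0.0016 | CNY 0.004 | 1 million tokens each; valid for 180 days after activating Model Studio |
| qwen-vl-max-2025-04-08 (also called qwen-vl-max-0408). Stronger math and reasoning. | Snapshot | 131,072 | 129,024 (up to 16,384 per image) | 8,192 | CNY 0.003 | CNY 0.009 | 1 million tokens each; valid for 180 days after activating Model Studio |
| qwen-vl-max-2025-04-02 (also called qwen-vl-max-0402). Markedly more accurate on complex math problems. | Snapshot | 131,072 | 129,024 (up to 16,384 per image) | 8,192 | CNY 0.003 | CNY 0.009 | 1 million tokens each; valid for 180 days after activating Model Studio |
| qwen-vl-max-2025-01-25 (also called qwen-vl-max-0125). Upgraded to the Qwen2.5-VL series, with context extended to 128k and markedly stronger image and video understanding. | Snapshot | 131,072 | 129,024 (up to 16,384 per image) | 8,192 | CNY 0.003 | CNY 0.009 | 1 million tokens each; valid for 180 days after activating Model Studio |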
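To make the per-1,000-token pricing concrete, here is a minimal sketch that estimates the cost of one call. The helper name `estimate_cost_cny` and the flat 50% Batch multiplier are assumptions made for this illustration; the prices are the qwen-vl-max rates listed above, and the token counts (1270 input, 54 output) come from the sample curl response in the quick start. It ignores the free quota and any other billing rules.

```python
from decimal import Decimal

# Prices in CNY per 1,000 tokens, copied from the qwen-vl-max row above.
INPUT_PRICE = Decimal("0.003")
OUTPUT_PRICE = Decimal("0.009")


def estimate_cost_cny(input_tokens: int, output_tokens: int, batch: bool = False) -> Decimal:
    """Estimate the cost of one call, ignoring free quota (hypothetical helper)."""
    cost = (Decimal(input_tokens) * INPUT_PRICE
            + Decimal(output_tokens) * OUTPUT_PRICE) / Decimal(1000)
    # Assumption: "half price for Batch calls" is a flat 50% multiplier.
    return cost / 2 if batch else cost


if __name__ == "__main__":
    # Token counts taken from the sample response in the quick start.
    print(estimate_cost_cny(1270, 54))
    print(estimate_cost_cny(1270, 54, batch=True))
```

Decimal arithmetic is used here so that very small per-call amounts are not distorted by binary floating point.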

Qwen-VL-Plus series

The Qwen-VL-Plus models balance quality and cost.

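| Model | Version | Context length (tokens) | Max input (tokens) | Max output (tokens) | Input cost (per 1,000 tokens) | Output cost (per 1,000 tokens) | Free quota |
|---|---|---|---|---|---|---|---|
| qwen-vl-plus. Currently equivalent to qwen-vl-plus-2025-05-07. | Stable | 131,072 | 129,024 (up to 16,384 per image) | 8,192 | CNY 0.0015 (half price for Batch calls) | CNY 0.0045 (half price for Batch calls) | 1 million tokens each; valid for 180 days after activating Model Studio |
| qwen-vl-plus-latest. Always equivalent to the latest snapshot. | Latest | 131,072 | 129,024 (up to 16,384 per image) | 8,192 | CNY 0.0008 (half price for Batch calls) | CNY 0.002 (half price for Batch calls) | 1 million tokens each; valid for 180 days after activating Model Studio |
| qwen-vl-plus-2025-08-15 (also called qwen-vl-plus-0815). Markedly better at object recognition and localization and at multilingual processing. | Snapshot | 131,072 | 129,024 (up to 16,384 per image) | 8,192 | CNY 0.0008 | CNY 0.002 | 1 million tokens each; valid for 180 days after activating Model Studio |
| qwen-vl-plus-2025-07-10 (also called qwen-vl-plus-0710). Further improves understanding of surveillance video content. | Snapshot | 32,768 | 30,720 (up to 16,384 per image) | 8,192 | CNY 0.00015 | CNY 0.0015 | 1 million tokens each; valid for 180 days after activating Model Studio |
| qwen-vl-plus-2025-05-07 (also called qwen-vl-plus-0507). Markedly better at math, reasoning, and understanding surveillance video content. | Snapshot | 131,072 | 129,024 (up to 16,384 per image) | 8,192 | CNY 0.0015 | CNY 0.0045 | 1 million tokens each; valid for 180 days after activating Model Studio |
| qwen-vl-plus-2025-01-25 (also called qwen-vl-plus-0125). Upgraded to the Qwen2.5-VL series, with context extended to 128k and markedly stronger image and video understanding. | Snapshot | 131,072 | 129,024 (up to 16,384 per image) | 8,192 | CNY 0.0015 | CNY 0.0045 | 1 million tokens each; valid for 180 days after activating Model Studio |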

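Open-source models

qvq-72b-preview is an experimental research model developed by the Qwen team that focuses on visual reasoning, especially mathematical reasoning. For its limitations, see the official QVQ blog. Usage | API reference

If you want the model to output its reasoning process before the answer, use the commercial QVQ model.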

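| Model | Context length (tokens) | Max input (tokens) | Max output (tokens) | Input cost (per 1,000 tokens) | Output cost (per 1,000 tokens) | Free quota |
|---|---|---|---|---|---|---|
| qvq-72b-preview | 32,768 | 16,384 (up to 16,384 per image) | 16,384 | CNY 0.012 | CNY 0.036 | 100,000 tokens; valid for 180 days after activating Model Studio |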

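| Model | Context length (tokens) | Max input (tokens) | Max output (tokens) | Input cost (per 1,000 tokens) | Output cost (per 1,000 tokens) | Free quota |
|---|---|---|---|---|---|---|
| qwen2.5-vl-72b-instruct | 131,072 | 129,024 (up to 16,384 per image) | 8,192 | CNY 0.016 | CNY 0.048 | 1 million tokens each; valid for 180 days after activating Model Studio |
| qwen2.5-vl-32b-instruct | 131,072 | 129,024 (up to 16,384 per image) | 8,192 | CNY 0.008 | CNY 0.024 | 1 million tokens each; valid for 180 days after activating Model Studio |
| qwen2.5-vl-7b-instruct | 131,072 | 129,024 (up to 16,384 per image) | 8,192 | CNY 0.002 | CNY 0.005 | 1 million tokens each; valid for 180 days after activating Model Studio |
| qwen2.5-vl-3b-instruct | 131,072 | 129,024 (up to 16,384 per image) | 8,192 | CNY 0.0012 | CNY 0.0036 | 1 million tokens each; valid for 180 days after activating Model Studio |
| qwen2-vl-72b-instruct | 32,768 | 30,720 (up to 16,384 per image) | 2,048 | CNY 0.016 | CNY 0.048 | 1 million tokens each; valid for 180 days after activating Model Studio |
| qwen2-vl-7b-instruct | 32,000 | 30,000 (up to 16,384 per image) | 2,000 | Free trial only | Free trial only | 100,000 tokens each; valid for 180 days after activating Model Studio |
| qwen2-vl-2b-instruct | 32,000 | 30,000 (up to 16,384 per image) | 2,000 | Free for a limited time | Free for a limited time | 100,000 tokens each; valid for 180 days after activating Model Studio |
| qwen-vl-v1 | 8,000 | 6,000 (up to 1,280 per image) | 1,500 | Free trial only | Free trial only | 100,000 tokens each; valid for 180 days after activating Model Studio |
| qwen-vl-chat-v1 | 8,000 | 6,000 (up to 1,280 per image) | 1,500 | Free trial only | Free trial only | 100,000 tokens each; valid for 180 days after activating Model Studio |

Models marked "Free trial only" cannot be called once the free quota is used up; switching to qwen-vl-max or qwen-vl-plus is recommended.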

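Model selection recommendations

How to use

Prerequisites

Quick start

The following sample code shows how to understand an online image (specified by URL, not a local file). See also how to pass in local files and the image limits.

OpenAI compatible

Python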

import os

from openai import OpenAI

client = OpenAI(

api_key=os.getenv("DASHSCOPE_API_KEY"),

base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",

)

completion = client.chat.completions.create(

model="qwen-vl-max-latest", # qwen-vl-max-latest is used as an example; replace it with another model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages=[

{

"role": "system",

"content": [{"type": "text", "text": "You are a helpful assistant."}],

},

{

"role": "user",

"content": [

{

"type": "image_url",

"image_url": {

"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"

},

},

{"type": "text", "text": "图中描绘的是什么景象?"},

],

},

],

)

print(completion.choices[0].message.content)

Sample response

这是一张在海滩上拍摄的照片。照片中,一个人和一只狗坐在沙滩上,背景是大海和天空。人和狗似乎在互动,狗的前爪搭在人的手上。阳光从画面的右侧照射过来,给整个场景增添了一种温暖的氛围。

Node.js

import OpenAI from "openai";

const openai = new OpenAI({

// If the environment variable is not set, replace the next line with your Model Studio API key: apiKey: "sk-xxx"

apiKey: process.env.DASHSCOPE_API_KEY,

baseURL: "https://dashscope.aliyuncs.com/compatible-mode/v1"

});

async function main() {

const response = await openai.chat.completions.create({

model: "qwen-vl-max-latest", // qwen-vl-max-latest is used as an example; replace it with another model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages: [{

role: "system",

content: [{

type: "text",

text: "You are a helpful assistant."

}]

},

{

role: "user",

content: [{

type: "image_url",

image_url: {

"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"

}

},

{

type: "text",

text: "图中描绘的是什么景象?"

}

]

}

]

});

console.log(response.choices[0].message.content);

}

main()

Sample response

这是一张在海滩上拍摄的照片。照片中,一位穿着格子衬衫的女性坐在沙滩上,与一只戴着项圈的黄色拉布拉多犬互动。背景是大海和天空,阳光洒在她们身上,营造出温暖的氛围。

curl

curl --location 'https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions' \

--header "Authorization: Bearer $DASHSCOPE_API_KEY" \

--header 'Content-Type: application/json' \

--data '{

"model": "qwen-vl-max",

"messages": [

{"role":"system",

"content":[

{"type": "text", "text": "You are a helpful assistant."}]},

{

"role": "user",

"content": [

{"type": "image_url", "image_url": {"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"}},

{"type": "text", "text": "图中描绘的是什么景象?"}

]

}]

}'

Sample response

{

"choices": [

{

"message": {

"content": "这张图片展示了一位女士和一只狗在海滩上互动。女士坐在沙滩上,微笑着与狗握手。背景是大海和天空,阳光洒在她们身上,营造出温暖的氛围。狗戴着项圈,显得很温顺。",

"role": "assistant"

},

"finish_reason": "stop",

"index": 0,

"logprobs": null

}

],

"object": "chat.completion",

"usage": {

"prompt_tokens": 1270,

"completion_tokens": 54,

"total_tokens": 1324

},

"created": 1725948561,

"system_fingerprint": null,

"model": "qwen-vl-max",

"id": "chatcmpl-0fd66f46-b09e-9164-a84f-3ebbbedbac15"

}

DashScope

Python

import os

import dashscope

messages = [

{

"role": "system",

"content": [

{"text": "You are a helpful assistant."}]

},

{

"role": "user",

"content": [

{"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"},

{"text": "图中描绘的是什么景象?"}]

}]

response = dashscope.MultiModalConversation.call(

# If the environment variable is not set, replace the next line with your Model Studio API key: api_key="sk-xxx"

api_key = os.getenv('DASHSCOPE_API_KEY'),

model = 'qwen-vl-max-latest', # qwen-vl-max-latest is used as an example; replace it with another model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages = messages

)

print(response.output.choices[0].message.content[0]["text"])

Sample response

是一张在海滩上拍摄的照片。照片中有一位女士和一只狗。女士坐在沙滩上,微笑着与狗互动。狗戴着项圈,似乎在与女士握手。背景是大海和天空,阳光洒在她们身上,营造出温馨的氛围。

Java

import java.util.Arrays;

import java.util.Collections;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

import com.alibaba.dashscope.utils.JsonUtils;

public class Main {

public static void simpleMultiModalConversationCall()

throws ApiException, NoApiKeyException, UploadFileException {

MultiModalConversation conv = new MultiModalConversation();

MultiModalMessage systemMessage = MultiModalMessage.builder().role(Role.SYSTEM.getValue())

.content(Arrays.asList(

Collections.singletonMap("text", "You are a helpful assistant."))).build();

MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())

.content(Arrays.asList(

Collections.singletonMap("image", "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"),

Collections.singletonMap("text", "图中描绘的是什么景象?"))).build();

MultiModalConversationParam param = MultiModalConversationParam.builder()

// If the environment variable is not set, replace the next line with your Model Studio API key: .apiKey("sk-xxx")

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model("qwen-vl-max-latest") // qwen-vl-max-latest is used as an example; replace it with another model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

.messages(Arrays.asList(systemMessage, userMessage))

.build();

MultiModalConversationResult result = conv.call(param);

System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));

}

public static void main(String[] args) {

try {

simpleMultiModalConversationCall();

} catch (ApiException | NoApiKeyException | UploadFileException e) {

System.out.println(e.getMessage());

}

System.exit(0);

}

}

Sample response

这是一张在海滩上拍摄的照片。照片中有一个穿着格子衬衫的人和一只戴着项圈的狗。人和狗面对面坐着,似乎在互动。背景是大海和天空,阳光洒在他们身上,营造出温暖的氛围。

curl

curl -X POST https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation \

-H "Authorization: Bearer $DASHSCOPE_API_KEY" \

-H 'Content-Type: application/json' \

-d '{

"model": "qwen-vl-max-latest",

"input":{

"messages":[

{"role": "system",

"content": [

{"text": "You are a helpful assistant."}]},

{

"role": "user",

"content": [

{"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"},

{"text": "图中描绘的是什么景象?"}

]

}

]

}

}'

Sample response

{

"output": {

"choices": [

{

"finish_reason": "stop",

"message": {

"role": "assistant",

"content": [

{

"text": "这是一张在海滩上拍摄的照片。照片中有一个穿着格子衬衫的人和一只戴着项圈的狗。他们坐在沙滩上,背景是大海和天空。阳光从画面的右侧照射过来,给整个场景增添了一种温暖的氛围。"

}

]

}

}

]

},

"usage": {

"output_tokens": 55,

"input_tokens": 1271,

"image_tokens": 1247

},

"request_id": "ccf845a3-dc33-9cda-b581-20fe7dc23f70"

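}

Multi-turn conversation (using the conversation history)

The Qwen-VL models can draw on the conversation history to carry on a multi-turn conversation. You maintain a messages array and append each turn of the conversation, together with the new instruction, to it.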
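The bookkeeping described above can be sketched without calling any API. The helper `append_turn` below is hypothetical (it is not part of the OpenAI or DashScope SDKs); it only shows how the messages array grows by one assistant reply and one new user instruction per turn, using the OpenAI-compatible content format from the samples on this page.

```python
from typing import Any, Dict, List

Message = Dict[str, Any]


def append_turn(messages: List[Message], assistant_text: str, next_user_text: str) -> List[Message]:
    """Return a new history with the assistant reply and the next user instruction appended."""
    return messages + [
        {"role": "assistant", "content": [{"type": "text", "text": assistant_text}]},
        {"role": "user", "content": [{"type": "text", "text": next_user_text}]},
    ]


if __name__ == "__main__":
    history: List[Message] = [
        {"role": "user", "content": [{"type": "text", "text": "What is in the picture?"}]},
    ]
    # In a real call, assistant_text would come from the model's previous response.
    history = append_turn(history, "A girl and a dog.", "Write a poem about this scene.")
    print([m["role"] for m in history])
```

Returning a new list rather than mutating the input keeps earlier turns reusable, for example when retrying a request with a different follow-up instruction.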

OpenAI compatible

Python

from openai import OpenAI

import os

client = OpenAI(

# If the environment variable is not set, replace the next line with your Model Studio API key: api_key="sk-xxx"

api_key=os.getenv("DASHSCOPE_API_KEY"),

base_url="https://dashscope.aliyuncs.com/compatible-mode/v1"

)

messages = [

{

"role": "system",

"content": [{"type": "text", "text": "You are a helpful assistant."}]},

{

"role": "user",

"content": [

{

"type": "image_url",

"image_url": {

"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"

},

},

{"type": "text", "text": "图中描绘的是什么景象?"},

],

}

]

completion = client.chat.completions.create(

model="qwen-vl-max-latest", # qwen-vl-max-latest is used as an example; replace it with another model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages=messages,

)

print(f"第一轮输出:{completion.choices[0].message.content}")

assistant_message = completion.choices[0].message

messages.append(assistant_message.model_dump())

messages.append({

"role": "user",

"content": [

{

"type": "text",

"text": "做一首诗描述这个场景"

}

]

})

completion = client.chat.completions.create(

model="qwen-vl-max-latest",

messages=messages,

)

print(f"第二轮输出:{completion.choices[0].message.content}")

Sample response

第一轮输出:这是一张在海滩上拍摄的照片。照片中,一位穿着格子衬衫的女士坐在沙滩上,与一只戴着项圈的金毛犬互动。背景是大海和天空,阳光洒在她们身上,营造出温暖的氛围。

第二轮输出:沙滩上,阳光洒,

女子与犬,笑语哗。

海浪轻拍,风儿吹,

快乐时光,心儿醉。

Node.js

import OpenAI from "openai";

const openai = new OpenAI(

{

// If the environment variable is not set, replace the next line with your Model Studio API key: apiKey: "sk-xxx",

apiKey: process.env.DASHSCOPE_API_KEY,

baseURL: "https://dashscope.aliyuncs.com/compatible-mode/v1"

}

);

let messages = [

{

role: "system",

content: [{"type": "text", "text": "You are a helpful assistant."}]},

{

role: "user",

content: [

{ type: "image_url", image_url: { "url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg" } },

{ type: "text", text: "图中描绘的是什么景象?" },

]

}]

async function main() {

let response = await openai.chat.completions.create({

model: "qwen-vl-max-latest", // qwen-vl-max-latest is used as an example; replace it with another model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages: messages

});

console.log(`第一轮输出:${response.choices[0].message.content}`);

messages.push(response.choices[0].message);

messages.push({"role": "user", "content": "做一首诗描述这个场景"});

response = await openai.chat.completions.create({

model: "qwen-vl-max-latest",

messages: messages

});

console.log(`第二轮输出:${response.choices[0].message.content}`);

}

main()

Sample response

第一轮输出:这是一张在海滩上拍摄的照片。照片中有一个穿着格子衬衫的人和一只戴着项圈的狗。人和狗面对面坐着,似乎在互动。背景是大海和天空,阳光从画面的右侧照射过来,营造出温暖的氛围。

第二轮输出:沙滩上,人与狗,

面对面,笑语稠。

海风轻拂,阳光柔,

心随波浪,共潮头。

项圈闪亮,情意浓,

格子衫下,心相通。

海天一色,无尽空,

此刻温馨,永铭中。

curl

curl -X POST https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions \

-H "Authorization: Bearer $DASHSCOPE_API_KEY" \

-H 'Content-Type: application/json' \

-d '{

"model": "qwen-vl-max",

"messages": [

{

"role": "system",

"content": [{"type": "text", "text": "You are a helpful assistant."}]},

{

"role": "user",

"content": [

{

"type": "image_url",

"image_url": {

"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"

}

},

{

"type": "text",

"text": "图中描绘的是什么景象?"

}

]

},

{

"role": "assistant",

"content": [

{

"type": "text",

"text": "这是一个女孩和一只狗。"

}

]

},

{

"role": "user",

"content": [

{

"type": "text",

"text": "写一首诗描述这个场景"

}

]

}

]

}'

Sample response

{

"choices": [

{

"message": {

"content": "海风轻拂笑颜开, \n沙滩上与犬相陪。 \n夕阳斜照人影短, \n欢乐时光心自醉。",

"role": "assistant"

},

"finish_reason": "stop",

"index": 0,

"logprobs": null

}

],

"object": "chat.completion",

"usage": {

"prompt_tokens": 1295,

"completion_tokens": 32,

"total_tokens": 1327

},

"created": 1726324976,

"system_fingerprint": null,

"model": "qwen-vl-max",

"id": "chatcmpl-3c953977-6107-96c5-9a13-c01e328b24ca"

}

DashScope

Python

import os

from dashscope import MultiModalConversation

messages = [

{

"role": "system",

"content": [{"text": "You are a helpful assistant."}]},

{

"role": "user",

"content": [

{

"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"

},

{"text": "图中描绘的是什么景象?"},

],

}

]

response = MultiModalConversation.call(

# If the environment variable is not set, replace the next line with your Model Studio API key: api_key="sk-xxx",

api_key=os.getenv('DASHSCOPE_API_KEY'),

model='qwen-vl-max-latest', # qwen-vl-max-latest is used as an example; replace it with another model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages=messages)

print(f"模型第一轮输出:{response.output.choices[0].message.content[0]['text']}")

messages.append(response['output']['choices'][0]['message'])

user_msg = {"role": "user", "content": [{"text": "做一首诗描述这个场景"}]}

messages.append(user_msg)

response = MultiModalConversation.call(

# If the environment variable is not set, replace the next line with your Model Studio API key: api_key="sk-xxx",

api_key=os.getenv('DASHSCOPE_API_KEY'),

model='qwen-vl-max-latest',

messages=messages)

print(f"模型第二轮输出:{response.output.choices[0].message.content[0]['text']}")

Sample response

模型第一轮输出:这是一张在海滩上拍摄的照片。照片中有一个穿着格子衬衫的人和一只戴着项圈的狗。人和狗面对面坐着,似乎在互动。背景是大海和天空,阳光洒在他们身上,营造出温暖的氛围。

模型第二轮输出:在阳光照耀的海滩上,人与狗共享欢乐时光。

Java

import java.util.ArrayList;

import java.util.Arrays;

import java.util.Collections;

import java.util.List;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

public class Main {

private static final String modelName = "qwen-vl-max-latest"; // qwen-vl-max-latest is used as an example; replace it with another model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

public static void MultiRoundConversationCall() throws ApiException, NoApiKeyException, UploadFileException {

MultiModalConversation conv = new MultiModalConversation();

MultiModalMessage systemMessage = MultiModalMessage.builder().role(Role.SYSTEM.getValue())

.content(Arrays.asList(Collections.singletonMap("text", "You are a helpful assistant."))).build();

MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())

.content(Arrays.asList(Collections.singletonMap("image", "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"),

Collections.singletonMap("text", "图中描绘的是什么景象?"))).build();

List<MultiModalMessage> messages = new ArrayList<>();

messages.add(systemMessage);

messages.add(userMessage);

MultiModalConversationParam param = MultiModalConversationParam.builder()

// If the environment variable is not set, replace the next line with your Model Studio API key: .apiKey("sk-xxx")

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model(modelName)

.messages(messages)

.build();

MultiModalConversationResult result = conv.call(param);

System.out.println("第一轮输出:"+result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text")); // add the result to conversation

messages.add(result.getOutput().getChoices().get(0).getMessage());

MultiModalMessage msg = MultiModalMessage.builder().role(Role.USER.getValue())

.content(Arrays.asList(Collections.singletonMap("text", "做一首诗描述这个场景"))).build();

messages.add(msg);

param.setMessages((List)messages);

result = conv.call(param);

System.out.println("第二轮输出:"+result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text")); }

public static void main(String[] args) {

try {

MultiRoundConversationCall();

} catch (ApiException | NoApiKeyException | UploadFileException e) {

System.out.println(e.getMessage());

}

System.exit(0);

}

}

Sample response

第一轮输出:这是一张在海滩上拍摄的照片。照片中有一个穿着格子衬衫的人和一只戴着项圈的狗。人和狗面对面坐着,似乎在互动。背景是大海和天空,阳光洒在他们身上,营造出温暖的氛围。

第二轮输出:在阳光洒满的海滩上,人与狗共享欢乐时光。

curl

curl -X POST https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation \

-H "Authorization: Bearer $DASHSCOPE_API_KEY" \

-H 'Content-Type: application/json' \

-d '{

"model": "qwen-vl-max-latest",

"input":{

"messages":[

{

"role": "system",

"content": [{"text": "You are a helpful assistant."}]},

{

"role": "user",

"content": [

{"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"},

{"text": "图中描绘的是什么景象?"}

]

},

{

"role": "assistant",

"content": [

{"text": "图中是一名女子和一只拉布拉多犬在沙滩上玩耍。"}

]

},

{

"role": "user",

"content": [

{"text": "写一首七言绝句描述这个场景"}

]

}

]

}

}'

Sample response

{

"output": {

"choices": [

{

"finish_reason": "stop",

"message": {

"role": "assistant",

"content": [

{

"text": "海浪轻拍沙滩边,女孩与狗同嬉戏。阳光洒落笑颜开,快乐时光永铭记。"

}

]

}

}

]

},

"usage": {

"output_tokens": 27,

"input_tokens": 1298,

"image_tokens": 1247

},

"request_id": "bdf5ef59-c92e-92a6-9d69-a738ecee1590"

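}

Streaming output

After receiving the input, the model generates intermediate results step by step, and the final result is the concatenation of those intermediate results. Emitting intermediate results while generation is still in progress is called streaming output. With streaming output you can read the reply as the model produces it, which shortens the wait.

OpenAI compatible

To enable streaming output through the OpenAI-compatible interface, set the stream parameter to true in the request.

By default, streaming output does not return the number of tokens used by the request. Set the stream_options parameter to {"include_usage": True} so that the last chunk returned includes the token usage for the request.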

Python

from openai import OpenAI

import os

client = OpenAI(

# If the environment variable is not set, replace the next line with your Model Studio API key: api_key="sk-xxx"

api_key=os.getenv("DASHSCOPE_API_KEY"),

base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",

)

completion = client.chat.completions.create(

model="qwen-vl-max-latest", # qwen-vl-max-latest is used as an example; replace it with another model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages=[

{"role": "system",

"content": [{"type":"text","text": "You are a helpful assistant."}]},

{"role": "user",

"content": [{"type": "image_url",

"image_url": {"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"},},

{"type": "text", "text": "图中描绘的是什么景象?"}]}],

stream=True

)

full_content = ""

print("流式输出内容为:")

for chunk in completion:
    # When stream_options.include_usage is True, the last chunk has an empty choices list and must be skipped (read the token usage from chunk.usage instead).
    # delta.content may also be None or "", so test its truthiness before concatenating.
    if chunk.choices and chunk.choices[0].delta.content:
        full_content += chunk.choices[0].delta.content
        print(chunk.choices[0].delta.content)

print(f"完整内容为:{full_content}")

Sample response

流式输出内容为:

图

中

描绘

的是

一个

女人

......

温暖

和谐

的

氛围

。

完整内容为:图中描绘的是一个女人和一只狗在海滩上互动的场景。女人坐在沙滩上,微笑着与狗握手,显得非常开心。背景是大海和天空,阳光洒在她们身上,营造出一种温暖和谐的氛围。

Node.js

import OpenAI from "openai";

const openai = new OpenAI(

{

// If the environment variable is not set, replace the next line with your Model Studio API key: apiKey: "sk-xxx"

apiKey: process.env.DASHSCOPE_API_KEY,

baseURL: "https://dashscope.aliyuncs.com/compatible-mode/v1"

}

);

const completion = await openai.chat.completions.create({

model: "qwen-vl-max-latest", // qwen-vl-max-latest is used as an example; replace it with another model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages: [

{"role": "system",

"content": [{"type":"text","text": "You are a helpful assistant."}]},

{"role": "user",

"content": [{"type": "image_url",

"image_url": {"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"},},

{"type": "text", "text": "图中描绘的是什么景象?"}]}],

stream: true,

});

let fullContent = ""

console.log("流式输出内容为:")

for await (const chunk of completion) {
    // When stream_options.include_usage is true, the last chunk has an empty choices array and must be skipped (read the token usage from chunk.usage instead).
    if (Array.isArray(chunk.choices) && chunk.choices.length > 0 && chunk.choices[0].delta.content != null) {
        fullContent += chunk.choices[0].delta.content;
        console.log(chunk.choices[0].delta.content);
    }
}

console.log(`完整内容为:${fullContent}`)

Sample response

流式输出内容为:

图中描绘的是

一个女人和一只

狗在海滩上

互动的景象。

......

在她们身上,

营造出温暖和谐

的氛围。

完整内容为:图中描绘的是一个女人和一只狗在海滩上互动的景象。女人穿着格子衬衫,坐在沙滩上,微笑着与狗握手。狗戴着项圈,看起来很开心。背景是大海和天空,阳光洒在她们身上,营造出温暖和谐的氛围。

curl

curl --location 'https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions' \

--header "Authorization: Bearer $DASHSCOPE_API_KEY" \

--header 'Content-Type: application/json' \

--data '{

"model": "qwen-vl-max-latest",

"messages": [

{

"role": "system",

"content": [{"type":"text","text": "You are a helpful assistant."}]},

{

"role": "user",

"content": [

{

"type": "image_url",

"image_url": {

"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"

}

},

{

"type": "text",

"text": "图中描绘的是什么景象?"

}

]

}

],

"stream":true,

"stream_options":{"include_usage":true}

}'

Sample response

data: {"choices":[{"delta":{"content":"","role":"assistant"},"index":0,"logprobs":null,"finish_reason":null}],"object":"chat.completion.chunk","usage":null,"created":1721823635,"system_fingerprint":null,"model":"qwen-vl-plus","id":"chatcmpl-9a9ec75a-3109-9910-b79e-7bcbce81c8f9"}

data: {"choices":[{"finish_reason":null,"delta":{"content":"图"},"index":0,"logprobs":null}],"object":"chat.completion.chunk","usage":null,"created":1721823635,"system_fingerprint":null,"model":"qwen-vl-plus","id":"chatcmpl-9a9ec75a-3109-9910-b79e-7bcbce81c8f9"}

data: {"choices":[{"delta":{"content":"中"},"finish_reason":null,"index":0,"logprobs":null}],"object":"chat.completion.chunk","usage":null,"created":1721823635,"system_fingerprint":null,"model":"qwen-vl-plus","id":"chatcmpl-9a9ec75a-3109-9910-b79e-7bcbce81c8f9"}

......

data: {"choices":[{"delta":{"content":"分拍摄的照片。整体氛围显得非常"},"finish_reason":null,"index":0,"logprobs":null}],"object":"chat.completion.chunk","usage":null,"created":1721823635,"system_fingerprint":null,"model":"qwen-vl-plus","id":"chatcmpl-9a9ec75a-3109-9910-b79e-7bcbce81c8f9"}

data: {"choices":[{"finish_reason":"stop","delta":{"content":"和谐而温馨。"},"index":0,"logprobs":null}],"object":"chat.completion.chunk","usage":null,"created":1721823635,"system_fingerprint":null,"model":"qwen-vl-plus","id":"chatcmpl-9a9ec75a-3109-9910-b79e-7bcbce81c8f9"}

data: {"choices":[],"object":"chat.completion.chunk","usage":{"prompt_tokens":1276,"completion_tokens":85,"total_tokens":1361},"created":1721823635,"system_fingerprint":null,"model":"qwen-vl-plus","id":"chatcmpl-9a9ec75a-3109-9910-b79e-7bcbce81c8f9"}

data: [DONE]
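For clients that read the raw HTTP stream instead of using an SDK, the `data:` lines shown above can be consumed roughly as follows. This is a minimal sketch, not an official client: the function name `collect_sse` is hypothetical, the input is assumed to be an iterable of already-decoded text lines, and the sample lines are shortened versions of the response above.

```python
import json
from typing import Iterable, Optional, Tuple


def collect_sse(lines: Iterable[str]) -> Tuple[str, Optional[dict]]:
    """Concatenate delta contents from `data:` lines and return (text, usage)."""
    text_parts = []
    usage = None
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank lines and anything that is not a data line
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(payload)
        if chunk.get("usage"):
            usage = chunk["usage"]  # only present when include_usage is set
        choices = chunk.get("choices") or []
        if choices:
            content = choices[0].get("delta", {}).get("content")
            if content:
                text_parts.append(content)
    return "".join(text_parts), usage


if __name__ == "__main__":
    sample = [
        'data: {"choices":[{"delta":{"content":"","role":"assistant"},"index":0}],"usage":null}',
        'data: {"choices":[{"delta":{"content":"图"},"index":0}],"usage":null}',
        'data: {"choices":[{"delta":{"content":"中"},"index":0}],"usage":null}',
        'data: {"choices":[],"usage":{"prompt_tokens":1276,"completion_tokens":85,"total_tokens":1361}}',
        "data: [DONE]",
    ]
    text, usage = collect_sse(sample)
    print(text, usage)
```

The empty `choices` array on the usage chunk is the reason the loop checks `choices` before indexing into it.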

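DashScope

You can call the Qwen-VL models through the DashScope SDK or over HTTP to try streaming output. The parameter to set depends on the calling method:

Python SDK: set the stream parameter to True.

Java SDK: call the streamCall interface.

HTTP: set X-DashScope-SSE to enable in the request header.

By default, streamed content is non-incremental, meaning each returned chunk contains everything generated so far. For incremental streaming output, set the incremental_output parameter (incrementalOutput in Java) to true.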
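To illustrate the difference between the two modes, the sketch below turns a non-incremental stream (each item repeats everything generated so far) into the increments that the incremental mode would deliver. The function `to_deltas` is a hypothetical client-side helper written for this explanation; it operates on plain strings and assumes each snapshot normally extends the previous one.

```python
from typing import Iterable, Iterator


def to_deltas(snapshots: Iterable[str]) -> Iterator[str]:
    """Yield only the newly added text from each cumulative snapshot."""
    seen = ""
    for snapshot in snapshots:
        if snapshot.startswith(seen):
            delta = snapshot[len(seen):]
        else:
            # Assumption: if a snapshot does not extend the previous one, emit it whole.
            delta = snapshot
        seen = snapshot
        if delta:
            yield delta


if __name__ == "__main__":
    cumulative = ["这张", "这张图片", "这张图片展示"]
    print(list(to_deltas(cumulative)))
```

Setting incremental_output to true makes this client-side step unnecessary, because each chunk then carries only the new text.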

Python

import os

from dashscope import MultiModalConversation

messages = [

{

"role": "system",

"content": [{"text": "You are a helpful assistant."}]},

{

"role": "user",

"content": [

{"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"},

{"text": "图中描绘的是什么景象?"}

]

}

]

responses = MultiModalConversation.call(

# If the environment variable is not set, replace the next line with your Model Studio API key: api_key="sk-xxx"

api_key=os.getenv("DASHSCOPE_API_KEY"),

model='qwen-vl-max-latest', # qwen-vl-max-latest is used as an example; replace it with another model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages=messages,

stream=True,

incremental_output=True

)

full_content = ""

print("流式输出内容为:")

for response in responses:
    # The final chunk may carry an empty content list, so check before indexing.
    if response["output"]["choices"][0]["message"].content:
        print(response["output"]["choices"][0]["message"].content[0]["text"])
        full_content += response["output"]["choices"][0]["message"].content[0]["text"]

print(f"完整内容为:{full_content}")

Sample response

流式输出内容为:

图中描绘的是

一个人和一只狗

在海滩上互动

......

阳光洒在他们

身上,营造出

温暖和谐的氛围

。

完整内容为:图中描绘的是一个人和一只狗在海滩上互动的景象。这个人穿着格子衬衫,坐在沙滩上,与一只戴着项圈的金毛猎犬握手。背景是海浪和天空,阳光洒在他们身上,营造出温暖和谐的氛围。

Java

import java.util.Arrays;

import java.util.Collections;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

import io.reactivex.Flowable;

public class Main {

public static void streamCall()

throws ApiException, NoApiKeyException, UploadFileException {

MultiModalConversation conv = new MultiModalConversation();

MultiModalMessage systemMessage = MultiModalMessage.builder().role(Role.SYSTEM.getValue())

.content(Arrays.asList(Collections.singletonMap("text", "You are a helpful assistant."))).build();

MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())

.content(Arrays.asList(Collections.singletonMap("image", "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"),

Collections.singletonMap("text", "图中描绘的是什么景象?"))).build();

MultiModalConversationParam param = MultiModalConversationParam.builder()

// If the environment variable is not set, replace the next line with your Model Studio API key: .apiKey("sk-xxx")

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model("qwen-vl-max-latest") // qwen-vl-max-latest is used as an example; replace it with another model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

.messages(Arrays.asList(systemMessage, userMessage))

.incrementalOutput(true)

.build();

Flowable<MultiModalConversationResult> result = conv.streamCall(param);

result.blockingForEach(item -> {

try {

var content = item.getOutput().getChoices().get(0).getMessage().getContent();

// Check that content exists and is not empty

if (content != null && !content.isEmpty()) {

System.out.println(content.get(0).get("text"));

}

} catch (Exception e) {

System.out.println(e.getMessage());

}

});

}

public static void main(String[] args) {

try {

streamCall();

} catch (ApiException | NoApiKeyException | UploadFileException e) {

System.out.println(e.getMessage());

}

System.exit(0);

}

}

Sample response

图

中

描绘

的是

一个

女人

和

一只

狗

在

海滩

......

营造

出

一种

温暖

和谐

的

氛围

。

curl

curl -X POST https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation \

-H "Authorization: Bearer $DASHSCOPE_API_KEY" \

-H 'Content-Type: application/json' \

-H 'X-DashScope-SSE: enable' \

-d '{

"model": "qwen-vl-max-latest",

"input":{

"messages":[

{

"role": "system",

"content": [

{"text": "You are a helpful assistant."}

]

},

{

"role": "user",

"content": [

{"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"},

{"text": "图中描绘的是什么景象?"}

]

}

]

},

"parameters": {

"incremental_output": true

}

}'

Sample response

id:1

event:result

:HTTP_STATUS/200

data:{"output":{"choices":[{"message":{"content":[{"text":"这张"}],"role":"assistant"},"finish_reason":"null"}]},"usage":{"input_tokens":1276,"output_tokens":1,"image_tokens":1247},"request_id":"00917f72-d927-9344-8417-2c4088d64c16"}

id:2

event:result

:HTTP_STATUS/200

data:{"output":{"choices":[{"message":{"content":[{"text":"图片"}],"role":"assistant"},"finish_reason":"null"}]},"usage":{"input_tokens":1276,"output_tokens":2,"image_tokens":1247},"request_id":"00917f72-d927-9344-8417-2c4088d64c16"}

......

id:17

event:result

:HTTP_STATUS/200

data:{"output":{"choices":[{"message":{"content":[{"text":"的欣赏。这是一个温馨的画面,展示了"}],"role":"assistant"},"finish_reason":"null"}]},"usage":{"input_tokens":1276,"output_tokens":112,"image_tokens":1247},"request_id":"00917f72-d927-9344-8417-2c4088d64c16"}

id:18

event:result

:HTTP_STATUS/200

data:{"output":{"choices":[{"message":{"content":[{"text":"人与动物之间深厚的情感纽带。"}],"role":"assistant"},"finish_reason":"null"}]},"usage":{"input_tokens":1276,"output_tokens":120,"image_tokens":1247},"request_id":"00917f72-d927-9344-8417-2c4088d64c16"}

id:19

event:result

:HTTP_STATUS/200

data:{"output":{"choices":[{"message":{"content":[],"role":"assistant"},"finish_reason":"stop"}]},"usage":{"input_tokens":1276,"output_tokens":121,"image_tokens":1247},"request_id":"00917f72-d927-9344-8417-2c4088d64c16"}

High-resolution image understanding

Set the vl_high_resolution_images parameter to true to raise the per-image token limit of the Qwen-VL models from 1280 to 16384:

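| Value | Per-image token limit | Description | Use case |
|---|---|---|---|
| True | 16384 | | Content-rich scenes where details matter |
| False (default) | 1280 | | Scenes with few details, where the model only needs to grasp the general information, or where speed matters |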
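For the HTTP route, the parameter sits under `parameters` in the request body, next to `model` and `input`. The sketch below builds such a body as a Python dict. The helper name `build_payload` is hypothetical, and the structure simply mirrors the DashScope curl requests on this page; treat it as an illustration rather than a complete client.

```python
import json
from typing import Any, Dict


def build_payload(model: str, image_url: str, prompt: str,
                  high_resolution: bool = False) -> Dict[str, Any]:
    """Build a request body for the DashScope multimodal-generation HTTP endpoint."""
    return {
        "model": model,
        "input": {
            "messages": [
                # DashScope-style content items: {"image": ...} and {"text": ...}
                {"role": "user", "content": [{"image": image_url}, {"text": prompt}]}
            ]
        },
        # False keeps the default per-image limit of 1280 tokens; True raises it to 16384.
        "parameters": {"vl_high_resolution_images": high_resolution},
    }


if __name__ == "__main__":
    body = build_payload(
        "qwen-vl-max-latest",
        "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20250212/earbrt/vcg_VCG211286867973_RF.jpg",
        "这张图表现了什么内容?",
        high_resolution=True,
    )
    print(json.dumps(body, ensure_ascii=False, indent=2))
```

Because a higher limit means more image tokens are billed as input, it is worth enabling only for images whose details matter.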

The vl_high_resolution_images parameter is supported only through the DashScope SDK and HTTP.

Python

import os

import dashscope

messages = [

{

"role": "user",

"content": [

{"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20250212/earbrt/vcg_VCG211286867973_RF.jpg"},

{"text": "这张图表现了什么内容?"}

]

}

]

response = dashscope.MultiModalConversation.call(

# If the environment variable is not set, replace the next line with your Model Studio API key: api_key="sk-xxx"

api_key=os.getenv('DASHSCOPE_API_KEY'),

model='qwen-vl-max-latest', # qwen-vl-max-latest is used as an example; replace it with another model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages=messages,

vl_high_resolution_images=True

)

print("大模型的回复:\n ",response.output.choices[0].message.content[0]["text"])

print("Token用量情况:","输入总Token:",response.usage["input_tokens"] , ",输入图像Token:" , response.usage["image_tokens"])

Sample response

大模型的回复:

这张图片展示了一个温馨的圣诞装饰场景。图中可以看到以下元素:

1. **圣诞树**:两棵小型的圣诞树,上面覆盖着白色的雪。

2. **驯鹿摆件**:一只棕色的驯鹿摆件,带有大大的鹿角。

3. **蜡烛和烛台**:几个木制的烛台,里面点燃了小蜡烛,散发出温暖的光芒。

4. **圣诞装饰品**:包括金色的球形装饰、松果、红色浆果串等。

5. **圣诞礼物盒**:一个小巧的金色礼物盒,用金色丝带系着。

6. **圣诞字样**:木质的“MERRY CHRISTMAS”字样,增加了节日气氛。

7. **背景**:木质的背景板,给人一种自然和温暖的感觉。

整体氛围非常温馨和喜庆,充满了浓厚的圣诞节气息。

Token用量情况: 输入总Token: 5368 ,输入图像Token: 5342

Java

// Requires dashscope SDK version >= 2.20.8

import java.util.Arrays;

import java.util.Collections;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

public class Main {

public static void simpleMultiModalConversationCall()

throws ApiException, NoApiKeyException, UploadFileException {

MultiModalConversation conv = new MultiModalConversation();

MultiModalMessage systemMessage = MultiModalMessage.builder().role(Role.SYSTEM.getValue())

.content(Arrays.asList(

Collections.singletonMap("text", "You are a helpful assistant."))).build();

MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())

.content(Arrays.asList(

Collections.singletonMap("image", "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20250212/earbrt/vcg_VCG211286867973_RF.jpg"),

Collections.singletonMap("text", "这张图表现了什么内容?"))).build();

MultiModalConversationParam param = MultiModalConversationParam.builder()

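// If the environment variable is not configured, replace the next line with your Model Studio API key: .apiKey("sk-xxx")

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model("qwen-vl-max-latest") // qwen-vl-max-latest is used as an example; replace the model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models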

.messages(Arrays.asList(systemMessage, userMessage))

.vlHighResolutionImages(true)

.build();

MultiModalConversationResult result = conv.call(param);

System.out.println("大模型的回复:\n" + result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));

System.out.println("Token 用量情况:输入总Token:" + result.getUsage().getInputTokens() + ",输入图像的Token:" + result.getUsage().getImageTokens());

}

public static void main(String[] args) {

try {

simpleMultiModalConversationCall();

} catch (ApiException | NoApiKeyException | UploadFileException e) {

System.out.println(e.getMessage());

}

System.exit(0);

}

}

curl

curl -X POST https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation \
  -H "Authorization: Bearer $DASHSCOPE_API_KEY" \
  -H 'Content-Type: application/json' \
  -d '{
    "model": "qwen-vl-max-latest",
    "input": {
      "messages": [
        {
          "role": "system",
          "content": [{"text": "You are a helpful assistant."}]
        },
        {
          "role": "user",
          "content": [
            {"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20250212/earbrt/vcg_VCG211286867973_RF.jpg"},
            {"text": "这张图表现了什么内容?"}
          ]
        }
      ]
    },
    "parameters": {
      "vl_high_resolution_images": true
    }
  }'

Response

{

"output": {

"choices": [

{

"finish_reason": "stop",

"message": {

"role": "assistant",

"content": [

{

"text": "这张图片展示了一个温馨的圣诞装饰场景。画面中包括以下元素:\n\n1. **圣诞树**:两棵小型的圣诞树,上面覆盖着白色的雪。\n2. **驯鹿摆件**:一只棕色的驯鹿摆件,位于画面中央偏右的位置。\n3. **蜡烛**:几根木制的蜡烛,其中两根已经点燃,发出温暖的光芒。\n4. **圣诞装饰品**:一些金色和红色的装饰球、松果、浆果和绿色的松枝。\n5. **圣诞礼物**:一个小巧的金色礼物盒,旁边还有一个带有圣诞图案的袋子。\n6. **“MERRY CHRISTMAS”字样**:用木质字母拼写的“MERRY CHRISTMAS”,放在画面左侧。\n\n整个场景布置在一个木质背景前,营造出一种温暖、节日的氛围,非常适合圣诞节的庆祝活动。"

}

]

}

}

]

},

"usage": {

"total_tokens": 5553,

"output_tokens": 185,

"input_tokens": 5368,

"image_tokens": 5342

},

"request_id": "38cd5622-e78e-90f5-baa0-c6096ba39b04"

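}

Multi-image input

Qwen-VL models accept multiple images in a single request and analyze them together. The total token count of all images must stay within the model's maximum input; for the maximum number of images, see the image count limits.

The sample code below analyzes several online images (specified by URL, not local files). See also the sections on passing local files and on image limits.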
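As a rough sketch of how a multi-image request body can be assembled for the OpenAI-compatible interface, the helper below builds the `content` list of one user message: one `image_url` part per image, followed by the text prompt. The function name `build_multi_image_content` is hypothetical and not part of any SDK; it only mirrors the message shape used in the samples in this section.

```python
from typing import Dict, List


def build_multi_image_content(image_urls: List[str], question: str) -> List[Dict]:
    """Return the `content` list for one user message: image parts first, then the text part.

    Hypothetical helper: it mirrors the message shape used in the samples,
    it is not an SDK function.
    """
    if not image_urls:
        raise ValueError("at least one image URL is required")
    content: List[Dict] = [
        {"type": "image_url", "image_url": {"url": url}} for url in image_urls
    ]
    content.append({"type": "text", "text": question})
    return content


content = build_multi_image_content(
    [
        "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg",
        "https://dashscope.oss-cn-beijing.aliyuncs.com/images/tiger.png",
    ],
    "这些图描绘了什么内容?",
)
print(len(content))  # two image parts plus one text part
```

The resulting list can be passed as the `content` of the user message in `client.chat.completions.create(...)`, exactly where the samples write the parts out by hand.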

OpenAI-compatible

Python

import os

from openai import OpenAI

client = OpenAI(

api_key=os.getenv("DASHSCOPE_API_KEY"),

base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",

)

completion = client.chat.completions.create(

model="qwen-vl-max-latest", # qwen-vl-max-latest is used as an example; replace the model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages=[

{"role":"system","content":[{"type": "text", "text": "You are a helpful assistant."}]},

{"role": "user","content": [

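# URL of the first image; to pass a local file, replace the url value with the image in Base64-encoded form

{"type": "image_url","image_url": {"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"},},

# URL of the second image; to pass a local file, replace the url value with the image in Base64-encoded form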

{"type": "image_url","image_url": {"url": "https://dashscope.oss-cn-beijing.aliyuncs.com/images/tiger.png"},},

{"type": "text", "text": "这些图描绘了什么内容?"},

],

}

],

)

print(completion.choices[0].message.content)

Response

图1中是一位女士和一只拉布拉多犬在海滩上互动的场景。女士穿着格子衬衫,坐在沙滩上,与狗进行握手的动作,背景是海浪和天空,整个画面充满了温馨和愉快的氛围。

图2中是一只老虎在森林中行走的场景。老虎的毛色是橙色和黑色条纹相间,它正向前迈步,周围是茂密的树木和植被,地面上覆盖着落叶,整个画面给人一种野生自然的感觉。

Node.js

import OpenAI from "openai";

const openai = new OpenAI(

{

// If the environment variable is not configured, replace the next line with your Model Studio API key: apiKey: "sk-xxx"

apiKey: process.env.DASHSCOPE_API_KEY,

baseURL: "https://dashscope.aliyuncs.com/compatible-mode/v1"

}

);

async function main() {

const response = await openai.chat.completions.create({

model: "qwen-vl-max-latest", // qwen-vl-max-latest is used as an example; replace the model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages: [

{role: "system",content:[{ type: "text", text: "You are a helpful assistant." }]},

{role: "user",content: [

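// URL of the first image; to pass a local file, replace the url value with the image in Base64-encoded form

{type: "image_url",image_url: {"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"}},

// URL of the second image; to pass a local file, replace the url value with the image in Base64-encoded form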

{type: "image_url",image_url: {"url": "https://dashscope.oss-cn-beijing.aliyuncs.com/images/tiger.png"}},

{type: "text", text: "这些图描绘了什么内容?" },

]}]

});

console.log(response.choices[0].message.content);

}

main()

Response

第一张图片中,一个人和一只狗在海滩上互动。人穿着格子衬衫,狗戴着项圈,他们似乎在握手或击掌。

第二张图片中,一只老虎在森林中行走。老虎的毛色是橙色和黑色条纹,背景是绿色的树木和植被。

curl

curl -X POST https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions \
  -H "Authorization: Bearer $DASHSCOPE_API_KEY" \
  -H 'Content-Type: application/json' \
  -d '{
    "model": "qwen-vl-max-latest",
    "messages": [
      {
        "role": "system",
        "content": [{"type": "text", "text": "You are a helpful assistant."}]
      },
      {
        "role": "user",
        "content": [
          {
            "type": "image_url",
            "image_url": {
              "url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"
            }
          },
          {
            "type": "image_url",
            "image_url": {
              "url": "https://dashscope.oss-cn-beijing.aliyuncs.com/images/tiger.png"
            }
          },
          {
            "type": "text",
            "text": "这些图描绘了什么内容?"
          }
        ]
      }
    ]
  }'

Response

{

"choices": [

{

"message": {

"content": "图1中是一位女士和一只拉布拉多犬在海滩上互动的场景。女士穿着格子衬衫,坐在沙滩上,与狗进行握手的动作,背景是海景和日落的天空,整个画面显得非常温馨和谐。\n\n图2中是一只老虎在森林中行走的场景。老虎的毛色是橙色和黑色条纹相间,它正向前迈步,周围是茂密的树木和植被,地面上覆盖着落叶,整个画面充满了自然的野性和生机。",

"role": "assistant"

},

"finish_reason": "stop",

"index": 0,

"logprobs": null

}

],

"object": "chat.completion",

"usage": {

"prompt_tokens": 2497,

"completion_tokens": 109,

"total_tokens": 2606

},

"created": 1725948561,

"system_fingerprint": null,

"model": "qwen-vl-max",

"id": "chatcmpl-0fd66f46-b09e-9164-a84f-3ebbbedbac15"

}

DashScope

Python

import os

import dashscope

messages = [

{

"role": "system",

"content": [{"text": "You are a helpful assistant."}]},

{

"role": "user",

"content": [

# URL of the first image

# To pass a local file, replace the URL with file://ABSOLUTE_PATH/test.jpg, where ABSOLUTE_PATH is the absolute path of the local file

{"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"},

# URL of the second image

{"image": "https://dashscope.oss-cn-beijing.aliyuncs.com/images/tiger.png"},

# URL of the third image

{"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/hbygyo/rabbit.jpg"},

{"text": "这些图描绘了什么内容?"}

]

}

]

response = dashscope.MultiModalConversation.call(

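# If the environment variable is not configured, replace the next line with your Model Studio API key: api_key="sk-xxx"

api_key=os.getenv('DASHSCOPE_API_KEY'),

model='qwen-vl-max-latest', # qwen-vl-max-latest is used as an example; replace the model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models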

messages=messages

)

print(response.output.choices[0].message.content[0]["text"])

Response

这些图片展示了一些动物和自然场景。第一张图片中,一个人和一只狗在海滩上互动。第二张图片是一只老虎在森林中行走。第三张图片是一只卡通风格的兔子在草地上跳跃。

Java

import java.util.Arrays;

import java.util.Collections;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

import java.util.HashMap;

public class Main {

public static void simpleMultiModalConversationCall()

throws ApiException, NoApiKeyException, UploadFileException {

MultiModalConversation conv = new MultiModalConversation();

// To use a local image, import java.util.HashMap and add a String localPath parameter (the actual path of the local file) to this method,

// then uncomment the line below. This was tested on macOS; on Windows, use "file:///" + localPath instead.

// String filePath = "file://"+localPath;

MultiModalMessage systemMessage = MultiModalMessage.builder().role(Role.SYSTEM.getValue())

.content(Arrays.asList(

Collections.singletonMap("text", "You are a helpful assistant."))).build();

MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())

.content(Arrays.asList(

// URL of the first image

Collections.singletonMap("image", "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"),

// To use a local image, uncomment the line below

// new HashMap<String, Object>(){{put("image", filePath);}},

// URL of the second image

Collections.singletonMap("image", "https://dashscope.oss-cn-beijing.aliyuncs.com/images/tiger.png"),

// URL of the third image

Collections.singletonMap("image", "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/hbygyo/rabbit.jpg"),

Collections.singletonMap("text", "这些图描绘了什么内容?"))).build();

MultiModalConversationParam param = MultiModalConversationParam.builder()

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model("qwen-vl-max-latest") // qwen-vl-max-latest is used as an example; replace the model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

.messages(Arrays.asList(systemMessage, userMessage))

.build();

MultiModalConversationResult result = conv.call(param);

System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));

}

public static void main(String[] args) {

try {

simpleMultiModalConversationCall();

} catch (ApiException | NoApiKeyException | UploadFileException e) {

System.out.println(e.getMessage());

}

System.exit(0);

}

}

Response

这些图片展示了一些动物和自然场景。

1. 第一张图片:一个女人和一只狗在海滩上互动。女人穿着格子衬衫,坐在沙滩上,狗戴着项圈,伸出爪子与女人握手。

2. 第二张图片:一只老虎在森林中行走。老虎的毛色是橙色和黑色条纹,背景是树木和树叶。

3. 第三张图片:一只卡通风格的兔子在草地上跳跃。兔子是白色的,耳朵是粉红色的,背景是蓝天和黄色的花朵。

curl

curl --location 'https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation' \
  --header "Authorization: Bearer $DASHSCOPE_API_KEY" \
  --header 'Content-Type: application/json' \
  --data '{
    "model": "qwen-vl-max-latest",
    "input": {
      "messages": [
        {
          "role": "system",
          "content": [{"text": "You are a helpful assistant."}]
        },
        {
          "role": "user",
          "content": [
            {"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241022/emyrja/dog_and_girl.jpeg"},
            {"image": "https://dashscope.oss-cn-beijing.aliyuncs.com/images/tiger.png"},
            {"image": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/hbygyo/rabbit.jpg"},
            {"text": "这些图展现了什么内容?"}
          ]
        }
      ]
    }
  }'

Response

{

"output": {

"choices": [

{

"finish_reason": "stop",

"message": {

"role": "assistant",

"content": [

{

"text": "这张图片显示了一位女士和她的狗在海滩上。她们似乎正在享受彼此的陪伴,狗狗坐在沙滩上伸出爪子与女士握手或互动。背景是美丽的日落景色,海浪轻轻拍打着海岸线。\n\n请注意,我提供的描述基于图像中可见的内容,并不包括任何超出视觉信息之外的信息。如果您需要更多关于这个场景的具体细节,请告诉我!"

}

]

}

}

]

},

"usage": {

"output_tokens": 81,

"input_tokens": 1277,

"image_tokens": 1247

},

"request_id": "ccf845a3-dc33-9cda-b581-20fe7dc23f70"

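}

Video understanding

Some Qwen-VL models can understand video content, supplied either as an image list (video frames) or as a video file.

For video files, use the latest model version or a recent snapshot, as these perform better.

Video file

Video file limits

Video size:

When passing a public URL: Qwen2.5-VL series models accept videos up to 1 GB; other models accept videos up to 150 MB.

When passing a local file:

With the OpenAI SDK, the Base64-encoded video must be smaller than 10 MB.

With the DashScope SDK, the video itself must be smaller than 100 MB. For details, see the section on passing local files.

Video duration:

Qwen2.5-VL series models: 2 seconds to 10 minutes.

Other models: 2 seconds to 40 seconds.

Video formats: MP4, AVI, MKV, MOV, FLV, WMV, and others.

Video resolution: no specific limit. Videos are resized to roughly 600,000 pixels before the model processes them, so a larger resolution does not improve understanding.

Understanding the audio track of a video file is not currently supported.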
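The limits above for videos passed by public URL can be checked before a request is sent. The following is a minimal sketch, not an official validator: the function name `check_url_video` is hypothetical, and treating 1 MB as 1024 × 1024 bytes is an assumption, since the text does not define the unit.

```python
from typing import List

# Assumption: binary megabytes. The limits text does not say which definition applies.
MB = 1024 * 1024


def check_url_video(size_bytes: int, duration_s: float, is_qwen25_vl: bool) -> List[str]:
    """Return a list of limit violations for a video passed by public URL (empty if none)."""
    max_bytes = 1024 * MB if is_qwen25_vl else 150 * MB  # 1 GB vs. 150 MB
    max_seconds = 600 if is_qwen25_vl else 40            # 10 minutes vs. 40 seconds
    problems: List[str] = []
    if size_bytes > max_bytes:
        problems.append(f"size {size_bytes / MB:.0f} MB exceeds {max_bytes // MB} MB")
    if not 2 <= duration_s <= max_seconds:
        problems.append(f"duration {duration_s} s is outside 2-{max_seconds} s")
    return problems


# A 200 MB, 30-second clip: too large for other models, acceptable for Qwen2.5-VL.
print(check_url_video(200 * MB, 30, is_qwen25_vl=False))
print(check_url_video(200 * MB, 30, is_qwen25_vl=True))
```

Local files have different size limits (10 MB after Base64 encoding, or 100 MB by file path), so they would need a separate check.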

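Video frame extraction

Qwen-VL models analyze a video by sampling frames from it. The sampling rate determines how finely the model analyzes the video, and how you control it depends on the SDK:

Using the DashScope SDK:

Set the fps parameter to control the sampling rate: one frame is extracted every 1/fps seconds. Use a larger fps value for fast-motion scenes (such as sports events or action films) and a smaller fps value for static or long videos.

Using the OpenAI SDK:

The sampling rate is fixed at one frame every 0.5 seconds and cannot be changed through a parameter.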
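To make the relationship between fps, sampling interval, and frame count concrete, here is a small arithmetic sketch. The helper names are hypothetical, and the frame count is only an estimate (duration × fps); the service may round differently or apply caps not covered here.

```python
# The OpenAI-compatible mode samples one frame every 0.5 s, which corresponds to fps = 2.
OPENAI_COMPAT_FPS = 2.0


def sampling_interval(fps: float) -> float:
    """Seconds between two sampled frames: one frame every 1/fps seconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1.0 / fps


def estimated_frames(duration_s: float, fps: float) -> int:
    """Rough number of frames sampled from a video of the given duration (an estimate only)."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return int(duration_s * fps)


print(sampling_interval(OPENAI_COMPAT_FPS))  # interval used by the OpenAI-compatible mode
print(estimated_frames(40, 2))               # 40-second clip at fps=2
print(estimated_frames(600, 0.5))            # 10-minute video at fps=0.5
```

A lower fps keeps the frame count, and therefore the image token usage, down for long videos, at the cost of temporal detail.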

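The sample code below analyzes an online video (specified by URL). To pass a local file instead, see the section on passing local files.

OpenAI-compatible

When passing a video file directly to a Qwen-VL model through the OpenAI SDK or HTTP, set the "type" parameter in the user message to "video_url".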

Python

import os

from openai import OpenAI

client = OpenAI(

# If the environment variable is not configured, replace the next line with your Model Studio API key: api_key="sk-xxx",

api_key=os.getenv("DASHSCOPE_API_KEY"),

base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",

)

completion = client.chat.completions.create(

model="qwen-vl-max-latest",

messages=[

{"role": "system",

"content": [{"type": "text","text": "You are a helpful assistant."}]},

{"role": "user","content": [{

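# When passing a video file directly, set type to video_url

# With the OpenAI SDK, one frame is extracted every 0.5 seconds and this cannot be changed; to set a custom sampling rate, use the DashScope SDK.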

"type": "video_url",

"video_url": {"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241115/cqqkru/1.mp4"}},

{"type": "text","text": "这段视频的内容是什么?"}]

}]

)

print(completion.choices[0].message.content)

Node.js

import OpenAI from "openai";

const openai = new OpenAI(

{

// If the environment variable is not configured, replace the next line with your Model Studio API key: apiKey: "sk-xxx"

apiKey: process.env.DASHSCOPE_API_KEY,

baseURL: "https://dashscope.aliyuncs.com/compatible-mode/v1"

}

);

async function main() {

const response = await openai.chat.completions.create({

model: "qwen-vl-max-latest",

messages: [

{role: "system", content: [{type: "text", text: "You are a helpful assistant."}]},

{role: "user",content: [

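// When passing a video file directly, set type to video_url

// With the OpenAI SDK, one frame is extracted every 0.5 seconds and this cannot be changed; to set a custom sampling rate, use the DashScope SDK.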

{type: "video_url", video_url: {"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241115/cqqkru/1.mp4"}},

{type: "text", text: "这段视频的内容是什么?" },

]}]

});

console.log(response.choices[0].message.content);

}

main()

curl

curl -X POST https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions \
  -H "Authorization: Bearer $DASHSCOPE_API_KEY" \
  -H 'Content-Type: application/json' \
  -d '{
    "model": "qwen-vl-max-latest",
    "messages": [
      {"role": "system", "content": [{"type": "text", "text": "You are a helpful assistant."}]},
      {"role": "user", "content": [
        {"type": "video_url", "video_url": {"url": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241115/cqqkru/1.mp4"}},
        {"type": "text", "text": "这段视频的内容是什么?"}
      ]}
    ]
  }'

DashScope

Python

import dashscope

import os

messages = [

{"role":"system","content":[{"text": "You are a helpful assistant."}]},

{"role": "user",

"content": [

# The fps parameter controls the video sampling rate: one frame is extracted every 1/fps seconds. For full usage, see: https://help.aliyun.com/zh/model-studio/use-qwen-by-calling-api?#2ed5ee7377fum

{"video": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241115/cqqkru/1.mp4","fps":2},

{"text": "这段视频的内容是什么?"}

]

}

]

response = dashscope.MultiModalConversation.call(

# If the environment variable is not configured, replace the next line with your Model Studio API key: api_key="sk-xxx"

api_key=os.getenv('DASHSCOPE_API_KEY'),

model='qwen-vl-max-latest',

messages=messages

)

print(response.output.choices[0].message.content[0]["text"])

Java

import java.util.Arrays;

import java.util.Collections;

import java.util.Map;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

import com.alibaba.dashscope.utils.JsonUtils;

public class Main {

public static void simpleMultiModalConversationCall()

throws ApiException, NoApiKeyException, UploadFileException {

MultiModalConversation conv = new MultiModalConversation();

// The fps parameter controls the video sampling rate: one frame is extracted every 1/fps seconds. For full usage, see: https://help.aliyun.com/zh/model-studio/use-qwen-by-calling-api?#2ed5ee7377fum

Map<String, Object> params = Map.of(

"video", "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241115/cqqkru/1.mp4",

"fps",2);

MultiModalMessage systemMessage = MultiModalMessage.builder().role(Role.SYSTEM.getValue())

.content(Arrays.asList(

Collections.singletonMap("text", "You are a helpful assistant."))).build();

MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())

.content(Arrays.asList(

params,

Collections.singletonMap("text", "这段视频的内容是什么?"))).build();

MultiModalConversationParam param = MultiModalConversationParam.builder()

// If the environment variable is not configured, replace the next line with your Model Studio API key: .apiKey("sk-xxx")

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model("qwen-vl-max-latest")

.messages(Arrays.asList(systemMessage, userMessage))

.build();

MultiModalConversationResult result = conv.call(param);

System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));

}

public static void main(String[] args) {

try {

simpleMultiModalConversationCall();

} catch (ApiException | NoApiKeyException | UploadFileException e) {

System.out.println(e.getMessage());

}

System.exit(0);

}

}

curl

curl -X POST https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation \
  -H "Authorization: Bearer $DASHSCOPE_API_KEY" \
  -H 'Content-Type: application/json' \
  -d '{
    "model": "qwen-vl-max-latest",
    "input": {
      "messages": [
        {"role": "system", "content": [{"text": "You are a helpful assistant."}]},
        {"role": "user", "content": [
          {"video": "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241115/cqqkru/1.mp4", "fps": 2},
          {"text": "这段视频的内容是什么?"}
        ]}
      ]
    }
  }'

Image list

Image list count limits

Qwen2.5-VL series models: at least 4 images and at most 512 images

Other models: at least 4 images and at most 80 images
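The count limits above can be enforced on the client side before sending a request. The sketch below is one possible approach, with hypothetical names (`max_frames`, `fit_frame_list`): it rejects lists that are too short and thins lists that are too long by keeping evenly spaced frames. Evenly spaced thinning is an illustrative choice here, not something the service prescribes.

```python
from typing import List, Sequence

MIN_FRAMES = 4  # minimum for all models, per the limits above


def max_frames(is_qwen25_vl: bool) -> int:
    """Upper bound on image-list length: 512 for Qwen2.5-VL series models, 80 for other models."""
    return 512 if is_qwen25_vl else 80


def fit_frame_list(frames: Sequence[str], is_qwen25_vl: bool) -> List[str]:
    """Return a frame list within the count limits, thinning evenly if it is too long."""
    limit = max_frames(is_qwen25_vl)
    n = len(frames)
    if n < MIN_FRAMES:
        raise ValueError(f"need at least {MIN_FRAMES} frames, got {n}")
    if n <= limit:
        return list(frames)
    # Keep `limit` evenly spaced frames, always including the first and the last one.
    return [frames[i * (n - 1) // (limit - 1)] for i in range(limit)]


frames = [f"frame{i}.jpg" for i in range(100)]  # placeholder names, not real URLs
kept = fit_frame_list(frames, is_qwen25_vl=False)
print(len(kept), kept[0], kept[-1])
```

Note that thinning changes the effective time interval between frames, so any fps value passed alongside the list would need to be scaled down accordingly.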

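Video frame extraction

When a video is passed as an image list (frames extracted in advance), the fps parameter tells the model the time interval between frames, which helps it judge the order, duration, and dynamics of events more accurately.

Using the DashScope SDK:

When calling a Qwen2.5-VL series model, you can set the fps parameter, which states that the frames were extracted from the original video every 1/fps seconds.

Using the OpenAI SDK:

The fps parameter cannot be set; the model assumes the frames were extracted at one frame every 0.5 seconds.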
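As an illustration of what the fps value conveys for an image list, the sketch below computes the timestamp each frame represents and builds the DashScope-style `video` content item used in the samples. Both function names are hypothetical, and placing the first frame at t = 0 is an assumption made for illustration.

```python
from typing import Dict, List, Sequence


def frame_timestamps(n_frames: int, fps: float) -> List[float]:
    """Timestamps in seconds implied by fps: frame i sits at i / fps (first frame assumed at t = 0)."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return [i / fps for i in range(n_frames)]


def dashscope_video_item(frame_urls: Sequence[str], fps: float) -> Dict:
    """Build the content item for an image list, in the shape used by the DashScope samples."""
    return {"video": list(frame_urls), "fps": fps}


urls = [
    "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/xzsgiz/football1.jpg",
    "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/tdescd/football2.jpg",
    "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/zefdja/football3.jpg",
    "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/aedbqh/football4.jpg",
]
item = dashscope_video_item(urls, fps=2)
print(frame_timestamps(len(urls), fps=2))
```

With fps=2 the four frames span 1.5 seconds; if the frames were actually extracted one per second, fps=1 would describe them more faithfully.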

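The sample code below analyzes online video frames (specified by URL). To pass local files instead, see the section on passing local files.

OpenAI-compatible

When passing a video as an image list to a Qwen-VL model through the OpenAI SDK or HTTP, set the "type" parameter in the user message to "video".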

Python

import os

from openai import OpenAI

client = OpenAI(

# If the environment variable is not configured, replace the next line with your Model Studio API key: api_key="sk-xxx",

api_key=os.getenv("DASHSCOPE_API_KEY"),

base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",

)

completion = client.chat.completions.create(

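model="qwen-vl-max-latest", # qwen-vl-max-latest is used as an example; replace the model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages=[{"role": "user","content": [

# When passing an image list, the "type" parameter in the user message is "video"

# With the OpenAI SDK, the image list is assumed to have been extracted from the video at one frame every 0.5 seconds, and this cannot be changed. To set a custom sampling rate, use the DashScope SDK.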

{"type": "video","video": ["https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/xzsgiz/football1.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/tdescd/football2.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/zefdja/football3.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/aedbqh/football4.jpg"]},

{"type": "text","text": "描述这个视频的具体过程"},

]}]

)

print(completion.choices[0].message.content)

Node.js

// Make sure "type": "module" is set in package.json

import OpenAI from "openai";

const openai = new OpenAI({

// If the environment variable is not configured, replace the next line with your Model Studio API key: apiKey: "sk-xxx",

apiKey: process.env.DASHSCOPE_API_KEY,

baseURL: "https://dashscope.aliyuncs.com/compatible-mode/v1"

});

async function main() {

const response = await openai.chat.completions.create({

model: "qwen-vl-max-latest", // qwen-vl-max-latest is used as an example; replace the model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages: [{

role: "user",

content: [

{

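// When passing an image list, the "type" parameter in the user message is "video"

// With the OpenAI SDK, the image list is assumed to have been extracted from the video at one frame every 0.5 seconds, and this cannot be changed. To set a custom sampling rate, use the DashScope SDK.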

type: "video",

video: [

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/xzsgiz/football1.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/tdescd/football2.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/zefdja/football3.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/aedbqh/football4.jpg"

]

},

{

type: "text",

text: "描述这个视频的具体过程"

}

]

}]

});

console.log(response.choices[0].message.content);

}

main();

curl

curl -X POST https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions \
  -H "Authorization: Bearer $DASHSCOPE_API_KEY" \
  -H 'Content-Type: application/json' \
  -d '{
    "model": "qwen-vl-max-latest",
    "messages": [{
      "role": "user",
      "content": [
        {
          "type": "video",
          "video": [
            "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/xzsgiz/football1.jpg",
            "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/tdescd/football2.jpg",
            "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/zefdja/football3.jpg",
            "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/aedbqh/football4.jpg"
          ]
        },
        {
          "type": "text",
          "text": "描述这个视频的具体过程"
        }
      ]
    }]
  }'

DashScope

Python

import os

# Requires dashscope version 1.20.10 or later

import dashscope

messages = [{"role": "user",

"content": [

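# For Qwen2.5-VL series models given an image list, you can set the fps parameter to state that the frames were extracted from the original video every 1/fps seconds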

{"video":["https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/xzsgiz/football1.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/tdescd/football2.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/zefdja/football3.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/aedbqh/football4.jpg"],

"fps":2},

{"text": "描述这个视频的具体过程"}]}]

response = dashscope.MultiModalConversation.call(

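# If the environment variable is not configured, replace the next line with your Model Studio API key: api_key="sk-xxx",

api_key=os.getenv("DASHSCOPE_API_KEY"),

model='qwen-vl-max-latest', # qwen-vl-max-latest is used as an example; replace the model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models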

messages=messages

)

print(response["output"]["choices"][0]["message"].content[0]["text"])

Java

// Requires DashScope SDK version 2.18.3 or later

import java.util.Arrays;

import java.util.Collections;

import java.util.Map;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

public class Main {

private static final String MODEL_NAME = "qwen-vl-max-latest"; // qwen-vl-max-latest is used as an example; replace the model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

public static void videoImageListSample() throws ApiException, NoApiKeyException, UploadFileException {

MultiModalConversation conv = new MultiModalConversation();

MultiModalMessage systemMessage = MultiModalMessage.builder()

.role(Role.SYSTEM.getValue())

.content(Arrays.asList(Collections.singletonMap("text", "You are a helpful assistant.")))

.build();

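// For Qwen2.5-VL series models given an image list, you can set the fps parameter to state that the frames were extracted from the original video every 1/fps seconds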

Map<String, Object> params = Map.of(

"video", Arrays.asList("https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/xzsgiz/football1.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/tdescd/football2.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/zefdja/football3.jpg",

"https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/aedbqh/football4.jpg"),

"fps",2);

MultiModalMessage userMessage = MultiModalMessage.builder()

.role(Role.USER.getValue())

.content(Arrays.asList(

params,

Collections.singletonMap("text", "描述这个视频的具体过程")))

.build();

MultiModalConversationParam param = MultiModalConversationParam.builder()

// If the environment variable is not configured, replace the next line with your Model Studio API key: .apiKey("sk-xxx")

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model(MODEL_NAME)

.messages(Arrays.asList(systemMessage, userMessage)).build();

MultiModalConversationResult result = conv.call(param);

System.out.print(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));

}

public static void main(String[] args) {

try {

videoImageListSample();

} catch (ApiException | NoApiKeyException | UploadFileException e) {

System.out.println(e.getMessage());

}

System.exit(0);

}

}

curl

curl -X POST https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation \
  -H "Authorization: Bearer $DASHSCOPE_API_KEY" \
  -H 'Content-Type: application/json' \
  -d '{
    "model": "qwen-vl-max-latest",
    "input": {
      "messages": [
        {
          "role": "user",
          "content": [
            {
              "video": [
                "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/xzsgiz/football1.jpg",
                "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/tdescd/football2.jpg",
                "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/zefdja/football3.jpg",
                "https://help-static-aliyun-doc.aliyuncs.com/file-manage-files/zh-CN/20241108/aedbqh/football4.jpg"
              ],
              "fps": 2
            },
            {
              "text": "描述这个视频的具体过程"
            }
          ]
        }
      ]
    }
  }'

Passing local files (Base64 encoding or file path)

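Qwen-VL supports two ways to upload local files:

Base64-encoded upload

Direct file path upload (more stable transfer; recommended)

Upload methods:

Base64-encoded upload

Convert the file to a Base64-encoded string and pass that string to the model. This works with the OpenAI SDK, the DashScope SDK, and HTTP.

File path upload

Pass the local file path directly to the model. Only the DashScope Python and Java SDKs support this; DashScope HTTP and the OpenAI-compatible mode do not.

Refer to the table below to specify the file path for your programming language and operating system.

Limits:

File path upload is the preferred choice because it is more stable; Base64 encoding is also fine for files under 1 MB.

When passing a file path directly, each image or video frame (image list) must be smaller than 10 MB, and each video must be smaller than 100 MB.

When passing Base64-encoded data, the encoded image or video must be smaller than 10 MB; keep in mind that Base64 encoding increases the data size.

To reduce file size, see "How do I compress an image or video to meet the size requirements?"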
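Because Base64 turns every 3 bytes into 4 characters, a file grows by roughly a third when encoded, so the 10 MB limit on encoded data is reached by a raw file of about 7.5 MB. The sketch below estimates the encoded size and builds a data URL of the `data:image/{format};base64,...` form used in the samples. The helper names are hypothetical; treating 10 MB as 10 × 1024 × 1024 bytes and measuring only the Base64 payload (not the `data:` prefix) are assumptions.

```python
import base64
import mimetypes

# Assumption: 10 MB means 10 * 1024 * 1024 bytes and applies to the Base64 payload.
MAX_ENCODED_BYTES = 10 * 1024 * 1024


def base64_length(raw_size: int) -> int:
    """Length of the padded Base64 encoding of raw_size bytes: 4 characters per 3-byte group."""
    return 4 * ((raw_size + 2) // 3)


def fits_base64_limit(raw_size: int) -> bool:
    """True if a file of raw_size bytes stays under the limit once Base64-encoded."""
    return base64_length(raw_size) < MAX_ENCODED_BYTES


def to_data_url(data: bytes, filename: str) -> str:
    """Build a data URL whose MIME type is guessed from the file extension."""
    mime, _ = mimetypes.guess_type(filename)
    if mime is None:
        raise ValueError(f"cannot determine MIME type for {filename!r}")
    encoded = base64.b64encode(data).decode("utf-8")
    return f"data:{mime};base64,{encoded}"


print(fits_base64_limit(7 * 1024 * 1024))  # a 7 MB file after encoding
print(fits_base64_limit(8 * 1024 * 1024))  # an 8 MB file after encoding
print(to_data_url(b"abc", "eagle.png"))    # tiny placeholder payload, not a real image
```

Guessing the MIME type from the extension keeps the `image/{format}` part consistent with the file type, which matters because the declared format has to match a supported Content Type.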

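Images

Passing a file path

Passing a file path is supported only when calling through the DashScope Python and Java SDKs; DashScope HTTP and the OpenAI-compatible mode do not support it.

Python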

import os

from dashscope import MultiModalConversation

# Replace xxx/eagle.png with the absolute path of your local image

local_path = "xxx/eagle.png"

image_path = f"file://{local_path}"

messages = [{"role": "system",

"content": [{"text": "You are a helpful assistant."}]},

{'role':'user',

'content': [{'image': image_path},

{'text': '图中描绘的是什么景象?'}]}]

response = MultiModalConversation.call(

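# If the environment variable is not configured, replace the next line with your Model Studio API key: api_key="sk-xxx"

api_key=os.getenv('DASHSCOPE_API_KEY'),

model='qwen-vl-max-latest', # qwen-vl-max-latest is used as an example; replace the model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models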

messages=messages)

print(response["output"]["choices"][0]["message"].content[0]["text"])

Java

import java.util.Arrays;

import java.util.Collections;

import java.util.HashMap;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

public class Main {

public static void callWithLocalFile(String localPath)

throws ApiException, NoApiKeyException, UploadFileException {

String filePath = "file://"+localPath;

MultiModalConversation conv = new MultiModalConversation();

MultiModalMessage systemMessage = MultiModalMessage.builder().role(Role.SYSTEM.getValue())

.content(Arrays.asList(Collections.singletonMap("text", "You are a helpful assistant."))).build();

MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())

.content(Arrays.asList(new HashMap<String, Object>(){{put("image", filePath);}},

new HashMap<String, Object>(){{put("text", "图中描绘的是什么景象?");}})).build();

MultiModalConversationParam param = MultiModalConversationParam.builder()

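// If the environment variable is not configured, replace the next line with your Model Studio API key: .apiKey("sk-xxx")

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model("qwen-vl-max-latest") // qwen-vl-max-latest is used as an example; replace the model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models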

.messages(Arrays.asList(systemMessage, userMessage))

.build();

MultiModalConversationResult result = conv.call(param);

System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));

}

public static void main(String[] args) {

try {

// Replace xxx/eagle.png with the absolute path of your local image

callWithLocalFile("xxx/eagle.png");

} catch (ApiException | NoApiKeyException | UploadFileException e) {

System.out.println(e.getMessage());

}

System.exit(0);

}

}

Passing Base64-encoded data

OpenAI-compatible

Python

from openai import OpenAI

import os

import base64

# Encoding helper: convert a local file to a Base64-encoded string

def encode_image(image_path):

    with open(image_path, "rb") as image_file:

        return base64.b64encode(image_file.read()).decode("utf-8")

# Replace xxx/eagle.png with the absolute path of your local image

base64_image = encode_image("xxx/eagle.png")

client = OpenAI(

# If the environment variable is not configured, replace the next line with your Model Studio API key: api_key="sk-xxx"

api_key=os.getenv('DASHSCOPE_API_KEY'),

base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",

)

completion = client.chat.completions.create(

model="qwen-vl-max-latest", # qwen-vl-max-latest is used as an example; replace the model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages=[

{

"role": "system",

"content": [{"type":"text","text": "You are a helpful assistant."}]},

{

"role": "user",

"content": [

{

"type": "image_url",

# Note: when passing Base64 data, the image format (image/{format}) must match the Content Type in the list of supported image formats. The f prefix marks a Python f-string.

# PNG image: f"data:image/png;base64,{base64_image}"

# JPEG image: f"data:image/jpeg;base64,{base64_image}"

# WEBP image: f"data:image/webp;base64,{base64_image}"

"image_url": {"url": f"data:image/png;base64,{base64_image}"},

},

{"type": "text", "text": "图中描绘的是什么景象?"},

],

}

],

)

print(completion.choices[0].message.content)

Node.js

import OpenAI from "openai";

import { readFileSync } from 'fs';

const openai = new OpenAI(

{

// If the environment variable is not configured, replace the next line with your Model Studio API key: apiKey: "sk-xxx"

apiKey: process.env.DASHSCOPE_API_KEY,

baseURL: "https://dashscope.aliyuncs.com/compatible-mode/v1"

}

);

const encodeImage = (imagePath) => {

const imageFile = readFileSync(imagePath);

return imageFile.toString('base64');

};

// Replace xxx/eagle.png with the absolute path of your local image

const base64Image = encodeImage("xxx/eagle.png")

async function main() {

const completion = await openai.chat.completions.create({

model: "qwen-vl-max-latest", // qwen-vl-max-latest is used as an example; replace the model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models

messages: [

{"role": "system",

"content": [{"type":"text","text": "You are a helpful assistant."}]},

{"role": "user",

"content": [{"type": "image_url",

// Note: when passing Base64 data, the image format (image/{format}) must match the Content Type in the list of supported image formats.

// PNG image: data:image/png;base64,${base64Image}

// JPEG image: data:image/jpeg;base64,${base64Image}

// WEBP image: data:image/webp;base64,${base64Image}

"image_url": {"url": `data:image/png;base64,${base64Image}`},},

{"type": "text", "text": "图中描绘的是什么景象?"}]}]

});

console.log(completion.choices[0].message.content);

}

main();

curl

For how to convert a file to a Base64-encoded string, see the sample code.

For readability, the Base64 string in the command below, "data:image/png;base64,/9j/4AAQSkZJRgABAQAAAQABAAD/2wBDAA...", is truncated. In real use, pass the complete encoded string.

curl --location 'https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions' \
  --header "Authorization: Bearer $DASHSCOPE_API_KEY" \
  --header 'Content-Type: application/json' \
  --data '{
    "model": "qwen-vl-max",
    "messages": [
      {
        "role": "system",
        "content": [{"type": "text", "text": "You are a helpful assistant."}]
      },
      {
        "role": "user",
        "content": [
          {"type": "image_url", "image_url": {"url": "data:image/png;base64,/9j/4AAQSkZJRgABAQAAAQABAAD/2wBDAA..."}},
          {"type": "text", "text": "图中描绘的是什么景象?"}
        ]
      }
    ]
  }'

DashScope

Python

import base64

import os

from dashscope import MultiModalConversation

# Encoding helper: convert a local file to a Base64-encoded string

def encode_image(image_path):

    with open(image_path, "rb") as image_file:

        return base64.b64encode(image_file.read()).decode("utf-8")

# Replace xxxx/eagle.png with the absolute path of your local image

base64_image = encode_image("xxxx/eagle.png")

messages = [

{"role": "system", "content": [{"text": "You are a helpful assistant."}]},

{

"role": "user",

"content": [

# Note: when passing Base64 data, the image format (image/{format}) must match the Content Type in the list of supported image formats. The f prefix marks a Python f-string.

# PNG image: f"data:image/png;base64,{base64_image}"

# JPEG image: f"data:image/jpeg;base64,{base64_image}"

# WEBP image: f"data:image/webp;base64,{base64_image}"

{"image": f"data:image/png;base64,{base64_image}"},

{"text": "图中描绘的是什么景象?"},

],

},

]

response = MultiModalConversation.call(

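# If the environment variable is not set, replace the next line with your Model Studio (Bailian) API key: api_key="sk-xxx"

api_key=os.getenv("DASHSCOPE_API_KEY"),

model="qwen-vl-max-latest", # qwen-vl-max-latest is used as an example; replace it with another model name as needed. Model list: https://help.aliyun.com/zh/model-studio/models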

messages=messages,

)

print(response["output"]["choices"][0]["message"].content[0]["text"])

Java

import java.io.IOException;

import java.util.Arrays;

import java.util.Collections;

import java.util.HashMap;

import java.util.Base64;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

import com.alibaba.dashscope.aigc.multimodalconversation.*;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

public class Main {

private static String encodeImageToBase64(String imagePath) throws IOException {

Path path = Paths.get(imagePath);

byte[] imageBytes = Files.readAllBytes(path);

return Base64.getEncoder().encodeToString(imageBytes);

}

public static void callWithLocalFile(String localPath) throws ApiException, NoApiKeyException, UploadFileException, IOException {

String base64Image = encodeImageToBase64(localPath); // Base64-encode the image file

MultiModalConversation conv = new MultiModalConversation();

MultiModalMessage systemMessage = MultiModalMessage.builder().role(Role.SYSTEM.getValue())

.content(Arrays.asList(Collections.singletonMap("text", "You are a helpful assistant."))).build();

MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())

.content(Arrays.asList(

new HashMap<String, Object>() {{ put("image", "data:image/png;base64," + base64Image); }},

new HashMap<String, Object>() {{ put("text", "What scene does this image depict?"); }}

)).build();

MultiModalConversationParam param = MultiModalConversationParam.builder()

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model("qwen-vl-max-latest")

.messages(Arrays.asList(systemMessage, userMessage))

.build();

MultiModalConversationResult result = conv.call(param);

System.out.println(result.getOutput().getChoices().get(0).getMessage().getContent().get(0).get("text"));

}

public static void main(String[] args) {

try {

// Replace xxx/eagle.png with the absolute path of your local image

callWithLocalFile("xxx/eagle.png");

} catch (ApiException | NoApiKeyException | UploadFileException | IOException e) {

System.out.println(e.getMessage());

}

System.exit(0);

}

}

curl

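For how to convert a file to a Base64-encoded string, see the sample code. For readability, the Base64 string shown in this example, "data:image/png;base64,/9j/4AAQSkZJRgABAQAAAQABAAD/2wBDAA...", is truncated. In a real request, always pass the complete encoded string.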

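A complete Base64 string is usually too long to paste into a shell command comfortably. One possible workaround, sketched below in Python, is to generate the request body as a JSON file and let curl read it with `-d @payload.json`. The function names `build_payload` and `write_payload` and the file name `payload.json` are hypothetical; the body layout mirrors the curl example in this section, and the sketch assumes a PNG input.

```python
import base64
import json
from pathlib import Path


def build_payload(image_path: str, prompt: str, model: str = "qwen-vl-max-latest") -> dict:
    """Build the request body used by the curl example, with the full Base64 string."""
    encoded = base64.b64encode(Path(image_path).read_bytes()).decode("utf-8")
    return {
        "model": model,
        "input": {
            "messages": [
                {"role": "system", "content": [{"text": "You are a helpful assistant."}]},
                {
                    "role": "user",
                    "content": [
                        # Assumes a PNG file; change image/png to match the real format.
                        {"image": f"data:image/png;base64,{encoded}"},
                        {"text": prompt},
                    ],
                },
            ]
        },
    }


def write_payload(image_path: str, prompt: str, out_path: str = "payload.json") -> None:
    """Serialize the request body to a JSON file that curl can read with -d @file."""
    body = build_payload(image_path, prompt)
    Path(out_path).write_text(json.dumps(body, ensure_ascii=False), encoding="utf-8")
```

After calling `write_payload("xxx/eagle.png", "What scene does this image depict?")`, the curl command can keep the same URL and headers and replace the inline body with `-d @payload.json`.
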
curl -X POST https://dashscope.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation \

-H "Authorization: Bearer $DASHSCOPE_API_KEY" \

-H 'Content-Type: application/json' \

-d '{

"model": "qwen-vl-max-latest",

"input":{

"messages":[

{"role": "system",

"content": [

{"text": "You are a helpful assistant."}]},

{

"role": "user",

"content": [

{"image": "data:image/png;base64,/9j/4AAQSkZJRgABAQAAAQABAAD/2wBDAA..."},

{"text": "What scene does this image depict?"}

]

}

]

}

}'

Video files

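The following examples use a locally saved test.mp4.

Passing a file path

Passing a file path is supported only when calling through the DashScope Python and Java SDKs. It is not supported over DashScope HTTP or the OpenAI-compatible interface.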
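The SDK examples in this section build the path by prefixing an absolute path with file://. As a minimal sketch, a small Python helper can resolve a relative path and fail early when the file is missing. `to_file_url` is a hypothetical helper, not part of the DashScope SDK, and it assumes POSIX-style paths.

```python
from pathlib import Path


def to_file_url(path: str) -> str:
    """Resolve path to an absolute path and prefix it with file://."""
    absolute = Path(path).expanduser().resolve()
    # Fail before the SDK call if the file does not exist.
    if not absolute.is_file():
        raise FileNotFoundError(f"no such file: {absolute}")
    return f"file://{absolute}"
```

The result can be used wherever the examples pass the video path, for instance as the value of the "video" key in the user message.
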

Python

import os

from dashscope import MultiModalConversation

# Replace xxx/test.mp4 with the absolute path of your local video

local_path = "xxx/test.mp4"

video_path = f"file://{local_path}"

messages = [{'role': 'system',

'content': [{'text': 'You are a helpful assistant.'}]},

{'role':'user',

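# fps controls how many frames are sampled from the video: one frame is extracted every 1/fps seconds (fps=2 means one frame every 0.5 seconds)

'content': [{'video': video_path,"fps":2},

{'text': 'What scene does this video depict?'}]}]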

response = MultiModalConversation.call(

# If the environment variable is not set, replace the next line with your Model Studio (Bailian) API key: api_key="sk-xxx"

api_key=os.getenv('DASHSCOPE_API_KEY'),

model='qwen-vl-max-latest',

messages=messages)

print(response["output"]["choices"][0]["message"].content[0]["text"])

Java

import java.util.Arrays;

import java.util.Collections;

import java.util.HashMap;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversation;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationParam;

import com.alibaba.dashscope.aigc.multimodalconversation.MultiModalConversationResult;

import com.alibaba.dashscope.common.MultiModalMessage;

import com.alibaba.dashscope.common.Role;

import com.alibaba.dashscope.exception.ApiException;

import com.alibaba.dashscope.exception.NoApiKeyException;

import com.alibaba.dashscope.exception.UploadFileException;

public class Main {

public static void callWithLocalFile(String localPath)

throws ApiException, NoApiKeyException, UploadFileException {

String filePath = "file://"+localPath;

MultiModalConversation conv = new MultiModalConversation();

MultiModalMessage systemMessage = MultiModalMessage.builder().role(Role.SYSTEM.getValue())

.content(Arrays.asList(Collections.singletonMap("text", "You are a helpful assistant."))).build();

MultiModalMessage userMessage = MultiModalMessage.builder().role(Role.USER.getValue())

.content(Arrays.asList(new HashMap<String, Object>()

{{

put("video", filePath);

// fps controls how many frames are sampled from the video: one frame is extracted every 1/fps seconds

put("fps", 2);

}},

new HashMap<String, Object>(){{put("text", "What scene does this video depict?");}})).build();

MultiModalConversationParam param = MultiModalConversationParam.builder()

// API keys differ between the Singapore and Beijing regions. Get an API key: https://www.alibabacloud.com/help/zh/model-studio/get-api-key

.apiKey(System.getenv("DASHSCOPE_API_KEY"))

.model("qwen-vl-max-latest")

.messages(Arrays.asList(systemMessage, userMessage))

.build();

MultiModalConversationResult result = conv.call(param);