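The EasyRec Processor built into EAS deploys recommendation models trained with EasyRec or TensorFlow as scoring services and can integrate feature engineering. By jointly optimizing feature engineering and the TensorFlow model, EasyRec Processor delivers high-performance scoring. This topic describes how to deploy and call an EasyRec model service.

Background information

EasyRec Processor is an inference service written to the PAI-EAS Processor specification (see Develop custom Processors using C or C++). It applies in two scenarios: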

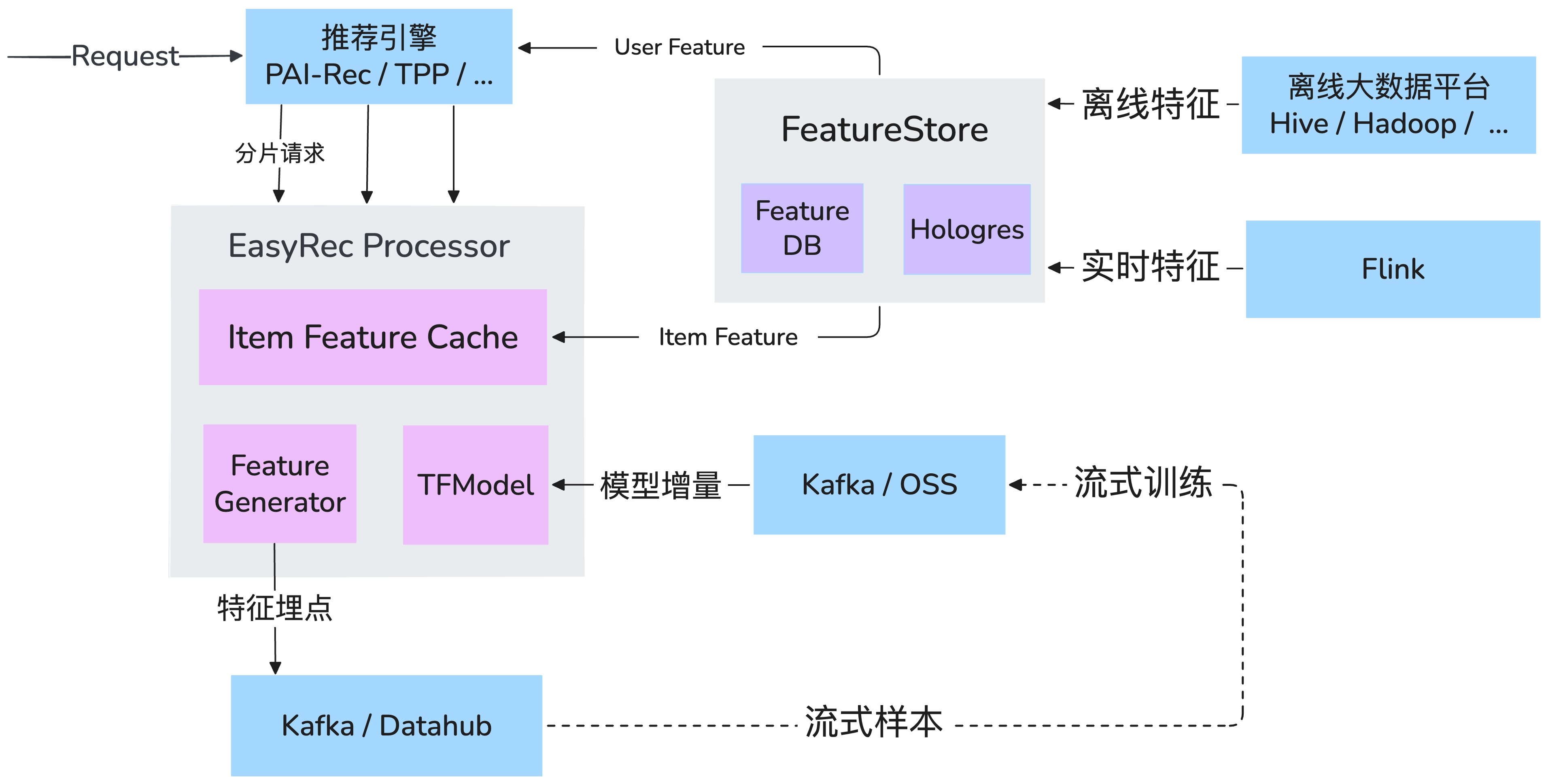

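For deep learning models trained with Feature Generator (FG) and EasyRec, EasyRec Processor caches item features in memory and optimizes feature transformation and inference, which substantially improves scoring performance. On top of this solution, you can use FeatureStore to manage online and real-time features. The recommendation solution customization of the PAI-Rec recommendation system development platform generates the related code and connects training, feature transformation, and inference optimization. Combined with the PAI-Rec engine, it lets you quickly deploy a model and bring it online. Choosing this solution reduces cost and improves development efficiency.

Models trained with EasyRec or TensorFlow can also be served without Feature Generator.

The architecture of a recommendation engine based on EasyRec Processor is shown in the following figure:

EasyRec Processor mainly contains the following modules: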

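Limitations

CPU inference: only the general-purpose instance families g6, g7, and g8 are supported (Intel CPUs only).

GPU inference: GPU models such as T4, A10, GU30, L20, 3090, and 4090 are supported (P100 is not supported).

For details, see General-purpose instance families (g series).

Release list

EasyRec Processor is still under active iteration. We recommend that you deploy inference services with the latest version, which provides more features and higher inference performance. Released versions: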

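Step 1: Deploy the service

When you deploy an EasyRec model service with the eascmd client, set the processor type to easyrec-{version}. For how to deploy services with the client tool, see Service deployment: EASCMD. Sample service configuration files:

Example using the new FG lib (fg_mode=normal)

The following example deploys from a PyODPS 3 node. In this mode you can use the new version of the feature generator (FeatureGenerator), which provides a rich set of built-in feature transformation operators, supports user-defined FG operators, accepts input features of complex types (array, map, and so on), and supports dependencies between features (DAG mode).

The example uses PAI-FeatureStore to manage feature data. Replace the ${fs_project} and ${fs_model} variables in the script with actual values. For more details, see Step 2: Create and deploy an EAS model service.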

import json

import os

service_name = 'ali_rec_rnk_with_fg'

config = {

'name': service_name,

'metadata': {

"cpu": 8,

#"cuda": "11.2",

"gateway": "default",

"gpu": 0,

"memory": 32000,

"rolling_strategy": {

"max_unavailable": 1

},

"rpc": {

"enable_jemalloc": 1,

"max_queue_size": 256

}

},

"model_path": "",

"processor": "easyrec-3.0",

"storage": [

{

"mount_path": "/home/admin/docker_ml/workspace/model/",

"oss": {

"path": "oss://easyrec/ali_rec_sln_acc_rnk/20250722/export/final_with_fg"

}

}

],

# When you change fg_mode, you must also change how the service is called.

# fg_mode normal or tf: call the service with the EasyRecRequest SDK.

# fg_mode bypass: call the service with the TFRequest SDK.

'model_config': {

'outputs': 'probs_ctr,probs_cvr',

'fg_mode': 'normal',

'steady_mode': True,

'period': 2880,

'access_key_id': f'{o.account.access_id}',

'access_key_secret': f'{o.account.secret_access_key}',

"load_feature_from_offlinestore": True,

'region': 'cn-shanghai',

'fs_project': '${fs_project}',

'fs_model': '${fs_model}',

'fs_entity': 'item',

'featuredb_username': 'guest',

'featuredb_password': '123456',

'log_iterate_time_threshold': 100,

'iterate_featuredb_interval': 5,

'mc_thread_pool_num': 1,

}

}

with open('echo.json', 'w') as output_file:

    json.dump(config, output_file)

os.system(f'/home/admin/usertools/tools/eascmd -i {o.account.access_id} -k {o.account.secret_access_key} -e pai-eas.cn-shanghai.aliyuncs.com create echo.json')

# os.system(f'/home/admin/usertools/tools/eascmd -i {o.account.access_id} -k {o.account.secret_access_key} -e pai-eas.cn-shanghai.aliyuncs.com modify {service_name} -s echo.json')

Note: Replace the values of featuredb_username and featuredb_password with a valid username and password.
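Because the FeatureDB username and password have to be replaced with real values, one option is to keep them out of the script entirely. The following is a minimal sketch, assuming the credentials are provided through two environment variables; the names FEATUREDB_USERNAME and FEATUREDB_PASSWORD and the helper featuredb_credentials are hypothetical and not part of EasyRec or eascmd.

```python
import os


def featuredb_credentials(env=os.environ):
    """Read FeatureDB credentials from environment variables (hypothetical names)."""
    username = env.get("FEATUREDB_USERNAME")
    password = env.get("FEATUREDB_PASSWORD")
    if not username or not password:
        raise RuntimeError(
            "Set FEATUREDB_USERNAME and FEATUREDB_PASSWORD before deploying")
    # Keys match the model_config fields used in the deployment script.
    return {"featuredb_username": username, "featuredb_password": password}


# Usage: merge the credentials into model_config before writing echo.json.
demo_env = {"FEATUREDB_USERNAME": "demo_user", "FEATUREDB_PASSWORD": "demo_pass"}
model_config = {"fg_mode": "normal", "fs_entity": "item"}
model_config.update(featuredb_credentials(demo_env))
```

The merged dictionary can then replace the hardcoded 'featuredb_username' and 'featuredb_password' entries in the config above.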

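Example using the TF OP version of FG (fg_mode=tf)

Note: The TF OP version of FG supports only a limited set of built-in FG features: id_feature, raw_feature, combo_feature, lookup_feature, match_feature, and sequence_feature. It does not support custom FG OPs.

The following example deploys with a shell script that contains the AccessKey ID and AccessKey Secret in plaintext. The script is simple and easy to follow, but it does not use PAI-FeatureStore and does not show how to load table data from MaxCompute to reduce the load on Hologres. For how to use PAI-FeatureStore and load data from MaxCompute, see Step 2: Create and deploy an EAS model service. That document deploys with a Python script, uses the DataWorks built-in object o, uses temporary STS credentials for better security, and sets load_feature_from_offlinestore to True.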

bizdate=$1

# When you change fg_mode, you must also change how the service is called. For fg_mode normal or tf, use the EasyRecRequest SDK. For fg_mode bypass, use the TFRequest SDK.

cat << EOF > echo.json

{

"name":"ali_rec_rnk_with_fg",

"metadata": {

"instance": 2,

"rpc": {

"enable_jemalloc": 1,

"max_queue_size": 100

}

},

"cloud": {

"computing": {

"instance_type": "ecs.g7.large",

"instances": null

}

},

"model_config": {

"remote_type": "hologres",

"url": "postgresql://<AccessKeyID>:<AccessKeySecret>@<host>:<port>/<database>",

"tables": [{"name":"<schema>.<table_name>","key":"<index_column_name>","value": "<column_name>"}],

"period": 2880,

"fg_mode": "tf",

"outputs":"probs_ctr,probs_cvr"

},

"model_path": "",

"processor": "easyrec-2.9",

"storage": [

{

"mount_path": "/home/admin/docker_ml/workspace/model/",

"oss": {

"path": "oss://easyrec/ali_rec_sln_acc_rnk/20221122/export/final_with_fg"

}

}

]

}

EOF

# Deploy the service.

eascmd create echo.json

# eascmd -i <AccessKeyID> -k <AccessKeySecret> -e <endpoint> create echo.json

# Update the service.

eascmd update ali_rec_rnk_with_fg -s echo.json

Example without FG (fg_mode=bypass)

bizdate=$1

# When you change fg_mode, you must also change how the service is called. For fg_mode normal or tf, use the EasyRecRequest SDK. For fg_mode bypass, use the TFRequest SDK.

cat << EOF > echo.json

{

"name":"ali_rec_rnk_no_fg",

"metadata": {

"instance": 2,

"rpc": {

"enable_jemalloc": 1,

"max_queue_size": 100

}

},

"cloud": {

"computing": {

"instance_type": "ecs.g7.large",

"instances": null

}

},

"model_config": {

"fg_mode": "bypass"

},

"processor": "easyrec-2.9",

"processor_envs": [

{

"name": "INPUT_TILE",

"value": "2"

}

],

"storage": [

{

"mount_path": "/home/admin/docker_ml/workspace/model/",

"oss": {

"path": "oss://easyrec/ali_rec_sln_acc_rnk/20221122/export/final/"

}

}

],

"warm_up_data_path": "oss://easyrec/ali_rec_sln_acc_rnk/rnk_warm_up.bin"

}

EOF

# Deploy the service.

eascmd create echo.json

# eascmd -i <AccessKeyID> -k <AccessKeySecret> -e <endpoint> create echo.json

# Update the service.

eascmd update ali_rec_rnk_no_fg -s echo.json

The key parameters are described below. For other parameters, see JSON deployment.

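| Parameter | Required | Description | Example |
| --- | --- | --- | --- |
| processor | Yes | The EasyRec Processor. | |
| fg_mode | Yes | The feature engineering mode. The mode you choose determines which SDK and request construction method you must use when calling the service: normal and tf use EasyRecRequest, and bypass uses TFRequest. | |
| outputs | Yes | Names of the output variables predicted by the TensorFlow model, such as probs_ctr. Separate multiple names with commas. If you do not know the output variable names, run the TensorFlow saved_model_cli command to view them. | "outputs":"probs_ctr,probs_cvr" |
| save_req | No | Whether to save the data files obtained from requests to the model directory. The saved files can be used for warm-up and performance testing. | "save_req": "false" |
| Item feature cache parameters | | | |
| period | Yes | Interval, in minutes, at which the item feature cache is refreshed. If item features are updated daily, set a value larger than one day (for example 2880; one day is 1440 minutes, so 2880 is two days). Features then do not need to be refreshed within the day, because the routine daily service update also updates the features. | |
| remote_type | Yes | The data source of item features. The example in this topic uses hologres. | |
| tables | No | Item feature tables. Required when remote_type is hologres. Each entry contains name, key, and value, as in the fg_mode=tf example. Item data can be read from multiple tables by listing several entries. If several tables contain the same column, later tables override earlier ones. | |
| url | No | The Hologres endpoint. | |
| Parameters for Processor access to FeatureStore | | | |
| fs_project | No | The FeatureStore project name. Required when you use FeatureStore. For FeatureStore documentation, see Configure a FeatureStore project. | "fs_project": "fs_demo" |
| fs_model | No | The FeatureStore model feature name. | "fs_model": "fs_rank_v1" |
| fs_entity | No | The FeatureStore entity name. | "fs_entity": "item" |
| region | No | The region where FeatureStore resides. | "region": "cn-beijing" |
| access_key_id | No | The access_key_id for FeatureStore. | "access_key_id": "xxxxx" |
| access_key_secret | No | The access_key_secret for FeatureStore. | "access_key_secret": "xxxxx" |
| featuredb_username | No | The FeatureDB username. | "featuredb_username": "xxxxx" |
| featuredb_password | No | The FeatureDB password. | "featuredb_password": "xxxxx" |
| load_feature_from_offlinestore | No | Whether offline features are read directly from the FeatureStore OfflineStore. | "load_feature_from_offlinestore": True |
| iterate_featuredb_interval | No | Interval, in seconds, at which real-time statistical features are updated. A shorter interval gives fresher features, but the read cost is higher when real-time features change quickly. Balance accuracy against cost. | "iterate_featuredb_interval": 5 |
| input_tile: automatic feature expansion parameters | | | |
| INPUT_TILE | No | Setting the INPUT_TILE environment variable to 1 enables automatic broadcast of item features: for a feature whose value is the same across one request (for example user_id), you can pass only one value. The value 2 is also supported and is used in the fg_mode=bypass example. | "processor_envs": [ { "name": "INPUT_TILE", "value": "2" } ] |
| ADAPTE_FG_CONFIG | No | Enable this variable for compatibility with models exported from training on samples produced by the old version of FG. | "processor_envs": [ { "name": "ADAPTE_FG_CONFIG", "value": "true" } ] |
| DISABLE_FG_PRECISION | No | Disable this variable for compatibility with models exported from training on samples produced by the old version of FG. (The old FG limits float features to 6 significant digits by default; the new FG removes this limit by default.) | "processor_envs": [ { "name": "DISABLE_FG_PRECISION", "value": "false" } ] |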
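The parameter rules above can be checked before running eascmd. The following is a minimal sketch, assuming the only valid fg_mode values are the three used in this topic (normal, tf, bypass) and that each tables entry needs name, key, and value as in the fg_mode=tf example. The function check_model_config is a hypothetical helper, not part of eascmd or EasyRec.

```python
VALID_FG_MODES = ("normal", "tf", "bypass")
TABLE_KEYS = ("name", "key", "value")


def check_model_config(model_config):
    """Return a list of problems found in a model_config dict (empty if none)."""
    problems = []
    fg_mode = model_config.get("fg_mode")
    if fg_mode not in VALID_FG_MODES:
        problems.append(
            f"fg_mode must be one of {VALID_FG_MODES}, got {fg_mode!r}")
    # period is an interval in minutes, for example 2880 for two days.
    period = model_config.get("period")
    if period is not None and (not isinstance(period, int) or period <= 0):
        problems.append("period must be a positive number of minutes")
    # tables is only needed when item features come from Hologres.
    if model_config.get("remote_type") == "hologres":
        tables = model_config.get("tables") or []
        if not tables:
            problems.append("tables is required when remote_type is hologres")
        for index, table in enumerate(tables):
            missing = [key for key in TABLE_KEYS if key not in table]
            if missing:
                problems.append(f"tables[{index}] is missing {missing}")
    return problems


# Usage: an empty list means no problem was detected by these checks.
print(check_model_config({"fg_mode": "bypass"}))
```

The check is deliberately conservative: it does not enforce the "Required" column for every parameter, because the deployment examples in this topic omit some of those fields depending on fg_mode.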

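Inference optimization parameters of EasyRec Processor

| Parameter | Required | Description | Example |
| --- | --- | --- | --- |
| TF_XLA_FLAGS | No | When a GPU is used, compiles and optimizes the model with XLA and fuses operators automatically. | "processor_envs": [ { "name": "TF_XLA_FLAGS", "value": "--tf_xla_auto_jit=2" }, { "name": "XLA_FLAGS", "value": "--xla_gpu_cuda_data_dir=/usr/local/cuda/" }, { "name": "XLA_ALIGN_SIZE", "value": "64" } ] |
| TF scheduling parameters | No | inter_op_parallelism_threads: the number of threads that execute different operations. intra_op_parallelism_threads: the number of threads used inside a single operation. On a 32-core CPU, setting both to 16 generally performs well. Do not set the two thread counts higher than the number of CPU cores. | "model_config": { "inter_op_parallelism_threads": 16, "intra_op_parallelism_threads": 16 } |
| rpc.worker_threads | No | A parameter under metadata in PAI-EAS. We recommend setting it to the number of CPU cores of the instance. For example, with 15 CPU cores, set worker_threads to 15. | "metadata": { "rpc": { "worker_threads": 15 } } |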
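The thread recommendations above can be derived from the core count of the instance. The following is a minimal sketch, assuming that the 32-core guidance (16 threads each) generalizes to "half of the cores for each TensorFlow pool"; that rule is an extrapolation from the single data point in the table, and thread_settings is a hypothetical helper.

```python
def thread_settings(cpu_cores):
    """Build the thread-related config fragments for a given CPU core count."""
    if cpu_cores < 1:
        raise ValueError("cpu_cores must be at least 1")
    # Assumption: half of the cores per TensorFlow pool, as in the 32-core example.
    op_threads = max(1, cpu_cores // 2)
    return {
        "model_config": {
            "inter_op_parallelism_threads": op_threads,
            "intra_op_parallelism_threads": op_threads,
        },
        # worker_threads follows the recommendation to match the CPU core count.
        "metadata": {"rpc": {"worker_threads": cpu_cores}},
    }


# Usage: fragments for a 32-core instance.
settings = thread_settings(32)
print(settings["model_config"])
```

Treat the result as a starting point and tune it with load tests, since the best values depend on the model and traffic.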

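Step 2: Call the service

2.1 Network configuration

The PAI-Rec engine and the model scoring service are both deployed on PAI-EAS, so the product must be configured for direct network connection. In the upper-right corner of the PAI-EAS instance page, click VPC and set the same VPC, vSwitch, and security group, as shown in the following figure. For details, see Network configuration. If you use Hologres, configure it with the same VPC information.

2.2 Get the service information

After the EasyRec model service is deployed, go to the Elastic Algorithm Service (EAS) page and click Invocation Information in the Service Method column of the service that you want to call to view its endpoint and token.

2.3 Sample code for calling the service with an SDK

The input and output of an EasyRec model service are both in Protobuf format, so the service cannot be tested in the PAI-EAS console.

Before you call the service, confirm the fg_mode that was set in model_config during deployment in Step 1. Different modes require entirely different client calling methods.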

| Deployment mode (fg_mode) | SDK request class |
| --- | --- |
| normal or tf (with built-in feature engineering) | EasyRecRequest |
| bypass (without built-in feature engineering) | TFRequest |
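The mapping in the table can be encoded next to the deployment config so that client code fails early when the mode and the request class do not match. This is a minimal sketch; request_class_name is a hypothetical helper and returns the class name as a string rather than importing the SDK.

```python
REQUEST_CLASS_BY_FG_MODE = {
    "normal": "EasyRecRequest",
    "tf": "EasyRecRequest",
    "bypass": "TFRequest",
}


def request_class_name(model_config):
    """Return the SDK request class name that matches model_config['fg_mode']."""
    fg_mode = model_config["fg_mode"]
    try:
        return REQUEST_CLASS_BY_FG_MODE[fg_mode]
    except KeyError:
        raise ValueError(f"unknown fg_mode: {fg_mode!r}") from None


# Usage: look up the request class for a deployed config.
print(request_class_name({"fg_mode": "bypass"}))
```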

With FG: fg_mode=normal or tf

Java

For Maven environment setup, see the Java SDK usage instructions. Sample code that calls the ali_rec_rnk_with_fg service:

import java.util.List;
import java.util.Map;

import com.aliyun.openservices.eas.predict.http.*;
import com.aliyun.openservices.eas.predict.proto.EasyRecPredictProtos;
import com.aliyun.openservices.eas.predict.request.EasyRecRequest;

// separator, userFeatures, contextFeatures, and itemIdStr are values supplied by your application.
PredictClient client = new PredictClient(new HttpConfig());
// When you access the service through the common gateway, use the endpoint that starts with
// your user UID. You can find it in the invocation information of the service in the EAS console.
client.setEndpoint("xxxxxxx.vpc.cn-hangzhou.pai-eas.aliyuncs.com");
client.setModelName("ali_rec_rnk_with_fg");
// Replace with the service token.
client.setToken("******");

EasyRecRequest easyrecRequest = new EasyRecRequest(separator);
// userFeatures: user features. Features are separated by \u0002 (CTRL_B);
// a feature name and its value are separated by a colon (:).
// user_fea0:user_fea0_val\u0002user_fea1:user_fea1_val
// For the format of feature values, see: https://easyrec.readthedocs.io/en/latest/feature/rtp_fg.html
easyrecRequest.appendUserFeatureString(userFeatures);
// You can also add one user feature at a time:
// easyrecRequest.addUserFeature(String userFeaName, T userFeaValue).
// Type T of the feature value: String, float, long, int.

// contextFeatures: context features. Features are separated by \u0002 (CTRL_B);
// the feature name and the values, and the values themselves, are separated by a colon (:).
// ctxt_fea0:ctxt_fea0_ival0:ctxt_fea0_ival1:ctxt_fea0_ival2\u0002ctxt_fea1:ctxt_fea1_ival0:ctxt_fea1_ival1:ctxt_fea1_ival2
easyrecRequest.appendContextFeatureString(contextFeatures);
// You can also add one context feature at a time:
// easyrecRequest.addContextFeature(String ctxtFeaName, List<Object> ctxtFeaValue).
// Type of ctxtFeaValue elements: String, Float, Long, Integer.

// itemIdStr: the list of item IDs to score, separated by commas (,).
easyrecRequest.appendItemStr(itemIdStr, ",");
// You can also add one item ID at a time:
// easyrecRequest.appendItemId(String itemId)

// PBResponse and Results are defined in easyrec_predict.proto (outer class EasyRecPredictProtos).
EasyRecPredictProtos.PBResponse response = client.predict(easyrecRequest);
for (Map.Entry<String, EasyRecPredictProtos.Results> entry : response.getResultsMap().entrySet()) {
    String key = entry.getKey();
    EasyRecPredictProtos.Results value = entry.getValue();
    System.out.println("key: " + key);
    for (int i = 0; i < value.getScoresCount(); i++) {
        System.out.format("value: %.6g\n", value.getScores(i));
    }
}

// Get the features produced by FG so that you can check them against the offline features.
// Set DebugLevel to 1 to return the generated features.
easyrecRequest.setDebugLevel(1);
EasyRecPredictProtos.PBResponse debugResponse = client.predict(easyrecRequest);
Map<String, String> genFeas = debugResponse.getGenerateFeaturesMap();
for (String itemId : genFeas.keySet()) {
    System.out.println(itemId);
    System.out.println(genFeas.get(itemId));
}

Python

For environment setup, see the Python SDK usage instructions. In production, we recommend the Java client. Sample code:

from eas_prediction import PredictClient
from eas_prediction.easyrec_request import EasyRecRequest
from eas_prediction.easyrec_predict_pb2 import PBFeature
from eas_prediction.easyrec_predict_pb2 import PBRequest

if __name__ == '__main__':
    endpoint = 'http://xxxxxxx.vpc.cn-hangzhou.pai-eas.aliyuncs.com'
    service_name = 'ali_rec_rnk_with_fg'
    token = '******'

    client = PredictClient(endpoint, service_name)
    client.set_token(token)
    client.init()

    req = PBRequest()

    # Message-valued protobuf map entries cannot be assigned directly,
    # so each feature is copied into its map slot with CopyFrom.
    uid = PBFeature()
    uid.string_feature = 'u0001'
    req.user_features['user_id'].CopyFrom(uid)

    age = PBFeature()
    age.int_feature = 12
    req.user_features['age'].CopyFrom(age)

    weight = PBFeature()
    weight.float_feature = 129.8
    req.user_features['weight'].CopyFrom(weight)

    req.item_ids.extend(['item_0001', 'item_0002', 'item_0003'])

    easyrec_req = EasyRecRequest()
    easyrec_req.add_feed(req, debug_level=0)
    res = client.predict(easyrec_req)
    print(res)

Where:

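endpoint: the endpoint that starts with your user UID. To get it, go to the Elastic Algorithm Service (EAS) page and click Invocation Information in the Service Method column of the service that you want to call.

service_name: the service name, available on the Elastic Algorithm Service (EAS) page.

token: the service token, available in the Invocation Information dialog box.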
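The string formats described in the Java sample comments (features separated by \u0002, names and values separated by a colon, item IDs separated by commas) can be produced with a few small helpers. The following is a minimal sketch under that description only; the function names are hypothetical, and values that themselves contain a colon or \u0002 are not escaped.

```python
FEATURE_SEP = "\u0002"  # CTRL_B, separates features from each other


def user_feature_string(features):
    """Encode {name: value} as name:value pairs joined by CTRL_B."""
    return FEATURE_SEP.join(f"{name}:{value}" for name, value in features.items())


def context_feature_string(features):
    """Encode {name: [v0, v1, ...]} as name:v0:v1:... joined by CTRL_B."""
    return FEATURE_SEP.join(
        ":".join([name, *map(str, values)]) for name, values in features.items())


def item_id_string(item_ids):
    """Join the item IDs to score with commas."""
    return ",".join(item_ids)


# Usage: build the three strings passed to the EasyRecRequest string-based methods.
user = user_feature_string({"user_id": "u0001", "age": 12})
context = context_feature_string({"ctxt_fea0": [1, 2, 3]})
items = item_id_string(["item_0001", "item_0002", "item_0003"])
print(repr(user), repr(context), items)
```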

Without FG: fg_mode=bypass

Java

For Maven environment setup, see the Java SDK usage instructions. Sample code that calls the ali_rec_rnk_no_fg service:

import java.util.List;

import com.aliyun.openservices.eas.predict.http.PredictClient;

import com.aliyun.openservices.eas.predict.http.HttpConfig;

import com.aliyun.openservices.eas.predict.request.TFDataType;

import com.aliyun.openservices.eas.predict.request.TFRequest;

import com.aliyun.openservices.eas.predict.response.TFResponse;

public class TestEasyRec {

public static TFRequest buildPredictRequest() {

TFRequest request = new TFRequest();

request.addFeed("user_id", TFDataType.DT_STRING,

new long[]{3}, new String []{ "u0001", "u0001", "u0001"});

request.addFeed("age", TFDataType.DT_FLOAT,

new long[]{3}, new float []{ 18.0f, 18.0f, 18.0f});

// Note: if INPUT_TILE=2 is set, features whose values are all identical (as above) need to be passed only once:

// request.addFeed("user_id", TFDataType.DT_STRING,

// new long[]{1}, new String []{ "u0001" });

// request.addFeed("age", TFDataType.DT_FLOAT,

// new long[]{1}, new float []{ 18.0f});

request.addFeed("item_id", TFDataType.DT_STRING,

new long[]{3}, new String []{ "i0001", "i0002", "i0003"});

request.addFetch("probs");

return request;

}

public static void main(String[] args) throws Exception {

PredictClient client = new PredictClient(new HttpConfig());

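// To use direct network connection, call setDirectEndpoint instead, for example:

// client.setDirectEndpoint("pai-eas-vpc.cn-shanghai.aliyuncs.com");

// Direct connection must be enabled in the EAS console, where you provide the source vSwitch used to access the EAS service.

// Direct connection offers better stability and performance.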

client.setEndpoint("xxxxxxx.vpc.cn-hangzhou.pai-eas.aliyuncs.com");

client.setModelName("ali_rec_rnk_no_fg");

client.setToken("");

long startTime = System.currentTimeMillis();

for (int i = 0; i < 100; i++) {

try {

TFResponse response = client.predict(buildPredictRequest());

// probs is the name of the model's output field. You can use curl to view the model's inputs and outputs:

// curl xxxxxxx.vpc.cn-hangzhou.pai-eas.aliyuncs.com -H "Authorization:{token}"

List<Float> result = response.getFloatVals("probs");

System.out.print("Predict Result: [");

for (int j = 0; j < result.size(); j++) {

System.out.print(result.get(j).floatValue());

if (j != result.size() - 1) {

System.out.print(", ");

}

}

System.out.print("]\n");

} catch (Exception e) {

e.printStackTrace();

}

}

long endTime = System.currentTimeMillis();

System.out.println("Spend Time: " + (endTime - startTime) + "ms");

client.shutdown();

}

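}

Python

See the Python SDK usage instructions. Because Python performs relatively poorly, use it only for debugging the service and use the Java SDK in production. Sample code that calls the ali_rec_rnk_no_fg service: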

#!/usr/bin/env python

from eas_prediction import PredictClient
from eas_prediction import TFRequest

if __name__ == '__main__':
    client = PredictClient('http://xxxxxxx.vpc.cn-hangzhou.pai-eas.aliyuncs.com', 'ali_rec_rnk_no_fg')
    client.set_token('')
    client.init()

    # Replace server_default with the real signature_name of your model.
    # For details, see the SDK usage instructions referenced above.
    req = TFRequest('server_default')
    req.add_feed('user_id', [3], TFRequest.DT_STRING, ['u0001'] * 3)
    req.add_feed('age', [3], TFRequest.DT_FLOAT, [18.0] * 3)
    # Note: with the INPUT_TILE=2 optimization enabled, the features above can be passed as a single value:
    # req.add_feed('user_id', [1], TFRequest.DT_STRING, ['u0001'])
    # req.add_feed('age', [1], TFRequest.DT_FLOAT, [18.0])
    req.add_feed('item_id', [3], TFRequest.DT_STRING,
                 ['i0001', 'i0002', 'i0003'])

    for x in range(0, 100):
        resp = client.predict(req)
        print(resp)

2.4 Build the service request yourself

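For clients in languages other than Python and Java, you must generate the prediction request code yourself from the .proto files. If you want to build service requests yourself, use the following protobuf definitions to generate the code:

tf_predict.proto: request definition for TensorFlow models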

syntax = "proto3";

option cc_enable_arenas = true;
option go_package = ".;tf";
option java_package = "com.aliyun.openservices.eas.predict.proto";
option java_outer_classname = "PredictProtos";

enum ArrayDataType {
  // Not a legal value for DataType. Used to indicate a DataType field
  // has not been set.
  DT_INVALID = 0;

  // Data types that all computation devices are expected to be
  // capable to support.
  DT_FLOAT = 1;
  DT_DOUBLE = 2;
  DT_INT32 = 3;
  DT_UINT8 = 4;
  DT_INT16 = 5;
  DT_INT8 = 6;
  DT_STRING = 7;
  DT_COMPLEX64 = 8;  // Single-precision complex
  DT_INT64 = 9;
  DT_BOOL = 10;
  DT_QINT8 = 11;     // Quantized int8
  DT_QUINT8 = 12;    // Quantized uint8
  DT_QINT32 = 13;    // Quantized int32
  DT_BFLOAT16 = 14;  // Float32 truncated to 16 bits. Only for cast ops.
  DT_QINT16 = 15;    // Quantized int16
  DT_QUINT16 = 16;   // Quantized uint16
  DT_UINT16 = 17;
  DT_COMPLEX128 = 18;  // Double-precision complex
  DT_HALF = 19;
  DT_RESOURCE = 20;
  DT_VARIANT = 21;  // Arbitrary C++ data types
}

// Dimensions of an array
message ArrayShape {
  repeated int64 dim = 1 [packed = true];
}

// Protocol buffer representing an array
message ArrayProto {
  // Data Type.
  ArrayDataType dtype = 1;

  // Shape of the array.
  ArrayShape array_shape = 2;

  // DT_FLOAT.
  repeated float float_val = 3 [packed = true];

  // DT_DOUBLE.
  repeated double double_val = 4 [packed = true];

  // DT_INT32, DT_INT16, DT_INT8, DT_UINT8.
  repeated int32 int_val = 5 [packed = true];

  // DT_STRING.
  repeated bytes string_val = 6;

  // DT_INT64.
  repeated int64 int64_val = 7 [packed = true];

  // DT_BOOL.
  repeated bool bool_val = 8 [packed = true];
}

// PredictRequest specifies which TensorFlow model to run, as well as
// how inputs are mapped to tensors and how outputs are filtered before
// returning to user.
message PredictRequest {
  // A named signature to evaluate. If unspecified, the default signature
  // will be used
  string signature_name = 1;

  // Input tensors.
  // Names of input tensor are alias names. The mapping from aliases to real
  // input tensor names is expected to be stored as named generic signature
  // under the key "inputs" in the model export.
  // Each alias listed in a generic signature named "inputs" should be provided
  // exactly once in order to run the prediction.
  map<string, ArrayProto> inputs = 2;

  // Output filter.
  // Names specified are alias names. The mapping from aliases to real output
  // tensor names is expected to be stored as named generic signature under
  // the key "outputs" in the model export.
  // Only tensors specified here will be run/fetched and returned, with the
  // exception that when none is specified, all tensors specified in the
  // named signature will be run/fetched and returned.
  repeated string output_filter = 3;

  // Debug flags
  // 0: just return prediction results, no debug information
  // 100: return prediction results, and save request to model_dir
  // 101: save timeline to model_dir
  int32 debug_level = 100;
}

// Response for PredictRequest on successful run.
message PredictResponse {
  // Output tensors.
  map<string, ArrayProto> outputs = 1;
}

easyrec_predict.proto: request definition for TensorFlow models with FG

syntax = "proto3";

option cc_enable_arenas = true;
option go_package = ".;easyrec";
option java_package = "com.aliyun.openservices.eas.predict.proto";
option java_outer_classname = "EasyRecPredictProtos";

import "tf_predict.proto";

// context features
message ContextFeatures {
  repeated PBFeature features = 1;
}

message PBFeature {
  oneof value {
    int32 int_feature = 1;
    int64 long_feature = 2;
    string string_feature = 3;
    float float_feature = 4;
  }
}

// PBRequest specifies the request for aggregator
message PBRequest {
  // Debug flags
  // 0: just return prediction results, no debug information
  // 3: return features generated by FG module, string format, feature values are separated by \u0002,
  //    could be used for checking feature consistency and generating online deep learning samples
  // 100: return prediction results, and save request to model_dir
  // 101: save timeline to model_dir
  // 102: for recall models such as DSSM and MIND, not only return Faiss retrieved results
  //      but also return user embedding vectors.
  int32 debug_level = 1;

  // user features
  map<string, PBFeature> user_features = 2;

  // item ids, static(daily updated) item features
  // are fetched from the feature cache resides in
  // each processor node by item_ids
  repeated string item_ids = 3;

  // context features for each item, realtime item features
  // could be passed as context features.
  map<string, ContextFeatures> context_features = 4;

  // embedding retrieval neighbor number.
  int32 faiss_neigh_num = 5;
}

// return results
message Results {
  repeated double scores = 1 [packed = true];
}

enum StatusCode {
  OK = 0;
  INPUT_EMPTY = 1;
  EXCEPTION = 2;
}

// PBResponse specifies the response for aggregator
message PBResponse {
  // results
  map<string, Results> results = 1;

  // item features
  map<string, string> item_features = 2;

  // fg generate features
  map<string, string> generate_features = 3;

  // context features
  map<string, ContextFeatures> context_features = 4;

  string error_msg = 5;

  StatusCode status_code = 6;

  // item ids
  repeated string item_ids = 7;

  repeated string outputs = 8;

  // all fg input features
  map<string, string> raw_features = 9;

  // output tensors
  map<string, ArrayProto> tf_outputs = 10;
}
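Once a PBResponse has been decoded, the scores in results usually need to be paired with item IDs and ranked. The following is a minimal sketch that uses plain Python containers in place of the generated protobuf classes. It assumes that the scores of each output are in the same order as the requested item IDs, which the definitions above do not state explicitly. The function rank_items and the sample values are hypothetical.

```python
def rank_items(item_ids, results, output="probs_ctr"):
    """Pair each item ID with its score for one output and sort by score, highest first."""
    scores = results[output]
    if len(scores) != len(item_ids):
        raise ValueError(
            f"expected {len(item_ids)} scores for {output}, got {len(scores)}")
    # Assumption: scores[i] belongs to item_ids[i].
    return sorted(zip(item_ids, scores), key=lambda pair: pair[1], reverse=True)


# Usage with made-up values shaped like PBResponse.results (output name -> scores).
item_ids = ["item_0001", "item_0002", "item_0003"]
results = {"probs_ctr": [0.12, 0.87, 0.45], "probs_cvr": [0.01, 0.05, 0.03]}
for item_id, score in rank_items(item_ids, results):
    print(item_id, score)
```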