本文以游戏行业计算留存为例,介绍在Hologres实现超长周期的留存计算最佳实践。

背景信息

在游戏行业中,从角色创建到玩家每日登录的留存业务含义,主要关注的是玩家参与度和游戏的长期吸引力。这一过程涉及到几个关键的留存指标,用以评估游戏的用户粘性及玩家持续回归游戏的倾向。具体包括如下表所示。

留存指标 | 说明 |

角色创建 | 玩家首次与游戏互动的关键点,标志着玩家从潜在用户转变为活跃用户的转换。良好的新手引导流程、吸引人的角色定制选项和低门槛的入门体验对于提升角色创建后的初次体验至关重要。 |

次日留存率 | 是指新创建角色的玩家在首次登录游戏后的第二天再次登录的比例。该指标反映了游戏在最初接触后给玩家留下的印象,以及游戏是否有足够的吸引力让玩家愿意快速回归。 |

日留存率、7日留存率 | 分别是衡量玩家在首次登录后第三天和第七天的回访情况。这些中期留存指标可以帮助理解游戏在新玩家中建立习惯形成的能力,以及游戏内容是否足够丰富和有趣以保持玩家的兴趣。 |

长期留存率 | 超过7日之后的留存数据,比如30~365日的时间,可评估游戏能否持续吸引玩家并构建稳定的玩家基础。这往往与游戏的深度、社区互动、持续的内容更新等因素紧密相关。 |

游戏公司可以通过分析这些留存指标,了解游戏在哪些方面有效吸引了玩家,哪些环节可能导致玩家流失,进而指导产品优化、营销策略和内容更新计划。例如:

如果次日留存率较低,可能意味着游戏的初始用户体验需要改进。

如果长期留存率较低,则可能需要增加游戏的可玩性、社交功能或定期更新内容来维持玩家兴趣。

总之,游戏角色创建到每日登录的留存过程是评估游戏健康状况和制定增长策略的重要依据。

实时数仓引擎的挑战

在进行留存指标分析时,实时数仓Hologres面临多重挑战:

计算周期较长,需要处理大量历史数据,这导致了较高的IO开销,并且在JOIN操作中关联维表和实时表时,效率降低,长周期的用户唯一值(UV)计算对内存造成较大压力。

计算多个群体的交集时,需要处理长时间范围内的笛卡尔积交集,进一步增加了计算复杂性。

SQL代码的复杂性影响了引擎的计算效率,大量的

CASE WHEN语句用于判断留存日期,使得代码开发效率低下,同时也加大了运维的复杂度。因此,优化这些方面对于实现高效、简洁的超长周期留存计算至关重要。

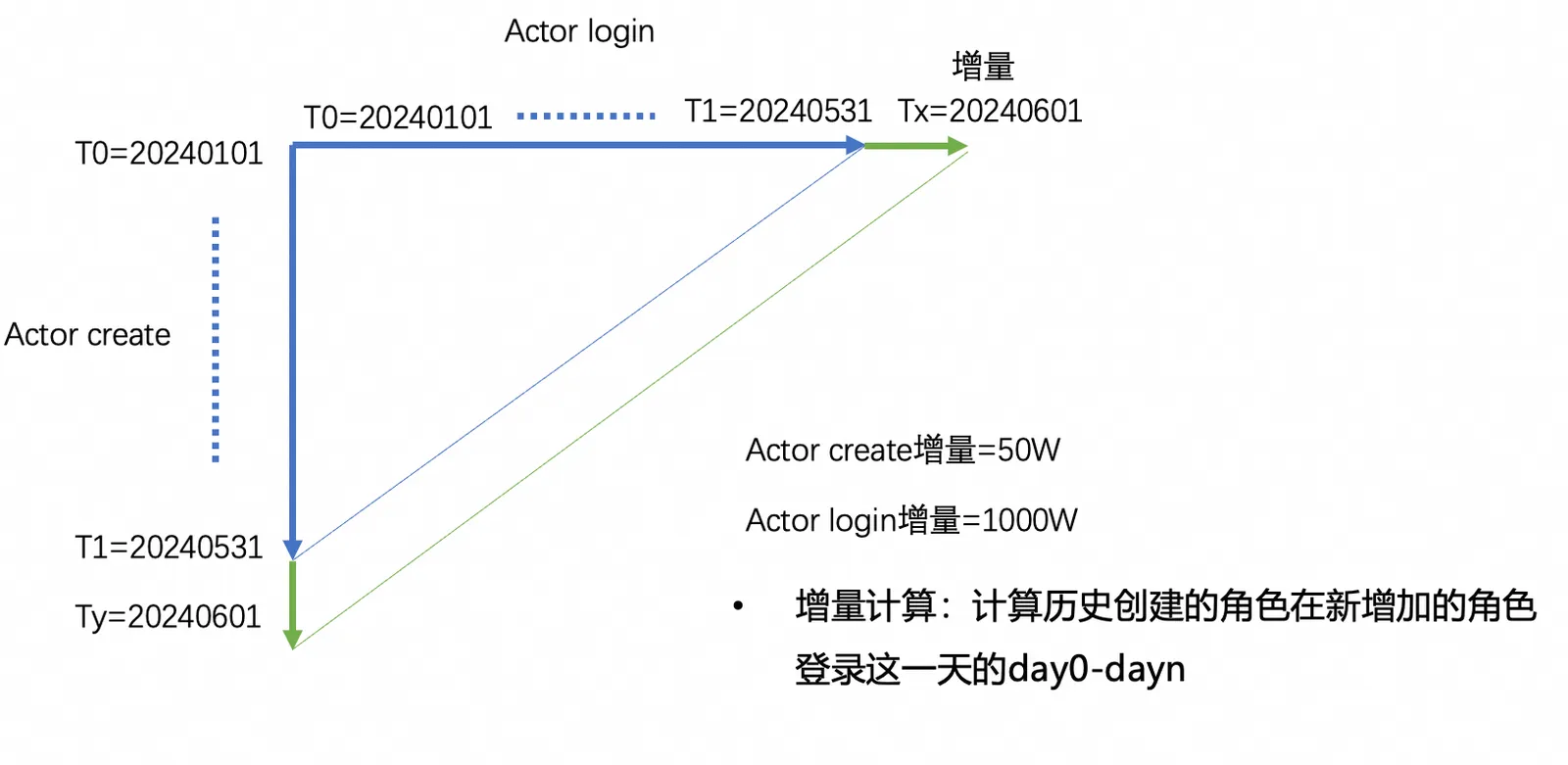

基于CUBE的增量计算方案

基于CUBE的增量计算是先初始化并计算周期内的留存唯一用户(UV),然后每日处理新增的角色登录数据,与关联0~T-1历史创建的角色,以得出从day0~dayn的留存情况。这种方法的优势在于:

通过T+1的方式预先计算固定维度下的留存,利用存储换取查询速度,提高了性能和QPS(每秒查询率)。

新增角色登录数据只需与历史角色创建表进行一对一关联,减少了计算复杂性,避免了N * N的计算粒度,并降低了计算的复杂性。

基于CUBE的增量计算方案,查询的维度和时间范围是基于预先计算好的UV结果值,因此查询操作较为高效。然而,这种实现的扩展性较差,只能适用于固定的CUBE维度。

WITH aa AS (

SELECT

aa.start_date,

aa.gameid,

aa.gameversion,

cc.statdate as end_date,

'day' || cc.statdate::date - aa.start_date AS nday,

aa.actorid AS new_actorid,

cc.actorid AS log_actorid

FROM

(

SELECT

createtime::date AS start_date,

gameid,

gameversion,

actorid

FROM dim.dim_sdkactor

WHERE createtime > '2024-01-01'::date

) aa

LEFT JOIN

(

SELECT

*

FROM app.dws_actor_day_agg_di

WHERE statdate = '2024-06-04'

) cc

ON aa.gameid = cc.gameid

AND aa.actorid = cc.actorid

WHERE aa.start_date <= cc.statdate

)

SELECT

aa.start_date,

aa.gameid,

aa.gameversion,

aa.end_date,

aa.nday,

COUNT(DISTINCT aa.new_actorid) as NewCount,

COUNT(DISTINCT aa.log_actorid) as LogCount

FROM aa

GROUP BY

aa.start_date,

aa.gameid,

aa.gameversion,

aa.end_date,

aa.nday;基于Hologres RoaringBitmap计算方案

Hologres RoaringBitmap方案能够实现高效灵活的查询方式。通过构建每日维度的最小粒度的Bitmap,可以实现对维度的多属性和任意时间范围进行GROUP BY操作,以计算UV的交集。RoaringBitmap的核心优势在于:

分桶策略(Bucketing):将整个数据范围划分成多个小的区间(Buckets),每个容器(Container)负责存储一定范围内的数据。这种划分方式可以实现局部化数据访问,减少了查询时不必要的遍历。

动态容器选择:根据每个container内数据的分布特点(稀疏或密集),RoaringBitmap会自动选择最合适的容器类型来存储数据,确保数据在不同密度下都能保持高效。容器类型说明如下:

Bitmap Container:适合数据密集型场景,利用位操作高效地表示数据的存在状态。

Array Container:适用于数据稀疏且数量不多的情况,直接存储数值列表。

Run-Length Encoding Container:针对连续或近似连续的数据,通过编码连续的值和长度来压缩数据。

高效集合运算:由于每个Container内部数据组织有序且采用高效编码,进行集合间的交、并、差等运算时,可以针对性地优化这些操作,避免全量数据扫描,仅在必要时进行数据交互,从而显著提高了运算速度。

位图压缩技术:通过上述的容器选择和编码方式,RoaringBitmap在保持查询性能的同时,能够显著减少存储空间需求,特别是在处理大量稀疏数据时效果更为显著。

并行处理友好:RoaringBitmap的数据结构天然支持并行处理,因为不同的Container可以独立处理,这对于分布式系统和并行计算环境尤其有利。

Hologres RoaringBitmap计算方案示例

数据准备

基础角色维表和角色登录事实表

基础角色维表(

dim.dim_sdkactor),代码示例如下。游戏角色创建表,预计每款游戏每天创建的角色在50万级别。

BEGIN; CREATE SCHEMA dim; CALL HG_CREATE_TABLE_GROUP ('flink_tg_48', 48); CREATE TABLE dim.dim_sdkactor ( gameid text NOT NULL, channelid text NOT NULL, sdkuserid text NOT NULL default ''::text, gameversion text NOT NULL, worldid text, actorid text NOT NULL, actorname text, actorlevel integer, ip text, createtime timestamp with time zone NOT NULL default CURRENT_TIMESTAMP, createtime_user timestamp with time zone, first_logintime timestamp with time zone, os text, country text, uuid text, radid text, rsid text, activetime timestamp with time zone, adclicktime timestamp with time zone, cur_worldid text, last_logintime timestamp with time zone, first_rechargetime timestamp with time zone, viplevel integer, total_amount integer, is_old integer, first_recharge_level integer, ca_adclciktime timestamp with time zone, ca_radid text, ca_rsid text, devicetype text, match_flag integer, viptime timestamp with time zone, vip1time timestamp with time zone ,PRIMARY KEY (gameid, gameversion, actorid) ) PARTITION BY LIST (gameid); CALL set_table_property('dim.dim_sdkactor', 'orientation', 'row,column'); CALL set_table_property('dim.dim_sdkactor', 'storage_format', 'sst,orc'); CALL set_table_property('dim.dim_sdkactor', 'bitmap_columns', 'gameid,channelid,sdkuserid,gameversion,worldid,actorid,actorname,ip,os,country,uuid,radid,rsid'); CALL set_table_property('dim.dim_sdkactor', 'clustering_key', 'createtime:asc,gameversion:asc'); CALL set_table_property('dim.dim_sdkactor', 'dictionary_encoding_columns', 'gameid:auto,channelid:auto,sdkuserid:auto,gameversion:auto,worldid:auto,actorid:auto,actorname:auto,ip:auto,os:auto,country:auto,uuid:auto,radid:auto,rsid:auto'); CALL set_table_property('dim.dim_sdkactor', 'distribution_key', 'gameversion,actorid'); CALL set_table_property('dim.dim_sdkactor', 'segment_key', 'createtime'); CALL set_table_property('dim.dim_sdkactor', 'table_group', 'flink_tg_48'); CALL set_table_property('dim.dim_sdkactor', 'table_storage_mode', 'any'); CALL set_table_property('dim.dim_sdkactor', 'time_to_live_in_seconds', '3153600000'); COMMENT ON TABLE dim.dim_sdkactor IS NULL; COMMENT ON COLUMN dim.dim_sdkactor.gameid IS '游戏ID'; COMMENT ON COLUMN dim.dim_sdkactor.channelid IS '渠道ID(pid)'; COMMENT ON COLUMN dim.dim_sdkactor.sdkuserid IS 'SDK用户ID'; COMMENT ON COLUMN dim.dim_sdkactor.worldid IS '游戏世界ID'; COMMENT ON COLUMN dim.dim_sdkactor.actorid IS '游戏角色ID'; COMMENT ON COLUMN dim.dim_sdkactor.actorname IS '游戏角色名称'; COMMENT ON COLUMN dim.dim_sdkactor.ip IS 'IP地址'; COMMENT ON COLUMN dim.dim_sdkactor.createtime IS '角色创建时间'; COMMENT ON COLUMN dim.dim_sdkactor.createtime_user IS '用户创建时间'; COMMENT ON COLUMN dim.dim_sdkactor.first_logintime IS '首次登录时间'; COMMENT ON COLUMN dim.dim_sdkactor.os IS '系统'; COMMENT ON COLUMN dim.dim_sdkactor.country IS '国家'; COMMENT ON COLUMN dim.dim_sdkactor.radid IS '广告radid'; COMMENT ON COLUMN dim.dim_sdkactor.rsid IS '广告rsid'; COMMENT ON COLUMN dim.dim_sdkactor.activetime IS '归属设备激活时间'; COMMENT ON COLUMN dim.dim_sdkactor.adclicktime IS '归属设备点击广告时间'; COMMENT ON COLUMN dim.dim_sdkactor.viplevel IS 'vip等级'; COMMENT ON COLUMN dim.dim_sdkactor.total_amount IS '付费总金额'; COMMENT ON COLUMN dim.dim_sdkactor.is_old IS '是否是滚服角色'; COMMENT ON COLUMN dim.dim_sdkactor.first_recharge_level IS '首次付费等级'; COMMENT ON COLUMN dim.dim_sdkactor.viptime IS 'vip等级更新时间'; COMMENT ON COLUMN dim.dim_sdkactor.vip1time IS '到达vip1的时间'; COMMIT;角色登录事实表(

app.dws_actor_day_agg_di),代码示例如下。游戏角色登录事件轻度汇总表,预计每15分钟执行一次Upsert操作,每款游戏每天登录的角色在1000万级别。

BEGIN; CREATE SCHEMA app; CALL HG_CREATE_TABLE_GROUP ('dw_tg_48', 48); CREATE TABLE app.dws_actor_day_agg_di ( statdate timestamp with time zone NOT NULL, gameid text NOT NULL, gameversion text NOT NULL, actorid text NOT NULL, worldid text NOT NULL, channelid text NOT NULL, sdkuserid text NOT NULL, paybegincnt integer, payendcnt integer, payamount bigint, test_payamount bigint, logincnt integer, gameonlinetime integer, createtime timestamp with time zone, ad_flag text, dt_year text, dt_month text, dt_week text, one_week_range text, country text, country_code text, country_area text, timezone text NOT NULL, os text, channelname text, createtime_user text, first_worldid text, is_old text, first_channelid_actor text, language text, realamount bigint ,PRIMARY KEY (statdate, gameid, gameversion, actorid, worldid, channelid, sdkuserid, timezone) ); CALL set_table_property('app.dws_actor_day_agg_di', 'orientation', 'column'); CALL set_table_property('app.dws_actor_day_agg_di', 'storage_format', 'orc'); CALL set_table_property('app.dws_actor_day_agg_di', 'bitmap_columns', 'statdate,gameid,gameversion,actorid,worldid,channelid,sdkuserid'); CALL set_table_property('app.dws_actor_day_agg_di', 'clustering_key', 'statdate:asc,gameid:asc,channelid:asc'); CALL set_table_property('app.dws_actor_day_agg_di', 'dictionary_encoding_columns', 'ad_flag:auto,dt_year:auto,dt_month:auto,dt_week:auto,one_week_range:auto,country:auto,country_code:auto,country_area:auto,timezone:auto,os:auto,channelname:auto,createtime_user:auto,first_worldid:auto,statdate:auto,gameid:auto,gameversion:auto,actorid:auto,worldid:auto,channelid:auto,sdkuserid:auto'); CALL set_table_property('app.dws_actor_day_agg_di', 'distribution_key', 'statdate,gameid,gameversion'); CALL set_table_property('app.dws_actor_day_agg_di', 'segment_key', 'statdate'); CALL set_table_property('app.dws_actor_day_agg_di', 'table_group', 'dw_tg_48'); CALL set_table_property('app.dws_actor_day_agg_di', 'table_storage_mode', 'any'); CALL set_table_property('app.dws_actor_day_agg_di', 'time_to_live_in_seconds', '3153600000'); COMMENT ON TABLE app.dws_actor_day_agg_di IS NULL; COMMENT ON COLUMN app.dws_actor_day_agg_di.statdate IS '统计日期'; COMMENT ON COLUMN app.dws_actor_day_agg_di.gameid IS '游戏ID'; COMMENT ON COLUMN app.dws_actor_day_agg_di.gameversion IS '游戏版本'; COMMENT ON COLUMN app.dws_actor_day_agg_di.actorid IS '角色ID'; COMMENT ON COLUMN app.dws_actor_day_agg_di.worldid IS '区服ID'; COMMENT ON COLUMN app.dws_actor_day_agg_di.channelid IS '渠道ID'; COMMENT ON COLUMN app.dws_actor_day_agg_di.sdkuserid IS '账号id'; COMMENT ON COLUMN app.dws_actor_day_agg_di.paybegincnt IS '日充值意向次数'; COMMENT ON COLUMN app.dws_actor_day_agg_di.payendcnt IS '日充值次数'; COMMENT ON COLUMN app.dws_actor_day_agg_di.payamount IS '日充值金额'; COMMENT ON COLUMN app.dws_actor_day_agg_di.test_payamount IS '测试的日充值金额'; COMMENT ON COLUMN app.dws_actor_day_agg_di.logincnt IS '登录游戏次数'; COMMENT ON COLUMN app.dws_actor_day_agg_di.gameonlinetime IS '游戏在线时长'; COMMENT ON COLUMN app.dws_actor_day_agg_di.createtime IS '角色创建时间'; COMMENT ON COLUMN app.dws_actor_day_agg_di.ad_flag IS '来源'; COMMENT ON COLUMN app.dws_actor_day_agg_di.dt_year IS '年'; COMMENT ON COLUMN app.dws_actor_day_agg_di.dt_month IS '月'; COMMENT ON COLUMN app.dws_actor_day_agg_di.dt_week IS '周'; COMMENT ON COLUMN app.dws_actor_day_agg_di.one_week_range IS '一周的日期范围'; COMMENT ON COLUMN app.dws_actor_day_agg_di.country IS '国家'; COMMENT ON COLUMN app.dws_actor_day_agg_di.country_code IS '国家代码'; COMMENT ON COLUMN app.dws_actor_day_agg_di.country_area IS '国家地区'; COMMENT ON COLUMN app.dws_actor_day_agg_di.timezone IS '时区(UTC+8:0;UTC+0:-8)'; COMMENT ON COLUMN app.dws_actor_day_agg_di.os IS '系统'; COMMENT ON COLUMN app.dws_actor_day_agg_di.channelname IS '渠道名称'; COMMENT ON COLUMN app.dws_actor_day_agg_di.createtime_user IS '用户创建时间'; COMMENT ON COLUMN app.dws_actor_day_agg_di.first_worldid IS '角色创建时的区服id'; COMMENT ON COLUMN app.dws_actor_day_agg_di.is_old IS '是否滚服角色(1:是,0:否)'; COMMENT ON COLUMN app.dws_actor_day_agg_di.first_channelid_actor IS '角色最开始进入游戏的渠道id'; COMMENT ON COLUMN app.dws_actor_day_agg_di.language IS '角色首次选择的语言'; COMMENT ON COLUMN app.dws_actor_day_agg_di.realamount IS '实收金额'; COMMIT;

Mapping表

Hologres RoaringBitmap要求用户ID为32位INT类型且应尽量密集以达到最优性能,鉴于实际业务场景中用户标识(如userId、actorId)多为字符串形式,为解决这个问题,采取构建映射表的策略,通过Hologres的Serial类型,即自增的32位INT类型,实现了从字符串到整型ID的高效、自动化映射管理,确保了数据的一致性和映射关系的稳定性。

游戏角色创建表的映射表(

dws.actor_mapping),代码示例如下。BEGIN; CREATE SCHEMA dws; CALL HG_CREATE_TABLE_GROUP ('dw_tg_24', 24); CREATE TABLE dws.actor_mapping ( gameid integer NOT NULL, gameversion character varying(64) NOT NULL, actorid text NOT NULL, encode_actorid bigserial ,PRIMARY KEY (gameid, gameversion, actorid) ); CALL set_table_property('dws.actor_mapping', 'orientation', 'row,column'); CALL set_table_property('dws.actor_mapping', 'storage_format', 'sst,orc'); CALL set_table_property('dws.actor_mapping', 'bitmap_columns', 'gameversion,actorid'); CALL set_table_property('dws.actor_mapping', 'clustering_key', 'gameid:asc,gameversion:asc,actorid:asc'); CALL set_table_property('dws.actor_mapping', 'dictionary_encoding_columns', 'gameversion:auto,actorid:auto'); CALL set_table_property('dws.actor_mapping', 'distribution_key', 'gameid,gameversion,actorid'); CALL set_table_property('dws.actor_mapping', 'table_group', 'dw_tg_24'); CALL set_table_property('dws.actor_mapping', 'table_storage_mode', 'any'); CALL set_table_property('dws.actor_mapping', 'time_to_live_in_seconds', '3153600000'); COMMENT ON TABLE dws.actor_mapping IS '角色映射表'; COMMENT ON COLUMN dws.actor_mapping.gameid IS '游戏ID'; COMMENT ON COLUMN dws.actor_mapping.gameversion IS '游戏版本'; COMMENT ON COLUMN dws.actor_mapping.actorid IS '角色ID'; COMMENT ON COLUMN dws.actor_mapping.encode_actorid IS '角色编码'; END;dws.actor_mapping映射表的数据写入,代码示例如下。INSERT INTO dws.actor_mapping (gameid, gameversion, actorid) SELECT gameid::INT, gameversion, actorid FROM dim.dim_sdkactor ORDER BY createtime, gameid, actorid;

Roaringbitmap的结果表

RoaringBitmap使用前需要执行以下语句,创建EXTENSION,且EXTENSION是DB级别的函数,一个DB只需执行一次即可,新建DB需要重新执行。

--创建EXTENSION

CREATE EXTENSION roaringbitmap;游戏角色创建的Roaringbitmap结果表

游戏角色创建的Roaringbitmap结果表(

dws.actor_all_new_tag),代码示例如下。BEGIN; CREATE TABLE dws.actor_all_new_tag ( statdate date NOT NULL, gameid integer NOT NULL, tag_name text NOT NULL, tag_val text NOT NULL, rb_new roaringbitmap ,PRIMARY KEY (statdate, gameid, tag_name, tag_val) ); CALL set_table_property('dws.actor_all_new_tag', 'orientation', 'column'); CALL set_table_property('dws.actor_all_new_tag', 'storage_format', 'orc'); CALL set_table_property('dws.actor_all_new_tag', 'bitmap_columns', 'tag_name,tag_val'); CALL set_table_property('dws.actor_all_new_tag', 'dictionary_encoding_columns', 'tag_name:auto,tag_val:auto'); CALL set_table_property('dws.actor_all_new_tag', 'distribution_key', 'statdate,gameid,tag_name,tag_val'); CALL set_table_property('dws.actor_all_new_tag', 'segment_key', 'statdate'); CALL set_table_property('dws.actor_all_new_tag', 'table_group', 'dw_tg_24'); CALL set_table_property('dws.actor_all_new_tag', 'table_storage_mode', 'any'); CALL set_table_property('dws.actor_all_new_tag', 'time_to_live_in_seconds', '3153600000'); COMMENT ON TABLE dws.actor_all_new_tag IS '角色新创建列表'; COMMENT ON COLUMN dws.actor_all_new_tag.statdate IS '统计日期'; COMMENT ON COLUMN dws.actor_all_new_tag.gameid IS '游戏ID'; COMMENT ON COLUMN dws.actor_all_new_tag.tag_name IS '标签名(游戏版本、渠道ID、游戏世界ID、操作系统、国家编码、来源)'; COMMENT ON COLUMN dws.actor_all_new_tag.tag_val IS '标签值'; COMMENT ON COLUMN dws.actor_all_new_tag.rb_new IS '角色编码列表'; END;dws.actor_all_new_tag结果表的数据写入,代码示例如下。INSERT INTO dws.actor_all_new_tag (statdate, gameid, tag_name, tag_val, rb_new) SELECT createtime::DATE statdate, a.gameid::int, 'gameversion', a.gameversion, RB_BUILD_AGG (m.encode_actorid ::int) FROM dim.dim_sdkactor a INNER JOIN dws.actor_mapping m ON a.gameid::int = m.gameid AND a.gameversion = m.gameversion AND a.actorid = m.actorid GROUP BY a.createtime::DATE, a.gameid, a.gameversion

游戏角色登录的Roaringbitmap结果表

游戏角色登录的Roaringbitmap结果表(

dws.actor_all_login),代码示例如下。BEGIN; CREATE TABLE dws.actor_all_login ( statdate date NOT NULL, gameid integer NOT NULL, rb_login roaringbitmap ,PRIMARY KEY (statdate, gameid) ); CALL set_table_property('dws.actor_all_login', 'orientation', 'column'); CALL set_table_property('dws.actor_all_login', 'storage_format', 'orc'); CALL set_table_property('dws.actor_all_login', 'distribution_key', 'statdate,gameid'); CALL set_table_property('dws.actor_all_login', 'segment_key', 'statdate'); CALL set_table_property('dws.actor_all_login', 'table_group', 'dw_tg_24'); CALL set_table_property('dws.actor_all_login', 'table_storage_mode', 'any'); CALL set_table_property('dws.actor_all_login', 'time_to_live_in_seconds', '3153600000'); COMMENT ON TABLE dws.actor_all_login IS '角色登录行为指标表'; COMMENT ON COLUMN dws.actor_all_login.statdate IS '统计日期'; COMMENT ON COLUMN dws.actor_all_login.gameid IS '游戏ID'; COMMENT ON COLUMN dws.actor_all_login.rb_login IS '角色登录指标列表'; END;dws.actor_all_login结果表的数据写入,代码示例如下。INSERT INTO dws.actor_all_login (statdate, gameid, rb_login) SELECT statdate, A.gameid::int, RB_BUILD_AGG (m.encode_actorid ::int) FROM APP.dws_actor_day_agg_di A INNER JOIN dws.actor_mapping m ON A.gameid::int = M.gameid AND A.gameversion = M.gameversion AND A.actorid = M.actorid GROUP BY statdate, A.gameid::int

长周期留存查询SQL性能验证

获取从游戏角色创建到角色登录150天的留存分析。

Explain Analyze

WITH

actor_new AS

( SELECT statdate,

gameid,

RB_AND_AGG (rb_new) rb_new

FROM dws.actor_all_new_tag n

WHERE statdate >= '20240101'::date

AND gameid = '2131'

AND tag_name = 'gameversion'

AND tag_val = '2131-CN-ZS'

GROUP BY statdate,

gameid

)

,

actor_list

AS

( SELECT n.statdate start_date,

s.ds::date end_date,

'day' || s.ds::date - n.statdate AS nday,

gameid,

rb_new

FROM actor_new n,

generate_series ('20240101'::date, '20240604'::date, '1 day'::interval) AS s (ds)

WHERE n.statdate <= s.ds::date

)

SELECT count(1)

FROM ( SELECT al.start_date,

al.end_date,

al.nday,

RB_CARDINALITY (rb_new) NewCount,

RB_CARDINALITY (RB_AND (rb_new, rb_login)) LogCount

FROM actor_list al

INNER JOIN dws.actor_all_login aal

ON al.end_date = aal.statdate

AND al.gameid = aal.gameid

) t

;返回结果如下,执行耗时预估为6 s。若您有更低延迟的期望,您还可以增加分桶计算和分桶优化。

Gather (cost=0.00..7383.37 rows=777 width=32)

[24:1 id=100006 dop=1 time=6547/6547/6547ms rows=8001(8001/8001/8001) mem=75/75/75KB open=0/0/0ms get_next=6547/6547/6547ms]

-> Project (cost=0.00..7383.31 rows=777 width=32)

[id=27 dop=24 time=6549/3544/1202ms rows=8001(691/333/27) mem=2060/1314/131KB open=826/636/514ms get_next=5723/2908/688ms]

-> Hash Join (cost=0.00..7382.31 rows=777 width=32)

Hash Cond: ((actor_all_login.statdate = (date(actor_all_login.*))) AND (actor_all_login.gameid = actor_all_new_tag.gameid))

[id=25 dop=24 time=5768/3144/1160ms rows=8001(691/333/27) mem=2048/813/64MB open=826/636/513ms get_next=4942/2508/647ms]

-> Local Gather (cost=0.00..5.16 rows=777 width=16)

[id=22 dop=24 time=827/518/76ms rows=759(48/31/19) mem=600/600/600B open=0/0/0ms get_next=827/518/76ms local_dop=1/1/1]

-> Decode (cost=0.00..5.16 rows=777 width=16)

[id=20 split_count=24 time=1520/1154/765ms rows=759(48/31/19) mem=0/0/0B open=1/0/0ms get_next=1520/1154/765ms]

-> Seq Scan on actor_all_login (cost=0.00..5.06 rows=777 width=16)

Filter: (gameid = 2131)

RowGroupFilter: (gameid = 2131)

[id=19 split_count=24 time=1520/1154/765ms rows=759(48/31/19) mem=64/54/32MB open=1/0/0ms get_next=1520/1154/765ms physical_reads=51(3/2/1) scan_rows=37189(1634/1549/1495)]

-> Hash (cost=7377.15..7377.15 rows=606 width=28)

[id=24 dop=24 time=826/636/513ms rows=8001(691/333/27) mem=64/24/2MB open=672/571/512ms get_next=154/64/1ms rehash=1/1/1 hash_mem=384/384/384KB]

-> Redistribution (cost=0.00..7377.15 rows=606 width=28)

Hash Key: (date(actor_all_login.*)), actor_all_new_tag.gameid

[24:24 id=100005 dop=24 time=672/571/512ms rows=8001(691/333/27) mem=64/24/2MB open=2/0/0ms get_next=672/571/510ms]

-> Filter (cost=0.00..7377.14 rows=606 width=28)

Filter: (actor_all_new_tag.gameid = 2131)

[id=18 dop=24 time=380/376/371ms rows=8001(572/333/79) mem=2/2/2KB open=1/0/0ms get_next=379/375/371ms]

-> Project (cost=0.00..7377.14 rows=606 width=28)

[id=17 dop=24 time=380/375/371ms rows=8001(572/333/79) mem=10/7/4KB open=1/0/0ms get_next=379/375/371ms]

-> Project (cost=0.00..7376.14 rows=76 width=20)

[id=16 dop=24 time=379/375/371ms rows=8001(572/333/79) mem=4/3/2KB open=1/0/0ms get_next=379/374/370ms]

-> Nested Loop (cost=0.00..7375.14 rows=76 width=24)

Join Filter: (actor_all_ne w_tag.statdate <= date(actor_all_login.*))

[id=14 dop=24 time=379/375/370ms rows=8001(572/333/79) mem=453/276/115KB open=1/0/0ms get_next=379/374/370ms]

-> Filter (cost=0.00..1.10 rows=2 width=16)

Filter: (actor_all_new_tag.gameid = 2131)

[id=11 dop=24 time=371/370/368ms rows=126(9/5/2) mem=2/2/2KB open=1/0/0ms get_next=370/369/368ms]

-> GroupAggregate (cost=0.00..1.10 rows=2 width=16)

Group Key: actor_all_new_tag.statdate, actor_all_new_tag.gameid

[id=9 dop=24 time=371/370/368ms rows=126(9/5/2) mem=2107/1503/851KB open=1/0/0ms get_next=370/369/368ms]

-> Redistribution (cost=0.00..1.10 rows=2 width=16)

Hash Key: actor_all_new_tag.statdate, actor_all_new_tag.gameid

[24:24 id=100004 dop=24 time=370/368/367ms rows=126(9/5/2) mem=512/331/120KB open=1/0/0ms get_next=369/368/367ms]

-> Local Gather (cost=0.00..1.10 rows=2 width=16)

[id=5 dop=24 time=368/140/0ms rows=126(10/5/2) mem=600/600/600B open=0/0/0ms get_next=368/140/0ms local_dop=3/3/3]

-> Decode (cost=0.00..1.10 rows=2 width=16)

[id=3 split_count=72 time=373/47/0ms rows=126(10/1/0) mem=0/0/0B open=5/0/0ms get_next=372/47/0ms]

-> Index Scan using Clustering_index on actor_all_new_tag (cost=0.00..1.00 rows=2 width=16)

Filter: (gameid = 2131)

Segment Filter: (statdate >= '2024-01-01'::date)

Bitmap Filter: ((tag_name = 'gameversion'::text) AND (tag_val = '2131-CN-ZS'::text))

RowGroupFilter: ((gameid = 2131) AND (statdate >= '2024-01-01'::date))

[id=2 split_count=34 time=373/101/0ms rows=69(10/2/0) mem=621/199/5KB open=5/1/0ms get_next=372/100/0ms physical_reads=30(3/2/1) scan_rows=106496(8192/8192/8192) bitmap_used=37]

-> Materialize (cost=0.00..1.09 rows=3024 width=8)

[id=13 dop=24 time=1/0/0ms rows=15876(1134/661/252) mem=0/0/0B open=0/0/0ms get_next=1/0/0ms]

-> Broadcast (cost=0.00..1.09 rows=3024 width=8)

[1:24 id=100003 dop=24 time=3/0/0ms rows=3024(126/126/126) mem=600/600/600B open=0/0/0ms get_next=3/0/0ms]

-> Forward (cost=0.00..1.00 rows=126 width=8)

[0:1 id=100002 dop=1 time=3/3/3ms rows=126(126/126/126) mem=264/264/264B open=3/3/3ms get_next=0/0/0ms scan_rows=126(126/126/126)]

-> ExecuteExternalSQL on PQE (cost=0.00..0.00 rows=0 width=8)

External SQL: SELECT * FROM generate_series('2024-01-01 00:00:00+08'::timestamptz, '2024-06-04 00:00:00+08'::timestamptz, '1 day'::interval) AS TVF(c_b2615fb8_97)

ADVICE:

[node id : 2] Table actor_all_new_tag misses bitmap index: gameid.

[node id : 100004] distribution key miss match! table actor_all_new_tag defined distribution keys : statdate, gameid, tag_name, tag_val; request distribution columns : statdate, gameid;

[node id : 100005] shuffle data skew in different shards! max rows is 691, min rows is 27

[node id : 19] Table actor_all_login misses bitmap index: gameid.

Query id:[260095200828505288]

======================cost======================

Total cost:[6675] ms

Optimizer cost:[81] ms

Build execution plan cost:[4] ms

Init execution plan cost:[2] ms

Start query cost:[25] ms

- Wait schema cost:[0] ms

- Lock query cost:[0] ms

- Create dataset reader cost:[0] ms

- Create split reader cost:[6] ms

Get result cost:[6563] ms

- Get the first block cost:[2884] ms

====================resource====================

Memory: total 21322 MB. Worker stats: max 2137 MB, avg 592 MB, min 18 MB, max memory worker id: 810630269575977312.

CPU time: total 71368 ms. Worker stats: max 5967 ms, avg 1982 ms, min 41 ms, max CPU time worker id: 810630269575977312.

Ec wait time: total 683 ms. Worker stats: max 129 ms, avg 18 ms, min 0 ms, max ec wait time worker id: 729778468166517122.

Physical read bytes: total 3113 MB. Worker stats: max 169 MB, avg 86 MB, min 0 MB, max physical read bytes worker id: 729777124760795845.

Read bytes: total 2809 MB. Worker stats: max 147 MB, avg 78 MB, min 0 MB, max read bytes worker id: 729777124760795845.

DAG instance count: total 74. Worker stats: max 4, avg 2, min 1, max DAG instance count worker id: 729697499219933893.

Fragment instance count: total 170. Worker stats: max 8, avg 4, min 1, max fragment instance count worker id: 729697499219933893.增加分桶计算

在长周期范围内,需要计算多个日期的Bitmap交集组合,由于长周期Bitmap汇总计算性能较差。因此,采用分桶的方法,将Bitmap拆分成多段进行打散存储,以充分利用并发计算的能力,实现对人群的高度压缩,减少数据的IO操作,从而提升计算效率。

创建基于分桶的RoaringBitmap结果表。

基于分桶的游戏角色创建RoaringBitmap结果表

基于分桶的角色创建RoaringBitmap结果表(

dws.actor_all_new_tag_bucket),代码示例如下。BEGIN; CREATE TABLE dws.actor_all_new_tag_bucket ( statdate date NOT NULL, gameid integer NOT NULL, tag_name text NOT NULL, tag_val text NOT NULL, bucket integer NOT NULL, rb_new roaringbitmap ,PRIMARY KEY (statdate, gameid, tag_name, tag_val, bucket) ); CALL set_table_property('dws.actor_all_new_tag_bucket', 'orientation', 'column'); CALL set_table_property('dws.actor_all_new_tag_bucket', 'storage_format', 'orc'); CALL set_table_property('dws.actor_all_new_tag_bucket', 'bitmap_columns', 'tag_name,tag_val'); CALL set_table_property('dws.actor_all_new_tag_bucket', 'dictionary_encoding_columns', 'tag_name:auto,tag_val:auto'); CALL set_table_property('dws.actor_all_new_tag_bucket', 'distribution_key', 'statdate,gameid,bucket'); CALL set_table_property('dws.actor_all_new_tag_bucket', 'segment_key', 'statdate'); CALL set_table_property('dws.actor_all_new_tag_bucket', 'table_group', 'dw_tg_24'); CALL set_table_property('dws.actor_all_new_tag_bucket', 'table_storage_mode', 'any'); CALL set_table_property('dws.actor_all_new_tag_bucket', 'time_to_live_in_seconds', '3153600000'); COMMENT ON TABLE dws.actor_all_new_tag_bucket IS NULL; END;dws.actor_all_new_tag_bucket结果表的数据写入,代码示例如下。INSERT INTO dws.actor_all_new_tag_bucket (statdate, gameid, bucket, tag_name, tag_val, rb_new) SELECT createtime::DATE statdate, a.gameid::int, m.encode_actorid >> 24 as bucket,--右移24位(即高8位)作为桶号,低24位为作为Bitmap 'gameversion', a.gameversion, RB_BUILD_AGG (m.encode_actorid ::int) FROM dim.dim_sdkactor a INNER JOIN dws.actor_mapping m ON a.gameid::int = m.gameid AND a.gameversion = m.gameversion AND a.actorid = m.actorid GROUP BY a.createtime::DATE, a.gameid, m.encode_actorid >> 24, a.gameversion

基于分桶的游戏角色登录RoaringBitmap结果表

基于分桶的游戏角色登录RoaringBitmap结果表(

dws.actor_all_login_bucket),代码示例如下。BEGIN; CREATE TABLE dws.actor_all_login_bucket ( statdate date NOT NULL, gameid integer NOT NULL, bucket integer NOT NULL, rb_login roaringbitmap ,PRIMARY KEY (statdate, gameid, bucket) ); CALL set_table_property('dws.actor_all_login_bucket', 'orientation', 'column'); CALL set_table_property('dws.actor_all_login_bucket', 'storage_format', 'orc'); CALL set_table_property('dws.actor_all_login_bucket', 'distribution_key', 'statdate,gameid,bucket'); CALL set_table_property('dws.actor_all_login_bucket', 'segment_key', 'statdate'); CALL set_table_property('dws.actor_all_login_bucket', 'table_group', 'dw_tg_24'); CALL set_table_property('dws.actor_all_login_bucket', 'table_storage_mode', 'any'); CALL set_table_property('dws.actor_all_login_bucket', 'time_to_live_in_seconds', '3153600000'); COMMENT ON TABLE dws.actor_all_login_bucket IS '角色登录行为指标表'; COMMENT ON COLUMN dws.actor_all_login_bucket.statdate IS '统计日期'; COMMENT ON COLUMN dws.actor_all_login_bucket.gameid IS '游戏ID'; COMMENT ON COLUMN dws.actor_all_login_bucket.rb_login IS '角色登录指标列表'; END;dws.actor_all_login_bucket结果表的数据写入,代码示例如下。INSERT INTO dws.actor_all_login_bucket (statdate, gameid,bucket, rb_login) SELECT statdate, A.gameid::int, m.encode_actorid >> 24 as bucke,--右移24位(即高8位)作为桶号,低24位为作为Bitmap RB_BUILD_AGG (m.encode_actorid ::int) FROM APP.dws_actor_day_agg_di A INNER JOIN dws.actor_mapping m ON A.gameid::int = M.gameid AND A.gameversion = M.gameversion AND A.actorid = M.actorid GROUP BY statdate, A.gameid::int, m.encode_actorid >> 24

验证查询性能。

获取从游戏角色创建到角色登录150天的留存分析。

explain analyze WITH actor_new AS ( SELECT statdate, gameid, bucket, RB_AND_AGG (rb_new) rb_new FROM dws.actor_all_new_tag_bucket n WHERE statdate >= '20240101'::date AND gameid = '2131' AND tag_name = 'gameversion' AND tag_val = '2131-CN-ZS' GROUP BY statdate, gameid, bucket ) , actor_list AS ( SELECT n.statdate start_date, s.ds::date end_date, 'day' || s.ds::date - n.statdate AS nday, gameid, bucket, rb_new FROM actor_new n, generate_series ('20240101'::date, '20240604'::date, '1 day'::interval) AS s (ds) WHERE n.statdate <= s.ds::date ) SELECT al.start_date, al.end_date, al.nday, RB_CARDINALITY(rb_new), RB_CARDINALITY(rb_login) FROM actor_list al INNER JOIN dws.actor_all_login_bucket aal ON al.end_date = aal.statdate AND al.gameid = aal.gameid AND al.bucket = aal.bucket;返回结果如下,查询所需时间为3 s+。如果您期望更低的延迟,可以参考下文进行分桶优化。

QUERY PLAN Gather (cost=0.00..18005.55 rows=29454 width=32) [24:1 id=100005 dop=1 time=3527/3527/3527ms rows=24804(24804/24804/24804) mem=90/90/90KB open=0/0/0ms get_next=3527/3527/3527ms] -> Project (cost=0.00..18003.29 rows=29454 width=32) [id=23 dop=24 time=3540/2588/1711ms rows=24804(1569/1033/556) mem=27/19/11KB open=1458/1233/931ms get_next=2082/1355/549ms] -> Hash Join (cost=0.00..18002.21 rows=29454 width=32) Hash Cond: (((date(actor_all_new_tag_bucke.*)) = actor_all_login_bucket.statdate) AND (actor_all_new_tag_bucket.gameid = actor_all_login_bucket.gameid) AND (actor_all_new_tag_bucket.bucket = actor_all_login_bucket.bucket)) [id=22 dop=24 time=3367/2422/1611ms rows=24804(1569/1033/556) mem=536/442/264MB open=1458/1233/931ms get_next=1909/1188/438ms] -> Redistribution (cost=0.00..17996.60 rows=29454 width=32) Hash Key: (date(actor_all_new_tag_bucket.*)), actor_all_new_tag_bucke.gameid, actor_all_new_tag_bucket.bucket [24:24 id=100004 dop=24 time=117/57/26ms rows=24804(1569/1033/556) mem=32/22/16MB open=1/0/0ms get_next=117/56/26ms] -> Filter (cost=0.00..17996.47 rows=29454 width=32) Filter: (actor_all_new_tag_bucket.gameid = 2131) [id=16 dop=24 time=241/182/142ms rows=24804(1772/1033/652) mem=3/3/3KB open=1/0/0ms get_next=241/182/141ms] -> Project (cost=0.00..17996.43 rows=29454 width=32) [id=15 dop=24 time=241/182/141ms rows=24804(1772/1033/652) mem=22/15/11KB open=1/0/0ms get_next=241/182/141ms] -> Project (cost=0.00..17995.36 rows=3711 width=24) [id=14 dop=24 time=240/181/141ms rows=24804(1772/1033/652) mem=9/6/5KB open=1/0/0ms get_next=240/181/141ms] -> Nested Loop (cost=0.00..17994.35 rows=3711 width=28) Join Filter: (actor_all_new_tag_bucket.statdate <= date(actor_all_new_tag_bucke.*)) [id=12 dop=24 time=239/181/141ms rows=24804(1772/1033/652) mem=491/274/122KB open=1/0/0ms get_next=239/181/141ms] -> Filter (cost=0.00..1.10 rows=74 width=20) Filter: (actor_all_new_tag_bucket.gameid = 2131) [id=9 dop=24 time=223/173/134ms rows=350(21/14/10) mem=2/2/2KB open=1/0/0ms get_next=223/173/134ms] -> GroupAggregate (cost=0.00..1.10 rows=74 width=20) Group Key: actor_all_new_tag_bucke.statdate, actor_all_new_tag_bucket.gameid, actor_all_new_tag_bucket.bucket [id=7 dop=24 time=223/173/134ms rows=350(21/14/10) mem=2171/1446/647KB open=1/0/0ms get_next=223/173/134ms] -> Local Gather (cost=0.00..1.10 rows=74 width=20) [id=6 dop=24 time=221/171/133ms rows=350(21/14/10) mem=600/600/600B open=1/0/0ms get_next=221/171/133ms local_dop=1/1/1] -> Decode (cost=0.00..1.10 rows=74 width=20) [id=5 split_count=24 time=230/180/141ms rows=350(21/14/10) mem=0/0/0B open=9/1/1ms get_next=228/179/139ms] -> Index Scan using Clustering_index on actor_all_new_tag_bucket (cost=0.00..1.00 rows=74 width=20) Filter: (gameid = 2131) Segment Filter: (statdate >= '2024-01-25'::date) Bitmap Filter: ((tag_name = 'gameversion'::text) AND (tag_val = '2131-CN-ZS'::text)) RowGroupFilter: ((gameid = 2131) AND (statdate >= '2024-01-25'::date)) [id=4 split_count=24 time=230/180/141ms rows=350(21/14/10) mem=609/469/223KB open=9/1/1ms get_next=228/179/139ms physical_reads=96(4/4/4) scan_rows=124824(5443/5201/ 4870) bitmap_used=24] -> Materialize (cost=0.00..1.09 rows=3024 width=8) [id=11 dop=24 time=0/0/0ms rows=44100(2646/1837/1260) mem=0/0/0B open=0/0/0ms get_next=0/0/0ms] -> Broadcast (cost=0.00..1.09 rows=3024 width=8) [1:24 id=100003 dop=24 time=3/0/0ms rows=3024(126/126/126) mem=600/600/600B open=0/0/0ms get_next=3/0/0ms] -> Forward (cost=0.00..1.00 rows=126 width=8) [0:1 id=100002 dop=1 time=3/3/3ms rows=126(126/126/126) mem=264/264/264B open=3/3/3ms get_next=0/0/0ms scan_rows=126(126/126/126)] -> ExecuteExternalSQL on PQE (cost=0.00..0.00 rows=0 width=8) External SQL: SELECT * FROM generate_series('2024-01-25 00:00:00+08'::timestamptz, '2024-05-29 00:00:00+08'::timestamptz, '1 day'::interval) AS TVF(c_2c230678_101) -> Hash (cost=5.39..5.39 rows=6387 width=20) [id=21 dop=24 time=1458/1233/931ms rows=6360(316/265/239) mem=64/60/32MB open=1253/1066/864ms get_next=247/167/67ms rehash=1/1/1 hash_mem=384/384/384KB] -> Local Gather (cost=0.00..5.39 rows=6387 width=20) [id=20 dop=24 time=1253/1066/864ms rows=6360(316/265/239) mem=600/600/600B open=0/0/0ms get_next=1253/1066/864ms local_dop=1/1/1] -> Decode (cost=0.00..5.39 rows=6387 width=20) [id=19 split_count=24 time=1252/1065/863ms rows=6360(316/265/239) mem=0/0/0B open=1/0/0ms get_next=1252/1065/862ms] -> Seq Scan on actor_all_login_bucke (cost=0.00..5.29 rows=6387 width=20) Filter: (gameid = 2131) RowGroupFilter: (gameid = 2131) [id=18 split_count=24 time=1252/1065/862ms rows=6360(316/265/239) mem=64/60/32MB open=1/0/0ms get_next=1252/1065/862ms physical_reads=96(4/4/4) scan_rows=186373(7882/7765/7624)] ADVICE: [node id : 18] Table actor_all_login_bucke misses bitmap index: gameid. [node id : 4] Table actor_all_new_tag_bucke misses bitmap index: gameid. Query id:[70041957309637499] ======================cost====================== Total cost:[3765] ms Optimizer cost:[91] ms Build execution plan cost:[4] ms Init execution plan cost:[2] ms Start query cost:[32] ms - Wait schema cost:[0] ms - Lock query cost:[0] ms - Create dataset reader cost:[0] ms - Create split reader cost:[6] ms Get result cost:[3636] ms - Get the first block cost:[1752] ms ====================resource==================== Memory: total 11943 MB. Worker stats: max 605 MB, avg 497 MB, min 309 MB, max memory worker id: 729777686154468446. CPU time: total 48015 ms. Worker stats: max 2857 ms, avg 2000 ms, min 1080 ms, max CPU time worker id: 729778165074082501. Ec wait time: total 523 ms. Worker stats: max 42 ms, avg 21 ms, min 9 ms, max ec wait time worker id: 729776995498738050. Physical read bytes: total 2975 MB. Worker stats: max 134 MB, avg 123 MB, min 111 MB, max physical read bytes worker id: 729776995498738050. Read bytes: total 2976 MB. Worker stats: max 134 MB, avg 124 MB, min 111 MB, max read bytes worker id: 729776995498738050. DAG instance count: total 50. Worker stats: max 3, avg 2, min 2, max DAG instance count worker id: 810630269575977312. Fragment instance count: total 98. Worker stats: max 5, avg 4, min 4, max fragment instance count worker id: 810630269575977312. (80 rows)

分桶优化

分桶数据分析。

排查分桶的大小以及数据量是否合理。

查询

dws.actor_all_new_tag_bucket结果表的分桶大小以及数据量。SELECT bucket, count(1) FROM dws.actor_all_new_tag_bucket GROUP BY bucket;返回结果如下。

bucket | count -------+------- 8 | 7000 0 | 1100 2 | 1200 3 | 8000 14 | 4000 5 | 3777 9 | 8000 13 | 6000 10 | 9000 12 | 7000 1 | 17000 7 | 7000 4 | 3000 6 | 6000 11 | 8000 (15 rows)查询

dws.actor_all_login_bucket结果表的分桶大小以及数据量。SELECT bucket,count(1) FROM dws.actor_all_login_bucket GROUP BY bucket;返回结果如下。

bucket | count -------+------- 0 | 10000 5 | 15000 6 | 15000 11 | 10000 7 | 15000 13 | 5000 9 | 12000 4 | 13000 3 | 15000 2 | 19000 12 | 8000 1 | 15000 10 | 11000 8 | 13000 14 | 3000 (15 rows)

分桶数据均衡。

根据分桶数据显示,按照高8位进行分桶后,实际上有256个桶,但仅有15个桶含有数据。因此,分桶效果不均衡,需要调整分桶策略,采用低8位进行分桶,并仍然按照256个桶进行。

修改分桶数据。

修改

dws.actor_all_new_tag_bucket结果表的分桶数据。INSERT INTO dws.actor_all_new_tag_bucket (statdate, gameid, bucket, tag_name, tag_val, rb_new) SELECT createtime::DATE statdate, a.gameid::int, m.encode_actorid & 255 as bucket,--高8位作为桶 'gameversion', a.gameversion, RB_BUILD_AGG (m.encode_actorid ::int >> 8) --低8位来构建Bitmap数据 FROM dim.dim_sdkactor a INNER JOIN dws.actor_mapping m ON a.gameid::int = m.gameid AND a.gameversion = m.gameversion AND a.actorid = m.actorid GROUP BY a.createtime::DATE, a.gameid, m.encode_actorid & 255, a.gameversion修改

dws.actor_all_login_bucket结果表的分桶数据。INSERT INTO dws.actor_all_login_bucket (statdate, gameid,bucket, rb_login) SELECT statdate, A.gameid::int, m.encode_actorid & 255 as bucket, RB_BUILD_AGG (m.encode_actorid ::int >> 8) FROM APP.dws_actor_day_agg_di A INNER JOIN dws.actor_mapping m ON A.gameid::int = M.gameid AND A.gameversion = M.gameversion AND A.actorid = M.actorid GROUP BY statdate, A.gameid::int, m.encode_actorid & 255

调整Shard。

为了充分利用256个桶的并行处理能力,由于该场景对QPS(每秒查询率)的要求较低,因此将表的Shard扩大至480(需根据实例规格具体设置)。由于本文的测试实例使用了48个Worker,为了尽量确保数据均衡,此处使用480个Shard ,以确保数据能够均匀分配。调整Shard操作如下:

call hg_create_table_group('tg_480',480); call hg_move_table_to_table_group('dws.actor_all_login_bucket','tg_480'); call hg_move_table_to_table_group('dws.actor_all_new_tag_bucket','tg_480');

验证数据均衡性能。

获取从游戏角色创建到角色登录150天的留存分析。

Explain Analyze WITH actor_new AS ( SELECT statdate, gameid, bucket, RB_AND_AGG (rb_new) rb_new FROM dws.actor_all_new_tag_bucket n WHERE statdate >= '20240101'::date AND gameid = '2131' AND tag_name = 'gameversion' AND tag_val = '2131-CN-ZS' GROUP BY statdate, gameid, bucket ) , actor_list AS ( SELECT n.statdate start_date, s.ds::date end_date, 'day' || s.ds::date - n.statdate AS nday, gameid, bucket, rb_new FROM actor_new n, generate_series ('20240101'::date, '20240604'::date, '1 day'::interval) AS s (ds) WHERE n.statdate <= s.ds::date ) SELECT al.start_date, al.end_date, al.nday, RB_CARDINALITY (rb_new) NewCount, RB_AND_CARDINALITY (rb_new, rb_login) LogCount FROM actor_list al INNER JOIN dws.actor_all_login_bucket aal ON al.end_date = aal.statdate AND al.gameid = aal.gameid AND al.bucket = aal.bucket返回结果如下,查询所需时间为1 s+,性能得到更大的提升。

QUERY PLAN Gather (cost=0.00..48922.60 rows=1736538 width=32) [480:1 id=100006 dop=1 time=107/107/107ms rows=3129600(3129600/3129600/3129600) mem=134/134/134KB open=4/4/4ms get_next=103/103/103ms] -> Project (cost=0.00..48799.82 rows=1736538 width=32) [id=26 dop=480 time=681/298/173ms rows=3129600(9867/6520/3761) mem=164/103/100KB open=193/58/7ms get_next=641/240/145ms] -> Hash Join (cost=0.00..48798.59 rows=1736538 width=32) Hash Cond: (((date(actor_all_new_tag_bucke.*)) = actor_all_login_bucket.statdate) AND (actor_all_new_tag_bucket.gameid = actor_all_login_bucket.gameid) AND (actor_all_new_tag_bucket.bucket = actor_all_login_b ucket.bucke)) [id=25 dop=480 time=627/247/130ms rows=3129600(9867/6520/3761) mem=16/16/16MB open=193/57/7ms get_next=587/189/96ms] -> Redistribution (cost=0.00..48796.94 rows=1736538 width=32) Hash Key: (date(actor_all_new_tag_bucket.*)), actor_all_new_tag_bucket.gameid, actor_all_new_tag_bucket.bucket [480:480 id=100005 dop=480 time=116/22/11ms rows=3129600(9867/6520/3761) mem=760/579/480KB open=45/6/3ms get_next=111/15/8ms] -> Filter (cost=0.00..48796.58 rows=1736538 width=32) Filter: (actor_all_new_tag_bucket.gameid = 2131) [id=18 dop=480 time=170/38/11ms rows=3129600(9442/6520/4086) mem=3/3/3KB open=1/0/0ms get_next=170/38/11ms] -> Project (cost=0.00..48796.46 rows=1736538 width=32) [id=17 dop=480 time=169/36/11ms rows=3129600(9442/6520/4086) mem=29/27/25KB open=1/0/0ms get_next=169/36/11ms] -> Project (cost=0.00..48795.23 rows=270900 width=24) [id=16 dop=480 time=155/28/6ms rows=3129600(9442/6520/4086) mem=12/11/11KB open=1/0/0ms get_next=155/28/6ms] -> Nested Loop (cost=0.00..48794.21 rows=270900 width=28) Join Filter: (actor_all_new_tag_bucke_480.statdate <= date(actor_all_new_tag_bucket.*)) [id=14 dop=480 time=151/26/5ms rows=3129600(9442/6520/4086) mem=20/15/12KB open=1/0/0ms get_next=151/26/5ms] -> Filter (cost=0.00..1.10 rows=4341 width=20) Filter: (actor_all_new_tag_bucket.gameid = 2131) [id=11 dop=480 time=8/0/0ms rows=38400(114/80/55) mem=2/2/2KB open=1/0/0ms get_next=8/0/0ms] -> GroupAggregate (cost=0.00..1.10 rows=4341 width=20) Group Key: actor_all_new_tag_bucket.statdate, actor_all_new_tag_bucket.gameid, actor_all_new_tag_bucket.bucke [id=9 dop=480 time=8/0/0ms rows=38400(114/80/55) mem=291/283/211KB open=1/0/0ms get_next=8/0/0ms] -> Local Gather (cost=0.00..1.10 rows=4341 width=20) [id=8 dop=480 time=1/0/0ms rows=38400(114/80/55) mem=600/600/600B open=1/0/0ms get_next=1/0/0ms local_dop=1/1/1] -> Decode (cost=0.00..1.10 rows=4341 width=20) [id=6 split_count=480 time=48/5/0ms rows=38400(114/80/55) mem=0/0/0B open=15/0/0ms get_next=42/4/0ms] -> Index Scan using Clustering_index on actor_all_new_tag_bucket (cost=0.00..1.00 rows=4341 width=20) Segment Filter: (statdate >= '2024-01-01'::date) Cluster Filter: (gameid = 2131) Bitmap Filter: ((tag_name = 'gameversion'::text) AND (tag_val = '2131-CN-ZS'::text)) RowGroupFilter: (statdate >= '2024-01-01'::date) [id=5 split_count=480 time=48/5/0ms rows=38400(114/80/55) mem=139/130/117KB open=15/0/0ms get_next=42/4/0ms physical_reads=747(4/2/1) scan_rows=3285315(7441/6844/611 6) bitmap_used=480] -> Materialize (cost=0.00..1.17 rows=74880 width=8) [id=13 dop=480 time=11/0/0ms rows=5990400(17784/12480/8580) mem=0/0/0B open=0/0/0ms get_next=11/0/0ms] -> Local Broadcast From Worker (cost=0.00..1.17 rows=74880 width=8) [48:480 id=100004 dop=480 time=72/5/0ms rows=74880(156/156/156) mem=600/600/600B open=1/0/0ms get_next=71/5/0ms] -> Broadcast To Worker (cost=0.00..1.17 rows=9360 width=8) [1:48 id=100003 dop=48 time=1/0/0ms rows=7488(156/156/156) mem=600/600/600B open=1/0/0ms get_next=0/0/0ms] -> Forward (cost=0.00..1.00 rows=156 width=8) [0:1 id=100002 dop=1 time=25/25/25ms rows=156(156/156/156) mem=264/264/264B open=25/25/25ms get_next=0/0/0ms scan_rows=156(156/156/156)] -> ExecuteExternalSQL on PQE (cost=0.00..0.00 rows=0 width=8) External SQL: SELECT * FROM generate_series('2024-01-01 00:00:00+08'::timestamptz, '2024-06-04 00:00:00+08'::timestamptz, '1 day'::interval) AS TVF(c_2b35a738_101) -> Hash (cost=1.10..1.10 rows=189110 width=20) [id=24 dop=480 time=193/57/7ms rows=184991(443/385/319) mem=4/2/2MB open=189/53/5ms get_next=31/4/1ms rehash=1/1/1 hash_mem=384/384/384KB] -> Local Gather (cost=0.00..1.10 rows=189110 width=20) [id=23 dop=480 time=189/52/2ms rows=184991(443/385/319) mem=600/600/600B open=6/0/0ms get_next=184/52/1ms local_dop=1/1/1] -> Decode (cost=0.00..1.10 rows=189110 width=20) [id=21 split_count=480 time=162/43/0ms rows=184991(443/385/319) mem=0/0/0B open=42/2/0ms get_next=161/41/0ms] -> Index Scan using Clustering_index on actor_all_login_bucket (cost=0.00..1.00 rows=189110 width=20) Cluster Filter: (gameid = 2131) [id=20 split_count=473 time=152/39/0ms rows=182347(443/385/319) mem=4/2/2MB open=42/2/0ms get_next=152/36/0ms physical_reads=748(4/2/1) scan_rows=1875185(4348/3964/3599)] Query id:[70231656748298225] ======================cost====================== Total cost:[1403] ms Optimizer cost:[150] ms Build execution plan cost:[11] ms Init execution plan cost:[19] ms Start query cost:[218] ms - Wait schema cost:[0] ms - Lock query cost:[0] ms - Create dataset reader cost:[0] ms - Create split reader cost:[2] ms Get result cost:[1005] ms - Get the first block cost:[50] ms ====================resource==================== Memory: total 10047 MB. Worker stats: max 241 MB, avg 209 MB, min 182 MB, max memory worker id: 729692126084053701. CPU time: total 168268 ms. Worker stats: max 7134 ms, avg 3505 ms, min 2919 ms, max CPU time worker id: 745354404943128931. Ec wait time: total 56589 ms. Worker stats: max 2727 ms, avg 1178 ms, min 267 ms, max ec wait time worker id: 729692126084053701. Physical read bytes: total 1445 MB. Worker stats: max 59 MB, avg 30 MB, min 0 MB, max physical read bytes worker id: 729776188416688975. Read bytes: total 2718 MB. Worker stats: max 58 MB, avg 56 MB, min 51 MB, max read bytes worker id: 745354267590834885. DAG instance count: total 1010. Worker stats: max 22, avg 21, min 21, max DAG instance count worker id: 722022531458482562. Fragment instance count: total 1970. Worker stats: max 42, avg 41, min 41, max fragment instance count worker id: 722022531458482562. (76 rows)

通过JSON函数优化SQL语句

通常在查询明细表数据用于做留存分析时,返回的数据格式是多行,包含留存日期(dayn)对应的新创建的角色UV和基于登录的留存UV数据。为了以多列展示从day0~dayn的所有结果,用户经常使用N个CASE WHEN语句将这些行转换为列,这样SQL不仅降低了开发效率,也影响了计算效率和运维的复杂性。代码示例如下。

WITH

actor_new AS

( SELECT statdate,

gameid,

RB_AND_AGG (rb_new) rb_new

FROM dws.actor_all_new_tag n

WHERE statdate >= '20240125'::date

AND gameid = '2131'

AND tag_name = 'gameversion'

AND tag_val = '2131-CN-ZS'

GROUP BY statdate,

gameid

)

,

actor_list

AS

( SELECT n.statdate start_date,

s.ds::date end_date,

'day' || s.ds::date - n.statdate AS nday,

gameid,

rb_new

FROM actor_new n,

generate_series ('20240125'::date, '20240529'::date, '1 day'::interval) AS s (ds)

WHERE n.statdate <= s.ds::date

)

select

start_date,

CASE WHEN nday=0 and NewCount >0 THEN LogCount/NewCount END AS day0,

CASE WHEN nday=1 and NewCount >0 THEN LogCount/NewCount END AS day1,

CASE WHEN nday=2 and NewCount >0 THEN LogCount/NewCount END AS day2,

CASE WHEN nday=3 and NewCount >0 THEN LogCount/NewCount END AS day3,

........

CASE WHEN nday=365 and NewCount >0 THEN LogCount/NewCount END AS day365

from

(

SELECT

start_date,

nday,

SUM (LogCount) LogCount,

SUM (NewCount) NewCount

FROM ( SELECT al.start_date,

al.nday,

RB_CARDINALITY (rb_new) NewCount,

RB_AND_CARDINALITY (rb_new, rb_login) LogCount

FROM actor_list al

INNER JOIN dws.actor_all_login aal

ON al.end_date = aal.statdate

AND al.gameid = aal.gameid

) t

GROUP BY GROUPING sets ( (start_date, nday), (nday))

)A Hologres支持PostgreSQL生态的JSON相关函数,可以充分利用Hologres的jsonb_object_agg函数,将动态的JSON数据转换为一行,并且可以根据灵活的JSON数据通过jsonb_populate_record函数进行任意维度的展开,实现行转列。

关于Hologres支持PostgreSQL生态的JSON相关函数详情,请参见JSON和JSONB类型。

在使用jsonb_populate_record需要先定义一个固定的Schema(public.rowtype_365) ,作为base anyelement对象来接收数据,代码示例如下。

CREATE TABLE public.rowtype_365 (

day0 text,

day1 text,

day2 text,

day3 text,

day4 text,

day5 text,

day6 text,

day7 text,

day8 text,

day9 text,

day10 text,

day11 text,

day12 text,

day13 text,

day14 text,

day15 text,

day16 text,

day17 text,

day18 text,

day19 text,

day20 text,

day21 text,

day22 text,

day23 text,

day24 text,

day25 text,

day26 text,

day27 text,

day28 text,

day29 text,

day30 text,

day31 text,

day32 text,

day33 text,

day34 text,

day35 text,

day36 text,

day37 text,

day38 text,

day39 text,

day40 text,

day41 text,

day42 text,

day43 text,

day44 text,

day45 text,

day46 text,

day47 text,

day48 text,

day49 text,

day50 text,

day51 text,

day52 text,

day53 text,

day54 text,

day55 text,

day56 text,

day57 text,

day58 text,

day59 text,

day60 text,

day61 text,

day62 text,

day63 text,

day64 text,

day65 text,

day66 text,

day67 text,

day68 text,

day69 text,

day70 text,

day71 text,

day72 text,

day73 text,

day74 text,

day75 text,

day76 text,

day77 text,

day78 text,

day79 text,

day80 text,

day81 text,

day82 text,

day83 text,

day84 text,

day85 text,

day86 text,

day87 text,

day88 text,

day89 text,

day90 text,

day91 text,

day92 text,

day93 text,

day94 text,

day95 text,

day96 text,

day97 text,

day98 text,

day99 text,

day100 text,

day101 text,

day102 text,

day103 text,

day104 text,

day105 text,

day106 text,

day107 text,

day108 text,

day109 text,

day110 text,

day111 text,

day112 text,

day113 text,

day114 text,

day115 text,

day116 text,

day117 text,

day118 text,

day119 text,

day120 text,

day121 text,

day122 text,

day123 text,

day124 text,

day125 text,

day126 text,

day127 text,

day128 text,

day129 text,

day130 text,

day131 text,

day132 text,

day133 text,

day134 text,

day135 text,

day136 text,

day137 text,

day138 text,

day139 text,

day140 text,

day141 text,

day142 text,

day143 text,

day144 text,

day145 text,

day146 text,

day147 text,

day148 text,

day149 text,

day150 text,

day151 text,

day152 text,

day153 text,

day154 text,

day155 text,

day156 text,

day157 text,

day158 text,

day159 text,

day160 text,

day161 text,

day162 text,

day163 text,

day164 text,

day165 text,

day166 text,

day167 text,

day168 text,

day169 text,

day170 text,

day171 text,

day172 text,

day173 text,

day174 text,

day175 text,

day176 text,

day177 text,

day178 text,

day179 text,

day180 text,

day181 text,

day182 text,

day183 text,

day184 text,

day185 text,

day186 text,

day187 text,

day188 text,

day189 text,

day190 text,

day191 text,

day192 text,

day193 text,

day194 text,

day195 text,

day196 text,

day197 text,

day198 text,

day199 text,

day200 text,

day201 text,

day202 text,

day203 text,

day204 text,

day205 text,

day206 text,

day207 text,

day208 text,

day209 text,

day210 text,

day211 text,

day212 text,

day213 text,

day214 text,

day215 text,

day216 text,

day217 text,

day218 text,

day219 text,

day220 text,

day221 text,

day222 text,

day223 text,

day224 text,

day225 text,

day226 text,

day227 text,

day228 text,

day229 text,

day230 text,

day231 text,

day232 text,

day233 text,

day234 text,

day235 text,

day236 text,

day237 text,

day238 text,

day239 text,

day240 text,

day241 text,

day242 text,

day243 text,

day244 text,

day245 text,

day246 text,

day247 text,

day248 text,

day249 text,

day250 text,

day251 text,

day252 text,

day253 text,

day254 text,

day255 text,

day256 text,

day257 text,

day258 text,

day259 text,

day260 text,

day261 text,

day262 text,

day263 text,

day264 text,

day265 text,

day266 text,

day267 text,

day268 text,

day269 text,

day270 text,

day271 text,

day272 text,

day273 text,

day274 text,

day275 text,

day276 text,

day277 text,

day278 text,

day279 text,

day280 text,

day281 text,

day282 text,

day283 text,

day284 text,

day285 text,

day286 text,

day287 text,

day288 text,

day289 text,

day290 text,

day291 text,

day292 text,

day293 text,

day294 text,

day295 text,

day296 text,

day297 text,

day298 text,

day299 text,

day300 text,

day301 text,

day302 text,

day303 text,

day304 text,

day305 text,

day306 text,

day307 text,

day308 text,

day309 text,

day310 text,

day311 text,

day312 text,

day313 text,

day314 text,

day315 text,

day316 text,

day317 text,

day318 text,

day319 text,

day320 text,

day321 text,

day322 text,

day323 text,

day324 text,

day325 text,

day326 text,

day327 text,

day328 text,

day329 text,

day330 text,

day331 text,

day332 text,

day333 text,

day334 text,

day335 text,

day336 text,

day337 text,

day338 text,

day339 text,

day340 text,

day341 text,

day342 text,

day343 text,

day344 text,

day345 text,

day346 text,

day347 text,

day348 text,

day349 text,

day350 text,

day351 text,

day352 text,

day353 text,

day354 text,

day355 text,

day356 text,

day357 text,

day358 text,

day359 text,

day360 text,

day361 text,

day362 text,

day363 text,

day364 text,

day365 text,

day366 text

);

CALL set_table_property('public.rowtype_365', 'orientation', 'column');

CALL set_table_property('public.rowtype_365', 'storage_format', 'orc');

CALL set_table_property('public.rowtype_365', 'table_group', 'tg_480');

CALL set_table_property('public.rowtype_365', 'table_storage_mode', 'any');

CALL set_table_property('public.rowtype_365', 'time_to_live_in_seconds', '3153600000');

COMMENT ON TABLE public.rowtype_365 IS NULL;

END;使用jsonb_object_agg函数和jsonb_populate_record函数实现行转列,代码示例如下。

explain analyze

WITH

actor_new AS

( SELECT statdate,

gameid,

bucket,

RB_AND_AGG (rb_new) rb_new

FROM dws.actor_all_new_tag_bucket n

WHERE statdate >= '20240101'::date

AND gameid = '2131'

AND tag_name = 'gameversion'

AND tag_val = '2131-CN-ZS'

GROUP BY statdate,

gameid,

bucket

)

,

actor_list

AS

( SELECT n.statdate start_date,

s.ds::date end_date,

'day' || s.ds::date - n.statdate AS nday,

gameid,

bucket,

rb_new

FROM actor_new n,

generate_series ('20240101'::date, '20240604'::date, '1 day'::interval) AS s (ds)

WHERE n.statdate <= s.ds::date

)

SELECT start_date,

(jsonb_populate_record (NULL::rowtype_365, buff)) .*

FROM ( SELECT start_date,

jsonb_object_agg (nday, ROUND (LogCount * 100.0 / NewCount, 2)) buff

FROM ( SELECT start_date,

nday,

SUM (LogCount) LogCount,

SUM (NewCount) NewCount

FROM ( SELECT al.start_date,

al.end_date,

al.nday,

RB_CARDINALITY (rb_new) NewCount,

rb_and_cardinality(rb_new, rb_login) LogCount

FROM actor_list al

INNER JOIN dws.actor_all_login_bucket aal

ON al.end_date = aal.statdate

AND al.gameid = aal.gameid

AND al.bucket = aal.bucket) T

GROUP BY grouping sets ( (start_date, nday), (nday))) t

GROUP BY start_date) A

ORDER BY

CASE WHEN start_date IS NULL

THEN '1'

ELSE start_date::TEXT

END