This topic describes how to use Hive to access LindormDFS.

Preparations

Configure Apache Derby

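Extract the Derby package to the target directory.

```shell
tar -zxvf db-derby-10.13.1.1-bin.tar.gz -C /usr/local/
```

Edit the /etc/profile file to configure environment variables. Run the following command to open /etc/profile:

```shell
vim /etc/profile
```

Add the following content to the end of the file:

```shell
export DERBY_HOME=/usr/local/db-derby-10.13.1.1-bin
export CLASSPATH=$CLASSPATH:$DERBY_HOME/lib/derby.jar:$DERBY_HOME/lib/derbytools.jar
```

Then run `source /etc/profile` so that the variables take effect in the current shell.

Create a storage directory.

```shell
mkdir $DERBY_HOME/data
```

Start the service.

```shell
nohup /usr/local/db-derby-10.13.1.1-bin/bin/startNetworkServer &
```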
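Because startNetworkServer runs in the background, later steps can fail if Derby is not listening yet. Below is a minimal sketch for a POSIX shell. The `retry` helper is hypothetical. The commented example assumes Derby's `NetworkServerControl` script in `$DERBY_HOME/bin` and its `ping` command. The helper reruns a command until it succeeds or the attempts run out.

```shell
#!/bin/sh
# Hypothetical helper: run a command up to N times, pausing 1 second between
# failed attempts. Returns 0 on the first success, 1 if every attempt fails.
retry() {
  _retry_max=$1
  shift
  _retry_i=1
  while [ "$_retry_i" -le "$_retry_max" ]; do
    if "$@"; then
      return 0
    fi
    if [ "$_retry_i" -lt "$_retry_max" ]; then
      sleep 1
    fi
    _retry_i=$((_retry_i + 1))
  done
  return 1
}

# Example: wait up to about 30 seconds for the Derby Network Server on
# port 1527, the port used in the JDBC URLs of this article.
# retry 30 "$DERBY_HOME/bin/NetworkServerControl" ping -h 127.0.0.1 -p 1527
```

The pause sits between attempts only, so a command that succeeds on the first try returns immediately.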

Configure Apache Hive

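Extract the Hive package to the target directory.

```shell
tar -zxvf apache-hive-2.3.7-bin.tar.gz -C /usr/local/
```

Edit the /etc/profile file to configure environment variables. Run the following command to open /etc/profile:

```shell
vim /etc/profile
```

Add the following content to the end of the file:

```shell
export HIVE_HOME=/usr/local/apache-hive-2.3.7-bin
```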
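Editing /etc/profile by hand works. However, re-running a setup script can append the same export twice. Below is a minimal sketch of an idempotent alternative. It assumes a POSIX shell with grep, and the helper name `append_once` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: append LINE to FILE unless FILE already contains
# exactly that line. -x matches whole lines; -F disables regex metacharacters.
append_once() {
  _file=$1
  _line=$2
  touch "$_file"
  if ! grep -qxF -- "$_line" "$_file"; then
    printf '%s\n' "$_line" >> "$_file"
  fi
}

# Example (needs root). Single quotes keep $CLASSPATH and $DERBY_HOME literal,
# so they are expanded when /etc/profile is sourced, not when appending.
# append_once /etc/profile 'export DERBY_HOME=/usr/local/db-derby-10.13.1.1-bin'
# append_once /etc/profile 'export CLASSPATH=$CLASSPATH:$DERBY_HOME/lib/derby.jar:$DERBY_HOME/lib/derbytools.jar'
# append_once /etc/profile 'export HIVE_HOME=/usr/local/apache-hive-2.3.7-bin'
# . /etc/profile
```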

Modify the hive-env.sh file. It is located in Hive's conf directory; if it does not exist yet, copy conf/hive-env.sh.template to conf/hive-env.sh first. Run the following command to open hive-env.sh:

```shell
vim /usr/local/apache-hive-2.3.7-bin/conf/hive-env.sh
```

Modify hive-env.sh as follows:

```shell
# The heap size of the jvm started by hive shell script can be controlled via:
export HADOOP_HEAPSIZE=1024

# Set HADOOP_HOME to point to a specific hadoop install directory
HADOOP_HOME=/usr/local/hadoop-2.7.3

# Hive Configuration Directory can be controlled by:
export HIVE_CONF_DIR=/usr/local/apache-hive-2.3.7-bin/conf

# Folder containing extra libraries required for hive compilation/execution can be controlled by:
export HIVE_AUX_JARS_PATH=/usr/local/apache-hive-2.3.7-bin/lib
```
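A typo in one of these paths usually surfaces only when Hive starts. Below is a minimal sketch, assuming a POSIX shell. It sources hive-env.sh and then checks that each directory it names exists. `check_dirs` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if every argument is an existing directory;
# report each missing one (including an empty, unset variable) on stderr.
check_dirs() {
  _ok=0
  for _d in "$@"; do
    if [ ! -d "$_d" ]; then
      echo "missing directory: $_d" >&2
      _ok=1
    fi
  done
  return "$_ok"
}

# Example: load the settings, then verify the paths they point to.
# . /usr/local/apache-hive-2.3.7-bin/conf/hive-env.sh
# check_dirs "$HADOOP_HOME" "$HIVE_CONF_DIR" "$HIVE_AUX_JARS_PATH"
```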

Modify the hive-site.xml file. Run the following command to open hive-site.xml:

```shell
vim /usr/local/apache-hive-2.3.7-bin/conf/hive-site.xml
```

Modify hive-site.xml as follows. The `<username>` placeholder inside the scratch-directory description must be escaped as `&lt;username&gt;` to keep the file well-formed XML.

```xml
<configuration>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/user/hive/warehouse</value>
    <description>location of default database for the warehouse</description>
  </property>
  <property>
    <name>hive.exec.scratchdir</name>
    <value>/tmp/hive</value>
    <description>HDFS root scratch dir for Hive jobs which gets created with write all (733) permission. For each connecting user, an HDFS scratch dir: ${hive.exec.scratchdir}/&lt;username&gt; is created, with ${hive.scratch.dir.permission}.</description>
  </property>
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
    <description>
      Enforce metastore schema version consistency.
      True: Verify that version information stored in metastore matches with one from Hive jars. Also disable automatic
            schema migration attempt. Users are required to manually migrate schema after Hive upgrade which ensures
            proper metastore schema migration. (Default)
      False: Warn if the version information stored in metastore doesn't match with one from in Hive jars.
    </description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:derby://127.0.0.1:1527/metastore_db;create=true</value>
    <description>JDBC connect string for a JDBC metastore</description>
  </property>
  <property>
    <name>datanucleus.schema.autoCreateAll</name>
    <value>true</value>
  </property>
</configuration>
```

Create the jpox.properties file. Run the following command to create and open it:

```shell
vim /usr/local/apache-hive-2.3.7-bin/conf/jpox.properties
```

Add the following content to jpox.properties:

```properties
javax.jdo.PersistenceManagerFactoryClass = org.jpox.PersistenceManagerFactoryImpl
org.jpox.validateTables = false
org.jpox.validateColumns = false
org.jpox.validateConstraints = false
org.jpox.storeManagerType = rdbms
org.jpox.autoCreateSchema = true
org.jpox.autoStartMechanismMode = checked
org.jpox.transactionIsolation = read_committed
javax.jdo.option.DetachAllOnCommit = true
javax.jdo.option.NontransactionalRead = true
javax.jdo.option.ConnectionDriverName = org.apache.derby.jdbc.ClientDriver
javax.jdo.option.ConnectionURL = jdbc:derby://127.0.0.1:1527/metastore_db;create=true
javax.jdo.option.ConnectionUserName = APP
javax.jdo.option.ConnectionPassword = mine
```
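A .properties file loaded with java.util.Properties keeps only the last value read for a repeated key. As a result, a key accidentally listed twice silently overrides the earlier setting. Below is a minimal sketch that prints every key defined more than once. `dup_keys` is a hypothetical name, and the sketch assumes awk and `key = value` or `key: value` lines. Keys separated from their value by whitespace alone are not handled.

```shell
#!/bin/sh
# Hypothetical helper: print each key that appears more than once in a
# .properties file (once per duplicated key). Comment lines (# or !) and
# blank lines are skipped; the key is the text before the first = or :.
dup_keys() {
  awk '
    /^[ \t]*$/    { next }
    /^[ \t]*[#!]/ { next }
    {
      key = $0
      sub(/^[ \t]+/, "", key)          # drop leading whitespace
      sub(/[ \t]*[=:].*$/, "", key)    # drop separator and value
      if (seen[key]++ == 1) print key  # report on the second occurrence
    }
  ' "$1"
}

# Example:
# dup_keys /usr/local/apache-hive-2.3.7-bin/conf/jpox.properties
```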

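Create the required directories. First, check whether they already exist:

```shell
$HADOOP_HOME/bin/hadoop fs -ls /
```

If the paths configured in hive-site.xml above (/user/hive/warehouse and /tmp/hive) do not exist, create them and grant write (W) permission on them:

```shell
$HADOOP_HOME/bin/hadoop fs -mkdir -p /user/hive/warehouse
$HADOOP_HOME/bin/hadoop fs -mkdir -p /tmp/hive
$HADOOP_HOME/bin/hadoop fs -chmod 775 /user/hive/warehouse
$HADOOP_HOME/bin/hadoop fs -chmod 775 /tmp/hive
```

Modify the io.tmpdir path. In hive-site.xml, also change every value (that is, every path) that contains ${system:java.io.tmpdir}. You can create a new directory to replace it, for example /tmp/hive/iotmp. The following commands create /usr/local/apache-hive-2.3.7-bin/iotmp for this purpose:

```shell
mkdir /usr/local/apache-hive-2.3.7-bin/iotmp
chmod 777 /usr/local/apache-hive-2.3.7-bin/iotmp
```

Finally, change ${system:user.name} in the following property:

```xml
<property>
  <name>hive.exec.local.scratchdir</name>
  <value>/usr/local/apache-hive-2.3.7-bin/iotmp/${system:user.name}</value>
  <description>Local scratch space for Hive jobs</description>
</property>
```

Change it to the following:

```xml
<property>
  <name>hive.exec.local.scratchdir</name>
  <value>/usr/local/apache-hive-2.3.7-bin/iotmp/${user.name}</value>
  <description>Local scratch space for Hive jobs</description>
</property>
```

Initialize and start the Hive services (the metastore and HiveServer2):

```shell
nohup /usr/local/apache-hive-2.3.7-bin/bin/hive --service metastore &
nohup /usr/local/apache-hive-2.3.7-bin/bin/hive --service hiveserver2 &
```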
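The hive-site.xml substitutions described in the io.tmpdir step can be scripted instead of edited by hand. This matters most when the file is based on the full template and contains many such values. Below is a minimal sketch, assuming a POSIX shell and sed. The helper `patch_hive_site` is hypothetical, and it should be run before the metastore and HiveServer2 are started.

```shell
#!/bin/sh
# Hypothetical helper: in a hive-site.xml file, replace every
# ${system:java.io.tmpdir} with DIR and every ${system:user.name} with
# ${user.name}. A backup of the original is kept as FILE.bak.
# DIR must not contain the characters | & or \ (they are special to sed here).
patch_hive_site() {
  _file=$1
  _dir=$2
  cp "$_file" "$_file.bak" || return 1
  sed -e 's|\${system:java\.io\.tmpdir}|'"$_dir"'|g' \
      -e 's|\${system:user\.name}|${user.name}|g' \
      "$_file.bak" > "$_file"
}

# Example:
# patch_hive_site /usr/local/apache-hive-2.3.7-bin/conf/hive-site.xml \
#   /usr/local/apache-hive-2.3.7-bin/iotmp
```

Writing the result back through `>` keeps the original file's inode and permissions. Using a separate `.bak` copy avoids relying on `sed -i`, whose syntax differs between GNU and BSD sed.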

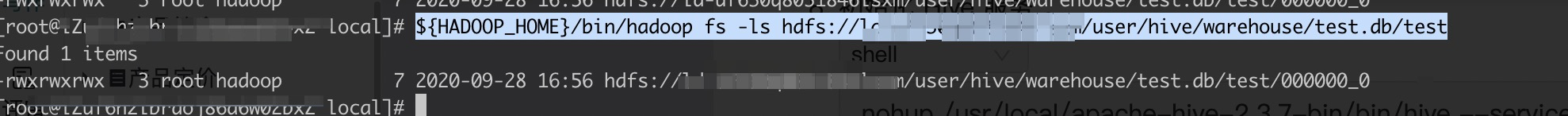
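Both services are started in the background, so they may need a moment before they accept connections. Below is a minimal sketch of a readiness check, with three assumptions. First, it must run under bash, because it relies on bash's /dev/tcp redirection; under plain sh every attempt simply fails. Second, it assumes Hive's default ports: 9083 for the metastore and 10000 for HiveServer2, which this article does not change. Third, `wait_for_port` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until HOST:PORT accepts TCP connections.
# Tries up to TRIES times (default 60), sleeping 2 seconds between attempts.
wait_for_port() {
  _host=$1
  _port=$2
  _tries=${3:-60}
  _i=1
  while :; do
    # In bash, opening /dev/tcp/HOST/PORT attempts a TCP connection;
    # the subshell closes it again immediately.
    if (exec 3<>"/dev/tcp/$_host/$_port") 2>/dev/null; then
      return 0
    fi
    [ "$_i" -ge "$_tries" ] && return 1
    _i=$((_i + 1))
    sleep 2
  done
}

# Example: block until the metastore and HiveServer2 are listening.
# wait_for_port 127.0.0.1 9083 && wait_for_port 127.0.0.1 10000
```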

Verify Apache Hive

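Create a table in the Hive shell.

```sql
create table test (f1 INT, f2 STRING);
```

Write some data into the table.

```sql
insert into test values (1,'2222');
```

Check whether the data has been written to LindormDFS. Because the table was created in the default database, its data directory sits directly under the warehouse directory:

```shell
${HADOOP_HOME}/bin/hadoop fs -ls /user/hive/warehouse/test
```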
