This topic describes some of the MRTC APIs that you may use with MPIDRSSDK and how to use them.

MRTC API

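The following lists only some of the MRTC APIs that may be used during dual recording. For more information about MRTC, see the iOS MRTC usage documentation.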

Using MRTC with MPIDRSSDK

Initialize an MRTC instance

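Use MPIDRSSDK to initialize the MRTC instance. After you obtain the instance, you can configure the audio/video call logic. The following is the configuration used in the Demo.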

[MPIDRSSDK initRTCWithUserId:self.uid appId:AppId success:^(id _Nonnull responseObject) {
    self.artvcEgnine = responseObject;
    // Delegate that receives audio/video call status callbacks
    self.artvcEgnine.delegate = self;
    // Video encoding resolution. The default is ARTVCVideoProfileType_640x360_15Fps.
    self.artvcEgnine.videoProfileType = ARTVCVideoProfileType_1280x720_30Fps;
    // Publishing configuration for the call
    ARTVCPublishConfig *publishConfig = [[ARTVCPublishConfig alloc] init];
    publishConfig.videoProfile = self.artvcEgnine.videoProfileType;
    publishConfig.audioEnable = YES;
    publishConfig.videoEnable = YES;
    self.artvcEgnine.autoPublishConfig = publishConfig;
    // Publish automatically
    self.artvcEgnine.autoPublish = YES;
    // Subscription configuration for the call
    ARTVCSubscribeOptions *subscribeOptions = [[ARTVCSubscribeOptions alloc] init];
    subscribeOptions.receiveAudio = YES;
    subscribeOptions.receiveVideo = YES;
    self.artvcEgnine.autoSubscribeOptions = subscribeOptions;
    // Subscribe to (pull) remote streams automatically
    self.artvcEgnine.autoSubscribe = YES;
    // Set to YES to receive callbacks with local audio data
    self.artvcEgnine.enableAudioBufferOutput = YES;
    // Set to YES to receive callbacks with local video data
    self.artvcEgnine.enableCameraRawSampleOutput = YES;
    // Audio playback mode
    self.artvcEgnine.expectedAudioPlayMode = ARTVCAudioPlayModeSpeaker;
    // When bandwidth is insufficient, choose whether to keep the resolution (the frame rate drops)
    // or to keep playback smooth (the resolution drops):
    // ARTVCDegradationPreferenceMAINTAIN_FRAMERATE   smoothness first
    // ARTVCDegradationPreferenceMAINTAIN_RESOLUTION  resolution first
    // ARTVCDegradationPreferenceBALANCED             automatic balance
    self.artvcEgnine.degradationPreference = ARTVCDegradationPreferenceMAINTAIN_FRAMERATE;
    // Start the camera preview. The front camera is used by default; pass YES to use the rear camera.
    [self.artvcEgnine startCameraPreviewUsingBackCamera:NO];
    // If no room exists yet, create one
    [IDRSSDK createRoom];
    // Or join an existing room
    // [IDRSSDK joinRoom:self.roomId token:self.rtoken];
} failure:^(NSError * _Nonnull error) {
    // Initialization failed: handle or log the error here
    NSLog(@"initRTC failed: %@", error);
}];

MRTC delegate callbacks

Only some commonly used callback APIs are listed below. For more APIs, see ARTVCEngineDelegate.

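After the camera preview starts, if the local feed has not been reported yet, this callback returns an ARTVCFeed object. You can use it to associate the render views that are returned later.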

- (void)didReceiveLocalFeed:(ARTVCFeed *)localFeed {
    switch (localFeed.feedType) {
        case ARTVCFeedTypeLocalFeedDefault:
            // Camera feed
            self.localFeed = localFeed;
            break;
        case ARTVCFeedTypeLocalFeedCustomVideo:
            // Custom capture feed
            self.customLocalFeed = localFeed;
            break;
        case ARTVCFeedTypeLocalFeedScreenCapture:
            // Screen-sharing feed
            self.screenLocalFeed = localFeed;
            break;
        default:
            break;
    }
}

Render view callback for local and remote feeds.

Important: When this callback fires, the renderView has not necessarily rendered its first frame.

- (void)didVideoRenderViewInitialized:(UIView *)renderView forFeed:(ARTVCFeed *)feed {
    // You can trigger a UI layout here and add renderView to the view hierarchy
    [self.viewLock lock];
    [self.contentView addSubview:renderView];
    [self.viewLock unlock];
}

Callback when the first frame of a local or remote feed has been rendered.

// The first video frame has been rendered
- (void)didFirstVideoFrameRendered:(UIView *)renderView forFeed:(ARTVCFeed *)feed {
}

Callback when a feed stops rendering.

- (void)didVideoViewRenderStopped:(UIView *)renderView forFeed:(ARTVCFeed *)feed {
}

When a room is created successfully, this callback returns the room information.

- (void)didReceiveRoomInfo:(ARTVCRoomInfomation *)roomInfo {
    // Obtain the room ID and token:
    // roomInfo.roomId
    // roomInfo.rtoken
}

When room creation fails, an error is returned in this callback.

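- (void)didEncounterError:(NSError *)error forFeed:(ARTVCFeed *)feed {
    // error.code == ARTVCErrorCodeProtocolErrorCreateRoomFailed
}

After joining a room successfully, you receive a join-success callback and a callback that lists the members already in the room.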

- (void)didJoinroomSuccess {
}

- (void)didParticepantsEntered:(NSArray<ARTVCParticipantInfo *> *)participants {
}

Callback for errors that occur during the call, such as failing to join the room or failing to publish a stream.

- (void)didEncounterError:(NSError *)error forFeed:(ARTVCFeed *)feed {
    // error.code == ARTVCErrorCodeProtocolErrorJoinRoomFailed
}

When a member leaves the room, the other members in the room receive a member-left callback.

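- (void)didParticepant:(ARTVCParticipantInfo *)participant leaveRoomWithReason:(ARTVCParticipantLeaveRoomReasonType)reason {
}

After a room is created or joined, publishing and subscribing begin. The following status callback reports connection changes during publishing and subscribing.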

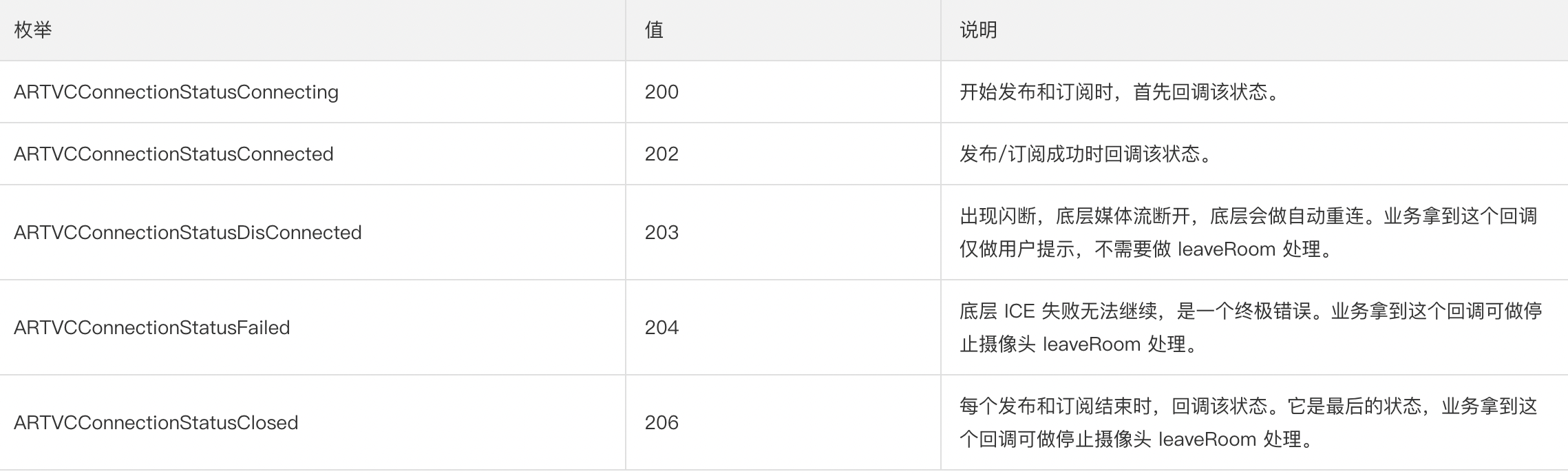

- (void)didConnectionStatusChangedTo:(ARTVCConnectionStatus)status forFeed:(ARTVCFeed *)feed {
    [self showToastWith:[NSString stringWithFormat:@"connection status:%ld\nfeed:%@", (long)status, feed] duration:1.0];
    if ((status == ARTVCConnectionStatusClosed) && [feed.uid isEqualToString:[self uid]]) {
        // The local connection closed: stop the camera preview and leave the room
        [self.artvcEgnine stopCameraPreview];
        [self.artvcEgnine leaveRoom];
    }
}

After a stream is published successfully, the other members in the room receive a new-feed callback.

- (void)didNewFeedAdded:(ARTVCFeed *)feed {
}

After a feed is unpublished, the other members in the room receive a feed-removed callback.

- (void)didFeedRemoved:(ARTVCFeed *)feed {
}

Audio data callback for the local stream.

- (void)didOutputAudioBuffer:(ARTVCAudioData *)audioData {
    AudioBuffer buffer = audioData.audioBufferList->mBuffers[0];
    if (buffer.mData != NULL && buffer.mDataByteSize > 0) {
        // Wrap the captured PCM and resample it to 16 kHz
        pcm_frame_t pcmModelInput;
        pcmModelInput.len = buffer.mDataByteSize;
        pcmModelInput.buf = (uint8_t *)buffer.mData;
        pcmModelInput.sample_rate = audioData.sampleRate;
        pcm_frame_t pcmModelOutput;
        pcm_resample_16k(&pcmModelInput, &pcmModelOutput);
        NSData *srcData = [NSData dataWithBytes:pcmModelOutput.buf length:pcmModelOutput.len];
        // Feed the audio data to IDRS for detection
        [self.idrs feedAudioFrame:srcData];
    }
}

Audio data callback for the remote stream.

You can use this callback to detect remote speech. The following sample detects a remote wake word.

- (void)didOutputRemoteMixedAudioBuffer:(ARTVCAudioData *)audioData {
    AudioBuffer buffer = audioData.audioBufferList->mBuffers[0];
    if (buffer.mData != NULL && buffer.mDataByteSize > 0) {
        // Wrap the remote mixed PCM and resample it to 16 kHz
        pcm_frame_t pcmModelInput;
        pcmModelInput.len = buffer.mDataByteSize;
        pcmModelInput.buf = (uint8_t *)buffer.mData;
        pcmModelInput.sample_rate = audioData.sampleRate;
        pcm_frame_t pcmModelOutput;
        pcm_resample_16k(&pcmModelInput, &pcmModelOutput);
        NSData *srcData = [NSData dataWithBytes:pcmModelOutput.buf length:pcmModelOutput.len];
        // Feed the audio data to IDRS for detection
        [self.idrs feedAudioFrame:srcData];
    }
}

Data callback for the local camera stream.

You can use this callback to detect faces, gestures, signature types, ID cards, and so on. The following sample tracks faces.
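The camera callback below runs face tracking inside `dispatch_sync`, so the capture thread waits for each detection to finish. If detection is slower than the frame rate, one common option is to drop frames while the previous frame is still being processed. The following C sketch shows such a gate using C11 atomics. `frame_gate_t` and its functions are hypothetical helpers and are not part of MPIDRSSDK or MRTC.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical gate: allows at most one frame in flight at a time. */
typedef struct {
    atomic_flag busy;
} frame_gate_t;

#define FRAME_GATE_INIT { ATOMIC_FLAG_INIT }

/* Returns true if the caller may process this frame, false if it should drop it. */
static bool frame_gate_try_enter(frame_gate_t *gate) {
    return !atomic_flag_test_and_set_explicit(&gate->busy, memory_order_acquire);
}

/* Call once processing of the admitted frame has finished. */
static void frame_gate_leave(frame_gate_t *gate) {
    atomic_flag_clear_explicit(&gate->busy, memory_order_release);
}
```

To use a gate like this, the callback would call `frame_gate_try_enter` before dispatching work asynchronously, and call `frame_gate_leave` in the detection callback. With asynchronous dispatch, the sample buffer must also stay alive until the work finishes, for example by using `CFRetain` and `CFRelease`. Whether dropping frames is acceptable depends on your detection requirements.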

- (void)didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer {
    // Create the serial detection queue once (a file-scope variable cannot be initialized with a function call)
    static dispatch_queue_t testqueue;
    static dispatch_once_t onceToken;
    dispatch_once(&onceToken, ^{
        testqueue = dispatch_queue_create("testQueue", DISPATCH_QUEUE_SERIAL);
    });
    dispatch_sync(testqueue, ^{
        @autoreleasepool {
            CVPixelBufferRef newBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
            // Optional: convert the frame to a UIImage if you need it elsewhere
            // UIImage *image = [self.idrs getImageFromRPVideo:newBuffer];
            IDRSFaceDetectParam *detectParam = [[IDRSFaceDetectParam alloc] init];
            detectParam.dataType = IDRSFaceDetectInputTypePixelBuffer;
            detectParam.buffer = newBuffer;
            detectParam.inputAngle = 0;
            detectParam.outputAngle = 0;
            detectParam.faceNetType = 0;
            detectParam.supportFaceRecognition = NO;
            detectParam.supportFaceLiveness = NO;
            // Face tracking
            [self.idrs faceTrackFromVideo:detectParam faceDetectionCallback:^(NSError *error, NSArray<FaceDetectionOutput *> *faces) {
                // Update the UI on the main thread
                dispatch_async(dispatch_get_main_queue(), ^{
                    self.drawView.faceDetectView.detectResult = faces;
                });
            }];
        }
    });
}

When you push a custom audio stream, MRTC calls the following method automatically. Send the audio data in this method.

- (void)didCustomAudioDataNeeded {
    // Send the next chunk of custom audio data here, for example:
    // [self.artvcEgnine sendCustomAudioData:data];
}
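The local and remote audio callbacks above pass captured PCM through `pcm_resample_16k` and a `pcm_frame_t` struct. This document defines neither of them. The C sketch below shows what a helper of that kind might do, under these assumptions:

* The audio is 16-bit signed mono PCM.
* Lengths are counted in samples rather than bytes.
* The caller allocates the output buffer.

The names `pcm16_frame_t`, `resampled_count_16k` and `resample_to_16k` are hypothetical, and linear interpolation is a simplification.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical frame type: 16-bit signed mono PCM. */
typedef struct {
    int16_t *samples;  /* sample data */
    size_t count;      /* number of samples (not bytes) */
    int sample_rate;   /* Hz */
} pcm16_frame_t;

/* Output samples produced when `in_count` samples at `in_rate` Hz are resampled to 16 kHz. */
static size_t resampled_count_16k(size_t in_count, int in_rate) {
    if (in_rate <= 0) return 0;
    return (size_t)((uint64_t)in_count * 16000u / (uint64_t)in_rate);
}

/* Linear-interpolation resampler to 16 kHz.
 * out->samples must have room for resampled_count_16k(in->count, in->sample_rate) samples.
 * Returns the number of samples written. */
static size_t resample_to_16k(const pcm16_frame_t *in, pcm16_frame_t *out) {
    size_t n = resampled_count_16k(in->count, in->sample_rate);
    out->sample_rate = 16000;
    out->count = n;
    if (in->sample_rate == 16000) {
        memcpy(out->samples, in->samples, n * sizeof(int16_t));
        return n;
    }
    for (size_t i = 0; i < n; i++) {
        /* Position of output sample i on the input time axis. */
        double pos = (double)i * in->sample_rate / 16000.0;
        size_t j = (size_t)pos;
        double frac = pos - (double)j;
        int16_t a = in->samples[j];
        int16_t b = (j + 1 < in->count) ? in->samples[j + 1] : a;
        out->samples[i] = (int16_t)(a + (b - a) * frac);
    }
    return n;
}
```

Linear interpolation without a low-pass filter can alias when downsampling, for example from 48 kHz, so a production resampler would normally filter first. In the callbacks above, the sample count for 16-bit audio is `mDataByteSize / 2`.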

Screen sharing

The following example uses MRTC's built-in in-app screen sharing. For cross-app screen sharing, see How to implement cross-app screen sharing on iOS.

Start screen sharing

__weak typeof(self) weakSelf = self;
ARTVCCreateScreenCaputurerParams *screenParams = [[ARTVCCreateScreenCaputurerParams alloc] init];
screenParams.provideRenderView = YES;
[self.artvcEgnine startScreenCaptureWithParams:screenParams complete:^(NSError *error) {
    if (error) {
        [weakSelf showToastWith:[NSString stringWithFormat:@"Error:%@", error] duration:1.0];
    } else {
        // Publish the captured screen as a video-only stream
        ARTVCPublishConfig *config = [[ARTVCPublishConfig alloc] init];
        config.videoSource = ARTVCVideoSourceType_Screen;
        config.audioEnable = NO;
        config.videoProfile = ARTVCVideoProfileType_ScreenRatio_1280_15Fps;
        config.tag = @"MPIDRS_ShareScreen";
        [weakSelf.artvcEgnine publish:config];
    }
}];

Stop screen sharing

- (void)stopScreenSharing {
    [self.artvcEgnine stopScreenCapture];
    // Unpublish the screen-sharing feed saved in didReceiveLocalFeed:
    ARTVCUnpublishConfig *config = [[ARTVCUnpublishConfig alloc] init];
    config.feed = self.screenLocalFeed;
    [self.artvcEgnine unpublish:config complete:^{
    }];
}

Push a TTS audio stream

Custom stream publishing (for audio data only).
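The capture parameters below configure a 16 kHz custom audio source with 160 samples per channel per frame. Assume 16-bit mono PCM, which is consistent with the 320-byte slices sent in `didCustomAudioDataNeeded`. Under that assumption, one frame lasts 10 ms and occupies 320 bytes. The helper names below are illustrative only and make the arithmetic explicit.

```c
#include <stddef.h>

/* Bytes in one PCM frame: samples per channel x channels x bytes per sample. */
static size_t pcm_frame_bytes(size_t samples_per_channel, int channels, int bytes_per_sample) {
    return samples_per_channel * (size_t)channels * (size_t)bytes_per_sample;
}

/* Duration of one frame in milliseconds. */
static double pcm_frame_ms(size_t samples_per_channel, int sample_rate) {
    return 1000.0 * (double)samples_per_channel / (double)sample_rate;
}
```

For example, 3 seconds of TTS audio at 16 kHz is 16000 × 3 × 2 = 96,000 bytes, or 300 frames of 320 bytes.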

// Publish an MRTC custom stream that carries the TTS audio
- (void)startCustomAudioCapture {
    ARTVCCreateCustomVideoCaputurerParams *params = [[ARTVCCreateCustomVideoCaputurerParams alloc] init];
    params.audioSourceType = ARTVCAudioSourceType_Custom;
    // 16 kHz audio delivered in frames of 160 samples per channel (10 ms)
    params.customAudioFrameFormat.sampleRate = 16000;
    params.customAudioFrameFormat.samplesPerChannel = 160;
    ARTVCPublishConfig *audioConfig = [[ARTVCPublishConfig alloc] init];
    audioConfig.videoSource = ARTVCVideoSourceType_Custom;
    audioConfig.videoEnable = YES;
    audioConfig.audioSource = ARTVCAudioSourceType_Custom;
    audioConfig.tag = @"customAudioFeed";
    self.audioConfig = audioConfig;
    self.customAudioCapturer = [_artvcEgnine createCustomVideoCapturer:params];
    // Turn off auto-publish and publish this custom stream manually
    _artvcEgnine.autoPublish = NO;
    [_artvcEgnine publish:self.audioConfig];
}

We recommend starting TTS synthesis and playback after the custom stream has been published successfully.

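- (void)didConnectionStatusChangedTo:(ARTVCConnectionStatus)status forFeed:(ARTVCFeed *)feed {
    // Start TTS once the custom audio feed is connected (published)
    if (status == ARTVCConnectionStatusConnected && [feed isEqual:self.customLocalFeed]) {
        // Sample Chinese text: "Mr. Sheng, hello. Within 180 days from the date this supplementary
        // contract takes effect (or is last reinstated), the insured ..."
        NSString *string = @"盛先生您好,被保险人于本附加合同生效(或最后复效)之日起一百八十日内";
        self.customAudioData = [[NSMutableData alloc] init];
        self.customAudioDataIndex = 0;
        self.ttsPlaying = YES;
        [self.idrs setTTSParam:@"extend_font_name" value:@"xiaoyun"];
        [self.idrs setTTSParam:@"speed_level" value:@"1"];
        [self.idrs startTTSWithText:string];
        // Read back the current speed level (optional)
        [self.idrs getTTSParam:@"speed_level"];
    }
}

Obtain the synthesized audio data in the TTS delegate callback.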

- (void)onNuiTtsUserdataCallback:(NSString *)info infoLen:(int)info_len buffer:(char *)buffer len:(int)len taskId:(NSString *)task_id {
    NSLog(@"remote :: onNuiTtsUserdataCallback:%@ -- %d", info, info_len);
    if (buffer && len > 0) {
        // Append the synthesized PCM; it is sent to MRTC in didCustomAudioDataNeeded
        NSData *audioData = [NSData dataWithBytes:buffer length:len];
        [self.customAudioData appendData:audioData];
    }
}

Send the audio data in the MRTC delegate callback.

- (void)didCustomAudioDataNeeded {
    // 320 bytes = 160 samples x 2 bytes, one 10 ms frame of 16 kHz 16-bit PCM
    if (!self.ttsPlaying || (self.customAudioDataIndex + 320 > (int)[self.customAudioData length])) {
        [self stopPublishCustomAudio];
        return;
    }
    NSRange range = NSMakeRange(self.customAudioDataIndex, 320);
    NSData *data = [self.customAudioData subdataWithRange:range];
    // Send the audio data to MRTC
    [self.artvcEgnine sendCustomAudioData:data];
    self.customAudioDataIndex += 320;
}
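In this flow, the TTS callback appends synthesized PCM to `customAudioData`, and `didCustomAudioDataNeeded` reads it 320 bytes at a time. The sample stops publishing as soon as fewer than 320 unread bytes are buffered. That can also happen when synthesis simply has not produced enough data yet. The C sketch below separates "no frame yet" from "finished". It also guards the shared buffer with a mutex, on the assumption that the two callbacks may run on different threads; the document does not specify this. All names are hypothetical.

```c
#include <pthread.h>
#include <stdbool.h>
#include <stdlib.h>
#include <string.h>

#define FRAME_BYTES 320 /* 160 samples x 2 bytes: 10 ms of 16 kHz 16-bit mono PCM */

typedef enum { FRAME_READY, FRAME_PENDING, FRAME_DONE } frame_status_t;

typedef struct {
    pthread_mutex_t lock;
    unsigned char *data;
    size_t len;      /* bytes appended so far */
    size_t cap;      /* bytes allocated */
    size_t read_pos; /* bytes already handed out */
    bool finished;   /* set when TTS synthesis has ended */
} tts_buffer_t;

static void tts_buffer_init(tts_buffer_t *b) {
    pthread_mutex_init(&b->lock, NULL);
    b->data = NULL;
    b->len = b->cap = b->read_pos = 0;
    b->finished = false;
}

/* Producer side (TTS callback): append a chunk. Returns false if allocation fails. */
static bool tts_buffer_append(tts_buffer_t *b, const void *chunk, size_t n) {
    if (n == 0) return true;
    bool ok = true;
    pthread_mutex_lock(&b->lock);
    if (b->len + n > b->cap) {
        size_t new_cap = b->cap ? b->cap : 4096;
        while (new_cap < b->len + n) new_cap *= 2;
        unsigned char *p = realloc(b->data, new_cap);
        if (p) { b->data = p; b->cap = new_cap; } else { ok = false; }
    }
    if (ok) { memcpy(b->data + b->len, chunk, n); b->len += n; }
    pthread_mutex_unlock(&b->lock);
    return ok;
}

/* Mark synthesis as finished so that an empty buffer means "done". */
static void tts_buffer_finish(tts_buffer_t *b) {
    pthread_mutex_lock(&b->lock);
    b->finished = true;
    pthread_mutex_unlock(&b->lock);
}

/* Consumer side (MRTC callback): copy the next full frame into `out`.
 * A trailing partial frame is never returned, as in the Objective-C sample. */
static frame_status_t tts_buffer_next_frame(tts_buffer_t *b, unsigned char out[FRAME_BYTES]) {
    frame_status_t status;
    pthread_mutex_lock(&b->lock);
    if (b->read_pos + FRAME_BYTES <= b->len) {
        memcpy(out, b->data + b->read_pos, FRAME_BYTES);
        b->read_pos += FRAME_BYTES;
        status = FRAME_READY;
    } else {
        status = b->finished ? FRAME_DONE : FRAME_PENDING;
    }
    pthread_mutex_unlock(&b->lock);
    return status;
}

static void tts_buffer_destroy(tts_buffer_t *b) {
    pthread_mutex_destroy(&b->lock);
    free(b->data);
}
```

`tts_buffer_finish` would be called from whichever TTS event signals the end of synthesis. Only `FRAME_DONE` would then lead to stopping the custom stream, while `FRAME_PENDING` would simply skip the current request.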

Enable server-side recording

Recording features take effect only after MPIDRSSDK and the MRTC instance have been initialized successfully.

Start remote recording.

Each time you start a server-side recording task, the recording callback returns a record ID. Use this ID to stop or change that recording task.
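Every recording task has its own record ID. An app that starts several recordings must therefore keep each ID in order to stop or change the right task. The recording callback sample in this section stores only the most recent ID in `self.recordId`. A minimal C sketch of a fixed-capacity registry follows; the type, the limits and the function names are hypothetical.

```c
#include <stdbool.h>
#include <string.h>

#define MAX_RECORDINGS 8
#define RECORD_ID_LEN 64

typedef struct {
    char ids[MAX_RECORDINGS][RECORD_ID_LEN];
    int count;
} record_registry_t;

/* Store the ID from a successful start response. Returns false if full or the ID is too long. */
static bool registry_add(record_registry_t *r, const char *id) {
    if (r->count >= MAX_RECORDINGS || strlen(id) >= RECORD_ID_LEN) return false;
    strcpy(r->ids[r->count++], id);
    return true;
}

/* Index of `id`, or -1 if it is not registered. */
static int registry_find(const record_registry_t *r, const char *id) {
    for (int i = 0; i < r->count; i++)
        if (strcmp(r->ids[i], id) == 0) return i;
    return -1;
}

/* Remove an ID after its recording has been stopped. */
static bool registry_remove(record_registry_t *r, const char *id) {
    int i = registry_find(r, id);
    if (i < 0) return false;
    r->count--;
    /* Move the last entry into the freed slot (order is not preserved). */
    if (i != r->count) memcpy(r->ids[i], r->ids[r->count], RECORD_ID_LEN);
    return true;
}
```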

MPRemoteRecordInfo *recordInfo = [[MPRemoteRecordInfo alloc] init];
recordInfo.roomId = self.roomId;
// Watermark ID configured in the console
// recordInfo.waterMarkId = self.watermarkId;
recordInfo.tagFilter = tagPrefix;
recordInfo.userTag = self.uid;
recordInfo.recordType = MPRemoteRecordTypeBegin;
// Choose single-stream or mixed-stream recording according to your business needs
recordInfo.fileSuffix = MPRemoteRecordFileSingle;
// If the recording requires a stream layout, customize it through MPRemoteRecordInfo
// recordInfo.tagPositions = tagModelArray;
// If the recording requires a custom client-side watermark, customize it through MPRemoteRecordInfo
// recordInfo.overlaps = customOverlaps;
[MPIDRSSDK executeRemoteRecord:recordInfo waterMarkHandler:^(NSError * _Nonnull error) {
}];

Change the recording configuration.

For a recording task that has already started, you can change the watermark and the stream layout.

MPRemoteRecordInfo *recordInfo = [[MPRemoteRecordInfo alloc] init];
recordInfo.roomId = self.roomId;
recordInfo.recordType = MPRemoteRecordTypeChange;
// 1. ID of the recording task to change
recordInfo.recordId = recordId;
// 2. Stream layout
recordInfo.tagPositions = tagModelArray;
// 3. Watermark
recordInfo.overlaps = customOverlaps;
[MPIDRSSDK executeRemoteRecord:recordInfo waterMarkHandler:^(NSError * _Nonnull error) {
}];

MRTC recording callback.

- (void)didReceiveCustomSignalingResponse:(NSDictionary *)dictionary {
    id opcmdObject = [dictionary objectForKey:@"opcmd"];
    if ([opcmdObject isKindOfClass:[NSNumber class]]) {
        int opcmd = [opcmdObject intValue];
        switch (opcmd) {
            case MPRemoteRecordTypeBeginResponse: {
                self.startTime = [NSDate date];
                // Record ID returned by the callback
                self.recordId = [dictionary objectForKey:@"recordId"];
                if ([[dictionary objectForKey:@"msg"] isEqualToString:@"SUCCESS"]) {
                    NSLog(@"Recording started");
                } else {
                    NSLog(@"Failed to start recording");
                }
                break;
            }
            case MPRemoteRecordTypeChangeResponse: {
                if ([[dictionary objectForKey:@"msg"] isEqualToString:@"SUCCESS"]) {
                    NSLog(@"Recording configuration changed");
                } else {
                    NSLog(@"Failed to change recording configuration");
                }
                break;
            }
            case MPRemoteRecordTypeStopResponse: {
                if ([[dictionary objectForKey:@"msg"] isEqualToString:@"SUCCESS"]) {
                    NSLog(@"Recording stopped");
                } else {
                    NSLog(@"Failed to stop recording");
                }
                break;
            }
            default:
                break;
        }
    }
}

Stop a specified server-side recording task.

// Pass the record ID returned in the recording callback
[MPIDRSSDK stopRemoteRecord:self.recordId];

Upload the recording files.

Note: If you start recording multiple times, upload the files once for each recording.

// Recording duration in seconds, measured from the start-recording callback
self.duration = [[NSDate date] timeIntervalSince1970] - [self.startTime timeIntervalSince1970];
IDRSUploadManagerParam *param = [[IDRSUploadManagerParam alloc] init];
param.duration = self.duration;
param.appId = AppId;
param.ak = Ak;
param.sk = Sk;
param.type = IDRSRecordRemote;
param.recordAt = self.startTime;
param.roomId = self.roomId;
[IDRSUploadManager uploadFileWithParam:param success:^(id _Nonnull responseObject) {
    [self showToastWith:responseObject duration:3];
} failure:^(NSError * _Nonnull error, IDRSUploadManagerParam * _Nonnull upLoadParam) {
    if (upLoadParam) {
        [self showToastWith:@"upload error" duration:3];
    }
}];
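Each recording is uploaded separately. It can therefore help to keep the start and stop time of every recording and derive one set of upload parameters per recording. The C sketch below mirrors the `duration` and `recordAt` values set on `IDRSUploadManagerParam` above. The struct and function names are hypothetical. This sketch measures each recording up to its stop time, whereas the sample measures up to the moment of upload.

```c
#include <stddef.h>
#include <time.h>

/* Hypothetical record of one server-side recording task. */
typedef struct {
    const char *record_id;
    time_t started_at; /* when the start-recording response arrived */
    time_t stopped_at; /* when the task was stopped */
} recording_session_t;

/* Hypothetical mirror of the upload fields used in the sample. */
typedef struct {
    const char *room_id;
    time_t record_at; /* corresponds to param.recordAt */
    double duration;  /* corresponds to param.duration, in seconds */
} upload_param_t;

/* Builds one upload parameter set per recording session; returns the number written. */
static size_t build_upload_params(const recording_session_t *sessions, size_t n,
                                  const char *room_id, upload_param_t *out) {
    for (size_t i = 0; i < n; i++) {
        out[i].room_id = room_id;
        out[i].record_at = sessions[i].started_at;
        out[i].duration = difftime(sessions[i].stopped_at, sessions[i].started_at);
    }
    return n;
}
```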