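AIGC (AI-generated content) is a way of producing content automatically with artificial intelligence. Text-to-image generation is a popular cross-modal generation task that aims to generate an image matching a given text. This tutorial shows how to fine-tune an AIGC Stable Diffusion text-to-image LoRA model in Alibaba Cloud DSW, and then start the WebUI to run inference with the fine-tuned model for virtual try-on.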

Background information

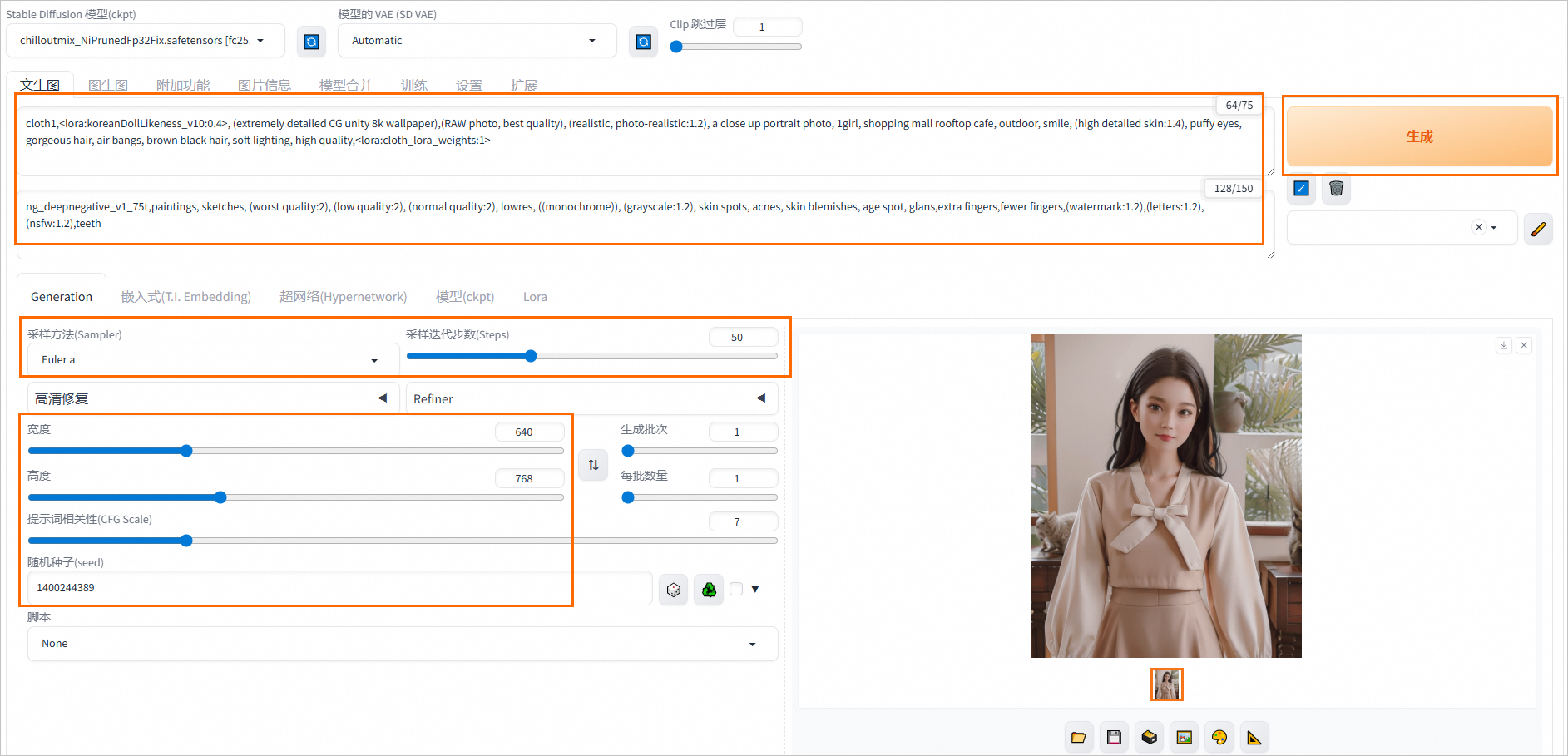

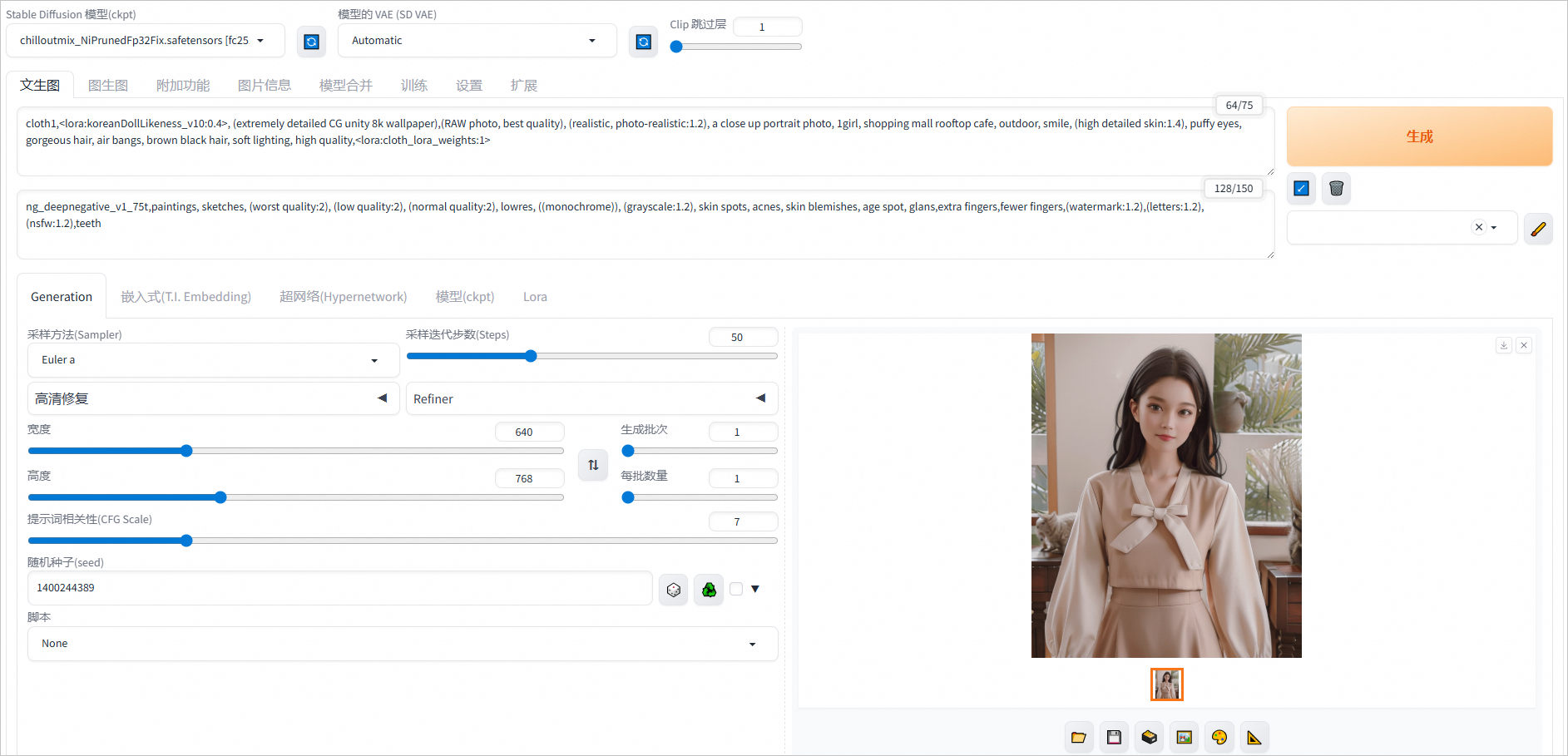

The figures show the text-to-image inference result in the WebUI.

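Prerequisites

A workspace is created. For more information, see Create and manage workspaces.

A DSW instance is created with the following key parameters. For more information, see Create a DSW instance.

Resource type: Public Resources

Instance type: ecs.gn6v-c8g1.2xlarge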

Image:

Regions: China (Hangzhou), China (Beijing), China (Shanghai), China (Shenzhen)

Image address: On the Official Images tab, select stable-diffusion-webui-develop:1.0-pytorch2.0-gpu-py310-cu117-ubuntu22.04.
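The image tag packs the software stack into a single string, so it can help to split it apart before choosing an image. The sketch below does that in Python. The field names (`framework`, `device`, `python`, `cuda`, `os`) are an assumed reading of the tag based on common naming conventions, not something the tutorial states, and `parse_image` is a hypothetical helper.

```python
IMAGE = "stable-diffusion-webui-develop:1.0-pytorch2.0-gpu-py310-cu117-ubuntu22.04"

# Assumed meaning of each dash-separated tag component, in order.
TAG_FIELDS = ("version", "framework", "device", "python", "cuda", "os")


def parse_image(image: str) -> dict:
    """Split 'name:tag' and label the dash-separated tag components."""
    name, _, tag = image.partition(":")
    parts = tag.split("-")
    info = {"name": name}
    # zip stops at the shorter sequence, so unexpected extra parts are ignored.
    info.update(zip(TAG_FIELDS, parts))
    return info


info = parse_image(IMAGE)
for key, value in info.items():
    print(f"{key}: {value}")
```

Read this way, the tag suggests a PyTorch 2.0 GPU image with Python 3.10 and CUDA 11.7 on Ubuntu 22.04.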

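Step 1: Open the tutorial file in DSW

Go to the PAI-DSW development environment.

Log on to the PAI console.

In the upper-left corner of the page, select the region where the DSW instance resides.

In the left-side navigation pane, click Workspaces. On the Workspaces page, click the name of the default workspace to open it.

In the left-side navigation pane, choose Model Development and Training > Interactive Modeling (DSW).

Find the instance that you want to open and click Open in the Actions column to enter the PAI-DSW development environment.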

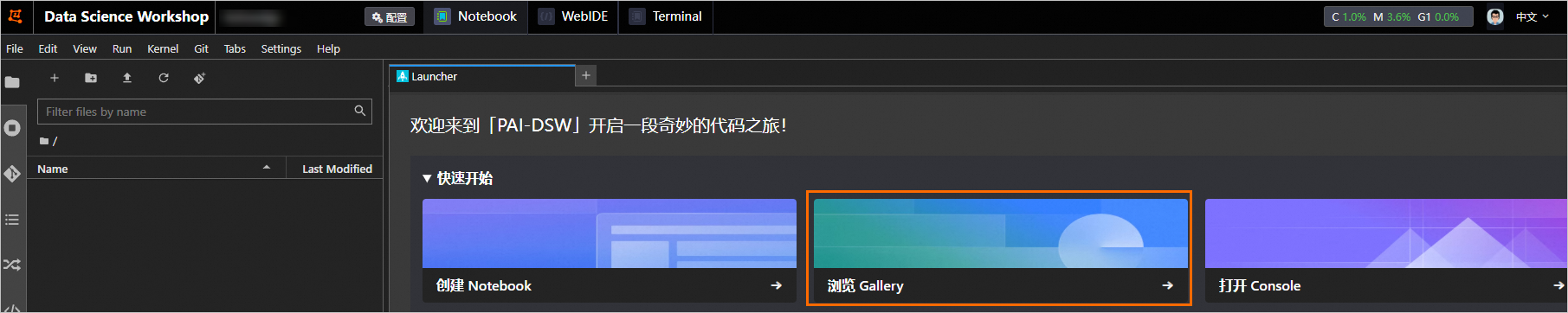

On the Launcher page of the Notebook tab, click Browse Gallery in the Quick Start section to open the DSW Gallery page.

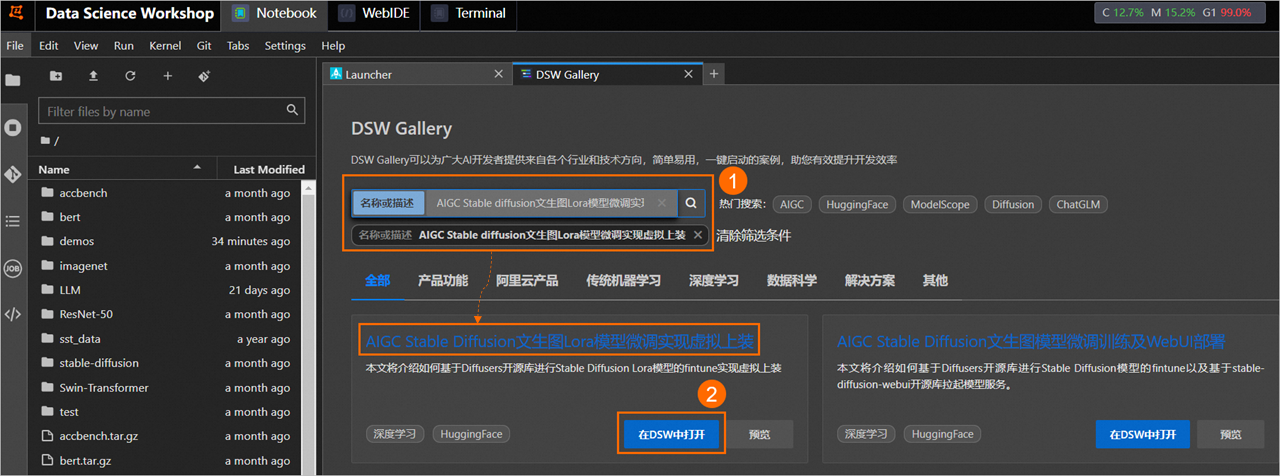

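On the DSW Gallery page, search for the tutorial on fine-tuning the AIGC Stable Diffusion text-to-image LoRA model for virtual try-on (AIGC Stable diffusion文生图Lora模型微调实现虚拟上装), and click Open in DSW on the tutorial card.

The resources and tutorial file required by this tutorial are then downloaded to the DSW instance automatically, and the tutorial file opens once the download is complete.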

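Step 2: Run the tutorial file

The opened tutorial file, stable_diffusion_try_on.ipynb, displays the tutorial text, and you can run the tutorial directly in the file. The tutorial has three run steps: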

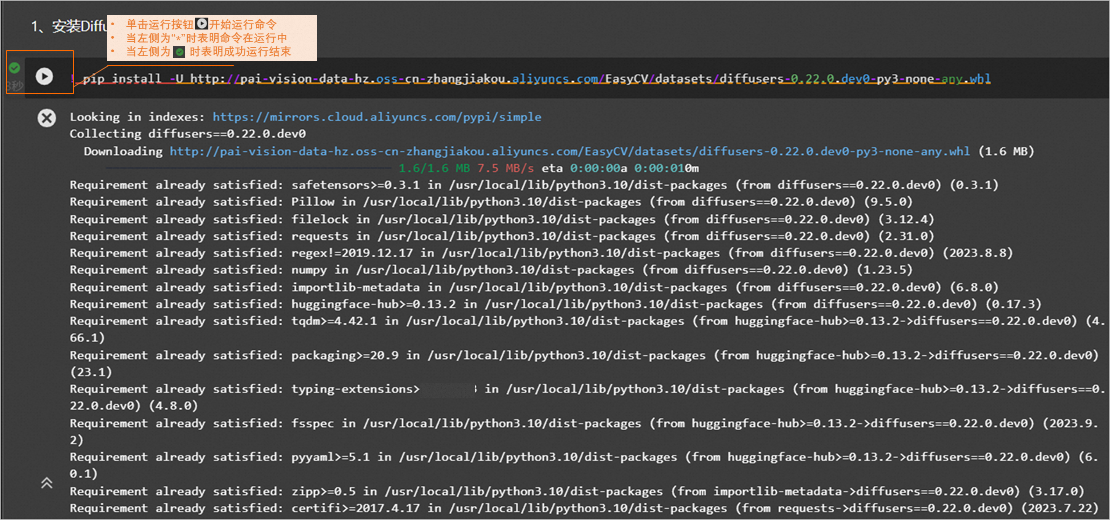

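Install Diffusers.

Fine-tune the Stable Diffusion + LoRA model.

Start the WebUI in DSW.

Run the command for each step directly in the tutorial file. Wait until the command for one step finishes successfully before you run the command for the next step.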
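Because each step depends on the previous one, a quick check between steps can save time. The following is a minimal sketch that could be run in a notebook cell after the installation step to confirm that the packages are importable. The helper name `is_installed` is hypothetical, and the module names `diffusers` and `torch` are assumptions about what the tutorial installs or what the image already provides.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module can be found on the current Python path."""
    return importlib.util.find_spec(module_name) is not None


# Assumed module names; adjust to whatever the installation step actually installs.
for name in ("diffusers", "torch"):
    status = "found" if is_installed(name) else "missing"
    print(f"{name}: {status}")
```

If a package is reported as missing, rerun the installation step before starting the fine-tuning step.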

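After the last step, which starts the WebUI, finishes running, click the URL (http://127.0.0.1:7860) in the returned run details to open the WebUI page. You can then run model inference on that page.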

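Note: http://127.0.0.1:7860 is an internal address. You can open the WebUI page only by clicking the link from inside the current DSW instance. Direct access from an external browser is not supported.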
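Since the address is only reachable from inside the instance, one way to confirm that the WebUI has finished starting is to probe it from a DSW terminal or notebook cell. The sketch below uses only the Python standard library. The function name `webui_is_up` and the 3-second timeout are illustrative choices, not part of the tutorial.

```python
import urllib.error
import urllib.request

WEBUI_URL = "http://127.0.0.1:7860"  # internal address printed by the tutorial


def webui_is_up(url: str = WEBUI_URL, timeout: float = 3.0) -> bool:
    """Return True if the server answers the request with a success status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status.
        return False


if __name__ == "__main__":
    print("WebUI reachable:", webui_is_up())
```

Run outside the DSW instance, the same check is expected to report that the WebUI is not reachable, because 127.0.0.1 always refers to the local machine.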

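Step 3: Verify the inference result

After you complete the preceding steps, the AIGC text-to-image model is fine-tuned and the WebUI is deployed. You can now verify model inference on the WebUI page.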

On the txt2img tab, configure the following parameters:

Prompt:

cloth1,<lora:koreanDollLikeness_v10:0.4>, (extremely detailed CG unity 8k wallpaper),(RAW photo, best quality), (realistic, photo-realistic:1.2), a close up portrait photo, 1girl, shopping mall rooftop cafe, outdoor, smile, (high detailed skin:1.4), puffy eyes, gorgeous hair, air bangs, brown black hair, soft lighting, high quality,<lora:cloth_lora_weights:1>

Negative prompt:

ng_deepnegative_v1_75t,paintings, sketches, (worst quality:2), (low quality:2), (normal quality:2), lowres, ((monochrome)), (grayscale:1.2), skin spots, acnes, skin blemishes, age spot, glans,extra fingers,fewer fingers,(watermark:1.2),(letters:1.2),(nsfw:1.2),teeth

Sampling method (Sampler): Euler a

Sampling steps (Steps): 50

Width and height: 640, 768

Seed: 1400244389

CFG Scale: 7
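The prompt above loads two LoRA models through `<lora:name:weight>` tags, where the weight scales how strongly each LoRA is applied. As a rough illustration of that syntax, the sketch below extracts the tags from a shortened copy of the prompt. The helper `find_lora_tags` is hypothetical, and reading `cloth_lora_weights` as the output of the fine-tuning step is an assumption.

```python
import re

# Shortened copy of the tutorial prompt; only the LoRA tags matter here.
PROMPT = (
    "cloth1,<lora:koreanDollLikeness_v10:0.4>, a close up portrait photo, "
    "1girl, high quality,<lora:cloth_lora_weights:1>"
)

# Matches <lora:NAME:WEIGHT> and captures the name and the numeric weight.
LORA_TAG = re.compile(r"<lora:([^:>]+):([0-9]*\.?[0-9]+)>")


def find_lora_tags(prompt: str) -> list:
    """Return (name, weight) pairs for every LoRA tag found in the prompt."""
    return [(name, float(weight)) for name, weight in LORA_TAG.findall(prompt)]


for name, weight in find_lora_tags(PROMPT):
    print(f"{name}: weight {weight}")
```

Lowering a weight (for example, from 1 to 0.8) is a common way to reduce the influence of one LoRA when its effect dominates the image.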

Click Generate. The inference result is output as shown in the figure.
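The same settings can also be written down as a request payload, which makes a run easier to reproduce. The sketch below assumes that the WebUI was started with its HTTP API enabled (the `--api` launch option) and exposes the `/sdapi/v1/txt2img` endpoint. The tutorial does not state this, so treat it as an assumption. The helpers `build_payload` and `txt2img` are hypothetical names.

```python
import json
import urllib.request


def build_payload(prompt: str, negative_prompt: str) -> dict:
    """Collect the tutorial's generation settings into one JSON-serializable dict."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "sampler_name": "Euler a",
        "steps": 50,
        "width": 640,
        "height": 768,
        "seed": 1400244389,
        "cfg_scale": 7,
    }


def txt2img(payload: dict, base_url: str = "http://127.0.0.1:7860") -> list:
    """POST the payload and return the list of base64-encoded images.

    Works only from inside the DSW instance and only if the API is enabled.
    """
    request = urllib.request.Request(
        base_url + "/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=600) as response:
        return json.load(response)["images"]


# Placeholder strings; paste the full Prompt and Negative prompt from above.
payload = build_payload("cloth1, ...", "ng_deepnegative_v1_75t, ...")
print(json.dumps(payload, indent=2))
```

Fixing the seed, as the tutorial does, keeps repeated runs with identical settings comparable.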